ASC X9

INFORMATIVE REPORT

Informative Reports developed through the Accredited Standards Committee X9, Inc. (“X9”) are copyrighted by X9. Informative Reports are available free of charge; however, all copyrights belong to and are retained by X9. For additional information, contact the Accredited Standards Committee X9, Inc., 275 West Street, Suite 107, Annapolis, Maryland 21401.

© ASC X9, Inc., 2022 – All Rights Reserved

Number: ASC X9 IR-F01-2022

Title: Quantum Computing Risks to the Financial Services Industry

Published: November 29, 2022

ASC X9 IR-F01-2022

©ASC X9 Inc. 2022 – All Rights Reserved


Table of Contents

INFORMATIVE REPORT
1 Executive Summary
2 Introduction
2.1 Background
2.2 Purpose
2.3 Scope
2.4 Future Editions and Participation
3 Normative References
4 Terms and Definitions
5 Symbols and Abbreviations
6 Overview of Quantum Computing Risks, Timelines, and Mitigations
6.1 Quantum Computing vs Classical Computing
6.2 Quantum Computing Risks to Cryptography
6.3 Other Quantum Computing Risks
6.4 Expected Timelines for Quantum Computers
6.5 Assessing and Mitigating Risks
7 Overview of Quantum Computing
7.1 Description of Classical Computing
7.2 Quantum Mechanical Properties
7.2.1 Superposition
7.2.2 Coherence
7.2.3 Entanglement
7.3 Qubits
7.3.1 Physical Qubits
7.3.2 Logical Qubits
7.4 Description of Quantum Computing
7.5 Quantum Algorithms
7.5.1 Quantum Gates
7.5.2 Quantum Circuits
7.6 Qubit Architectures
7.6.1 Superconducting
7.6.2 Ion Trap
7.6.3 Photonic Quantum Computing
7.6.4 Color Defects
7.7 Metrics for Qubit Quality
7.8 Quantum Scaling
7.8.1 Quantum Error Correction
7.8.2 Cooling and Temperature Requirements
7.8.3 Scaling of Components
7.9 Quantum Computing Devices
7.9.1 Quantum Annealers
7.9.2 Noisy Intermediate Scale Quantum Technologies
7.9.3 Fault-tolerant Quantum Computers
7.10 Expected Timelines for Quantum Computers
7.10.1 Mosca’s XYZ Theorem
8 Review of Current Cryptosystems
8.1 Symmetric Key Cryptosystems
8.1.1 The Data Encryption Standard
8.1.2 The Advanced Encryption Standard


8.1.3 The Unstructured Search Problem
8.2 Asymmetric Key Cryptosystems
8.2.1 The RSA Algorithms
8.2.2 The Integer Factorization Problem
8.2.3 Elliptic Curve Cryptography
8.2.4 The Discrete Logarithm Problem
8.3 Hash Functions
8.4 Cryptographic Protocols
8.4.1 Transport Layer Security
8.4.2 Secure Shell (SSH)
8.4.3 Internet Protocol Security (IPsec)
8.4.4 Virtual Private Network (VPN)
9 Post Quantum Cryptography
9.1 Post Quantum Mathematical Methods
9.1.1 Lattice-based Cryptography
9.1.2 Code-based Cryptography
9.1.3 Multivariate Quadratic Polynomial-based Cryptography
9.1.4 Supersingular Isogeny-based Cryptography
9.1.5 Symmetric-based Cryptography
9.1.5.1 Stateful Hash-based Signature Systems
9.1.5.2 Stateless Hash-based Signature Systems
9.1.5.3 Zero Knowledge Proof Signature Systems
9.2 Quantum Cryptography
9.2.1 Quantum Key Distribution
9.2.2 Quantum Random Number Generators
9.3 Hybrid Cryptography
9.4 Cryptographic Agility
10 Post Quantum Cryptography Standardization
10.1 The NIST PQC Standardization Process
10.2 Status of the NIST PQC Standardization Process
11 Quantum Computing Risks to Current Cryptosystems
11.1 Quantum Algorithms for Classically Hard Problems
11.1.1 Grover’s Algorithm
11.1.2 Shor’s Algorithm
11.2 Risks to Current Cryptosystems
11.2.1 Risks to Symmetric Key Cryptosystems
11.2.1.1 The Data Encryption Standard
11.2.1.2 The Advanced Encryption Standard
11.2.2 Risks to Asymmetric Key Cryptosystems
11.2.2.1 The RSA Algorithms
11.2.2.2 Elliptic Curve Cryptography
11.2.3 Risks to Hash Functions
11.2.4 Risks to Cryptographic Protocols
12 Quantum Threats
12.1 Online and Offline Attacks
12.1.1 Online Attacks
12.1.2 Offline Attacks
12.2 Future Threat Dimensions
12.3 Economic and Social Impacts of Quantum Computing
12.3.1 Potential Channels for the Creation of a Quantum Hegemony
12.3.1.1 Economic Inequalities
12.3.1.2 Financial Inequalities
12.3.1.3 Market Space Inequalities


12.3.2 Ethics in Quantum Computing
13 Suggestions for Mitigation
13.1 Understanding Probabilities of Threats
13.2 Understanding the Impact of Vulnerabilities
13.3 Understanding and Minimizing Risks
13.4 Forming a Migration Strategy and Roadmap
Annex A Bibliography
Annex B Quantum Computing Research Centers
Annex C Quantum Roadmaps and Research
Annex D Selected PQC Algorithm Characteristics


Foreword

This Informative Report has been approved and released by the Accredited Standards Committee X9, Incorporated, 275 West Street, Suite 107, Annapolis, MD 21401. This document is copyrighted by X9; it is not an American National Standard, and the material contained herein is not normative in nature. Comments on the content of this document should be sent to: Attn: Executive Director, Accredited Standards Committee X9, Inc., 275 West Street, Suite 107, Annapolis, MD 21401.

This Informative Report is a product of the Accredited Standards Committee X9, Financial Industry Standards. It was generated by the Quantum Computing Risk Study Group, which the X9 Board of Directors created in December 2017 to research the state of quantum computing and to produce a report summarizing the group's findings.

Suggestions for the improvement or revision of this report are welcome. They should be sent to the X9 Committee Secretariat, Accredited Standards Committee X9, Inc., Financial Industry Standards, 275 West Street, Suite 107, Annapolis, MD 21401 USA.

Published by

Accredited Standards Committee X9, Incorporated

Financial Industry Standards

275 West Street, Suite 107

Annapolis, MD 21401 USA

X9 Online http://www.x9.org

Copyright © 2022 ASC X9, Inc.

All rights reserved.

No part of this publication may be reproduced in any form, in an electronic retrieval system or otherwise, without prior written permission of the publisher. Published in the United States of America.


X9 Board of Directors:

At the time this Informative Report was published, the ASC X9 Board of Directors had the following member companies and primary company representatives, and X9 had the following management and staff:

Corby Dear, X9 Board of Directors Chair

Michelle Wright, X9 Board of Directors Vice Chair

Alan Thiemann, X9 Treasurer

Steve Stevens, X9 Executive Director

Janet Busch, Senior Program Manager

Ambria Frazier, Program Manager

Lindsay Conley, Administrative Support

Organization Represented on the X9 Board Representative

ACI Worldwide ..........................................................................................................Julie Samson

Amazon .....................................................................................................................Tyler Messa

American Bankers Association .................................................................................Tab Stewart

Arvest Bank ..............................................................................................................Yurik Paroubek

Bank of America .......................................................................................................Daniel Welch

Bank of New York Mellon .........................................................................................Kevin Barnes

BankVOD ..................................................................................................................Sean Dunlea

Bloomberg LP ...........................................................................................................Corby Dear

Capital One ...............................................................................................................Valerie Hodge

Citigroup, Inc. ............................................................................................................David Edelman

Communications Security Establishment .................................................................Jonathan Hammell

Conexxus, Inc. ..........................................................................................................Alan Thiemann

CUSIP Global Services.............................................................................................Gerard Faulkner

Deluxe Corporation ...................................................................................................Andy Vo

Diebold Nixdorf .........................................................................................................Bruce Chapa

Digicert ......................................................................................................................Dean Coclin

Discover Financial Services .....................................................................................Susan Pandy

Dover Fueling Solutions ...........................................................................................Simon Siew

Federal Reserve Bank ..............................................................................................Ainsley Hargest

FirstBank ...................................................................................................................Ryan Buerger

FIS ............................................................................................................................Stephen Gibson-Saxty

Fiserv ........................................................................................................................Lisa Curry

FIX Protocol Ltd - FPL ..............................................................................................James Northey

Futurex ......................................................................................................................Ryan Smith

Gilbarco ....................................................................................................................Bruce Welch

Harland Clarke/Vericast ............................................................................................Jonathan Lee

Hudl...........................................................................................................................Lisa McKee

Hyosung TNS Inc. .....................................................................................................Joe Militello

IBM Corporation ........................................................................................................Richard Kisley

Ingenico ....................................................................................................................Steven Bowles

ISITC .........................................................................................................................Lisa Iagatta

ITS, Inc. (SHAZAM Networks) ..................................................................................Manish Nathwani

J.P. Morgan Chase ...................................................................................................Ted Rothschild

MasterCard Europe Sprl ...........................................................................................Mark Kamers

NACHA The Electronic Payments Association ........................................................George Throckmorton

National Security Agency .........................................................................................Mike Boyle

NCR Corporation ......................................................................................................Charlie Harrow

Office of Financial Research, U.S. Treasury Department ........................................Thomas Brown Jr.

PCI Security Standards Council ...............................................................................Ralph Poore

PNC Bank .................................................................................................................David Bliss


SWIFT/Pan Americas ...............................................................................................Anne Suprenant

TECSEC Incorporated ..............................................................................................Ed Scheidt

Thales DIS CPL USA Inc ..........................................................................................James Torjussen

U.S. Bank ..................................................................................................................Michelle Wright

U.S. Commodity Futures Trading Commission (CFTC) ...........................................Robert Stowsky

University Bank .........................................................................................................Stephen Ranzini

USDA Food and Nutrition Service ............................................................................Lisa Gifaldi

Valley Bank ...............................................................................................................Michael Duffner

VeriFone, Inc. ...........................................................................................................Joachim Vance

Viewpointe ................................................................................................................Richard Luchak

VISA ..........................................................................................................................Kristina Breen

Wells Fargo Bank .....................................................................................................Sotos Barkas

Wolters Kluwer ..........................................................................................................Colleen Knuff

Zions Bank ................................................................................................................Kay Hall

X9F Quantum Computing Risk Study Group:

At the time this Informative Report was published, the X9F Quantum Computing Risk Study Group had the following officers and members:

Steve Stevens, Study Group Co-Chair

Tim Hollebeek, Study Group Co-Chair

Philip Lafrance, Study Group Co-Chair and Editor of the Informative Report

Organization Represented Representative

Accredited Standards Committee X9, Inc. ...............................................................Steve Stevens

American Express Company ....................................................................................Gail Chapman

American Express Company ....................................................................................Vinay Singh

American Express Company ....................................................................................Alejandro Vences

American Express Company ....................................................................................Kevin Welsh

Bank of America .......................................................................................................Andi Coleman

Capital One ...............................................................................................................Johnny Lee

Cisco .........................................................................................................................Scott Fluhrer

Citigroup, Inc. ............................................................................................................Sudha Iyer

Communications Security Establishment .................................................................Jonathan Hammell

Communications Security Establishment .................................................................Erin McAfee

Conexxus, Inc. ..........................................................................................................David Ezell

Consultant .................................................................................................................David Cooper

CryptoNext Security ..................................................................................................Ludovic Perret

Delap LLP .................................................................................................................Spencer Giles

Diebold Nixdorf .........................................................................................................Alexander Lindemeier

Digicert ......................................................................................................................Tim Hollebeek

Federal Reserve Bank ..............................................................................................Scott Gaiti

Federal Reserve Bank ..............................................................................................Dillon Glasser

Federal Reserve Bank ..............................................................................................Ray Green

Federal Reserve Bank ..............................................................................................Mark Kielman

Federal Reserve Bank ..............................................................................................Francois Leclerc

Fiserv ........................................................................................................................Lisa Curry

FIX Protocol Ltd - FPL ..............................................................................................Daniel Bukowski

FIX Protocol Ltd - FPL ..............................................................................................James Northey

Gilbarco ....................................................................................................................Bruce Welch

Harrisburg University ................................................................................................Terrill Frantz

IBM Corporation ........................................................................................................Anne Dames

IBM Corporation ........................................................................................................Richard Kisley


IBM Corporation ........................................................................................................Michael Osborne

Introspect Tech .........................................................................................................Tony Thompson

ISARA Corporation ...................................................................................................Philip Lafrance

J.P. Morgan Chase ...................................................................................................Darryl Scott

Member Emeritus .....................................................................................................Todd Arnold

Member Emeritus .....................................................................................................Larry Hines

Member Emeritus .....................................................................................................Bill Poletti

Member Emeritus .....................................................................................................Richard Sweeney

Micro Focus LLC - USSM .........................................................................................Luther Martin

Micro Focus LLC - USSM .........................................................................................Timothy Roake

National Security Agency .........................................................................................Mike Boyle

National Security Agency .........................................................................................Austin Calder

National Security Agency .........................................................................................Nick Gajcowski

Office of Financial Research, U.S. Treasury Department ........................................Jennifer Bond-Caswell

PCI Security Standards Council ...............................................................................Ralph Poore

Performance Food Group .........................................................................................Pinkaj Klokkenga

Qrypt .........................................................................................................................Denis Mandich

Quantum Bridge Technologies .................................................................................Mattia Montagna

Quantum Bridge Technologies .................................................................................Paul O’Leary

SteinTech LLC ..........................................................................................................Clay Epstein

Sympatico .................................................................................................................Mary Horrigan

TECSEC Incorporated ..............................................................................................Ed Scheidt

TECSEC Incorporated ..............................................................................................Jay Wack

Thales DIS CPL USA Inc ..........................................................................................Amit Sinha

The Clearing House ..................................................................................................Jackie Pagán

University Bank .........................................................................................................Michael Talley

University of Maryland ..............................................................................................Jonathan Katz

University of Waterloo...............................................................................................Chin Lee

University of Waterloo...............................................................................................Michele Mosca

Utimaco Inc. ..............................................................................................................Jillian Benedick

VeriFone, Inc. ...........................................................................................................John Barrowman

VeriFone, Inc. ...........................................................................................................Chetan Katira

VeriFone, Inc. ...........................................................................................................Joachim Vance

VISA ..........................................................................................................................Yilei Chen

VISA ..........................................................................................................................Eric Le Saint

VISA ..........................................................................................................................Peihan Miao

VISA ..........................................................................................................................Kim Wagner

VISA ..........................................................................................................................Gaven Watson

Wells Fargo Bank .....................................................................................................Peter Bordow

Wells Fargo Bank .....................................................................................................Robert Carter

Wells Fargo Bank .....................................................................................................Goriola Dawodu

Wells Fargo Bank .....................................................................................................Joe Janas

Wells Fargo Bank .....................................................................................................Rameshchandra Ketharaju

Wells Fargo Bank .....................................................................................................Abhijit Rao

Wells Fargo Bank .....................................................................................................Jeff Stapleton

Wells Fargo Bank .....................................................................................................Tony Stieber

Wells Fargo Bank .....................................................................................................Hunter Storm

Wells Fargo Bank .....................................................................................................Richard Toohey

Whitebox Advisors ....................................................................................................Kerry Manaster

United States Military Academy West Point .............................................................Lubjana Beshaj



ASC X9 IR-F01-2022

Quantum Computing Risks to the Financial Services Industry

Informative Report

1 Executive Summary

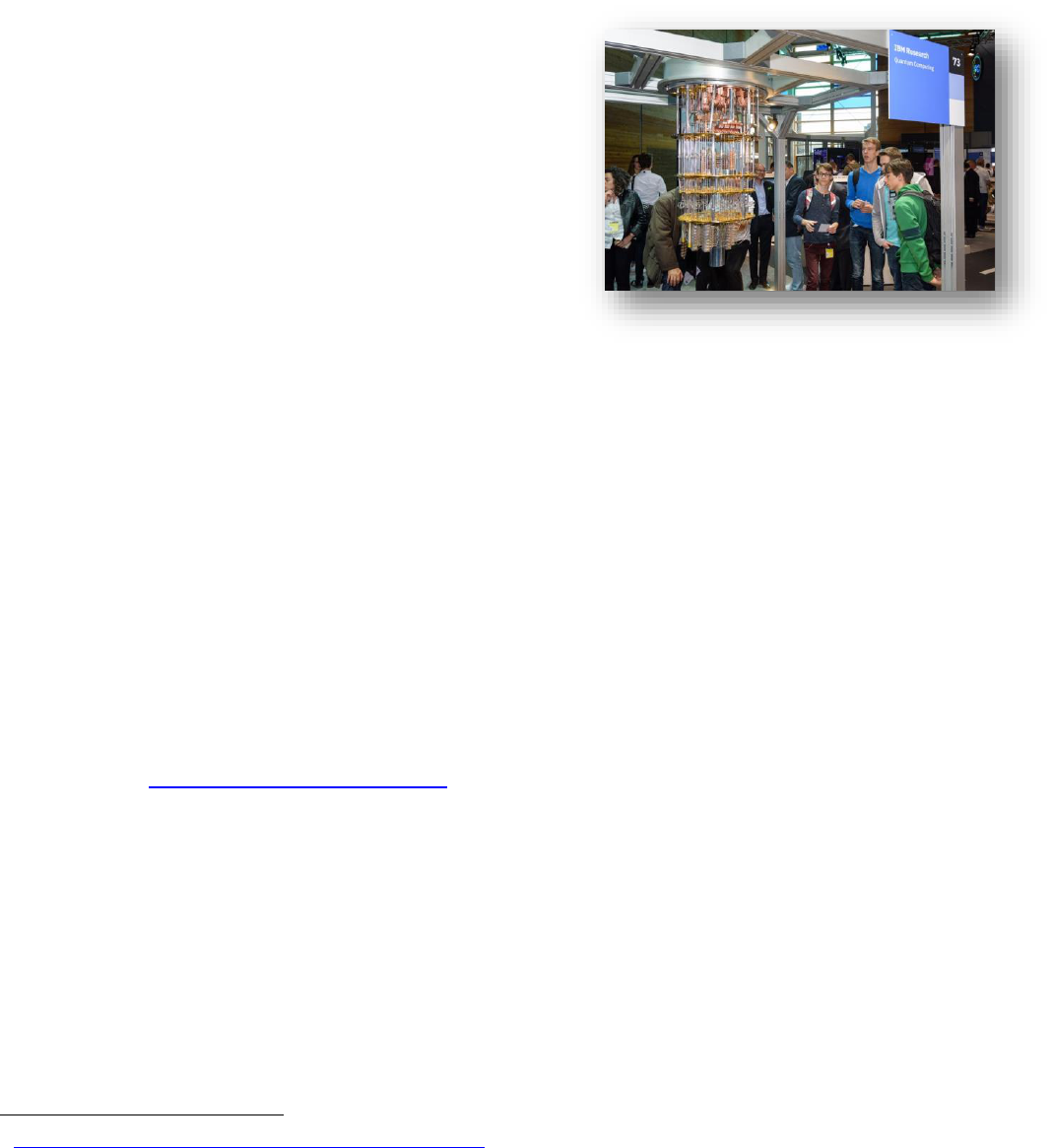

A Cryptographically Relevant Quantum Computer (CRQC) is a computer that harnesses quantum mechanical phenomena as computing elements and has operating parameters sufficient to break some of today’s most commonly used cryptographic algorithms in a short period of time. In some cases, the time to break a code is expected to be measured in minutes or hours. Much smaller and less capable quantum computers exist today, but building a CRQC is beyond the ability of current technology. However, tens of billions of dollars a year are being spent on research to achieve a CRQC. For decades, the question was whether the issues and technological barriers preventing the creation of a cryptography-breaking quantum computer could ever be overcome. It is now generally accepted that the question is “when” they will be solved.

If you accept the premise that the arrival of a CRQC is a matter of “when” and not “if”, your mindset should shift from simply monitoring progress to planning for its arrival. The results of such planning will look different for each agency, organization, or company. They depend on the types of assets it needs to protect and on how long that protection must withstand attacks by both conventional and quantum means. That said, the planning processes for all entities have requirements in common. For example, each must identify its classes of assets and determine what additional protections those assets will require to withstand quantum attacks. Each class is defined by four factors: the length of time the assets must be protected, the value of the assets, the exposure the assets have to quantum attack vectors (e.g., is the data normally transmitted over the Internet?), and the ability of currently used cryptography to protect the assets. Actions to protect each class of assets must be defined; this includes creating plans, budgets, and time frames to implement the actions. Required changes to current cryptography can range from increasing key lengths to replacing some or all of the cryptographic algorithms and methods in use. Regrettably, long-lived data that is currently protected with quantum-vulnerable algorithms is already at risk, as attackers can capture the encrypted data and store it for future decryption with a CRQC.

If you accept the inevitability of a CRQC, the central question is “when”. There is no consensus on this issue. Timeframes offered by different experts vary widely, from 5 years to 30 years. Much depends on the amount of money spent on R&D and on the ability to solve the remaining engineering problems. Another issue is that we may not know if or when the first CRQC is actually created, as some critical work is being conducted in secret. The best predictions for the arrival of a CRQC typically assign a percentage denoting the level of confidence that a CRQC will arrive by various future dates. Because of all the unknown variables, the event horizon for the arrival of a CRQC spans at least 10 years, with the highest probability falling between 10 and 20 years from now. As time passes, the confidence levels for the expected arrival times will almost certainly increase, but that could mean less time to prepare.

The remainder of this document provides a more detailed and technical review of quantum computers and their development. It covers how they threaten modern-day information security and how to plan to mitigate the risks they pose, including guidance on performing cryptographic transitions to quantum-safe algorithms. The ASC X9 Quantum Computing Risk Study Group is composed of industry experts who will continue to track the issues surrounding, and the development of, a CRQC. Future editions of this report are planned, as are other related reports. The group is open to anyone who wishes to participate. Thank you for your interest.


2 Introduction

2.1 Background

This document provides a high-level but broad background on quantum computing and the risks it is expected to pose to cryptography, specifically the cryptography used by the financial services industry. Further, this document gives some suggestions for organizations to assess and mitigate these quantum risks.

The quantum computing landscape is changing at an increasing rate as more research and larger investments are directed toward the development of quantum technologies. Across the globe, more and more attention is being given to the advancement of quantum computing, including fundamental research, physical development, and applications of the technology. As the applications for quantum computing become more varied and more practical, more people and organizations are entering the field and contributing to its further development. From Artificial Intelligence and Machine Learning (AI/ML) to applications in the Life Sciences and fundamental scientific research, all the way to securing nation states from cyberattacks, the world is only now beginning to realize the breadth of applicability of these next-paradigm machines.

According to a November 2021 report by International Data Corporation (IDC)¹, the global market for quantum computing is expected to grow to $8.6 billion (USD) by the end of 2027, up from $412 million in 2020. If this estimate holds true, it represents a compound annual growth rate (CAGR) of nearly 51% over IDC’s six-year forecast period. Further, the same IDC report forecasts that investment in quantum computing will grow at an 11.3% CAGR over that same six-year period. IDC also makes the very reasonable suggestion that the projected increase in investment can help overcome current technological and engineering hurdles. That investment could propel the quantum computing market into the next stages of maturity. Importantly, these estimates very likely do not include discretionary or secret government spending, so one can expect that the numbers presented in IDC’s (unclassified) report fall short of the true numbers.
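The growth figures above use the standard compound annual growth rate formula, CAGR = (end / start)^(1 / years) − 1. The minimal Python sketch below applies it to the market figures quoted from IDC; the function name `cagr` is illustrative. Applied directly to the 2020 and 2027 values, which span seven years of growth, the formula gives roughly 54%. The roughly 51% figure attributed to IDC therefore appears to be measured over IDC's own six-year forecast window, from a base-year value not quoted here. That reading is an assumption, not something this report states.

```python
def cagr(start_value: float, end_value: float, years: float) -> float:
    """Compound annual growth rate: (end / start) ** (1 / years) - 1."""
    if start_value <= 0 or end_value <= 0 or years <= 0:
        raise ValueError("values and period must be positive")
    return (end_value / start_value) ** (1.0 / years) - 1.0


# Market figures quoted from the IDC report, in billions of USD.
market_2020 = 0.412
market_2027 = 8.6

# Seven years of growth separate the 2020 and 2027 figures.
rate = cagr(market_2020, market_2027, years=7)
print(f"CAGR 2020-2027: {rate:.1%}")  # roughly 54%
```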

This document is the second edition of the X9 Quantum Computing Risks to the Financial Services Industry Informative Report; the first edition was published in late 2019. X9 is committed to tracking the ongoing evolution of quantum computing and will continue to periodically revise this document. X9 also maintains a quantum computing risk web page on its public web site that tracks major developments and has links to relevant documents from other sites: https://x9.org/quantum-computing/. Please contact X9 staff if you have information you would like referenced on this page.

This document provides information tailored toward both management and technical readers. The Overview section (section 6) provides a shorter and less technical description of quantum computing: what it can and cannot do, the risks it is expected to pose, and how to mitigate them. While it is not possible to avoid all discussion of technical issues, the Overview is written to be as non-technical as possible. The remaining sections of this document provide a much more in-depth description and background of the software and hardware that make up a quantum computer. This includes a discussion of the quantum algorithms that can break certain cryptographic systems. This document also discusses some of the hurdles that must still be overcome to create a large-scale, fault-tolerant quantum computer. Finally, the document gives recommendations for assessing and mitigating quantum computing threats, including steps that can be taken now to defend against future quantum-enabled attacks.

1 https://www.idc.com/getdoc.jsp?containerId=prUS48414121


This report is primarily concerned with the nature of quantum computing and the risks it poses to currently deployed cryptography. The potential non-cryptographic applications of quantum computing are therefore not detailed herein. Because the discussion is limited to the information security impacts of quantum computing, a reader could easily conclude, wrongly, that quantum computing will be a net negative for society and will have no positive use cases. In reality, numerous applications of quantum computing are expected to be enormously beneficial. Example applications include materials science, the design of pharmaceuticals, chemical system simulations, artificial intelligence and machine learning, weather prediction, and various other optimization problems (some of these examples are briefly discussed at the top of section 7). IBM’s The Quantum Decade report offers a sampling of positive applications of quantum computing within different industries, along with other excellent information on quantum computing. The reader is encouraged to download it; the report is freely available through the IBM website at the following URL: https://www.ibm.com/thought-leadership/institute-business-value/report/quantum-decade#.

2.2 Purpose

The purpose of this report is:

• To provide information on the threats and risks posed by large-scale, fault-tolerant quantum computers, including how quantum computers might be used to attack current cryptosystems, threat models for quantum-enabled attacks, and other cryptographic and non-cryptographic considerations.

• To describe how quantum computers operate and how they might be built, and to provide regularly updated estimates of when a large-scale, fault-tolerant quantum computer will be built.

• To provide a description of post quantum cryptography and the current state of post quantum algorithm standardization.

• To provide suggestions for assessing and mitigating the threats and risks posed by quantum computing.

2.3 Scope

This report provides:

• A description of what quantum computing is, how it differs from classical computing, and the underlying physical properties quantum computers use.

• A description of qubits, including an explanation of physical and logical qubits, possible ways to build physical qubits, and ways to measure qubit quality.

• A description of the general technological and engineering requirements for building a large-scale, fault-tolerant quantum computer, and possible timelines for when one might be built.

• A description of different types of quantum computation devices.

• A description of post quantum cryptography and the ongoing efforts to standardize post quantum cryptographic algorithms.

• A description of the quantum computing threat to current cryptosystems, protocols, and primitives.

• A description of the more general threats and risks posed by quantum computers, including threat models and different components of the threats and risks.

• Suggestions for assessing and mitigating the threats and risks posed by quantum computers.

2.4 Future Editions and Participation

The ASC X9F Quantum Computing Risk Study Group (QCR SG) aims to regularly review and update the present

document. Participation in the development of future editions is open to X9 members and non-members alike. If

your organization is not an X9 member and wishes to participate in the Study Group, you are encouraged to contact

the Study Group Chair, Steve Stevens, at Steve.Steve[email protected] for more information about how you can contribute

your expertise to this important subject.


3 Normative References

Not applicable.

4 Terms and Definitions

For the purposes of this document, the following terms and definitions apply:

4.1 Advanced encryption standard (AES)

AES is a symmetric encryption algorithm defined by FIPS PUB 197. With an appropriate mode of operation, it can provide privacy (encryption) and integrity validation. AES uses a 128-bit block size and supports key lengths of 128, 192, and 256 bits.

4.2 Algorithm

A clearly specified mathematical process for computation; a set of rules that, if followed, will give a prescribed result.

4.3 Asymmetric cryptography

Cryptography that uses two separate keys to exchange data, one to encrypt or digitally sign the data and one for

decrypting the data or verifying the digital signature. Also known as public key cryptography.

4.4 Bit string

An ordered sequence of zeros and ones (e.g., 0101011100).

4.5 Bit length

A positive integer that expresses the number of bits in a bit string.

4.6 Bloch Sphere

A three-dimensional geometric representation of the pure state space of a single quantum bit.
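Concretely, every pure single-qubit state can be written in terms of two angles that locate a point on the sphere's surface, ignoring an unobservable global phase. The standard parameterization is:

```latex
|\psi\rangle = \cos\frac{\theta}{2}\,|0\rangle + e^{i\varphi}\sin\frac{\theta}{2}\,|1\rangle,
\qquad 0 \le \theta \le \pi,\quad 0 \le \varphi < 2\pi
```

The north pole ($\theta = 0$) corresponds to $|0\rangle$ and the south pole ($\theta = \pi$) to $|1\rangle$. Points on the equator ($\theta = \pi/2$) are equal-weight superpositions; for example, $\varphi = 0$ gives $(|0\rangle + |1\rangle)/\sqrt{2}$.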

4.7 Block cipher

An invertible symmetric-key cryptographic algorithm that operates on fixed-length blocks of input using a secret

key and an unvarying transformation algorithm. The resulting output block is the same length as the input block.

4.8 Brute force attack

A trial-and-error method used to obtain information such as an encryption key, user password, or personal identification number (PIN); the attacker simply tries all possible values of the key, password, or PIN until finding the correct one. In a brute force attack, automated software is used to generate a large number of consecutive guesses as to the value of the desired data.
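To make the definition concrete, the minimal Python sketch below brute-forces a deliberately tiny, hypothetical 16-bit secret. The attacker checks each guess against the secret's SHA-256 digest. This setup is an illustrative assumption, not a real protocol. The point is that the number of guesses is bounded by 2^n for an n-bit key, which is why realistic key lengths put exhaustive search out of reach.

```python
import hashlib
from typing import Optional


def brute_force_key(target_digest: bytes, key_bits: int) -> tuple[Optional[int], int]:
    """Try every key value in [0, 2**key_bits) until one hashes to target_digest.

    Returns (key, guesses); key is None if no candidate matched.
    """
    key_len = (key_bits + 7) // 8  # bytes needed to encode a candidate
    guesses = 0
    for candidate in range(2 ** key_bits):
        guesses += 1
        digest = hashlib.sha256(candidate.to_bytes(key_len, "big")).digest()
        if digest == target_digest:
            return candidate, guesses
    return None, guesses


# Hypothetical 16-bit secret; the attacker only sees its SHA-256 digest.
secret = 0xBEEF
target = hashlib.sha256(secret.to_bytes(2, "big")).digest()
found, tries = brute_force_key(target, key_bits=16)
print(found == secret, tries)  # True 48880
```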

4.9 Certificate

A set of data that uniquely identifies an entity, contains the entity’s public key and possibly other information, and

is digitally signed by a trusted party, thereby binding the public key to the entity identified in the certificate.

Additional information in the certificate could specify how the key is used and the validity period of the certificate.

NOTE: Also known as a digital certificate or a public key certificate.

4.10 Certificate Authority (CA)

A trusted entity that issues and revokes public key certificates.


4.11 Cipher

Series of transformations that converts plaintext to ciphertext using a cryptographic key.

4.12 Ciphertext

Data in its encrypted form.

4.13 Circuit

A way of expressing the sequence of operations required for implementing a given algorithm.

4.14 Circuit diagram

A graphical representation of a circuit.

4.15 Classical computer

A computer that operates using binary (Boolean) logic; it can be modeled as a deterministic Turing machine.

4.16 Code-based cryptography

The branch of post quantum cryptography concerned with the development of cryptographic systems based on the

difficulty of decoding error correcting codes.

4.17 Computer

A device that accepts digital data and manipulates the information based on a program or sequence of instructions

for how data is to be processed.

4.18 Confidentiality

Preserving authorized restrictions on information access and disclosure, including means for protecting personal

privacy and proprietary information.

4.19 Cryptanalysis

The study of mathematical techniques for attempting to defeat cryptographic techniques and information-system

security. This includes the process of looking for errors or weaknesses in the implementation of an algorithm or in

the algorithm itself.

4.20 Cryptography

Discipline that embodies principles, means, and methods for the transformation of data to hide its information content, prevent its undetected modification, prevent its unauthorized use, or a combination thereof.

4.21 Cryptographic agility

The capacity of a system to change the cryptographic algorithms or primitives it utilizes without requiring significant

changes to system infrastructure, and while minimizing disruption to system availability and functionality and that of

dependent systems.

NOTE: Also known as crypto agility.
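One common way to build in this kind of agility is to select algorithms by name from configuration rather than hard-coding them. The minimal Python sketch below assumes a hypothetical configuration dictionary with a `digest_algorithm` key. It uses the standard-library `hashlib.new` constructor. Real systems must also handle key sizes, protocol negotiation, and data already protected under the old algorithm; the sketch covers only the selection step.

```python
import hashlib

# Hypothetical configuration: the algorithm is named in data, not hard-coded.
CONFIG = {"digest_algorithm": "sha256"}


def compute_digest(message: bytes, config: dict) -> bytes:
    """Hash message with whichever algorithm the configuration names."""
    algorithm = config["digest_algorithm"]
    if algorithm not in hashlib.algorithms_available:
        raise ValueError(f"unsupported digest algorithm: {algorithm}")
    return hashlib.new(algorithm, message).digest()


# Migrating to a different hash is a configuration change, not a code change.
old = compute_digest(b"payment record", CONFIG)
new = compute_digest(b"payment record", {"digest_algorithm": "sha3_256"})
print(len(old), len(new))  # 32 32
```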

4.22 Cryptographic hash function

A function that maps a bit string of arbitrary length to a fixed-length bit string. The function is usually expected to

have the properties of preimage resistance and collision resistance.

NOTE: A cryptographic hash function can satisfy additional security properties beyond those named above.
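The fixed-length mapping can be seen with SHA-256, one widely used cryptographic hash function available in Python's standard `hashlib` module. The sketch below shows inputs of very different lengths producing 256-bit digests. It also shows that a one-character change yields an unrelated-looking output. It illustrates the input/output behavior only: preimage and collision resistance are security assumptions that no example can demonstrate.

```python
import hashlib

# SHA-256 maps inputs of any length to a 256-bit (32-byte) digest.
messages = [b"", b"abc", b"x" * 1_000_000]
for message in messages:
    digest = hashlib.sha256(message).digest()
    print(f"input {len(message):>7} bytes -> {len(digest) * 8}-bit digest {digest.hex()[:16]}...")

# Changing a single character yields an unrelated-looking digest.
print(hashlib.sha256(b"pay $100").hexdigest()[:16])
print(hashlib.sha256(b"pay $900").hexdigest()[:16])
```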

4.23 Cryptographic key

A parameter used in conjunction with a cryptographic algorithm that determines the specific operation of that

algorithm.

4.24 Discrete logarithm

Given any (discrete) group $G$ and elements $g, h$ of $G$, an integer $x$ such that $g^x = h$ is called the discrete logarithm (of $h$ to the base $g$) and is denoted by $\log_g h$.
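The definition can be illustrated by exhaustive search in a toy group. The Python sketch below finds x with g^x ≡ h (mod p) in the multiplicative group of integers modulo a small prime. The prime 23 and the function name are illustrative assumptions. The naive search takes up to p − 1 steps, which is exponential in the bit length of p. Better classical algorithms exist, but none is known to run in polynomial time for well-chosen groups. Shor's algorithm would, on a sufficiently large quantum computer.

```python
from typing import Optional


def discrete_log_bruteforce(g: int, h: int, p: int) -> Optional[int]:
    """Return the smallest x >= 0 with g**x == h (mod p), or None if none exists.

    Exhaustive search over the exponents 0 .. p-2 (the group has p - 1 elements).
    """
    value = 1  # g**0 mod p
    for x in range(p - 1):
        if value == h % p:
            return x
        value = (value * g) % p
    return None


# Toy group: nonzero integers modulo the prime 23 under multiplication.
print(discrete_log_bruteforce(5, 8, 23))  # 6, since 5**6 = 15625 = 679*23 + 8
```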


4.25 Elliptic curve cryptography

The branch of cryptography concerned with the development of cryptographic systems based on the difficulty of

calculating discrete logarithms in elliptic curve groups.

4.26 Encryption

The process of using algorithmic schemes to transform plaintext information into a non-readable form called

ciphertext. A key (or algorithm) is required to decrypt the information and return it to its original plaintext format.

4.27 Ephemeral Key

Private or public key that is unique for each execution of a cryptographic scheme.

NOTE: An ephemeral private key is to be destroyed as soon as computational need for it is complete. An

ephemeral public key may or may not be certified.

4.28 Exhaustive key strength

If a cryptographic system employs a key with a bit length of $n$, then there are $2^n$ possible keys for a given instance of that system. It takes at most $2^n$ guesses to find the correct key via a brute force attack. In this case, the exhaustive key strength of the system is $n$ bits.

4.29 Fault tolerance

The property that enables a system to continue operating properly in the event of the failure of one or more of its

components.

4.30 Grover’s algorithm

A quantum algorithm that finds, with high probability, the unique input to a black box function that produces a

particular output value. In theory, Grover's algorithm is known to reduce the security strength of symmetric

cryptosystems and primitives. However, due to real-world considerations (e.g., resource costs), Grover’s algorithm

might not be practical for cryptographic applications.
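The usual back-of-the-envelope estimate is that Grover's algorithm needs about (π/4)·√N oracle calls to search N = 2^n keys. That roughly halves the effective exhaustive key strength (4.28). The Python sketch below computes this idealized query count for common AES key lengths. It deliberately ignores error-correction overhead and the sequential nature of Grover iterations, which are the kinds of real-world costs noted above. It is therefore not a practical cost estimate. The function name is illustrative.

```python
import math


def grover_iterations(key_bits: int) -> float:
    """Idealized Grover iteration count, (pi/4) * sqrt(2**key_bits),
    for a search with one marked item among 2**key_bits candidates."""
    return (math.pi / 4) * math.sqrt(2.0 ** key_bits)


for bits in (128, 192, 256):
    classical = 2.0 ** bits  # worst-case classical brute-force guesses
    quantum = grover_iterations(bits)
    print(f"{bits}-bit key: classical ~2^{math.log2(classical):.0f} guesses, "
          f"Grover ~2^{math.log2(quantum):.1f} iterations")
```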

4.31 Integer

A member of the set of positive whole numbers $\{1, 2, 3, \ldots\}$, negative whole numbers $\{-1, -2, -3, \ldots\}$, and zero $\{0\}$.

4.32 Integer factor

A non-zero integer that can be divided evenly into another integer.

4.33 Integer factorization

The process of calculating the prime factors of a given integer. The Fundamental Theorem of Arithmetic states that each positive integer has a unique factorization into prime numbers, excluding permutations of the ordering. Negative integers also have unique prime factorizations up to ordering, but additionally include the non-prime factor $-1$.
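For small numbers, the factorization can be computed by trial division, as in the Python sketch below. The function name is illustrative, and negative inputs contribute a leading −1 as described above. Trial division takes up to about √n steps, which is exponential in the bit length of n. The best known classical methods are much faster but still super-polynomial. This gap is why factoring-based schemes such as RSA rely on moduli of thousands of bits.

```python
def prime_factors(n: int) -> list[int]:
    """Factor a nonzero integer by trial division.

    Returns prime factors in nondecreasing order; a negative input
    contributes a leading -1 factor. 1 has an empty factorization.
    """
    if n == 0:
        raise ValueError("0 has no prime factorization")
    factors: list[int] = []
    if n < 0:
        factors.append(-1)
        n = -n
    d = 2
    while d * d <= n:
        while n % d == 0:
            factors.append(d)
            n //= d
        d += 1
    if n > 1:  # whatever remains is a prime larger than sqrt(original n)
        factors.append(n)
    return factors


print(prime_factors(360))  # [2, 2, 2, 3, 3, 5]
print(prime_factors(-84))  # [-1, 2, 2, 3, 7]
```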

4.34 Intractable problem

A problem for which there is no known efficient algorithm (i.e., an algorithm with polynomial complexity) for solving

it. If such an algorithm is known, then the problem is said to be tractable.

4.35 Key agreement

A key-establishment procedure where the resultant keying material is a function of information contributed by two

or more participants, so that an entity cannot predetermine the resulting value of the keying material independently

of any other entity’s contribution.
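Finite-field Diffie-Hellman is a classic example of key agreement, and its security rests on the discrete logarithm problem (4.24). The Python sketch below uses the hypothetical toy parameters p = 23 and g = 5. Each party contributes a random private exponent, and both arrive at the same shared value without either choosing it alone. Real deployments use standardized groups of 2048 bits or more, or elliptic curves, together with authentication. Those requirements are omitted here.

```python
import secrets

# Toy public parameters (prime modulus and generator), far too small for real use.
P = 23
G = 5


def keypair() -> tuple[int, int]:
    """Return (private exponent, public value G**private mod P)."""
    private = secrets.randbelow(P - 2) + 1  # private exponent in [1, P-2]
    return private, pow(G, private, P)


alice_private, alice_public = keypair()
bob_private, bob_public = keypair()

# Each party combines its own private value with the other party's public value.
alice_shared = pow(bob_public, alice_private, P)
bob_shared = pow(alice_public, bob_private, P)
assert alice_shared == bob_shared
print("shared secret:", alice_shared)
```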

4.36 Key establishment

A procedure that results in secret keying material that is shared among different parties.


4.37 Key management

The activities involved in the handling of cryptographic keys and other related parameters (e.g., IVs and domain

parameters) during the entire life cycle of the keys, including their generation, storage, establishment, entry and

output into cryptographic modules, use and destruction.

4.38 Lattice-based cryptography

The branch of post quantum cryptography concerned with the development of cryptographic systems based on the

difficulty of solving certain problems within discrete additive subgroups of $n$-dimensional Euclidean space.

4.39 Logic gate

The mechanism used to perform logical operations on data. Examples of classical gates include the AND, OR,

and NOT gates. Examples of quantum gates include the Pauli X, Y, and Z gates, the Hadamard gate, and the

Phase-shift gate.

4.40 Logical qubit

A system composed of one or more physical qubits implemented to behave as a single qubit in a quantum circuit.

Quantum logic gates are applied to logical qubits during the execution of a quantum circuit.

4.41 Key pair

A public key and its corresponding private key; a key pair is used with a public-key algorithm.

4.42 Multivariate quadratic polynomial-based cryptography

The branch of post quantum cryptography concerned with the development of cryptographic systems based on the

difficulty of finding solutions to systems of multivariate quadratic polynomials.

4.43 Offline attack

Occurs when an adversary precomputes the relevant information to break the security of a system (such as a

cryptographic security protocol), with the intent to use that information sometime in the future when the system is

run.

4.44 Online attack

Occurs when an adversary attacks a system (such as a cryptographic security protocol) in real time, for example while the system is in use or while the cryptographic protocol is actively being executed.

4.45 Physical qubit

A physical system that can exist in any superposition of two independent (distinguishable) quantum states and is

subject to noise and errors that may or may not be corrected for.

4.46 Plaintext

Intelligible data that has meaning and can be read or acted upon without the application of decryption. Also known

as cleartext.

4.47 Post quantum cryptography

The branch of cryptography concerned with the development of asymmetric cryptographic systems resistant to

attacks which utilize either quantum computers or classical computers.

NOTE: Post quantum cryptography is often used synonymously with quantum-safe cryptography (4.60). However,

the present document distinguishes the two terms.

4.48 Prime number

A positive integer not equal to 1 whose only integer factors are 1 and itself. For example, the first few prime

numbers are 2, 3, 5, 7, 11, 13, 17, 19, 23, and 29.


4.49 Private key

In an asymmetric (public) key cryptosystem, the key of an entity’s key pair that is known only by that entity.

NOTE: A private key may be used to compute the corresponding public key, to make a digital signature that may be verified by the corresponding public key, to decrypt data encrypted by the corresponding public key, or, together with other information, to compute a piece of common shared secret information.

4.50 Public key

That key of an entity’s key pair that may be publicly known in an asymmetric (public) key cryptosystem.

NOTE: A public key may be used to verify a digital signature that is signed by the corresponding private key, to

encrypt data that may be decrypted by the corresponding private key, or by other parties to compute shared

information.

4.51 Quantum advantage

A quantum-capable device is said to have quantum advantage (over classical devices) if it can perform useful

operations no classical device can.

4.52 Quantum annealing

A process for finding optimal, or near optimal, solutions to certain kinds of computational problems by finding low-

energy states of a quantum system that encodes the given computational problem.

4.53 Quantum bit (qubit)

A quantum-mechanical system that can exist in two perfectly distinguishable states and that serves as the basic unit of quantum information. Unlike classical systems, in which a bit exists in one state or the other, a qubit can exist in a coherent superposition of both states simultaneously.

4.54 Quantum coherence

The property of a quantum system whereby it can maintain the purity of its state and not succumb to unintended

effects of the environment.

4.55 Quantum computer

A computer that operates and performs computations by leveraging the quantum mechanical properties of nature,

such as superposition, entanglement, and interference.

4.56 Quantum entanglement

Two or more quantum particles are entangled when their states cannot be described independently, regardless of their physical separation; the outcome of a measurement on one particle is correlated with the outcomes of measurements on the other(s).

4.57 Quantum error correction

The study of methods to protect quantum information from errors due to energy fluctuations, electromagnetic interference, environmental disturbances, and other events that can lead to decoherence.

4.58 Quantum fidelity

A probabilistic measure of how similar a given quantum state is to a target quantum state.
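For pure states, one common convention defines fidelity as the squared overlap F = |⟨ψ|φ⟩|²; some texts use the unsquared overlap instead. The plain-Python sketch below writes states as lists of complex amplitudes and uses an illustrative helper name. It computes the fidelity for a few single-qubit states. It does not handle mixed states, which require the more general density-matrix formula.

```python
import math


def fidelity(psi: list[complex], phi: list[complex]) -> float:
    """Fidelity |<psi|phi>|^2 between two normalized pure states."""
    if len(psi) != len(phi):
        raise ValueError("states must have the same dimension")
    overlap = sum(a.conjugate() * b for a, b in zip(psi, phi))  # inner product <psi|phi>
    return abs(overlap) ** 2


ket0 = [1 + 0j, 0j]
ket1 = [0j, 1 + 0j]
plus = [1 / math.sqrt(2) + 0j, 1 / math.sqrt(2) + 0j]  # equal superposition

print(fidelity(ket0, ket0))            # 1.0
print(fidelity(ket0, ket1))            # 0.0
print(round(fidelity(ket0, plus), 6))  # 0.5
```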

4.59 Quantum measurement

The act, intentional or otherwise, of collapsing a quantum wave function. Quantum measurement can be thought

of as a transformation of a quantum superposition into a pure, classical state.

4.60 Quantum-safe cryptography

The branch of cryptography concerned with the development of cryptographic systems resistant to attacks which

utilize either quantum computers or classical computers. Unlike post quantum cryptography, which focuses only

on asymmetric systems, quantum-safe cryptography includes the study of asymmetric systems, symmetric

systems, and quantum key distribution.


4.61 Quantum superposition

The quantum mechanical property whereby a quantum system exists in two or more distinguishable quantum

states at the same time. Such a superposition of quantum states is itself a quantum state.

4.62 Quantum supremacy

The point where quantum computers can do things that classical computers can’t, regardless of whether those

tasks are useful.

NOTE: Today, this term is mostly used in a historical context. Quantum advantage is the more modern and

suitable metric.

4.63 Quantum threshold theorem

A theorem stating that if the rate of physical errors in a quantum circuit can be made low enough (below some

threshold), then the logical error rate can be made arbitrarily small by adding some number of additional quantum

gates.

4.64 Quantum volume

A metric for quantifying the largest random quantum circuit of equal width and depth that a given quantum

computer can successfully implement.

4.65 Shor’s algorithm

A quantum algorithm that can find the prime factors of a given integer and calculate discrete logarithms. More

generally, Shor’s algorithm is an efficient quantum algorithm for solving the Hidden Subgroup Problem for finite

abelian groups. When run on a sufficiently large, fault-tolerant quantum computer (a CRQC), Shor’s algorithm breaks

classical asymmetric cryptosystems such as RSA and those based on Elliptic Curve Cryptography.
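The quantum part of Shor's algorithm finds the order (period) r of a number a modulo N. Turning that order into factors of N is classical number theory. The Python sketch below shows that classical reduction for small N. A brute-force loop stands in for the quantum order-finding step, so the sketch runs in exponential time and is only practical for toy inputs. The function names are hypothetical.

```python
import math
import random

def find_order(a, n):
    """Smallest r > 0 with a**r mod n == 1 (requires gcd(a, n) == 1).

    Brute force stands in for the quantum step here: Shor's algorithm finds
    r efficiently with the quantum Fourier transform, whereas this loop
    takes time exponential in the bit length of n.
    """
    r, x = 1, a % n
    while x != 1:
        x = (x * a) % n
        r += 1
    return r

def factor_via_order_finding(n, seed=None):
    """Split an odd composite n that is not a prime power into two factors,
    using the classical reduction from factoring to order finding."""
    rng = random.Random(seed)
    while True:
        a = rng.randrange(2, n - 1)
        g = math.gcd(a, n)
        if g > 1:                 # a already shares a factor with n
            return g, n // g
        r = find_order(a, n)
        if r % 2 == 1:
            continue              # odd order: retry with another a
        y = pow(a, r // 2, n)
        if y == n - 1:
            continue              # a**(r/2) = -1 mod n yields only trivial factors
        # n divides (y - 1)(y + 1) but neither factor alone,
        # so gcd(y - 1, n) is a nontrivial divisor of n.
        p = math.gcd(y - 1, n)
        return p, n // p

print(sorted(factor_via_order_finding(15, seed=1)))  # [3, 5]
print(sorted(factor_via_order_finding(21, seed=1)))  # [3, 7]
```

The same reduction applies to an RSA modulus. Only the order-finding step becomes infeasible classically at real key sizes, and that is exactly the step a CRQC would perform efficiently.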

4.66 Static key

Private or public key that is common to many executions of a cryptographic scheme.

NOTE: A static public key may be certified.
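The toy sketch below makes the static key concept concrete in a Diffie-Hellman exchange. A server's static key pair is reused across executions, while the client generates a fresh (ephemeral) key pair for each execution. The parameters and function names are hypothetical, and the group is far too small for real use. Like all finite-field Diffie-Hellman, this construction is also one that Shor's algorithm would break.

```python
import secrets

# Toy finite-field Diffie-Hellman parameters (insecure, for readability only):
# P is the Mersenne prime 2**31 - 1; G is an illustrative base. The shared-secret
# check below holds for any base, whether or not it generates the whole group.
P = 2**31 - 1
G = 7

def new_keypair():
    private = secrets.randbelow(P - 2) + 1      # 1 <= private <= P - 2
    return private, pow(G, private, P)

# Static key pair: generated once and reused across many key-agreement runs.
# Its public half is the kind of key a certificate could bind to the server.
server_priv, server_pub = new_keypair()

def key_agreement():
    # Ephemeral key pair: generated fresh by the client for this run only.
    client_priv, client_pub = new_keypair()
    client_secret = pow(server_pub, client_priv, P)
    server_secret = pow(client_pub, server_priv, P)
    assert client_secret == server_secret
    return client_pub, client_secret

run1 = key_agreement()
run2 = key_agreement()   # same static server key, new ephemeral client key
```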

4.67 Stream cipher

A symmetric encryption method in which a cryptographic key and algorithm are applied to each binary digit in a

data stream, one bit at a time.
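The sketch below illustrates the stream cipher idea. A keystream derived from the key is combined with the data by XOR. Working byte by byte still XORs each of the eight bits in every byte with its own keystream bit. The keystream generator here is a hypothetical construction built on SHAKE-256 for illustration only. Deployed stream ciphers such as ChaCha20 are purpose-built designs.

```python
import hashlib
import secrets

def keystream(key: bytes, nonce: bytes, length: int) -> bytes:
    # Hypothetical keystream generator built from SHAKE-256 (an extendable-output
    # function). Real stream ciphers such as ChaCha20 are purpose-built designs.
    return hashlib.shake_256(key + nonce).digest(length)

def xor_stream(key: bytes, nonce: bytes, data: bytes) -> bytes:
    # XOR every bit of the data with the matching keystream bit (8 bits per byte).
    # XOR is its own inverse, so the same call encrypts and decrypts.
    ks = keystream(key, nonce, len(data))
    return bytes(d ^ k for d, k in zip(data, ks))

key = secrets.token_bytes(32)
nonce = secrets.token_bytes(16)   # never reuse a (key, nonce) pair
plaintext = b"PAY 100.00 TO ACCT 42"
ciphertext = xor_stream(key, nonce, plaintext)
print(xor_stream(key, nonce, ciphertext) == plaintext)  # True
```

Reusing a key and nonce pair would produce the same keystream twice. The XOR of the two ciphertexts would then leak the XOR of the two plaintexts, which is why the nonce must be unique per message.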

4.68 Supersingular isogeny-based cryptography

The branch of post quantum cryptography concerned with the development of cryptographic systems based on the

difficulty of computing isogenies between supersingular elliptic curves.

4.69 Symmetric cryptography

Cryptography that uses the same secret key for its operation and, if applicable, for reversing the effects of the

operation (e.g., an AES key for encryption and decryption).

NOTE: The key shall be kept secret between the two communicating parties.

4.70 Symmetric key

A cryptographic key that is used to perform both the cryptographic operation and its inverse (e.g., to encrypt, decrypt,

create a message authentication code, or verify a message authentication code).
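A message authentication code shows one symmetric key performing both an operation and its inverse check. The Python sketch below uses the standard-library `hmac` module with SHA-256 (HMAC appears in Clause 5). The sender creates a tag, and the receiver, holding the same secret key, verifies it. The message contents and the `verify` helper are illustrative.

```python
import hashlib
import hmac
import secrets

key = secrets.token_bytes(32)   # the shared symmetric key, known to both parties
message = b"transfer 250.00 from A to B"

# Sender: the key performs the operation (create the MAC).
tag = hmac.new(key, message, hashlib.sha256).digest()

# Receiver: the same key performs the inverse check (verify the MAC).
def verify(key: bytes, message: bytes, tag: bytes) -> bool:
    expected = hmac.new(key, message, hashlib.sha256).digest()
    return hmac.compare_digest(expected, tag)   # constant-time comparison

print(verify(key, message, tag))                         # True
print(verify(key, b"transfer 950.00 from A to B", tag))  # False
```

Anyone who holds the key can also create valid tags. For that reason the key must stay secret between the communicating parties.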

4.71 Unitary matrix

A square, complex-valued matrix whose inverse is equal to its conjugate transpose.
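Quantum gates are represented by unitary matrices. The pure-Python sketch below checks the definition directly: a square matrix U is unitary when U multiplied by its conjugate transpose gives the identity, up to floating-point tolerance. The helper names are illustrative. The Hadamard and Pauli-Y gates pass the check, and a non-unitary shear matrix fails it.

```python
import math

def conj_transpose(m):
    # Entry (i, j) of the result is the complex conjugate of entry (j, i).
    return [[m[j][i].conjugate() for j in range(len(m))] for i in range(len(m[0]))]

def matmul(a, b):
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]

def is_unitary(u, tol=1e-12):
    """True if u is square and u times its conjugate transpose is the identity,
    i.e., the inverse of u equals its conjugate transpose."""
    n = len(u)
    if any(len(row) != n for row in u):
        return False
    product = matmul(u, conj_transpose(u))
    return all(abs(product[i][j] - (1 if i == j else 0)) < tol
               for i in range(n) for j in range(n))

s = 1 / math.sqrt(2)
hadamard = [[s, s], [s, -s]]
pauli_y = [[0, -1j], [1j, 0]]
shear = [[1, 1], [0, 1]]
print(is_unitary(hadamard), is_unitary(pauli_y), is_unitary(shear))  # True True False
```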

5 Symbols and Abbreviations

For the purposes of this document, the following symbols and abbreviated terms apply:

5.1 3DES

Triple Data Encryption Algorithm

NOTE: Also abbreviated TDEA, and also known as the Triple Data Encryption Standard (TDES).

5.2 AAD

Additional Authenticated Data

5.3 ABE

Attribute-based Encryption

5.4 AEAD

Authenticated Encryption with Associated Data

5.5 AES

Advanced Encryption Standard

5.6 AH

Authentication Header

5.7 AI

Artificial Intelligence

5.8 BC

Business Continuity

5.9 BIA

Business Impact Assessment

5.10 BIKE

Bit-flipping Key Exchange

5.11 BSI

Federal Office for Information Security

NOTE: BSI is an agency of the German government. The above is an English translation of the German name:

Bundesamt für Sicherheit in der Informationstechnik.

5.12 CBC

Cipher Block Chaining

5.13 CCCS

Canadian Centre for Cyber Security

5.14 CFDIR

Canadian Forum for Digital Infrastructure Resilience

5.15 CSA

Cloud Security Alliance

5.16 COW

Coherent One-Way

5.17 CRYSTALS

Cryptographic Suite for Algebraic Lattices

5.18 CRQC

Cryptographically Relevant Quantum Computer

5.19 CSPRNG

Cryptographically Secure Pseudorandom Number Generator

5.20 CSS

Calderbank-Shor-Steane

5.21 DES

Data Encryption Standard

NOTE: Also known as the Data Encryption Algorithm (DEA).

5.22 DH

Diffie-Hellman

5.23 DHE

Ephemeral Diffie-Hellman

5.24 DR

Disaster Recovery

5.25 DSA

Digital Signature Algorithm

5.26 DTLS

Datagram Transport Layer Security

5.27 ECC

Elliptic Curve Cryptography

5.28 ECDH

Elliptic Curve Diffie-Hellman

5.29 ECDSA

Elliptic Curve Digital Signature Algorithm

5.30 ENISA

European Union Agency for Cybersecurity

NOTE: The abbreviation comes from the original name of the agency: the European Network and Information

Security Agency.

5.31 ESP

Encapsulating Security Payload

5.32 ETSI

European Telecommunications Standards Institute

5.33 FALCON

Fast-Fourier Lattice-based Compact Signatures over NTRU

5.34 FASP

Fast Adaptive and Secure Protocol

5.35 FHE

Fully Homomorphic Encryption

5.36 FIM

Federated Identity Management

5.37 FIPS

Federal Information Processing Standard

5.38 FT

Fourier Transform

5.39 FTPS

File Transfer Protocol Secure

NOTE: FTPS is distinct from SFTP.

5.40 GCHQ

Government Communications Headquarters

5.41 GDPR

General Data Protection Regulation

5.42 GeMSS

Great Multivariate Short Signature

5.43 HMAC

Hash-based Message Authentication Code

5.44 HQC

Hamming Quasi-Cyclic

5.45 HRNG

Hardware Random Number Generator

5.46 HSP

Hidden Subgroup Problem

5.47 HTTPS

Hypertext Transfer Protocol Secure

5.48 IANA

Internet Assigned Numbers Authority

5.49 IBM

International Business Machines

5.50 ICV

Integrity Check Value

5.51 IETF

Internet Engineering Task Force

5.52 IID

Independent and Identically Distributed

5.53 IIoT

Industrial Internet of Things

5.54 IKEv2

Internet Key Exchange version 2

5.55 IoT

Internet of Things

5.56 IPSec

Internet Protocol Security

5.57 ISO

International Organization for Standardization

NOTE: ISO is not technically an abbreviation. Rather, ISO is a convenient short form for the organization’s name,

selected to avoid the issue of the abbreviation being different in different languages.

5.58 ISP

Internet Service Provider

5.59 IV

Initialization Vector

5.60 KDF

Key Derivation Function

5.61 KEM

Key Encapsulation Mechanism

5.62 LMS

Leighton-Micali Signature

5.63 MIT

Massachusetts Institute of Technology

5.64 ML

Machine Learning

5.65 NISQ

Noisy Intermediate-Scale Quantum

5.66 NIST

National Institute of Standards and Technology

5.67 NSA

National Security Agency

5.68 NTRU

Number Theorists R Us

5.69 PAN

Primary Account Number

5.70 PHI

Protected Health Information

5.71 PIC

Photonic Integrated Circuit

5.72 PII

Personally Identifiable Information

5.73 PKI

Public Key Infrastructure

5.74 PQC

Post Quantum Cryptography

5.75 PRF

Pseudorandom Function

5.76 PRNG

Pseudorandom Number Generator

NOTE: Also known as a Deterministic Random Bit Generator (DRBG).

5.77 QAOA

Quantum Approximate Optimization Algorithm

5.78 QEC

Quantum Error Correction

5.79 QFT

Quantum Fourier Transform

5.80 QKD

Quantum Key Distribution

5.81 QRNG

Quantum Random Number Generator

5.82 RNG

Random Number Generator

5.83 SIKE

Supersingular Isogeny Key Encapsulation

5.84 RSA

Rivest-Shamir-Adleman

5.85 SCADA

Supervisory Control And Data Acquisition

5.86 SCP

Secure Copy

5.87 SFTP

Secure File Transfer Protocol

NOTE: SFTP is distinct from FTPS.

5.88 SHA

Secure Hash Algorithm

5.89 SIKE

Supersingular Isogeny Key Encapsulation

5.90 SLA

Service Level Agreement

5.91 SNDL

Store-Now, Decrypt-Later

NOTE: Also known as Harvest-Now, Decrypt-Later (HNDL).

5.92 SPHINCS+

Stateless Practical Hash-based Incredibly Nice Cryptographic Signatures Plus

5.93 SSH

Secure Shell

5.94 SSL

Secure Sockets Layer

5.95 SSO

Single Sign-On

5.96 TLS

Transport Layer Security

5.97 TRNG

True Random Number Generator

5.98 URL

Uniform Resource Locator

5.99 VPN

Virtual Private Network

5.100 VQE

Variational Quantum Eigensolver

5.101 WEF

World Economic Forum

5.102 XMSS

eXtended Merkle Signature Scheme

5.103 Y2Q

Years to Quantum

NOTE: The term is also used to describe the year in which the first CRQC is built.

6 Overview of Quantum Computing Risks, Timelines, and Mitigations

6.1 Quantum Computing vs Classical Computing

The computers we know and use today are also known as

classical computers. These machines work by taking in some

input, and then by using something called an instruction set,

they manipulate that input using classical Boolean logic (i.e.,

using the logical operations AND, OR, and NOT), to get some

desired output. The inputs, when reduced to their most basic

level, are simply strings of bits (i.e., 0's and 1's), which basically

indicate "on" or "off", or "yes" or "no". By using these basic

binary inputs, together with some clever instruction set,

classical computers can do the operations necessary to

perform computations. In practice, classical computers are

restricted by resource requirements such as time, memory, and

computational power. Even so, today's classical computers are

marvels of scientific achievement. The smartphone in your

pocket has more computing power and memory than the Apollo 11 Guidance Computer that helped land humans on the Moon

in 1969, and not just a little more: modern smartphones have more

than a million times the memory of the Apollo 11 Guidance Computer, and around one hundred thousand times the

computing power. Never mind what today's supercomputers are capable of.
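A ripple-carry adder illustrates how AND, OR, and NOT, applied to bits, are enough to build arithmetic. The Python sketch below uses hypothetical function names. It builds XOR from the three basic operations, combines them into a one-bit full adder, and chains full adders to add two binary numbers. Real processors implement this in hardware circuits, but the logic is the same.

```python
def AND(a, b):
    return a & b

def OR(a, b):
    return a | b

def NOT(a):
    return 1 - a

def XOR(a, b):
    # XOR built only from AND, OR, and NOT: (a OR b) AND NOT (a AND b)
    return AND(OR(a, b), NOT(AND(a, b)))

def full_adder(a, b, carry_in):
    # Adds three bits; returns (sum bit, carry-out bit).
    partial = XOR(a, b)
    total = XOR(partial, carry_in)
    carry_out = OR(AND(a, b), AND(partial, carry_in))
    return total, carry_out

def add(x_bits, y_bits):
    """Add two equal-length little-endian bit lists with chained full adders."""
    carry, out = 0, []
    for a, b in zip(x_bits, y_bits):
        s, carry = full_adder(a, b, carry)
        out.append(s)
    return out + [carry]

# 6 + 3 = 9; little-endian bits of 6 are [0, 1, 1, 0] and of 3 are [1, 1, 0, 0]
print(add([0, 1, 1, 0], [1, 1, 0, 0]))  # [1, 0, 0, 1, 0], i.e., 9
```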

Classical computers have come a long way, but they still have their limits in terms of solving many practical

computational problems. In theory, given unbounded resources to use (including as much time as is necessary), a

classical computer can solve any computational problem that can be solved. In reality, no classical machine, including

large high-performance computing clusters, has unbounded resources. As a result,

certain computational problems that we would like to solve remain out of reach.

Quantum computers, first theorized by the theoretical physicist Richard Feynman in 1982, are devices that operate

not on classical logic alone but on an extended quantum logic that harnesses physical properties of nature described

by quantum mechanics. The fundamental unit of classical computation is the (classical) bit, and the

fundamental unit of quantum computation is the quantum bit, or more commonly, the qubit.

Suppose for example that you flipped a coin. You know that when the coin lands, it will come up either heads or tails

(ignoring double-sided coins and the possibility of the coin landing on its edge). But what is the value of the coin

before it lands? At any point in time while the coin is in the air, is it heads? Tails? Neither? Both? There is not really

a good answer to this question, and without a complete understanding of the prior state of the coin and the forces

imparted on it, the end value is unknowable until the coin lands. Thus, we assign a probability to each outcome: a

50% chance of heads and 50% chance of tails.

When you measure a qubit (i.e., after the coin lands), its state will be either |0⟩ (tails) or |1⟩ (heads), which you can

easily read off. However, prior to measurement, the state of the qubit is actually a combination of |0⟩ and |1⟩. This

combination of values is called a superposition and is essentially what is meant in popular science articles that

describe quantum computing by saying things like “a qubit takes on all possible values at the same time”, or “a qubit

is every possible value in-between |0⟩ and |1⟩, at the same time”. An unmeasured qubit can indeed be in a state that

is simultaneously both of those values, just not necessarily in equal parts. The sizes of the parts are weighted by

complex numbers called probability amplitudes; the squared magnitude of each amplitude gives the probability of the

corresponding measurement outcome.
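Consider a single qubit written as α|0⟩ + β|1⟩, with complex amplitudes satisfying |α|² + |β|² = 1. Measuring it gives 0 with probability |α|² and 1 with probability |β|² (the Born rule), after which the qubit is left in the observed basis state. The Python sketch below simulates repeated measurements of an unequal superposition using a classical pseudorandom generator. It models only the outcome statistics, not a real quantum device, and its function name is hypothetical.

```python
import math
import random

def measure(alpha: complex, beta: complex, rng: random.Random) -> int:
    """Simulate measuring alpha|0> + beta|1> in the computational basis.

    Returns 0 with probability |alpha|**2 and 1 with probability |beta|**2
    (Born rule); the phase of each amplitude does not affect these odds.
    """
    norm = abs(alpha) ** 2 + abs(beta) ** 2
    if not math.isclose(norm, 1.0):
        raise ValueError("amplitudes must satisfy |alpha|^2 + |beta|^2 = 1")
    return 0 if rng.random() < abs(alpha) ** 2 else 1

# An unequal superposition: amplitudes sqrt(0.8) and sqrt(0.2) * i.
alpha, beta = math.sqrt(0.8), math.sqrt(0.2) * 1j
rng = random.Random(2022)
shots = [measure(alpha, beta, rng) for _ in range(10_000)]
print(shots.count(0) / len(shots))  # close to 0.8
```

Each individual shot yields a definite 0 or 1, like the landed coin. The amplitudes show up only in the frequencies observed over many repetitions.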