Syracuse University Syracuse University

SURFACE at Syracuse University SURFACE at Syracuse University

Theses - ALL

Winter 12-22-2021

Seedemu: The Seed Internet Emulator Seedemu: The Seed Internet Emulator

Honghao Zeng

Syracuse University

Follow this and additional works at: https://surface.syr.edu/thesis

Part of the Computer Sciences Commons

Recommended Citation Recommended Citation

Zeng, Honghao, "Seedemu: The Seed Internet Emulator" (2021).

Theses - ALL

. 592.

https://surface.syr.edu/thesis/592

This Thesis is brought to you for free and open access by SURFACE at Syracuse University. It has been accepted for

inclusion in Theses - ALL by an authorized administrator of SURFACE at Syracuse University. For more information,

please contact surface@syr.edu.

Abstract

I studied and experimented with the idea of building an emulator for the Internet. While there are

various already available options for such a task, none of them takes the emulation of the entire Internet

as an important feature in mind. Those emulators and simulators can handle small-scale networks pretty

well, but lacks the ability to handle large-size networks, mainly due to:

• Not being able to run many nodes, or requires very powerful hardware to do so,

• Lacks convenient ways to build a large emulation, and

• Lacks reusability: once something is built, it is very hard to re-use them in another emulation

I explored, in the context of for-education Internet emulators, different ways to overcome the above

limitations. I came up with a framework that enables one to create emulation using code. The framework

provides basic components of the Internet. Some examples include routers, servers, networks, Internet

exchanges, autonomous systems, and DNS infrastructure. Building emulation with code means it is easy

to build emulation with complex topologies since one can make use of the common control structures

like loops, subroutines, and functions.

The framework exploits the idea of “layers.” The idea of “layers” can be seen as an analogy of the

idea of “layers” in image processing software, in the sense that each layer contains parts of the image (in

this case, part of the emulation), and need to be “rendered” to obtain the resulting image.

There are two types of layers, base layers and service layers. Base layers describe the “base” of the

topologies, like how routers, servers, and networks are connected, how autonomous systems are peered

with each other; service layers describe the high-level services on the Internet. Examples of services

layers are web servers, DNS servers, ethereum nodes, and botnet nodes. No layers are tied to any other

layers, meaning each layer can be individually manipulated, exported, and re-used in another emulation.

One can build an entire DNS infrastructure, complete with root DNS, TLD DNS, and deploy it on any

base layer, even with vastly different underlying topologies.

The result of the rendered layer is a set of data structures that represents the objects in a network

emulation, like host, router, and networks. These representations can then be “compiled” into something

that one can execute using a compiler. The main target platform of the framework is Docker.

The source of the SEEDEMU project is publicly available on Github: https://github.com/seed-

labs/seed-emulator.

SEEDEMU: The SEED Internet Emulator

by

Honghao Zeng

B.S., Computer Science, Syracuse University, 2020

Thesis

Submitted in partial fulfillment of the requirements for the degree of

Master of Science in Computer Science.

Syracuse University

December 2021

Copyright © Honghao Zeng 2021

All rights reserved

Acknowledgements

Part of the SEEDEMU project is supported by the National Science Foundation (NSF) under grant

number 1718086 and an internal grant from the Syracuse University.

I would like to thank Dr. Wenliang Du for his invaluable supervision, mentorship, and insights on the

SEEDEMU project. I would like to also express my thanks to the following individuals for contributing

to the SEEDEMU project codebase (in alphabetical order):

• Keyi Li: contributed to the initial version of botnet service, tor network service, and ethereum

service.

• Rawi Sader: contributed to serval improvements to the ethereum service.

I would also like to thank my committee members, professor Endadul Hoque, professor Nadeem

Ghani, and professor Roger Chen for serving as my committee members. I would especially like to

express my appreciation to my family and friends for their encouragement and support all through my

studies.

iv

Contents

1 Introduction, background and motivations 1

1.1 The software emulation approach . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 1

1.2 The configuration files approach . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

1.3 Setting the goals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 3

1.4 The programming framework approach . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 4

2 Related works 5

2.1 NS-3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 5

2.2 EVE-NG and GNS3 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2.3 CORE . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2.4 Greybox . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 6

2.5 mini-Internet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 7

3 Core concepts 7

3.1 Breaking the emulation-building and emulation-running apart . . . . . . . . . . . . . . . . 7

3.2 Enabling sharing and re-use of the emulations . . . . . . . . . . . . . . . . . . . . . . . . . 10

3.3 The core classes and interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 11

3.3.1 The Node class . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.3.2 The Network class and the Interface . . . . . . . . . . . . . . . . . . . . . . . . . 12

3.4 The Emulator class . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 13

3.5 The object registry . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 14

3.6 Layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

3.6.1 Base layers and service layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 15

3.6.2 Virtual nodes, bindings, and configure-on-render . . . . . . . . . . . . . . . . . . . 16

3.6.3 The binding system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 17

3.7 Rendering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 19

3.7.1 Layer dependencies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 20

3.7.2 Layers lifecycle and configure-on-render . . . . . . . . . . . . . . . . . . . . . . . . 20

3.7.3 Rendering flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 22

3.8 Components: sharing emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.8.1 Emulation import and export . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 23

3.8.2 Component classes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

3.8.3 Merging emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 24

v

4 The base layers 26

4.1 The base layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

4.1.1 Renaming interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

4.1.2 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 26

4.2 The routing layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

4.2.1 Multiple routing tables . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

4.2.2 Direct interfaces . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

4.2.3 The dummy interface and loopback IP . . . . . . . . . . . . . . . . . . . . . . . . . 28

4.2.4 The real-world integration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 28

4.2.5 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 29

4.3 The EBGP layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

4.3.1 How is it done in the real world? . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

4.3.2 EBGP in the SEEDEMU framework . . . . . . . . . . . . . . . . . . . . . . . . . . 33

4.3.3 Multi-table for EBGP . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

4.3.4 Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 37

4.3.5 Examples . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

4.4 Internal routing options . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

4.4.1 How is it done in the real world? . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

4.4.2 Internal routing in the SEEDEMU framework . . . . . . . . . . . . . . . . . . . . . 40

4.4.3 The IBGP + OSPF option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 41

4.4.4 The MPLS option . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 43

4.4.5 Evaluation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 44

5 The service layers 44

5.1 Domain name related services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

5.1.1 The domain name server service . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

5.1.2 The local domain name server service . . . . . . . . . . . . . . . . . . . . . . . . . 46

5.1.3 Make traceroute looks better . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

5.1.4 DNSSEC . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

5.2 Other services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

5.2.1 The Ethereum service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 49

5.2.2 The web service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

5.2.3 The BGP looking glass service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

5.2.4 The Botnet services . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

vi

6 Compilers 52

6.1 The Docker compiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 52

6.1.1 Node compilation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 54

6.1.2 Network compilation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 56

6.1.3 Custom images . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

6.2 Other compilers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

6.2.1 The distributed Docker compiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . 57

6.2.2 Graphviz Compiler . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 58

7 The web UI 58

7.1 The UI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

7.1.1 The start page . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

7.1.2 Node details and quick actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

7.1.3 Packet flow visulization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

7.1.4 Replay and recording . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

7.1.5 Node search . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

7.1.6 Console windows . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

7.2 The backend . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

7.2.1 The API server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

7.2.2 The worker . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

8 Case study 66

8.1 Simple BGP setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

8.1.1 Import and create required components . . . . . . . . . . . . . . . . . . . . . . . . 66

8.1.2 Create an Internet exchange . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

8.1.3 Create an autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

8.1.4 Set up BGP peerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

8.1.5 Render and compile the emulation . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

8.2 Simple transit BGP setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

8.2.1 Import and create required components . . . . . . . . . . . . . . . . . . . . . . . . 72

8.2.2 Create a transit autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . 72

8.2.3 Set up BGP peering . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

8.2.4 Save the emulation for later use . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

8.3 Create a MPLS-based transit autonomous system . . . . . . . . . . . . . . . . . . . . . . . 74

8.3.1 Host system support . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

8.3.2 Import and create required components . . . . . . . . . . . . . . . . . . . . . . . . 74

vii

8.3.3 Create a transit autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . 74

8.4 Exploring emulator features with the simple transit BGP setup . . . . . . . . . . . . . . . 75

8.4.1 Allow real-world access to the emulation . . . . . . . . . . . . . . . . . . . . . . . . 75

8.4.2 Customizing the visualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

8.4.3 Components and emulator merging . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

8.5 Complex BGP setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

8.5.1 The helper tools . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

8.5.2 Building the topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 80

8.6 Exploring emulator features on the complex BGP setup . . . . . . . . . . . . . . . . . . . 81

8.6.1 BGP hijacks . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

8.6.2 Deploying DNS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

8.6.3 Deploying anycast . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

8.7 Developing a new layer . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

8.7.1 Implementing the Layer interface . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

8.7.2 Implementing the Configurable interface . . . . . . . . . . . . . . . . . . . . . . . . 88

8.7.3 Working with the global object registry . . . . . . . . . . . . . . . . . . . . . . . . 88

8.7.4 Working with other layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

8.7.5 Working with merging . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 90

8.8 Developing a new service . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

8.8.1 Implementing the Server interface . . . . . . . . . . . . . . . . . . . . . . . . . . . 91

8.8.2 Implementing and working with the Service interface . . . . . . . . . . . . . . . . . 91

8.8.3 Retrieving list of virtual nodes and physical nodes . . . . . . . . . . . . . . . . . . 92

8.8.4 Change render and configuration behavior . . . . . . . . . . . . . . . . . . . . . . . 92

9 Performance Evaluation 92

9.1 Goals . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

9.2 Methods . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 93

9.2.1 Per-unit performances . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 94

9.3 Tests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 95

9.3.1 Large number of autonomous systems . . . . . . . . . . . . . . . . . . . . . . . . . 95

9.3.2 Large number of hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

9.3.3 Large number of networks, but small amount of autonomous systems . . . . . . . . 100

9.3.4 Packet forwarding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

9.3.5 Smaller scale tests . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

9.4 Conclusion . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

viii

10 Summary and future work 110

10.1 Future work . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 110

11 Appendix 112

11.1 Perform the evaluation locally . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

11.1.1 CPU and memory usage . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 112

11.1.2 Packet forwarding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

11.2 Complete code used in case studies . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

ix

List of Figures

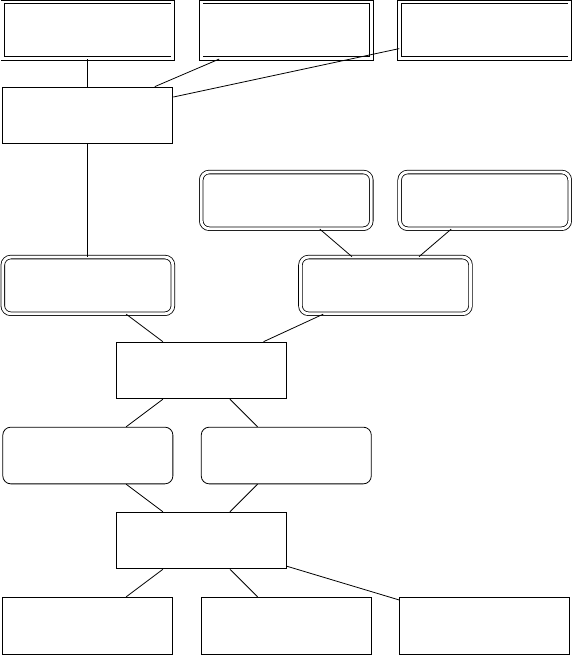

1 Layer structure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 9

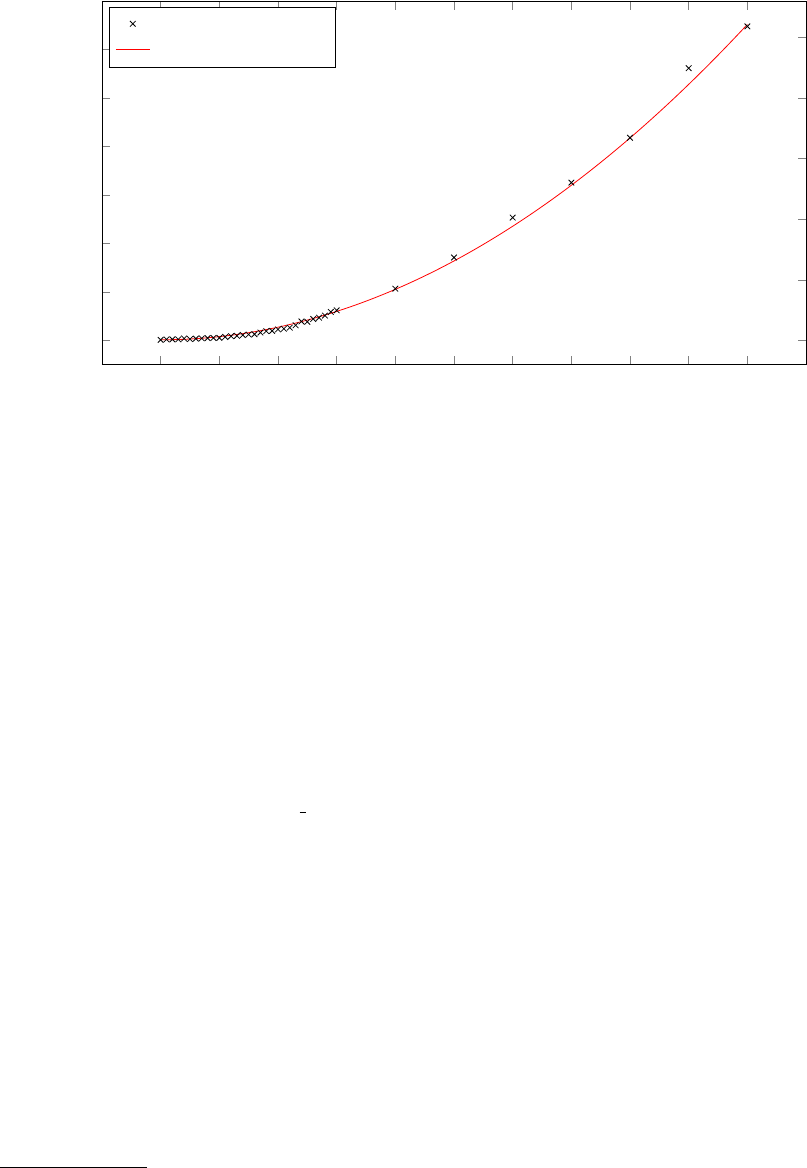

2 Layer lifecycle . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 21

3 BGP relationships and export filters . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 34

4 Structure of the web UI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 59

5 The start page of the web UI . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

6 Node details and quick actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 60

7 Packet flow visualization . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 61

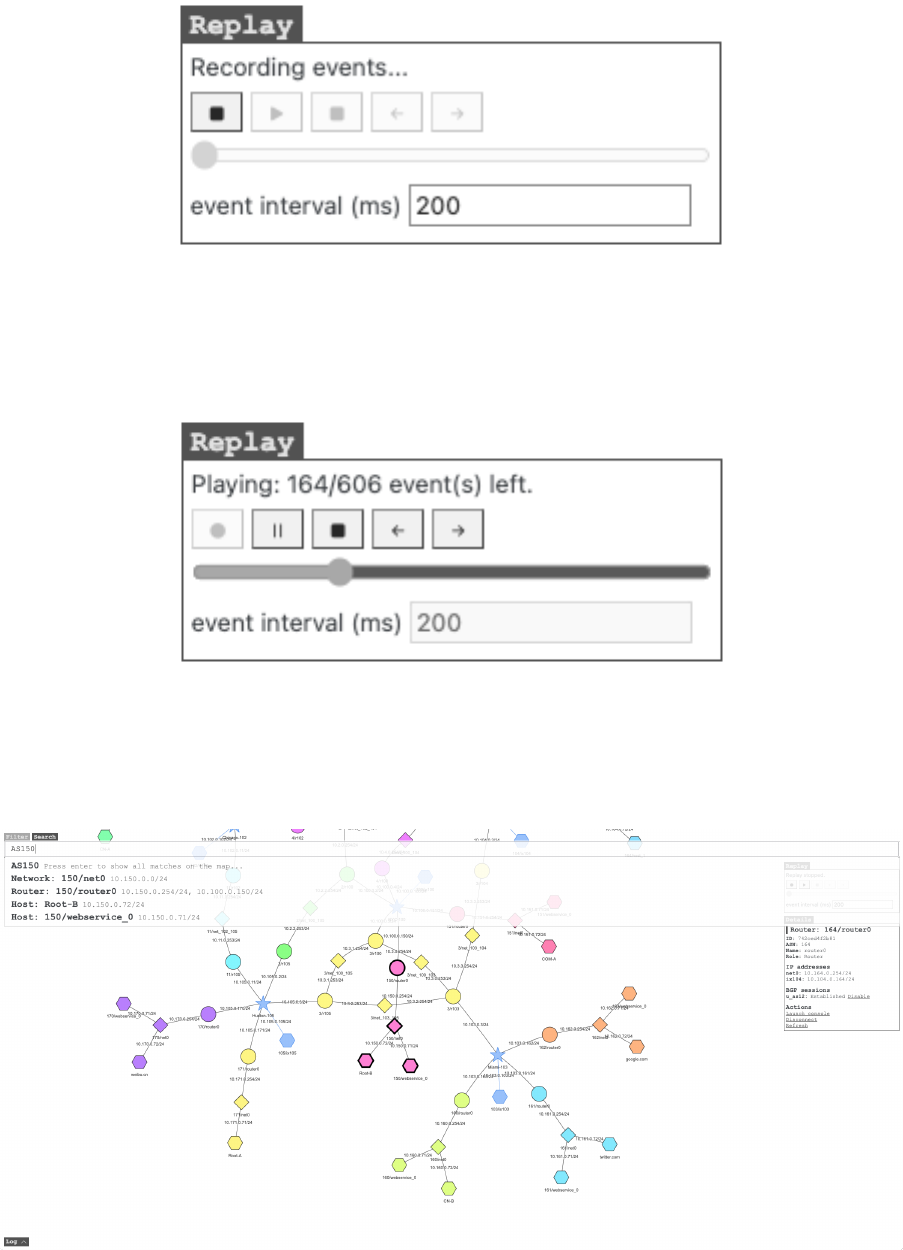

8 Replaying packet flow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

9 Replay controls: stopped . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 62

10 Replay controls: recording . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

11 Replay controls: playing . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

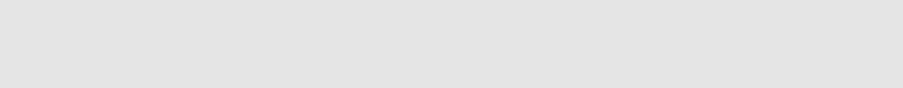

12 Search for nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 63

13 Console window actions . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

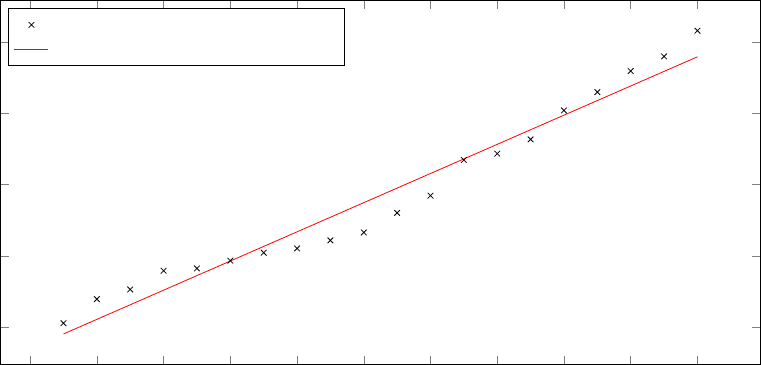

14 Number of ASes vs. memory consumption . . . . . . . . . . . . . . . . . . . . . . . . . . . 96

15 Number of ASes vs. CPU time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 97

16 Number of ASes vs. CPU time (when memory is sufficient) . . . . . . . . . . . . . . . . . 98

17 Number of hosts per AS vs. memory consumed . . . . . . . . . . . . . . . . . . . . . . . . 99

18 Number of hosts per AS vs. CPU time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 99

19 Number of routers per AS vs. memory consumed . . . . . . . . . . . . . . . . . . . . . . . 100

20 Number of routers per AS vs. CPU time . . . . . . . . . . . . . . . . . . . . . . . . . . . . 101

21 Number of IBGP sessions vs. CPU time . . . . . . . . . . . . . . . . . . . . . . . . . . . . 102

22 Number of hops vs. TX/RX speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 103

23 Number of hops vs. RTT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

24 Number of streams vs. TX/RX speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 104

25 Number of streams vs. RTT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 105

26 Number of ASes vs. memory consumption . . . . . . . . . . . . . . . . . . . . . . . . . . . 106

27 Number of ASes vs. CPU time . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

28 Number of hops vs. TX/RX speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 107

29 Number of hops vs. RTT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 108

30 Number of streams vs. TX/RX speed . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

31 Number of streams vs. RTT . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 109

x

Listings

1 Sample configuration . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 2

2 Creating and joining an Internet exchange . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

3 Creating a stub autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 27

4 Snippet for enabling access to real-world . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

5 Snippet for enabling access to real-world . . . . . . . . . . . . . . . . . . . . . . . . . . . . 30

6 BIRD export filter . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 35

7 BGP routing table . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 36

8 Create peerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 38

9 Creating a DNS infrastructure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 45

10 Configuring local DNS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 47

11 Cymru query example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

12 Hosting the generated zones . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

13 mtr result . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 48

14 Creating an Ethereum chain . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

15 Creating a web server . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 50

16 Creating a looking glass . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

17 Creating a botnet . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 51

18 Snippet of docker-compose.yml file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 53

19 Example of Dockerfile file . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 55

20 Node labels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 64

21 Network labels . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 65

22 Importing components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 66

23 Initializing components . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

24 Creating Internet exchange . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 67

25 Creating autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 68

26 Creating an internal network . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

27 Creating a router . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

28 Creating a host . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 69

29 Installing service on virtual node . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

30 Creating binding . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 70

31 Set up BGP peerings . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

32 Adding layers to emulator and render them . . . . . . . . . . . . . . . . . . . . . . . . . . 71

33 Using the docker compiler backend . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 71

34 Create AS150 and its internal networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . 72

xi

35 Create and connect routers to networks . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

36 Configure BGP peers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

37 Dumping the emulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 73

38 Loading the MPLS module . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

39 Enabling MPLS on AS150 . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

40 MPLS topology . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 74

41 Traceroute result . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

42 tcpdump result . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 75

43 Import OpenVPN remote access provider . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

44 Use OpenVPN remote access provider . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

45 Create the autonomous system . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

46 Create the real-world router . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

47 Joining the exchange . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 76

48 Setting node/network display name and description . . . . . . . . . . . . . . . . . . . . . . 77

49 Load dumped emulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 77

50 Retrieving layers from emulator . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

51 Interactive with the layers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 78

52 Merge emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 79

53 Create transit . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

54 Create stub . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

55 Create real-world AS . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

56 Allow remote access from the real world . . . . . . . . . . . . . . . . . . . . . . . . . . . . 81

57 Add attacker to the complex BGP setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . 82

58 BIRD configuration for hijacking . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 83

59 Creating root servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

60 Adding records . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

61 Dumping the infrastructure . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 84

62 Merging emulations . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

63 Binding DNS nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

64 Binding DNS nodes . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 85

65 Set name servers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

66 Loading previously built emulation . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

67 Creating the anycast AS ans hosts . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 86

68 Creating the routers . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 87

69 Getting objects from the global registry . . . . . . . . . . . . . . . . . . . . . . . . . . . . 89

xii

70 Testing object existent in the global registry . . . . . . . . . . . . . . . . . . . . . . . . . . 89

71 Iterating through all objects in the global registry . . . . . . . . . . . . . . . . . . . . . . 89

72 Iterating through all objects of some type in the global registry . . . . . . . . . . . . . . . 89

73 Registering new object in the global registry . . . . . . . . . . . . . . . . . . . . . . . . . . 89

74 Linux ARP table overflow . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 100

75 Emulation generator: Large numbers of routers, hosts and/or autonomous systems . . . . 113

76 Driver script: Large numbers of routers, hosts and/or autonomous systems . . . . . . . . 116

77 Trick: start only ten containers at a time . . . . . . . . . . . . . . . . . . . . . . . . . . . 117

78 Summary script: Large numbers of routers, hosts and/or autonomous systems . . . . . . . 118

79 Summary script: example output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 118

80 Emulation generator: Large numbers of hops and/or streams . . . . . . . . . . . . . . . . 119

81 Driver: Large numbers of hops and/or streams . . . . . . . . . . . . . . . . . . . . . . . . 121

82 Summary script: Large numbers of hops and/or streams . . . . . . . . . . . . . . . . . . . 122

83 Summary script: example output . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 123

84 Full code for the simple BGP setup example . . . . . . . . . . . . . . . . . . . . . . . . . . 123

85 Full code for the simple transit setup example . . . . . . . . . . . . . . . . . . . . . . . . . 124

86 Full code for the simple MPLS transit setup example . . . . . . . . . . . . . . . . . . . . . 126

87 Full code for the real-world example . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 127

88 Full code for the complex BGP setup example . . . . . . . . . . . . . . . . . . . . . . . . . 128

89 Full code for DNS infrastructure setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 131

90 Full code for deploying DNS on complex BGP . . . . . . . . . . . . . . . . . . . . . . . . . 131

91 Full code for the anycast setup . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . . 132

xiii

1 Introduction, background and motivations

One can already experiment with small-scale attacks like ARP poisoning, TCP hijacking, and DNS

poisoning since those usually require only a few hosts. However, it is impractical to apply the same

methodology used for small attacks emulation for large-scale networks. A reasonable-sized emulation for

large attack scenarios may require tens of nodes, which computers of regular researchers and students

cannot handle traditional emulation methods like virtual machines.

The final goal was to build a system that allows anyone with a reasonably recent PC to spin up a

reasonably-sized scenario and play with nation-level attacks that are launched against the entire Internet.

This project initially started as an exploration of how to effectively simulate or emulate a large BGP

network in an educational context for students and researchers to experience and experiment with BGP-

related attacks, like BGP hijacks.

This project, the SEEDEMU framework, has reached the set goals. The framework has user-friendly

APIs and ran reasonably large emulation on average PC (Over 100 nodes on a virtual machine with two

virtual CPU cores and 4 GB of memory.) Detailed scalability evaluations are available in the evaluation

section.

1.1 The software emulation approach

The very first approach to this problem was to use software simulation; instead of running actual virtual

machines, one can simulate the behavior of a real machine with pure software. The software I resorted

to is NS-3 [1]. NS-3 is a pure software-based simulator that simulates every aspect of Internet systems.

NS-3 can simulate thousands of nodes on a single physical host given that it has sufficient memory

since nodes in NS-3 are just data structures in the memory. NS-3 also provides a module that allows

interaction with the real world by exchanging ethernet traffics using a Linux TAP interface. NS-3 has

a detailed document for their C++ interface, so users can develop new modules and create simulations

with C++.

I initially implemented the BGP protocol inside NS-3, as there was no useable BGP module available

for NS-3. I followed multiple RFCs to implement a BGP library in C++ and developed a BGP module

for NS-3. While the implementation worked pretty well and was able to interact with the real world, it

soon came to my realization that the performance of the simulation was not very good. The bottleneck

comes from how NS-3 handles the simulation. Note that NS-3 simulates everything in software, from

TCP/IP stack to physical ethernet card behavior like exponential backoff, even physical properties of

RF propagating for the wireless link. For simulation that needs to be realistic, NS-3 is a great fit. The

goal of this project, however, was only to create a realistic-looking network with BGP routers connecting

with each other; layer two and physical NIC behavior are the least of the concerns, yet, NS-3 spent most

1

of the CPU times doing those works.

NS-3 also abstracts everything that happened in the simulation as events. Some examples of the

events include simulated high-level applications sending TCP packets, IP stack sending ARP requests,

or NIC hardware requesting to send packets to a simulated network media. Each event has a timestamp

and a field that indicates which node it belongs to. One event might create various other events. For

example, sending a “ping” to an unknown local destination will trigger an ARP query, which eventually

triggers a send-packet event on the node’s NIC.

All the events get queued up in the scheduler queue. Then a single-threaded scheduler pops events

out from the queue and executes them. Understandably, NS-3 opts for a single-threaded approach since

the complexity of the events dependencies and handling them in a multithreaded environment would be

non-trivial. While the single-threaded approach mitigates the complex dependency problems, it comes

with a significant performance penalty as only one CPU thread can be used for the entire simulation.

The slow performance is acceptable for NS-3’s intended usage, as many of the researchers using NS-3

do not need the simulation to be run in real-time. In fact, NS-3 defaults to non-real-time simulation

mode. In the non-real-time mode, the NS-3 schedules execute the next event in the queue immediately

after the current one and add the time difference to the simulated clock. When there are only a small

amount of nodes, this runs faster than the real-world time, but it slows down as the number of events

in the simulation increases. The goal of this project is to create a simulator that runs in real-time and

allows real-time human intervention. NS-3’s real-time simulation mode is the best-effort basis, where the

scheduler will try its best to keep up with the real time, or wait if it is faster than the real time, which

does not help in our case since the scheduler is already overloaded.

1.2 The configuration files approach

Since the ns-3 approach is deemed unscalable, I shifted my focus from simulation to emulation. The

target platform for the emulation was LXC containers. Since containers share the same kernel, only

software running on the containers consumes memories. The container itself does not consume much

memory and allows creating bridges for interconnecting containers. However, I soon find myself spending

too much time on managing the containers and creating bridges, which I figure should not be the main

focus of the project. Then I resorted to using docker [7], which has utilities like docker-compose to

allow easy management of containers, networks, and connections. At this stage, it is basically to create

a configuration file that “transpile” to docker-compose configuration file. The configuration file looked

like this:

Listing 1: Sample configuration

networks {

ix100 10.100.0.0/24;

}

2

router as150_router {

networks {

ix100 10.100.0.150;

}

bird {

router id 10.100.0.150;

protocol kernel {

import none;

export all;

}

protocol device {

}

protocol static {

route 10.150.0.0/24 blackhole;

}

protocol bgp {

import all;

export all;

local 10.100.0.150 as 150;

neighbor 10.100.0.151 as 151;

}

}

}

While this style of configuration works, it is a bit too verbose and does not scale well. It is verbose

in the sense that it will have a lot of repetitive parts in the configuration to create a complex network.

Furthermore, in order to add new features, for example, to support a new service, one will have to create

a new syntax for the configuration file. One will also need to hard-code IP addresses in the configuration.

It is also hard to work with. Once the configuration file is created, if one wants to add new hosts

or networks to it, they will need some deep understanding of the network that already existed in the

configuration. Since the emulator targets for-education uses, allowing one to quickly make changes to

emulations shared by others is important.

I also later found out that the configuration file approach has already been attempted by another

project, the CORE project [2]. Some more details about the CORE project are in the related work

section.

1.3 Setting the goals

Due to the reasons above, after months spent working with NS-3 and the configuration files, I ended up

dropping the idea of using simulation to archive the goal, or just simply transpile configuration files, and

start to re-evaluate the ultimate goal of the project. The goals were as follows:

• This system must be able to handle a large number of nodes.

3

• This system must be able to run a reasonably-sized network on a single, average computer. It may

optionally allow the network to run distributedly on multiple computers.

• This system must be run in real-time and allow easy user interaction.

• Users must be able to create a reasonably-sized network easily. Provide trivial ways for users to

create multiple networks and nodes.

• Try to make part of the network re-useable. Users should be able to share and re-use part of

networks or services running on nodes in another network.

• In order to offer high reusability, there should be some mechanism to allow one to merge emulations

with trivial efforts.

• Different parts of the emulation should be able to work independently of each other. If one is only

interested in working with DNS, they should not need to worry about BGP, routing, and layer two

connectivity too much.

1.4 The programming framework approach

With the above goals in mind, I came up with a framework, named SEEDEMU (SEcurity EDucation

EMUlator) framework, that enables one to create emulation using code. The framework provides basic

components of the Internet, like routers, servers, networks, Internet exchanges, autonomous systems,

DNS infrastructure, and a variety of services.

Building emulation with code means it is easy to build emulation with complex topologies and

emulation with a large number of nodes. The framework exploits the idea of “layers.” There are two

types of layers, base layers, and service layers. Base layers describe the “base” of the topologies, like

how routers, servers, and networks are connected, how autonomous systems are peered with each other;

service layers describe the high-level services on the Internet. Examples of services layers are web servers,

DNS servers, ethereum nodes, and botnet nodes. No layers are tied to any other layers, meaning each

layer can be individually manipulated, exported, and re-used in another emulation.

One can build an entire DNS infrastructure, complete with root DNS, TLD DNS, etc., and deploy it

on any base layer, even if the base layers have vastly different underlying topologies. Merging of layers is

also supported, meaning as long as two emulations do not have conflicting nodes or networks with each

other, one can merge them into a single emulation with just one simple API call.

The framework abstracts the elements of emulation, like physical nodes and networks. When one

tries to build an emulation, they interact with the abstractions only. This allows maximum flexibility in

how the framework can “run” those abstractions in the emulation. The framework can convert the said

abstractions to Docker containers, virtual machines, or even configuration files of other network emulation

4

and simulation platforms. With that being said, I have chosen to focus on converting the abstractions

to docker containers, as docker offers different ways to deploy containers (locally or distributed across

multiple computers), consumes minimum resources, and has much lower overhead than running real VMs.

Relatively average computers (one with 2 CPU cores, 4 GB of RAMs) can run hundreds of nodes. The

framework also provides APIs for enabling remote access (usually with VPN) to the emulated networks,

meaning users can participate in the emulation as if their computer is inside the emulation.

Also included with the framework is a web-based client program that allows users to view the

topology of the network and access the node’s console directly in the browser. The client program also

allows packet capture with BPF expression and visualizes the path that a packet traverse on the network

topology map. Quick actions like disconnect/reconnect networks, disable/enable BGP peers are also

built-in to the client program, to allow instant change and BGP re-route visualization.

2 Related works

While the project’s goal has been shifted from focusing on how to run the emulation to how to build

the emulation, the project still uses docker as its main emulation platform. There are no other projects

that put the emulation building as their main focus, and therefore this section will cover other emulation

platforms, not other emulation-building frameworks.

Note that since the SEEDEMU framework focuses on building emulations with the core classes and

uses the compiler to compile the core classes into docker compiler, it can, in theory, output simulations

or emulations for any of the platforms listed below. The framework focuses on docker since it is one of

the most mature platforms for managing containers. Given that one understands the target platform’s

configuration format, it will be trivial to create new compilers for the said platform. This offers maximum

flexibility - if some superior emulation platform is available in the future, one can create a compiler for

it, and minimum other code changes are required.

2.1 NS-3

NS-3 is a discrete-event network simulator, targeted primarily for research and educational use [1]. NS-

3 was used in earlier attempts to build the emulator. The motivation section covered the details of

previous attempts. NS-3 also uses programming APIs for building emulation but does not employ the

layer concept, limiting the reusability of emulations.

Another problem with NS-3 is that it is a simulation platform, not an emulation platform. Leave

aside the beforementioned performance issues; to run programs in NS-3, one needs to use NS-3 versions

of the APIs. Since nodes are no longer real machines, system calls, socket APIs, and everything that

has operating system APIs involved needs to be re-written in NS-3’s API to run inside NS-3. NS-3

5

attempts to solve this issue by providing the Direct Code Execution (DCE) framework, which provides

emulated Linux APIs that programs can use. However, NS-3 implementations of the APIs are not

complete, meaning most of the programs will not be able to run properly under DCE or require major

code changes.

2.2 EVE-NG and GNS3

EVE-NG [4], and Graphical Network Simulator-3, or GNS-3 [5], are two network emulators that use real

virtual machines to do the emulation of networks. While GNS-3 calls itself a simulator, it is, in fact, an

emulator. These two emulators are very similar, with both of them using a graphical user interface to

build emulations. Since the emulations are done by real virtual machines, they can support any kind of

nodes, including commercial router software from vendors like Cisco and Juniper.

While they are perfect for professionals, these emulators do not align with what I want to archive

very well either. The most significant issue is that they both require interacting with a user interface to

build emulation. It is impractical to build large size emulation with a user interface. While one can argue

it is possible to generate a configuration file for them directly, the fact that they run emulations using

virtual machines means each host will consume a significant amount of memory and CPU resources,

making running large emulations impossible without some expensive hardware.

2.3 CORE

Common Open Research Emulator, or CORE, is a tool for emulating networks on one or more machines

[2]. It is also a Python-based emulation framework. The major difference is that they are focusing on

running an emulation rather than building emulations. Their primary focus is to construct emulations

with the GUI, but they offer python APIs for building emulation. Their API allows one to create nodes,

create links, and install services on nodes. They do not have the layer concept, and it will be difficult to

re-use emulations, as there are no automated ways to allow easy re-use.

Instead, their main focus is to get the emulation up and running. They manage Linux namespaces

and create containers themselves. If the layers, binding, and merging features are removed from the

SEEDEMU framework, the API provided by SEEDEMU core classes is similar to the CORE APIs. It is

relatively trivial to create a SEEDEMU compiler that targets the CORE platform. However, as docker

is more feature-rich and sophisticated, the main focus of the SEEDEMU framework will be the docker

platform.

2.4 Greybox

CMU greybox is a single-host Internet simulator for offline exercise and training networks. It allows a

single host (physical or VM) to provide the illusion of connectivity to the real Internet: a realistic BGP

6

backbone topology with point-to-point link delays based on the physical distance between the routers’

real-world locations, combined with TopGen’s application services (HTTP, DNS, email, and more) [3].

This is, in fact, very similar to what the SEEDEMU framework attempts to do. The differences are:

• The target platform of greybox is limited to CORE, the emulator in the last subsection.

• It uses configuration to describe emulations instead of building emulations programmatically. For

the reasons stated in the motivations section, while configurations is an acceptable format for saving

the final product of the emulation, it does not offer good portability and reusability.

• The way it runs services on hosts is that only one node runs actual services for all servers in the

emulation. The router nodes merely forward traffic to that one single node. This dramatically

reduces the reality of the emulation and does not align with the goal of for-education emulators

very well. Servers and hosts should be individual nodes. They should be able to work independently

of each other.

• It offers limited options for services.

2.5 mini-Internet

The mini-internet project [6] is a project that also targets classroom and for-education use. It also

uses a configuration file based approach. The main goal of the project is to build a large network

with multiple autonomous systems and internet exchanges to enable students to understand Internet

operations alongside their pitfalls.

The mini-internet project archived its goal pretty well. However, while it is a good fit for experi-

menting with Internet operations, it lacks the ability to host other services, which is another important

part of the for-education Internet emulation. The project is also built using container technology. Its

configuration file is a TSV-like language. While that reduces the verbosity of the syntax significantly, it

also compromises the expressivity of the configuration file.

For the reasons above, existing solutions are not good enough for the goals. These reasons lead to

the development of the SEEDEMU framework.

3 Core concepts

This section will go through the important design concepts and features in the SEEDEMU framework.

3.1 Breaking the emulation-building and emulation-running apart

One of the major differences between the SEEDEMU framework and other network emulators is how

the SEEDEMU framework separates the construction and building of the emulation and the actual

7

execution of the emulation. This decouples the framework into two major parts: the first part is classes,

components, and layers that interact and make changes to the core classes (Node and Network, which

abstract computer/server/router and network in the real world; will be talked about in details in the

following few paragraphs) to build the emulation, and the second part is the compiler, where core classes

are converted into some other formats understandable by the existing platforms to emulate.

The core classes are simplified representations of the components that one will expect to have in a

network emulator. The core classes are:

• Node: represents a server, a router, or a host on the network. Properties on the Node class include

a list of interfaces, a list of files and their content, a list of software to add to the node, a list of

commands to run when building the image for the node, and a list of commands to run when the

node starts.

• Network: represents a local network or an Internet exchange network. Properties on the network

class include the network prefix on the link, MTU, and a list of interfaces connected to the network.

• Interface: represents a network interface card. An interface connects a node to a network. Prop-

erties on the interface include IP address, packet loss probability, and port speed.

The three classes above are the base of the entire SEEDEMU framework. In fact, these three

components are the base component of most network emulators. In other emulators, like in eve-ng,

one may use the web UI to create and link nodes and networks with each other. In the SEEDEMU

framework, users will be constructing emulations similarly, only that these components are represented

as objects in the framework, and one will interact with them using APIs, instead of dragging cables and

nodes on a UI. Building an emulation with code may not be as easy as point and click using the UI.

Building with code, however, provides the most scalability. It would be impractical to create hundreds

of nodes and connect hundreds of different cables using the UI, but with code that could be just a few

lines of code using loops.

In the SEEDEMU framework, these core class objects are treated as intermediate representations

and are compiled down to the “runnable” emulation. Examples of the “runnable” can be a set of

containers with a docker-compose configuration, some Vagrant virtual machines, some configuration to

deploy contains and virtual machines on a cloud platform, or even configuration files for other network

emulators or simulators. The compilation process is detailed in the later sections.

While one may also create an emulation only using the core classes, like using some other more

traditional network emulators, the intended use of these core classes are to serve as the building blocks

and to be created by the various layers in the emulator. The layered design will be discussed in detail in

the later sections.

8

Web Service Ethereum

Botnet

Bindings

Base layer Routing layer

BGP layer OSPF layer

Renderer

Node objects Network objects

Compiler

Containers

Virtual machines other formats...

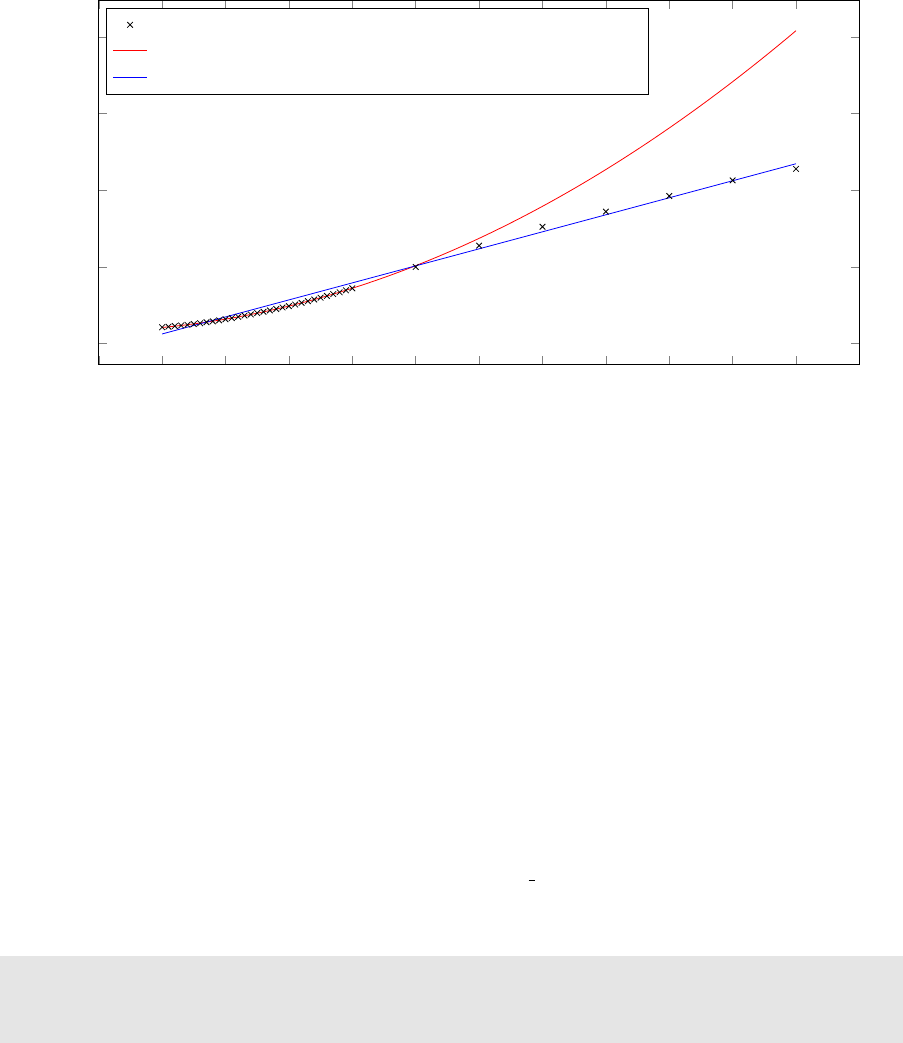

Figure 1: Layer structure

9

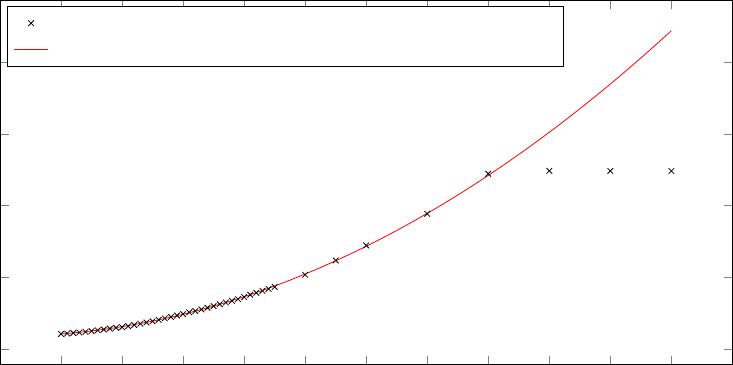

Figure 1 shows the high-level relationships between layers, core classes, and compilers. For layers,

there are two types: service layer and base layers. Service layers install service onto a single node. They

are rectangles with sharp edges and double borders in the figure. The scope of changes made by a service

layer is generally limited to a single node. Note that service layers do not make changes directly to

the core node classes. Instead, they make changes to “virtual nodes,” and the “virtual nodes” are later

bound to the core “Node‘ class, or a “physical” node. The reason for this will be discussed in the later

sections.

Base layers make changes to the entire emulation. Examples of this are the routing protocols, which

will involve changes on multiple router nodes, and the base layer, which itself creates hosts and routers

in the emulation. The base layers are rectangles with rounded edges and double borders in the figure.

The layers pass through the renderer to be converted to node and network objects. Node and network

objects are the core class objects; they are the single border, rounded edge rectangles in the figure. The

core class objects then pass through the compiler to become the “runnable” emulation.

Note that Figure 1 is only an overview, and therefore, only some available layers are shown. The

SEEDEMU offers many layers to facilitate different needs.

3.2 Enabling sharing and re-use of the emulations

One major consideration when the framework was designed was to enable users to re-use part of the

emulation. Which such a mechanism, one can share with others the emulation they built, or use the

emulations others had built in their own emulation.

In order to support the sharing feature, I came up with the following guidelines:

• Emulations should be separated into different logical parts, where there are no hard-coded depen-

dencies and references between them. Each part should be able to function on its own, without

relying on other parts, during the emulation construction step.

• The emulation parts, either as code or as some dump file, should be imported and exported from

existing emulations easily.

• The emulations parts must be able to merge seamlessly should there be no conflicting definitions in

them. If there are conflicting definitions or configurations, the user should manually resolve such

conflicts, either with code or by interacting at run time with the framework.

In order to support the abovementioned guidelines, the following design decisions and features were

introduced to the framework:

Layered design. The emulations will be built from different components called layers. Consider the

layer as the layer in the image editing software; each layer contains part of the information about the

10

emulation. Examples of layers are the base layer which stores information about what hosts and networks

are in the emulation, what networks they connect to, and more; the BGP layer, which describes how

autonomous systems have peered with each other; and the DNS layer, which stores information about

DNS zones, and what zones are being hosted on what host, etc. Each layer will only “mind their own

business,” in a sense that they only keep track of changes they want to make on the emulation - like

keeping an internal blueprint of the emulation. The changes are only committed to the emulation during

the render stage, where all the layers are “rendered” into one single emulation.

Binding and virtual nodes. As stated in the guideline, no hard-coded dependencies and references

between components (will be called “layers” from now on) are allowed. Layers that install services, like

the DNS layer, however, need to know what node in the emulation (will be called “physical node” from

now on) to commit their internal blueprint on - basically, what physical node to install and configure

the service on. In order to archive this, the binding and virtual node mechanism is introduced. A virtual

node is merely a string, a name text in the layers that would like to make changes to some physical

nodes. These layers track the changes they would like to make to different physical nodes, identified by

the virtual node name, internally. Then, a set of rules can be defined for each virtual node name to map

them onto the physical node.

Merging In order to allow the merging of an existing emulation with another emulation, the merging

feature is introduced. In case there are no common layers shared between two emulations, the merging

process is trivial. However, if two emulations contain the same type of layers, the merging will need some

special attention. Consider the case where there exist two DNS layers with different zones configured in

them. In order to solve this, the framework allows users to implement mergers, where users can request

the emulation to pass two objects of the same type to the user for further merging operations. A set of

default mergers is included with the framework, which will try its best to merge two layers.

3.3 The core classes and interfaces

The core classes are the lowest-level representations of emulation objects. They are the direct abstractions

of the components in the lower-level emulation platforms. For example, the Node class may resemble

a service (i.e., a container in docker) or a virtual machine. The Network class may resemble a Linux

bridge. The Interface may resemble a Linux veth, a SR-IOV NIC, or even a physical NIC. It is up to

the compiler to “compile” them into their final form on the target emulation platform.

For all the core classes, some APIs are provided to allow one to make changes to those objects.

The core classes cannot represent their real-world counterparts fully, and in fact, they never meant to.

Features provided by the core classes are carefully selected to work well under the context of for-education

emulation.

11

3.3.1 The Node class

The node class represents a computer, a server, a router, or any device that has Internet support. In

the SEEDEMU framework, a node is assumed to be a generic Linux host running a Debian-based distro.

The node class has APIs to allow one to do the followings:

• Connect to a network: Connecting to a network or other nodes is the essential feature of a node

that connects to the Internet.

• Adding files to node: There is also API to allow one to add files onto the node. Having this API

enables many emulation features, like adding configuration files onto nodes.

• Add commands to run when “building” the node: In order to make a generic node running Linux

into a node that performs some role in the emulation, one may need to run some commands on

the node. One such example is running commands to install some software. The API to add build

command allows one to customize nodes to have any features they want.

• Add commands to run when the node starts: While adding commands to nodes during build time

allows one to customize the node, it is necessary to have the ability to add commands to run when

a node starts too. For example, on a router node, the routing daemon needs to be started when

the node starts, and on a client node, one may want to make it ping or curl to some other node

when it starts. The API to add start commands is therefore added.

These APIs, while not able to represent the full feature of real-world hosts, have provided enough

customizability that enables the emulation of complex scenarios for education uses. Example usages of

the framework are discussed in the case study section. Note that one may also interact with the nodes

directly after the node starts by accessing the console of the node directly. Therefore, it is not strictly

necessary to add everything one wants to run as start commands. Since the node is just a regular host

running Linux, one may also make changes to it that are not provided by the API. It is even possible to

have real-world computers join the emulation and appear to be a node in the emulation. These features

are discussed in the later sections.

3.3.2 The Network class and the Interface

The network class represents an Ethernet network. It can be considered as a switch, where nodes can

connect to. A network can be an Internet exchange, a part of some backbone, or just an internal LAN

network. On the network class, there is one API to allow one to enable remote access from the real world

to the network, usually by means of hosting a VPN server that is accessible from the real world. Details

of the real-world feature are in the real-world section.

12

An interface object connects a node to the network. The interface class has the APIs to allow one

to do the followings:

• Change MTU: More and more real-world Internet providers allows the use of the jumbo frame.

Having the API to set MTU allows the framework to handle those aspects of the Internet. Some

SEEDEMU features, like the MPLS layer, use this API, since they introduce layer two header

overhead.

• Set link emulation properties: There are APIs on the interface class to allow one to change the

link properties. These properties include link speed, packet drop probability, and max bandwidth.

This provides another important aspect of real-world networks.

• Setting the “direct” attribute: Networks that have “direct” attributes are learned by the routing

daemons and sent to BGP peers. Details of this design are described in the base layer and routing

section.

Again, the goals of these APIs are only to provide enough customizations, so it matches with

the previously set goals - for-education network emulation. And, even if some customizations are not

available, one can always enter the console of the nodes and perform the customizations manually.

3.4 The Emulator class

The Emulator class houses the core services one may need to work with building the emulation.

• Hooks: The emulator class provides hooks. Hooks allow one to hook onto various stages during

the rendering process to make changes to the emulation. The hook feature allows one to make

changes to the layer’s behavior without modifying the layer implementation.

• Bindings: Bindings allow one to bind virtual nodes to physical nodes. Virtual nodes are nodes

used in service layers to keep track of services to install and the configuration files. Virtual nodes

are bound to physical nodes during render. This decouples node references and allows service layers

to operate on their own without base layers. Details about bindings are in the binding section.

• Virtual physical node: When a virtual node is created, one may want to make some additional

changes to the physical node that it is about to bound to. However, the physical node may not

even exist yet. The virtual physical node allows one to make changes to a real physical node object.

The changes made to that virtual physical node are then copied to the actual physical node upon

binding. Details about virtual nodes are in the virtual node section.

• The object registry: The object registry is where all objects in the emulation are stored: layers,

nodes, networks, hooks, and everything. One emulator class has only one object registry, storing

all objects in the emulation.

13

• Merging: The emulator class has a merge method to allow one to merge an emulation with another

to create a new emulation. This allows one to re-use emulations built by others. Details about the

merging feature are in the components section.

• Dumping and loading emulations: Dumping allows one to serialize the entire emulation into

a single binary file for easy sharing. Loading loads serialize the single binary file back into an

emulator object. Generally, this is used with the merging feature to share and re-use emulations.

Details about the dump and load feature are in the merging section.

• Service Network: A service network is a special network that has access to the real-world

Internet. The implementation of the service network depends on the compiler; the API merely

creates a network with a special type telling the compiler that this network is not an emulated

network, but is a network that should have access to the real-world Internet. This is used to support

various import features like VPN access for real-world hosts to connect to the emulated network

from the outside world, and NAT access for the hosts inside emulation to access the real-world

Internet. Details about the service network and service node are in the real-world section.

• Rendering and resolving layer dependencies: Naturally, one would want the base layers to

finish configuring the emulation before other layers since no node exists before the base layer does

the configurations. The emulator class automatically learns and checks the dependencies of the

layers. The rendering logic is also handled by the emulator class. Details about rendering logic are

discussed in the rendering section.

3.5 The object registry

The object registry is a class to store objects in the emulations. All objects, including the core classes,

layers, hooks, and bindings, are stored in the registry. Every object registered in the registry will have

three keys to identify them. Namely, the scope, type, and name. Generally, the scope defines the owner

of the said object, the type defines the type of the object, and the name defines the name of the object.

For example, a router named router0 node belongs to AS150 will have a scope, type, and name value

of 150, rnode (router node), and router0.

Some objects registered in the registry will also have their own equivalent of the name, type, or scope

field as their class attribute or method. For example, node objects have ASN attribute, which is also

used as its scope, node role attribute, which corresponds to the type, and name attribute, will be used as

its name. Those equivalent attributes are maintained by the object itself, so in theory, those attributes

can be different from how they are registered in the registry. However, all objects created by layers or

other bulletin emulation functions will keep the attributes on the object itself and the attribute used

14

for the registry the same. Unless one builds an emulation without using layers and explicitly registers

objects in an odd way, the attribute will be consistent.

3.6 Layers

The layer is one of the most critical concepts and components in the SEEDEMU framework. The different

functionality of the emulator is separated into different layers. The base layer, for example, describes

the base of the emulation:

• what autonomous systems and Internet exchanges are in the emulator,

• what nodes and networks are in each of the autonomous systems,

• how nodes are connected with networks

BGP layer, on the other hand, describes how autonomous systems and where are peered with each

other, and what is the relationship between the peers.

Layers themselves are like helper tools to create and make changes like installing new software, or

adding configuration files for the said software, on the core class objects (the intermediate representa-

tions). While one can technically create an emulation without using any of the layers and by manually

creating and updating the core class objects themselves, just like in any other network emulation software

and framework, the layer is one of the most important features that make the SEEDEMU framework

different.

3.6.1 Base layers and service layers

The SEEDEMU framework classifies layers into two different categories. The first category is the base

layers, and the second category is the service layers.

Base layers, not to be confused with the base layer, which is one of the base layers, make changes to

the emulation as a whole. A base layer makes changes to the entire emulation (like BGP, which configure

peeing across multiple different autonomous systems, exchanges, and routers), and routing, which will

configure routing protocols, loopback addresses on all router nodes. The characteristic of base layers is

that they provide the basics to support the emulation and higher-level layers.

In contrast, service layers will typically only make changes to individual nodes. Examples of service

layers are the web service layer, which allows one to install web servers on nodes, and the DNS layer,

which allows users to host DNS zones on nodes. While the service layer is the type of layer that interacts

with a single node to install service on them instead of making changes to the entire emulation as a

whole, service layers can also have their internal “global” state. For example, the DNS layer keeps an

internal tree structure for DNS zones. Individual zones can be hosted on a single server (node-specific

15

data), but the zone structure itself is an “global” data of the DNS layer. The DNS layer uses the zone

tree to build appropriate NS records to aid the creation of the complete DNS chain, enabling one to

build a DNS infrastructure easily.

Design changes in different iterations In the early iteration of the SEEDEMU framework, services

are installed by providing the node object to the services. This leads to one major problem. Referencing

other objects in the emulation means that it would not work if one were to use this same piece of code in

another emulation. This contradicts the early guideline, where each emulation component should have

no direct code dependencies on other codes for portability. Two new mechanisms, namely the virtual

node and binding mechanism, are introduced to solve this problem.

In this new design, layers must not directly reference objects that do not belong to themselves.

Instead, layers retrieve nodes from the registry, using the names and install services that way. It soon

finds that this design still has some downsides. One will have to know the names of objects in the other

layers for this to work, which is less than ideal, as one may get the lower layers from someone else and

may not have the details, like names of objects, that exist in the lower layers. One approach to this

problem is to have the users inspect the imported layers themselves to find out the said details. Such an

approach, however, still does not offer a seamless experience for users. This leads to the development of

the virtual node and binding system.

3.6.2 Virtual nodes, bindings, and configure-on-render

Three new mechanisms, namely the virtual node, binding, and configure-on-render mechanism, are in-

troduced to further increase the portability. The general idea is that, first, the layer must not use any

node object to identify which node to install the services on, as that creates dependencies to the base

layer, as mentioned in the previous paragraph, and second, specifying the name of nodes in the lower

layers should not be the only way users can use to install service on a node.

Virtual node. As explained in the earlier section, the virtual node is the mechanism to enable services

to track changes they would like to make to a node. This virtual node name is only significant to the

service.

Binding. A binding allows one to set how the virtual node gets bound to a physical node, using a set

of optional predicates, like ASN, IP, name, network prefix, of the node. Users may also supply their

functions to do the tests to elect a physical node for a virtual node. It is also entirely optional for set

predicates. If no predicates are given, the virtual node will be bound to a random available physical

node. Alternatively, should no physical nodes match the given predicates, the user can ask the binding

system to create a node that matches the given conditions. This enables one without any knowledge

16

about the lower layers to add new services with trivial efforts.

Virtual physical node. A virtual physical node is an actual node object instance. Since the virtual

nodes are only bound to a physical node after the render process, if a user would like to make some

changes to that physical node, they would need to wait after the render process. Waiting, however, may

not always be an option. If a user is developing a component (a partial emulation that can be “plugged”

into another bigger emulation to form a full emulation; will be discussed in detail in the later sections.),

they would never have the chance to render the emulation themselves. In order to enable making changes

to physical nodes, in this case, the framework offers a “virtual physical node” mechanism. The virtual

physical node provides a way for users to interact with the physical node by creating an actual physical

node object. Users can make changes to this imaginary physical node (with some limitations. Adding

new network interface cards, for example, are not supported.). The emulator will keep track of those

changes and apply the changes to the real physical node when the binding happens.

Configure-on-render. In order to take the portability one step further, the configure-on-render mech-

anism is introduced. This means layers will keep track of changes they planned to make internally and

only actually commit those changes during the render stage. This further allows layers to operate on

their own. With this mechanism, users can do operations like building services and configuring BGP

peerings without the base layer. The design of this mechanism will be discussed in the later sections.

3.6.3 The binding system

Services are installed onto the virtual nodes. Virtual nodes are just a name that exists only inside the

service layers. The binding system is the system that allows one to “bind” a virtual node onto the

physical node, so the service can actually be installed onto the node. A binding consists of the binding

target, the binding filters, and the binding action.

Binding target Binding targets define the “target” virtual nodes of this binding. It can either be the

full name of the virtual node or a regular expression to test the name of the virtual node. The binding

will further evaluate virtual nodes that match the target. Otherwise, the binding system will skip to

the next binding in the bindings list. By offering the option to use regular expressions, one can match

multiple virtual nodes and apply the same binding rules to them. This is found to be extremely useful

when deploying some services. For example, one can prefix all virtual nodes with web services with web-,

and match all those nodes with a single binding using web-.* as the target. While the binding targets

may match multiple virtual nodes, each node is bound individually by evaluating the filters and actions.

17

Binding filters Binding filters allow one to define a set of rules that the physical node must match in

order to be considered a binding candidate for the virtual node. SEEDEMU framework offers some of

the most commonly used predicates in the binding filters:

• asn: The asn option allows one to narrow down physical nodes by their autonomous system

number. Useful if one wants their service to be deployed in a given autonomous system.

• ip: The ip option allows one to match against the IP address of the node.