
Through the Looking Glass: Study of Transparency in Reddit’s Moderation Practices

PRERNA JUNEJA, Virginia Tech, USA

DEEPIKA RAMA SUBRAMANIAN, Virginia Tech, USA

TANUSHREE MITRA, Virginia Tech, USA

Transparency in moderation practices is crucial to the success of an online community. To meet the growing demands for transparency and accountability, several academics came together and proposed the Santa Clara Principles on Transparency and Accountability in Content Moderation (SCP). In 2018, Reddit, home to uniquely moderated communities called subreddits, announced in its transparency report that the company is aligning its content moderation practices with the SCP. But do the moderators of subreddit communities follow these guidelines too? In this paper, we answer this question by employing a mixed-methods approach on public moderation logs collected from 204 subreddits over a period of five months, containing more than 0.5M instances of removals by both human moderators and AutoModerator. Our results reveal a lack of transparency in moderation practices. We find that while subreddits often rely on AutoModerator to sanction newcomer posts based on karma requirements and to moderate uncivil content based on automated keyword lists, users are neither notified of these sanctions, nor are these practices formally stated in any of the subreddits’ rules. We interviewed 13 Reddit moderators to hear their views on different facets of transparency and to determine why a lack of transparency is a widespread phenomenon. The interviews reveal that moderators are divided in their stance on transparency, that there is no standardized process for appealing content removals, and that Reddit’s app and platform design often impede moderators’ ability to be transparent in their moderation practices.

CCS Concepts: • Human-centered computing → Empirical studies in collaborative and social computing;

Keywords: online communities, content moderation, rules, norms, transparency, mixed methods

ACM Reference Format:

Prerna Juneja, Deepika Rama Subramanian, and Tanushree Mitra. 2019. Through the Looking Glass: Study of Transparency in Reddit’s Moderation Practices. In Proceedings of the ACM on Human-Computer Interaction, Vol. 4, GROUP, Article 17 (January 2019). ACM, New York, NY. 35 pages. https://doi.org/10.1145/3375197

1 INTRODUCTION

In 2019, moderators of the subreddit r/Pics removed an image depicting the aftermath of the Tiananmen Square massacre even though the post was well within the rules of the subreddit [22]. The post was later restored in response to the uproar. However, the event calls attention to two important shortcomings in the moderation process: the moderators indicated neither what part of the content triggered the moderation nor why. To date, the content moderation process has mostly been non-transparent, and little information is available on how social media platforms make moderation decisions; even transparency reports only provide the number of removals without capturing the context in which the removals occurred [60]. Content removal without justification and changes to content moderation rules without notification have been common occurrences on all major social media platforms, such as Facebook and Reddit [21, 57]. When asked to publicly comment on these contentious decisions, platforms respond with short, formal statements that rarely indicate the overall ideology of the organization’s moderation systems [21]. This lack of information can puzzle users [65], making it difficult for them to understand the rules governing the platform’s moderation policies or even to learn from their experience after their content is sanctioned on the platform [64]. Lack of proper feedback also leads users to form folk theories and to believe that the moderation process is biased [64].

Thus, in recent times, there has been a strong demand for transparency and accountability in the content moderation of all social media platforms [36, 60].

Authors’ addresses: Prerna Juneja, Virginia Tech, Blacksburg, USA, [email protected]; Deepika Rama Subramanian, Virginia Tech, Blacksburg, USA, [email protected]; Tanushree Mitra, Virginia Tech, Blacksburg, USA, [email protected].

Permission to make digital or hard copies of all or part of this work for personal or classroom use is granted without fee provided that copies are not made or distributed for profit or commercial advantage and that copies bear this notice and the full citation on the first page. Copyrights for components of this work owned by others than ACM must be honored. Abstracting with credit is permitted. To copy otherwise, or republish, to post on servers or to redistribute to lists, requires prior specific permission and/or a fee. Request permissions from [email protected].

© 2019 Association for Computing Machinery.

2573-0142/2019/1-ART17 $15.00

https://doi.org/10.1145/3375197

PACM on Human-Computer Interaction, Vol. 4, No. GROUP, Article 17, Publication date: January 2019.

In an attempt to address what transparency and accountability entail for social media platforms, three partners, namely Queensland University of Technology (QUT), the University of Southern California (USC) and the Electronic Frontier Foundation (EFF)¹, jointly created the Santa Clara Principles (henceforth referred to as SCP). SCP outlines a set of minimum guidelines for transparency and an appeal process that internet platforms should follow. For example, it requires companies to provide detailed guidelines about what content is prohibited, explain how automated tools are used to detect problematic content, and give users a reason for content removal. In response to these principles, Reddit, for the first time in 2018, included in its transparency report statistics on the content that was removed by subreddit moderators and Reddit admins for violating its Content Policy [26]. The report stresses that Reddit is aligning its content moderation practices with the SCP.

“It (transparency report) helps bring Reddit into line with The Santa Clara Principles on Transparency

and Accountability in Content Moderation, the goals and spirit of which we support as a starting

point for further conversation”

While Reddit as a company claims to abide by the SCP, are its communities following these principles too? It is important to note that calls for transparency are not limited to Reddit’s Content Policy; the company has also issued moderator guidelines (MG) [50] that reiterate how important transparency is to the platform. They ask moderators to have “clear, concise and consistent” guidelines and state that “secret guidelines aren’t fair to the users”. In this study, we examine whether content moderation practices in Reddit communities abide by the transparency guidelines outlined in the SCP and in the moderator guidelines issued by the platform (MG).

Reddit is one of the largest and most popular discussion platforms. It consists of millions of communities called subreddits where people discuss a myriad of topics. These subreddit communities are governed by human moderators with assistance from an automated bot called AutoModerator. Thus, the platform provides us with a unique opportunity to study the transparency of human-machine decision making, an aspect rarely studied in previous literature (with the exception of a few studies such as [29]). Reddit is also unique in its two-tier governance structure. While the company has a site-wide Content Policy [51], every subreddit has its own set of rules and norms. Rules are explicitly stated regulations, whereas norms are defined as unspoken but understood moderation practices followed by the moderators of online communities. Looking through the dual lens of SCP and MG, we sketch how transparency works in Reddit’s two-tier governance, enforced via the platform’s policies and the community’s rules and norms. We ask:

RQ1: How do content moderation practices in Reddit sub-communities align with the principles of transparency outlined in the SCP guidelines?

RQ1a: Do all sanctioned posts correspond to a rule that was violated, where the rule is explicitly stated either in the community’s rule-book or in Reddit’s Content Policy?

¹ http://eff.org


RQ1b: Do moderators provide reasons for removal after taking a content moderation action on a user’s post or comment?

RQ1c: What are the norms prevalent in various subreddit communities?

RQ1d: Are rules in subreddits clearly defined and elucidated? Are they enforced and operationalized in a consistent and transparent manner by human and auto moderators?

We employed a mixed-methods approach to answer these research questions. First, we quantitatively identified “meta communities”, that is, cohorts of subreddits that sanction similar kinds of transgressions. Next, we qualitatively mapped high-ranking post/comment removals from every meta community to the subreddit rule violations and Reddit Content Policy violations that led to the removals. Unmapped instances revealed unstated norms that moderators followed to sanction such posts and comments. Among the several norms that surfaced through our analysis, the following are the most unsettling: (1) moderators sanctioned comments containing criticism of their moderation actions and (2) moderators themselves posted and removed rule-violating expletives, sometimes even while providing feedback to the community that they were moderating. Through our qualitative analysis, we also uncovered several moderation practices that violate SCP transparency guidelines. We observed that most of the subreddits present in our dataset do not notify users about the reason for content removal. We also found that the enforcement and operationalization of rules are not transparent: AutoModerator configurations, such as the inclusion of blacklisted words and karma requirements, have not been publicly revealed in subreddits’ rules.

To understand the rationale behind these widespread transparency violations, we interviewed moderators to hear their side of the story. The SCP, along with our RQ1 findings, inspired our RQ2 questions.

RQ2: How do moderators view the following facets of transparency?

(a) Communities silently removing the content without notifying the user

(b) Revealing reasons for removals or citing rules while sanctioning content

(c) Vaguely worded subreddit rules

(d) Transparent/non-transparent enforcement of rules

(e) User appeals against content removal

We interviewed 13 Reddit moderators. They had a divided stance on transparency. While one half believed that being transparent during the moderation process is essential for healthy communities, the other half provided several insights into the pitfalls of being transparent. They believed that notifying users about content removal and providing reasons for these removals act as negative reinforcement, thereby making users more uncivil. Being transparent about rule enforcement is also problematic: miscreants and trolls could use this information to game the system. We also found that the design of the Reddit platform acts as a hindrance and prevents moderators from being more transparent. Although Reddit does provide a way for users to appeal the suspension or restriction of their accounts², moderators revealed that there is no standardized appeal process for content removal across subreddits. In Reddit’s world, where moderation is voluntary and resources are scarce, it is important to appeal against a moderator’s decision in an appropriate way. Based on the responses from moderators, we have compiled a complaint etiquette: guidelines that users should follow when appealing against content removal in order to get their case heard. Taken together, our findings suggest that while existing moderation practices such as not providing proper feedback are problematic, practicing complete transparency in rule enforcement is not pragmatic for social media platforms. There is a need to find the ‘juste-milieu’ in content moderation, where healthy communities are cultivated by providing appropriate feedback while simultaneously avoiding abuse of this information by miscreants.

² https://www.reddit.com/appeals


2 STUDY CONTEXT: SANTA CLARA PRINCIPLES ON TRANSPARENCY AND ACCOUNTABILITY

In this section, we briefly discuss the Santa Clara Principles on Transparency and Accountability in Content Moderation. SCP includes a set of three recommendations that serve as a starting point, providing minimum levels of transparency and accountability. The recommendations are [49]:

(1) Numbers: Companies should publish the number of posts removed and accounts permanently or temporarily suspended due to violations of their content guidelines.

(2) Notice: Companies should provide notice to each user whose content is taken down or whose account is suspended about the reason for the removal or suspension. They should also provide: (a) detailed information as to what content is disallowed, including examples of permissible and non-permissible content, (b) information about the guidelines used by the moderators and (c) an explanation of how automated tools are used to detect non-permissible content during moderation.

(3) Appeals: Companies should provide a meaningful opportunity for timely appeal of any content removal or account suspension.

In order to formulate these recommendations, the three partners (QUT, USC and EFF) undertook a thematic analysis of 380 survey responses submitted to onlinecensorship.org, a website of the EFF (a leading non-profit advocating free speech and digital privacy), by users who were seriously affected by the censorship of their content or the temporary suspension of their accounts on social media platforms [44]. In addition, several deliberative sessions at the “All Things in Moderation”³ conference, a symposium on online content review organized by UCLA⁴, also contributed to the formulation of the guidelines [44]. As of 2019, twelve companies, including Reddit and Facebook, publicly support the Santa Clara Principles [19, 48, 62]. In this study, we focus mostly on Notice, the second principle, while briefly discussing accepted methods for appeals (the third principle) during our interviews with moderators. Examining aspects of the second and third principles gives the HCI community an excellent opportunity to ‘design for transparency’. We leave the investigation of subreddits’ adherence to the first principle to future work.

The rest of this paper is organized as follows. We start by reviewing related research. After briefly describing our dataset, we describe the RQ1 methods, followed by results detailing SCP violations. Next, we describe the RQ2 method, followed by a detailed account of the insights gained from our interviews with moderators. Finally, we discuss our results, the design implications of our work and future directions before concluding with the limitations of our study.

³ https://ampersand.gseis.ucla.edu/ucla-is-presents-conference-on-all-things-in-moderation/

⁴ University of California, Los Angeles, http://www.ucla.edu/

3 BACKGROUND

Rules, Norms and Policies: Deviant behavior is a serious and pervasive problem in online communities [11]. One way to tackle this kind of behavior on online platforms is by enforcing rules [31], norms [6] and content policies [5]. Policies and rules are ubiquitous, typically taking the form of terms and conditions set either by the platform [17], by the community [30] or collaboratively by both [4]. Norms, on the other hand, are emergent and develop from the interactions among the users of a community [45]. For example, Reddit has a two-tier regulatory structure: the company has a site-wide Content Policy [51] that is enforced throughout the platform. In addition, each subreddit has its own formal rules (formulated by the moderators of that subreddit) and cultural norms that arise from the interactions among users and moderators. Past studies have examined rules, norms and policies together [3, 6] as well as individually [16, 17, 37]. Lessig et al. studied how policies shape the behavior of online communities and play a role in regulating them [37]. Fiesler et al. characterized subreddits’ rules, studied the frequency of rule types across subreddits and examined the relationship between rule types and subreddit characteristics [17]. Chandrasekharan et al. analyzed content removed from various subreddits and extracted micro (violated in a single subreddit), meso (violated in a small group of subreddits) and macro (violated all over Reddit) norm and rule violations [6]. While this work [6] studied rules and norms as loosely coupled entities, we consider the dichotomy between them. We use this distinction to propose a unique way of discovering community norms by mapping each sanctioned post/comment to site-wide as well as subreddit-level rules.

Content Moderation: It is not sufficient for social media platforms to craft rules and policies; they have to enforce them through moderation processes. Content is moderated online in a variety of ways. Some platforms (Facebook, Reddit, Twitter, YouTube) rely on the community to “flag” inappropriate and offensive content that violates the platform’s rules and policies [9]. Others (Facebook and Twitter) employ commercial content moderators [53], hiring large numbers of paid contractors and freelancers who police the platforms’ content [8, 32]. Some platforms (Wikipedia, subreddits) rely on volunteer moderators instead of commercial moderators to moderate the content posted in their communities [12, 15, 66]. These moderators and their actions build and shape online communities [41]. Seering et al. examined the process of becoming a moderator and studied how moderators handle misbehavior and create rules for their subreddits. However, their study does not address moderator feedback as a means of community development, which this paper examines in later sections. Moderator feedback is given not only by humans but sometimes also by automated tools. These tools assist moderators in their day-to-day moderation tasks, but their configuration is a black box to users [28]. We explore this phenomenon of hidden AutoModerator configurations, along with other practices followed by moderators, to examine whether they adhere to SCP and Reddit’s moderator guidelines.

Transparency in Moderation: The way content moderation is carried out on many online platforms is non-transparent and murky, with content disappearing without explanation [21]. Users find the lack of communication from moderators very frustrating [27]. On some platforms, even the presence of moderators is sometimes unknown [54]. This lack of transparency can confuse users and lead to high dropout rates [65]. Thus, in recent times, researchers [21, 23, 61] and the media [22, 24] have called for greater transparency in the moderation processes followed by platforms. But when we talk about transparency in online content moderation, the first problem is the lack of a formal definition of what transparency actually means [61]. Several researchers have tried to define it [18, 42]. For example, Ann Florini [18] defines transparency as “the degree to which information is available to outsiders that enables them to have informed voice in decisions and/or to assess the decisions made by insiders”. Researchers have also proposed several models and guidelines that platforms can use to govern themselves transparently. One such guideline that has recently gained a lot of traction and is widely embraced by social media platforms is SCP [49]. Another framework that has become popular in recent times is the ‘fairness, accountability and transparency’ (FAT) model, which has especially been used to understand algorithmic decision making [34, 35, 52]. Many platforms, such as YouTube and Facebook, use artificial intelligence techniques to carry out their moderation. However, these techniques are not interpretable and are often criticized as black boxes. Transparency can have its pitfalls too. Providing too much information to the user can lead to inadvertent opacity, a situation where an important piece of information gets concealed in the bulk of data made visible to the user [59]. It can also endanger privacy, suppress authentic conversations [56] and allow miscreants to game the system [13], a concern also shared by moderators during our interviews.


Fig. 1. Flowchart depicting methodology and data processing pipeline. Additional method details with respect to the Quantitative Analysis are outlined in Appendix A.

4 DATASET

We use /u/publicmodlogs⁵, a Reddit bot, to gain access to public moderation logs from subreddits. Each subreddit that wants to provide public access to its moderator action logs (referred to as modlogs in the rest of the paper) invites this bot to be a moderator with read-only permission. Once the bot accepts the invitation, it gains access to the subreddit’s moderation logs and starts generating a JSON feed containing those logs. This bot is one of many third-party initiatives that provide access to moderation logs, and Reddit has no say in why, how or when a subreddit opts for it.

⁵ https://www.Reddit.com/user/publicmodlogs

Modaction | Description
removelink | Removal of a link
removecomment | Removal of a post/comment
muteuser | Mute a user from the moderator mail (ModMail)
ignorereports | Ignore all reports made for a particular post/comment
spamcomment | Content of post/comment is marked spam
spamlink | Link(s) present in a post/comment is marked spam

Table 1. Description of a sample of modactions present in our modlog dataset

At any given time, the modlogs contain complete moderation action data from the previous 3 months. Using this bot, we continuously scraped data from 204 subreddits from March 2018 to September 2018. The collected logs have several fields capturing the moderation action (henceforth referred to as modaction) that was performed (action), who performed it (mod, mod_id), the time of moderation (created_tc), a short description of the action (description, such as removecomment, removelink, etc.), a detailed explanation stating the reason for the modaction (details), the community in which it was performed (subreddit_name_prefixed, e.g., r/conspiracy; sr_id; subreddit), the user whose contribution was moderated (target_author), the content of the post or comment that was moderated (target_body), a permanent static link to the moderated content (permalink) and the title of the post (title). Note that a moderation action can be performed either on a post published by a user or on a comment made by other users on an existing subreddit post. Hence, we refer to the moderated content (captured by the target_body field) as post/comment throughout the paper.

Our data contains 44 unique modactions; Table 1 presents a sample along with brief explanations. Since our goal is to study norms and transparency in rule enforcement, we focus on modactions that explicitly represent the removal of content. For this purpose, we shortlist four modactions (removelink, removecomment, muteuser and unignorereports), all of which represent the moderator removing either a post or a comment because they deemed it unsuitable for that particular community. After filtering for these modactions, we had 479,618 rows in our modlog dataset. Our data also revealed that the ‘description’ field corresponding to these modactions was mostly blank. While some moderators provide detailed explanations behind their modactions, others do not. The ‘details’ field includes explanations ranging from ones as vague as “remove” to ones as detailed as “Section 1B-2 - Blacklisted Domains. Domain detected: Coincodex.com.” There is a lack of common standards when it comes to explaining and justifying modactions, a phenomenon that this study investigates.
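The filtering step described above can be sketched in Python. This is a minimal illustration rather than the pipeline used in the study: it assumes the modlog feed has already been saved as a JSON array of objects carrying the `action` and `details` keys named in the field list, and the sample entries and helper names are hypothetical.

```python
import json
from collections import Counter
from typing import Dict, List

# The four modactions shortlisted in the text as representing content removal.
REMOVAL_ACTIONS = {"removelink", "removecomment", "muteuser", "unignorereports"}


def filter_removals(raw_json: str) -> List[dict]:
    """Keep only modlog entries whose 'action' is one of the shortlisted modactions.

    Assumes (hypothetically) that the feed is a JSON array of objects with an
    'action' key; entries without that key are dropped.
    """
    entries = json.loads(raw_json)
    return [e for e in entries if e.get("action") in REMOVAL_ACTIONS]


def details_coverage(entries: List[dict]) -> Dict[str, int]:
    """Count entries whose 'details' field is blank or missing versus filled in."""
    counts = Counter(
        "blank" if not (e.get("details") or "").strip() else "filled"
        for e in entries
    )
    return dict(counts)


# Invented sample entries, for illustration only.
sample = json.dumps([
    {"action": "removecomment", "mod": "AutoModerator", "details": "remove"},
    {"action": "removelink", "mod": "mod_a", "details": ""},
    {"action": "ignorereports", "mod": "mod_a", "details": ""},
    {"action": "removelink", "mod": "mod_b", "details": None},
])
removals = filter_removals(sample)
print(len(removals), details_coverage(removals))
```

Note that a filled ‘details’ field is not the same as a meaningful explanation: the vague value “remove” counts as filled here, which is one reason a simple field check cannot replace the manual coding described later.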

Figure 1 shows the methodology flowchart and data processing pipeline used in our project to answer the research questions. It consists of five stages that involve both machine computation and human qualitative coding. We describe them in detail in the following sections.

5 RQ1: HOW DO CONTENT MODERATION PRACTICES ON SUBREDDITS ALIGN WITH SCP’S PRINCIPLES OF TRANSPARENCY?

In order to study how rules and norms are operationalized and enforced, it is essential to study why content gets removed from the platform. The reasons could range anywhere from violations of rules and norms to deviations from the site-wide Content Policy. We use both quantitative and qualitative methods to determine these reasons. The quantitative part aims to find the “meta communities” (groups of subreddits) that share the same types of transgressions. We use qualitative methods to analyze the transgressions in each of the meta communities in order to determine the reasons for removal. The detailed methodology as well as the results are discussed in the sections below.


Meta Community | Sample subreddits | Mean subscribers | Freq. of Violations
Gaming, erotic & political meta community (MC1) | ElderScrolls, AVGN, POTUSWatch, SugarBaby, CuckoldPregnancy, moderatepolitics | 62248.48 |
Pro free speech & anti-censorship meta community (MC2) | dark_humor, BadRedditNoDonut, AllModsAreBastards | 6720.7 |
Cryptocurrency, news & special interest meta community (MC3) | CryptoCurrency, ethereum, conspiracy, MakingaMurderer | 63305.9 |
Conspiratorial (MC4) | ConspiracyII | 15000 |
Academic (MC5) | Indian_Academia | 2100 |

Table 2. Meta-communities along with a few constituting subreddits. Mean subscribers stands for the mean subscriber count per meta-community. Note that the last two meta communities consist of only one subreddit. denotes the percentage of sanctioned posts that coders could not map to a rule. denotes the percentage of sanctioned posts where moderators do not provide an explanation for content removal.
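The two per-meta-community percentages described in the caption of Table 2 reduce to simple proportions over the coded sample. The sketch below assumes each coded post/comment is recorded as a tuple of (meta community, mapped rule or None, whether an explanation was given); this record layout and the toy values are hypothetical and do not reproduce the study’s numbers.

```python
from collections import defaultdict
from typing import Dict, Iterable, Optional, Tuple

# (meta_community, mapped_rule_or_None, explanation_given) -- hypothetical layout
CodedRecord = Tuple[str, Optional[str], bool]


def violation_rates(records: Iterable[CodedRecord]) -> Dict[str, Tuple[float, float]]:
    """Per meta community, return (% not mapped to any rule, % removed without explanation)."""
    totals: Dict[str, int] = defaultdict(int)
    unmapped: Dict[str, int] = defaultdict(int)
    unexplained: Dict[str, int] = defaultdict(int)
    for meta_community, rule, explained in records:
        totals[meta_community] += 1
        if rule is None:  # coders could not map the removal to a rule
            unmapped[meta_community] += 1
        if not explained:  # no reason was given for the removal
            unexplained[meta_community] += 1
    return {
        mc: (100.0 * unmapped[mc] / n, 100.0 * unexplained[mc] / n)
        for mc, n in totals.items()
    }


# Toy records, not data from the study.
toy = [
    ("MC1", "Rule 2: Be civil", True),
    ("MC1", None, False),
    ("MC1", None, False),
    ("MC1", "Reddit Content Policy", False),
    ("MC5", None, False),
]
print(violation_rates(toy))
```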

5.1 Quantitative Method: Find Meta-Communities Sanctioning Similar Content

The aim of the quantitative method is to determine “meta communities” that sanction similar content. We posit that communities that remove similar content will have similar rules and norms. To find the types of sanctioned content that were removed from the subreddits, we empirically identify topics in the modlog dataset using the Author Topic Modelling (ATM) algorithm [55], an extension of LDA (Latent Dirichlet Allocation) [1], which is a widely used topic modeling technique. Since topic modelling algorithms are highly susceptible to noisy data, robust pre-processing of the dataset is necessary in order to obtain interpretable topics. We applied standard text pre-processing techniques (see Appendix A) to the sanctioned content and fed it to the ATM algorithm. ATM extracts common themes and topics from documents and groups them by their authors. For this study, we treated sanctioned posts/comments as the documents and the subreddits in which they were posted as the authors. Using ATM, we obtained 55 topics that represent the types of transgressions sanctioned across various subreddits. Appendix A.0.2 describes our empirical process for choosing 55 topics. To interpret the common themes spanning these topics, two authors independently coded each topic by examining its top 25 posts and comments. Many of these topics related to spam; for example, we found 10 topics that were dominated by spam. Top posts representing these spam topics promoted and highlighted certain websites, cryptocurrencies and social issues. The remaining topics discussed conspiracy theories, controversial political and geopolitical themes, erotic lifestyles, and miscellaneous content. Appendix B lists the 55 extracted topics and details of our qualitative investigation and interpretation of these topics. Finally, to find groups of communities that sanction similar content, we applied the Louvain community detection algorithm [2] to cluster the subreddits spanning the 55 topics discovered by ATM. Louvain’s algorithm is a popular method for detecting communities in large networks and has also been used on various Reddit datasets that deal with inter-subreddit relationships [10, 46]. After applying Louvain, we obtained 5 clusters. Each cluster represents a “meta community” that sanctions similar kinds of transgressions. Table 2 lists all the discovered meta communities along with a few selected subreddits. We allocated each meta community a shorthand name to improve readability. We present descriptions of each meta community, along with all of its constituting subreddits, in Appendix A.0.4.
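The clustering step can be illustrated with a small, self-contained sketch. The text does not specify how the subreddit graph was built, so this example assumes (hypothetically) that subreddits are nodes, that each subreddit is represented by its ATM topic distribution, and that two subreddits are linked when the cosine similarity of their distributions reaches an illustrative `threshold`. It implements only the first, local-moving phase of Louvain (greedily moving each node to the neighboring community with the largest modularity gain until no move helps) and omits the aggregation phase; in practice a full implementation, such as `louvain_communities` in NetworkX, would normally be used instead.

```python
import math
from collections import defaultdict
from typing import Dict, List

Graph = Dict[str, Dict[str, float]]


def cosine(u: List[float], v: List[float]) -> float:
    """Cosine similarity between two topic distributions."""
    dot = sum(a * b for a, b in zip(u, v))
    nu = math.sqrt(sum(a * a for a in u))
    nv = math.sqrt(sum(b * b for b in v))
    return dot / (nu * nv) if nu and nv else 0.0


def build_graph(topic_dist: Dict[str, List[float]], threshold: float = 0.5) -> Graph:
    """Link two subreddits when their topic distributions are similar (hypothetical rule)."""
    names = sorted(topic_dist)
    graph: Graph = {name: {} for name in names}
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            w = cosine(topic_dist[a], topic_dist[b])
            if w >= threshold:
                graph[a][b] = w
                graph[b][a] = w
    return graph


def louvain_local_moving(graph: Graph) -> Dict[str, int]:
    """First phase of Louvain: move nodes between communities while modularity improves."""
    m = sum(w for nbrs in graph.values() for w in nbrs.values()) / 2.0  # total edge weight
    community = {node: i for i, node in enumerate(graph)}  # start with singletons
    if m == 0:
        return community
    degree = {node: sum(nbrs.values()) for node, nbrs in graph.items()}
    tot = {community[node]: degree[node] for node in graph}  # summed degree per community
    improved = True
    while improved:
        improved = False
        for node in graph:
            old, k = community[node], degree[node]
            tot[old] -= k  # take the node out of its current community
            links: Dict[int, float] = defaultdict(float)
            for nbr, w in graph[node].items():
                if nbr != node:
                    links[community[nbr]] += w
            # The modularity gain of inserting `node` into community c is
            # proportional to k_in(c) - tot[c] * k / (2m); compare with staying put.
            best, best_gain = old, links.get(old, 0.0) - tot[old] * k / (2 * m)
            for c, w_in in links.items():
                gain = w_in - tot[c] * k / (2 * m)
                if gain > best_gain:
                    best, best_gain = c, gain
            community[node] = best
            tot[best] += k
            if best != old:
                improved = True
    return community


# Toy three-topic distributions for four hypothetical subreddits.
dists = {
    "r/a": [0.9, 0.1, 0.0],
    "r/b": [0.85, 0.15, 0.0],
    "r/c": [0.0, 0.1, 0.9],
    "r/d": [0.05, 0.05, 0.9],
}
print(louvain_local_moving(build_graph(dists)))
```

Moves are accepted only on a strictly positive gain over staying put, so each pass strictly increases modularity and the loop terminates.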


Fig. 2. Example of an instance where a human moderator specified the reason for removing a comment

5.2 Qualitative Methods: Investigating adherence to SCP through Rule Mapping and Norm Discovery

In order to study moderation practices, rules and their enforcement in each of the 5 “meta communities”, we conducted a deep content analysis of 50 high-ranking posts and comments from each of the topics present in the 5 cohorts. High-ranking posts/comments for a topic in a meta community are the ones that have a high probability of belonging to that topic and are hence the most representative of that topic. Note that in some meta communities, the probability of occurrence of a few topics was very low, so we could not obtain 50 posts from those topics to annotate. In total, we analyzed 6260 posts/comments. Two authors qualitatively mapped these posts/comments to subreddits’ rules and Reddit’s Content Policy. When no rule applies, the mapping reveals a norm that has not been formalized as a rule. In the process, we also study how transparently rules are enforced by moderators in the communities. Contrary to popular belief, content is not the only reason why posts/comments are sanctioned. Minimum karma and account age requirements, the title and format of the post, and the user’s history of rule violations can all result in sanctions. Posts/comments can also be removed if their theme clashes with the ideology of the subreddit. For example, pro-Trump comments are removed from the subreddit Socialism, which is dedicated to discussing current events from a socialist/anti-capitalist perspective. Because of this clash in ideology, Socialism disallows any posts supporting capitalism, and since the views of Donald Trump and the Republican Party are the exact opposite of those of socialism, the subreddit also disallows posts supporting Trump. This indicates that understanding the context surrounding a post/comment is essential for discovering norms and studying transparency in how rules are operationalized.

To understand the context in which a comment was removed, we relied on the ‘target_permalink’ field present in the modlog. This field provides either the link to the sanctioned post or the link to the post on which the sanctioned comment was made. At this link, even though we cannot see the actual sanctioned post/comment, we have access to all the surrounding content and the overall discussion. Removed posts are represented by the placeholder text [removed] and removed comments by the placeholder text “comment deleted by moderator”. In some cases, an explanation provided by the moderator (human moderator or AutoModerator) is present in the form of a comment after this placeholder, as shown in Figure 2. We relied on this comment, the ‘details’ field present in the modlog, and the context in which the sanctioned comment was posted to determine why the content was removed.
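As an illustration of this procedure, the Python sketch below shows one way modlog entries could be organized for annotation: grouped by the thread their ‘target_permalink’ points to, with entries whose ‘details’ field explains nothing set aside for context-based coding. It assumes each entry is a dict carrying the ‘target_permalink’ and ‘details’ fields described above; the `triage` helper and the set of uninformative ‘details’ values are hypothetical and do not describe the tooling used in this study.

```python
from collections import defaultdict

# Hypothetical: 'details' values treated as carrying no explanation for the removal.
UNINFORMATIVE_DETAILS = {"", "remove", "removed"}


def triage(entries):
    """Group modlog entries by the thread that provides their context.

    Each entry is assumed to be a dict with 'target_permalink' and 'details' keys.
    Returns (entries grouped by permalink, entries whose 'details' explain nothing).
    """
    by_thread = defaultdict(list)
    unexplained = []
    for entry in entries:
        # The permalink points at the removed post, or at the post the removed comment was on.
        by_thread[entry["target_permalink"]].append(entry)
        details = (entry.get("details") or "").strip().lower()
        if details in UNINFORMATIVE_DETAILS:
            # The annotator must fall back on the thread and any moderator reply.
            unexplained.append(entry)
    return dict(by_thread), unexplained


log = [
    {"target_permalink": "/r/example/comments/abc/thread/", "details": "word filter"},
    {"target_permalink": "/r/example/comments/abc/thread/", "details": ""},
    {"target_permalink": "/r/example/comments/xyz/other/", "details": None},
]
threads, unexplained = triage(log)
print(len(threads), len(unexplained))  # 2 2
```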

The mapping task was performed by two authors independently and iteratively. Both authors are passive users of Reddit and had been on the platform for seven and fifteen months, respectively. They mapped each post/comment to the subreddit’s rules and Reddit’s content policy, keeping in mind the context and subreddit in which it was posted. They then separated


17:10 Prerna Juneja, Deepika Rama Subramanian, and Tanushree Mitra

Transparency guideline | Transparency violation (remarks and examples)

Provide detailed guidance to the community about what content is prohibited | Secret guidelines in the form of community norms (RQ1a and RQ1c). 23% of the annotated posts were either ambiguous removals or norm violations. For example, moderators of meta community 1 remove derisive posts/comments.

Provide notice to each user whose content is taken down or account is suspended about the reason for the removal or suspension | Lack of explanations and reasons for content removal (RQ1b). ∼50% of annotated posts were not accompanied by a reason for removal.

Provide an explanation of how automated detection is used across each category of content | Hidden AutoModerator configuration (RQ1d). Subreddits do not reveal banned words, banned projects, or karma requirements. For example, the subreddit MurderedByWords removes posts/comments containing the word feminazi.

Table 3. Table summarizing SCP transparency guidelines and the corresponding moderation practices that violate them, along with remarks and examples for each violation.

posts/comments that were removed because of a norm or a reason that was not clearly reflected in the rules. Finally, the authors came together and discussed the codes. Disagreements were resolved through discussion and by re-iterating over the codes. Through the qualitative analysis, we discovered hidden norms and transparency violations in several moderation practices. We present our findings in the next section.

5.3 RQ1 Results

Our qualitative analysis revealed several moderation practices across meta communities that violate the SCP guidelines. We discuss these practices in detail in the subsequent sections. Table 3 briefly summarizes the SCP guidelines and the corresponding violations.

5.4 RQ1a: Do all sanctioned posts correspond to a rule that they have violated that is either explicitly stated in the community’s rule book or Reddit’s Content Policy?

Content on Reddit can be removed due to a violation of the site-wide Content Policy, the subreddit’s rules, or its norms. During the qualitative analysis, we mapped each sanctioned post/comment to the subreddit’s rules and Reddit’s Content Policy. In the process, we discovered norms as well as several ambiguous removals. Ambiguous removals are posts/comments that seemed rule-abiding and for which the annotators could not determine any rational reason for removal. Of the 6,260 posts annotated, 3,030 were sanctions with no explanation for removal. Of these, 965 (31.8%) were removed due to unstated norm violations and 454 (15%) were ambiguous removals. We discuss community norms in detail in Section 5.6. We argue that it would be equally difficult for the user who posted this content and for a newcomer to the community to understand what kind of content the community sanctions. Thus, it becomes essential that moderators specify the reasons for removals.
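The percentages above can be recomputed from the reported counts, as in the short Python check below. It assumes that the 23% and ∼50% figures in Table 3 are (965 + 454) / 6,260 and 3,030 / 6,260 respectively; the rounded values are consistent with that reading, but the text does not state it explicitly.

```python
def pct(part, whole):
    """Percentage of `whole` represented by `part`, rounded to one decimal place."""
    return round(100 * part / whole, 1)


ANNOTATED = 6260    # posts/comments annotated
UNEXPLAINED = 3030  # sanctions with no explanation for removal
NORMS = 965         # removals due to unstated norm violations
AMBIGUOUS = 454     # removals with no discernible reason

print(pct(NORMS, UNEXPLAINED))            # 31.8
print(pct(AMBIGUOUS, UNEXPLAINED))        # 15.0
print(pct(NORMS + AMBIGUOUS, ANNOTATED))  # 22.7, i.e. roughly the 23% in Table 3
print(pct(UNEXPLAINED, ANNOTATED))        # 48.4, i.e. roughly the ~50% in Table 3
```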

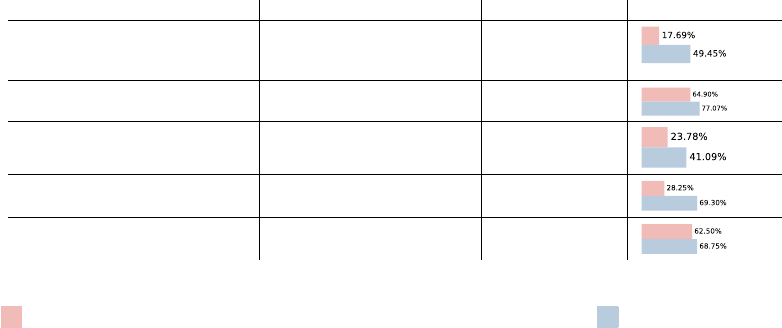

Figure 2 shows the percentage of (unique) sanctioned posts/comments where the authors could not map the content to a rule (norms + ambiguous removals) for each of the five meta communities. The Pro free speech & anti-censorship meta community (MC2) and the Academic meta community (MC5) have the highest percentage of such removals. Subreddits belonging to MC2 were mostly pro free speech communities with anywhere from zero to a handful of rules. Similarly, MC5 consists of one subreddit that had only one rule specified at the time of qualitative coding. Thus, most of its sanctions were coded either as norms or as ambiguous removals, since the coders could not map them to any site-wide or subreddit-specific rule.

5.5 RQ1b: Do moderators provide reasons for removals after removing a user's content?

The second principle of the SCP (Notice) states that users should be informed why their content was

removed from a social media platform. Recall that 3030 annotated posts/comments did not provide a



reason for removal. This shows that the SCP Notice guideline is violated in many subreddits. Figure 2 shows the percentage of sanctioned posts/comments for which moderators did not provide an explanation for removal, for each of the five meta communities. The Pro free speech & anti-censorship meta community (MC2) and the Academic meta community (MC5) again have a large share of such removals, indicating that moderators of these subreddits sanction several posts/comments because of norm violations but seldom provide any feedback. The Gaming, erotic and political meta community (MC1) also has a significant number of sanctions without explanations. This meta community has several subreddits that provide a platform for healthy discussions and debates, which often turn into flame wars. As a reactive measure, moderators of these subreddits nuke and lock threads containing such heated arguments without providing any explanation for the deletions. As a result, several rule-abiding posts/comments are sacrificed, increasing the count of both ambiguous removals and content removed without feedback.

Of the 3030 posts that were sanctioned without a reason, 68.8% were removed by human moderators and 31.2% by AutoModerator. In the current Reddit design, moderators do not have a feature through which they can provide feedback about content removal. Thus, they must either manually provide feedback as a “reply” to the deleted post or send a personal message to the user. We discuss this limitation and its design implications in Section 7.

5.6 RQ1c: Are rules in subreddits clearly defined and elucidated? Are they enforced and operationalized in a consistent and transparent manner by human and auto moderators?

Our qualitative analysis reveals several practices where rules were either not clearly elucidated or

their enforcement was not transparent.

Hidden word filters: Manually reviewing every post/comment is laborious. The Gaming, erotic and political meta community (MC1) and the Cryptocurrency, news and special interest meta community (MC3) are high-traffic meta communities, with mean subscriber counts of 62248.48 and 63305.9, respectively. Thus, moderators of these communities rely on AutoModerator to identify troublesome posts. They configure word filters using custom regexes to detect profanities and other offensive content. However, the exact details of the word filters are not revealed to users. These offensive words include pejorative terms for females (feminazi, fucking bitch, pussies) or males (cuck), abusive slang and phrases (fat fucks, shit lord, overweight, prick, shut the fuck up, fucking mouth, jack off), disrespectful terms for homosexuals (fags), and racist slurs (nigger, negro, nig, porch monkey). Words and slang such as jew, hymies, and kike are used to filter out posts/comments about Jews in an attempt to prevent antisemitism. In addition to offensive content, AutoModerator is also used to detect low-quality posts, either by setting a word-count threshold or through a word filter. For example, posts/comments containing phrases like shill me, rate my portfolio, censor, shill, and arbitrage and b eer markets are often removed from cryptocurrency-related subreddits (MC3), as these phrases are generally associated with low-quality content. None of these banned and blacklisted words and slang terms have been made public in any of the rules.
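AutoModerator rules themselves are written in YAML; the Python sketch below only mimics the matching behaviour that word filters of this kind rely on: a single case-insensitive regex compiled from a ban list. The list is a hypothetical subset of the phrases reported above, since the subreddits do not publish theirs, and the helper names are illustrative.

```python
import re

# Hypothetical ban list drawn from phrases reported above; real lists are not public.
BANNED_TERMS = ["feminazi", "shill me", "rate my portfolio", "arbitrage"]

# \b on both sides requires whole-word matches; re.escape treats each term literally.
BANNED_RE = re.compile(
    r"\b(?:" + "|".join(re.escape(term) for term in BANNED_TERMS) + r")\b",
    re.IGNORECASE,
)


def matched_terms(text):
    """Return every banned term found in `text`, lower-cased, in order of appearance."""
    return [m.group(0).lower() for m in BANNED_RE.finditer(text)]


print(matched_terms("New here, Rate my portfolio please!"))  # ['rate my portfolio']
print(matched_terms("Arbitrageurs hate this one trick"))     # []
```

Because the pattern lives only in the moderators' configuration, a user whose comment is removed sees neither the list nor which term matched, which is the transparency gap described above.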

It is important to note that even after a violation, an offender seldom learns which particular word caused their content to be removed. For example, the subreddit POTUSWatch does not disclose the banned word that caused the following comment to be removed: “This has dominated the 24 hours new cycle. I think it was done so Donny Two Scoops could give Melania a day in the limelight. You know, so he could transfer a little bit of heat from him to her.” We suspect that the comment was sanctioned because of the presence of the slang two scoops. However, there was no way to confirm this, since the “details” field in the modlog contained only the phrase “word filter” and no message explaining the reason for removal was found with the original comment.



Some comments that used the banned words innocently were also caught by these filters and removed. In some subreddits, these posts were re-reviewed by human moderators and reinstated, while in others they were not. For example, consider the following two posts: (1) “...what heritage is that about? German, British, Dutch, Swedish, Italian, Spanish, Portuguese, Polish, European Jewish, Gypsy? Those cultures and heritages have nothing in common. ’White pride’ is to those cultures and heritages the same thing an ’Asian restaurant’ is to the cuisine of Asian nations: great by themselves....” and (2) “What is rule 5? I mean bitch in an inadequately masculine gangster rap way, and I mean fag in a South Park contemptible person way.” The first post was removed because of the presence of the word Jew but was later reinstated by the moderators. The second post, on the other hand, was removed permanently even though the words bitch and fag were used in a non-derogatory way. This practice suggests that posts removed by AutoModerator are not thoroughly reviewed by human moderators. We plan to investigate this line of thought in future work.
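One plausible, though unconfirmed, mechanism behind the first false positive is substring matching: a filter that only checks whether the text contains “jew” also fires on “Jewish”. The Python sketch below contrasts that with whole-word matching; both functions are illustrative and do not reproduce any subreddit's actual configuration.

```python
import re


def substring_hit(text, term):
    """Naive check: true if `term` occurs anywhere, even inside a longer word."""
    return term.lower() in text.lower()


def whole_word_hit(text, term):
    """Stricter check: `term` must be delimited by non-word characters."""
    pattern = r"\b" + re.escape(term) + r"\b"
    return re.search(pattern, text, re.IGNORECASE) is not None


post = ("German, British, Dutch, Swedish, Italian, Spanish, Portuguese, "
        "Polish, European Jewish, Gypsy?")
print(substring_hit(post, "jew"))   # True: "Jewish" contains "jew"
print(whole_word_hit(post, "jew"))  # False
```

Whole-word matching removes this kind of false positive but not the second one: a term that is quoted or used in a non-derogatory sense still matches exactly, so neither check can judge context. That judgment is left to human review.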

Non-exhaustive rules: A few subreddits in the Pro free-speech and anti-censorship meta community (MC2) and the Cryptocurrency, news and special interests meta community (MC3) provide a list of blacklisted words and slurs, but these lists are by no means exhaustive. For example, the subreddit Socialism (in MC3) is a community for socialists with a robust set of rules. Its list of prohibited words has been published as part of one of its rules. It includes both explicitly abusive terms (e.g., retarded, bitch, homo) and normalized abusive terms (e.g., stupid, crazy, bitching). However, this list of examples provided by the moderators is not comprehensive. For example, we suspect the following comment was removed because of the word brainless, which is not in the subreddit’s blacklisted word set: “...removed a word that the brainless automoderated bot mistook for an insult since it's not able to read context proving that it's a dangerous and un-bright idea to automate censorship.”

Banned Topics: A few cryptocurrency-related subreddits in MC3 do not permit mentions of certain projects and platforms. For example, the subreddit CryptoCurrency has banned coin projects and platforms like VeChain, Skycoin, Foresting, and Maecenas. It also removes posts that mention any of the pump-and-dump⁶ (PnD) cryptocurrency groups. We found no explicit rule providing an exhaustive list of such projects. We also found posts removed that were not specifically discussing these projects. For example, the post “I believe Teeka Tiwari prediction of $40k by end of year. Dude has been accurate the last 3 years” was removed because it mentions the PnD group Teeka, even though the post is about the financial advisor “Teeka Tiwari”. The user was not notified about the removal, nor did the moderators provide any feedback. This example shows that AutoModerator cannot determine the context in which a banned word is used. Thus, the involvement of human moderators is critical in content moderation.

Hidden karma and account age requirements: Karma is a very important concept on Reddit and was introduced to curb spam, off-topic, and low-quality content. Members with higher karma have certain privileges that others do not. In almost all the subreddits across MC1, MC3, and MC4, a minimum comment karma is required to post content, a rule enforced by AutoModerator. Surprisingly, many subreddits do not mention this practice in their rules. For example, the subreddits KotakuInAction, Cuckold, Vinesauce, and btc (MC3) filter posts using a karma filter, but none of them mention this requirement in their rules. The subreddit Sugarbaby (MC1) requires accounts to be at least 7 days old before they can create new posts, but this requirement has not

⁶ https://en.wikipedia.org/wiki/Pump_and_dump



Subreddit | Example post/comment removed | Remarks (*/**)

MC 1
POTUSWatch | The investigation is over. It’s Obama’s fault. | short comment**
NeutralCryptoTalk | As someone who doesn’t truly understand the economics of the crypto market, can anyone inform me as whether this is a dip/crash or when/will it recover? Is there correlation with the traditional stock market? ... Any insight would be much appreciated | noob comment*

MC 2
Ninjago | PSA: Regarding the recent Netflix issues Don’t have Hulu :( | off topic*
dark_humor | [Dunking on Helpless Boy](https://m.youtube.com/watch?v=yuIDC3cq6fU) | ableist jokes*

MC 3
Socialism | eu blandit Ut consectetur bibendum volutpat. amet non neque nisi adipiscing dolor amet, tempus Vestibulum consequat sit venenatis Aenean leo Sed a amet quis eros condimentum. in tempor sapien sit euismod | non-English comment*
RedditCensors | Still absolutely no acknowledgement of the fact that u/*** is blatantly just banning anyone trying to discuss the fact that her friends get special treatment and anyone questioning authority is banned. | attacking mods*

MC 4
ConspiracyII | Please, do elaborate.. This sub you’re in, harping on about my joke of a /u/; you do know that I and some pals created all of this, right? Do you feel silly now? You should.Worry not, I’ll be abandoning this username soon. I’m tired of hearing nonsense from fucking delicate idiots with misunderstood shill hysteria. What is /u/AllThat? Are you a movie for tweens? How about I change my shit to /u/Hannibal? Am I now a serial killer? Come back when you have an apology at the ready and you pick your head up from the dirt, dumbass. | lead by example*
ConspiracyII | Damn, very interesting information, thank you now I’m going to do a little digging myself. | phatic talk*

MC 5
Indian_Academia | My gawd, you Pretentious bastard. | derisive content*
Indian_Academia | Hi Indu, I have been scavenging the internet to find info regarding the same. I found this video on YouTube and found it useful. Do check it out: http://bit.ly/2FAuT73 All the Best! | URLs with incorrect format*

Table 4. Sample posts/comments from all meta communities that were removed due to norm violations. The Remarks column gives the rationale for removing the content; content marked * was removed by human moderators and content marked ** by AutoModerator.

been explicitly specified anywhere. Even after a violation, the moderators did not reveal the exact karma requirement. For example, the subreddit MurderedByWords (MC1) removes posts by new members, leaving the message “Your comment has been removed due to your account having low karma. This is done to combat spam. If you would like to participate on /r/MurderedByWords, you will need to earn karma on other subreddits.” The message does not reveal the minimum karma required. In some subreddits, comments with negative karma (number of downvotes > number of upvotes) are also removed. Again, users are neither notified about the removal, nor is this requirement specified in the subreddit’s rules.
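The karma and account-age gates described above can be sketched as follows. This Python example is hypothetical: the 10-karma threshold is invented for illustration, the 7-day minimum mirrors the Sugarbaby requirement mentioned above, and, unlike the MurderedByWords message, the removal reason it produces states the threshold.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone
from typing import Optional

MIN_COMMENT_KARMA = 10               # hypothetical; the studied subreddits do not publish theirs
MIN_ACCOUNT_AGE = timedelta(days=7)  # mirrors the 7-day minimum reported for Sugarbaby


@dataclass
class Author:
    comment_karma: int
    created: datetime  # account creation time (timezone-aware)


def gate(author: Author, now: datetime) -> Optional[str]:
    """Return None if the author may post, else a removal reason that names the threshold."""
    if now - author.created < MIN_ACCOUNT_AGE:
        return f"Your account must be at least {MIN_ACCOUNT_AGE.days} days old to post here."
    if author.comment_karma < MIN_COMMENT_KARMA:
        return f"You need at least {MIN_COMMENT_KARMA} comment karma to post here."
    return None


now = datetime(2019, 5, 1, tzinfo=timezone.utc)
print(gate(Author(100, now - timedelta(days=2)), now))  # account-age reason
print(gate(Author(3, now - timedelta(days=30)), now))   # karma reason
print(gate(Author(50, now - timedelta(days=30)), now))  # None
```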

5.7 RQ1d: What are the norms prevalent in various subreddit communities?

In this section, we present the norms that we uncovered in the meta communities. Table 4 presents sample posts/comments from all five meta communities that were removed because of norm violations.

Threads containing flame wars nuked (MC1): Discussions on controversial topics like politics and prominent public figures often turn into heated personal arguments in MC1. A few subreddits in this meta community commonly delete entire threads containing flame wars and slap fights, often sacrificing a few innocent comments. Other subreddits go a step further and lock the threads, preventing community members from participating in the discussion.

Threads containing arguments nuked (MC4): The moderators of this meta community nuke entire threads containing arguments. As a result, several rule-abiding (valid and civil) comments were removed too. For example, a user’s civil comment “If it’s upsetting to you please, appeal” was removed when a thread was nuked. The users are neither notified about the removal nor do the



moderators provide reasons for such removals.

Short posts and comments removed (MC1): Posts/comments with a low character/word count are considered low quality and are removed. A few of the subreddits have configured AutoModerator to perform this task. Neither this practice nor the word/character count used to perform the filtering has been made public in any of the rules.
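A length filter of this kind reduces to a word or character count. In the Python sketch below, both thresholds are hypothetical, since none of the subreddits disclosed theirs; with these particular values, the short POTUSWatch comment in Table 4 would be flagged.

```python
# Hypothetical thresholds; the subreddits studied did not disclose theirs.
MIN_WORDS = 10
MIN_CHARS = 20


def too_short(body: str) -> bool:
    """Flag a post/comment whose word or character count falls below the thresholds."""
    text = body.strip()
    return len(text.split()) < MIN_WORDS or len(text) < MIN_CHARS


print(too_short("The investigation is over. It's Obama's fault."))  # True (7 words)
print(too_short("This comment has more than ten words in it, so it passes the check."))  # False
```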

Derisive content removed (MC1, MC5): Sarcasm and snide remarks are not appreciated in meta communities 1 and 5. A few subreddits had an explicit rule, while in others, it is an unwritten practice. Moderators in these meta communities believe that being snarky does not contribute much to the discussion.

Content without corroboration removed (MC1): Arguments containing conspiracy theories and

posts/comments without substantiation from trustworthy sources are often removed from meta

community 1.

Noob comments and posts removed (MC1):

In some subreddits, basic questions (that one could have

searched online) asked by new users are removed. There are no rules specifying this practice. Table

4 contains an example of such a post.

Shadow bans (MC1): Some comments in the content we analyzed were shadow banned. When a user is shadow banned, the content they post is visible only to them and not to the rest of the community.

Content posted by repeat offenders and trolls removed (MC1): Some subreddits in this meta community maintain a list of Redditors who are known trolls or repeat offenders in their communities. Content posted by such users is put in the moderator queue by AutoModerator and reviewed by human moderators. We found instances where posts by repeat offenders that did not violate any rule were removed, with no reason for removal provided.
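The watchlist practice described above amounts to routing by author: matching content is held for human review instead of being published. The Python sketch below is a hypothetical illustration of that routing step only; the usernames, the `ModQueue` structure, and the `route` function are invented and do not correspond to AutoModerator's actual configuration.

```python
from dataclasses import dataclass, field
from typing import List

# Hypothetical watchlist of known trolls/repeat offenders (kept private by moderators).
WATCHLIST = {"example_troll", "repeat_offender_42"}


@dataclass
class ModQueue:
    items: List[dict] = field(default_factory=list)


def route(post: dict, queue: ModQueue) -> str:
    """Hold posts by watch-listed authors for human review; publish everything else."""
    if post["author"].lower() in WATCHLIST:
        queue.items.append(post)  # a human moderator later approves or removes it
        return "held"
    return "published"


q = ModQueue()
print(route({"author": "Example_Troll", "body": "hello"}, q))  # held
print(route({"author": "newcomer", "body": "hello"}, q))       # published
print(len(q.items))  # 1
```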

Posts with disguised links, banned domains and incorrect format removed (MC1, MC3, MC5): Some moderators expect the posts published on their subreddits to follow a certain format. They also disallow certain domains and shortened URLs. Most of the time, human moderators rely on AutoModerator to perform this task. For example, moderators of the subreddit RBI filter posts containing Facebook links. The subreddit SugarBaby requires posts to follow a certain format ([age] [online] or [2 character state abbreviation for irl and online] catchy header). However, this requirement has not been specified in the sidebar rules. A newcomer can learn this norm either after a violation or by observing the subreddit for some time.
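Format and domain requirements like these are typically enforced with pattern matching. The Python sketch below encodes one possible reading of the SugarBaby title format (an age in brackets, then either [online] or a two-letter state code, then a header) and a domain check of the kind RBI applies to Facebook links. The exact title grammar and the banned-domain set (Facebook plus one link shortener) are assumptions made for illustration.

```python
import re
from urllib.parse import urlparse

# One reading of the required title format, e.g. "[24] [online] ..." or "[24] [TX] ...".
TITLE_RE = re.compile(r"^\[\d{1,2}\]\s*\[(?:online|[A-Za-z]{2})\]\s+\S")

# Hypothetical banned set: Facebook (as in RBI) plus a link shortener.
BANNED_DOMAINS = {"facebook.com", "bit.ly"}
URL_RE = re.compile(r"https?://[^\s)\]]+")


def title_ok(title: str) -> bool:
    """True if the title starts with the bracketed prefix the format requires."""
    return TITLE_RE.match(title) is not None


def banned_hosts(body: str) -> list:
    """Return the host of every link in `body` that falls under a banned domain."""
    hits = []
    for url in URL_RE.findall(body):
        host = (urlparse(url).hostname or "").lower()
        # Match the domain itself or any subdomain of it (e.g. www.facebook.com).
        if any(host == d or host.endswith("." + d) for d in BANNED_DOMAINS):
            hits.append(host)
    return hits


print(title_ok("[24] [online] Looking for a mentor"))  # True
print(title_ok("Looking for a mentor"))                # False
print(banned_hosts("See https://www.facebook.com/page and http://bit.ly/2FAuT73"))
# ['www.facebook.com', 'bit.ly']
```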

Sexist and ableist jokes removed (MC2): The subreddit i_irl, a meme aggregator, allows people to cross-post a variety of memes from all over Reddit. It asks users to submit “dark jokes and memes without white knighting and general faggotry”. However, the subreddit removes jokes with hints of ableism and sexual connotations.

Off-topic content removed (MC2): Off-topic content is removed from almost every subreddit under our scrutiny, and many communities across Reddit have an explicit rule stating this practice. However, we found a handful of growing communities (with fewer subscribers) in this meta community that have no rules yet still follow the practice of removing irrelevant content. There are also some communities whose description is not enough to know what kind of content is welcomed by the moderators and members. For example, a TV show based subreddit removed the



post where the user complains that he/she can't see the series online [“Post: PSA: Regarding the recent Netflix issues Don't have Hulu :(”].

Attacking Mod (MC3): Name-calling the moderators and protesting against censorship are not encouraged. We found several posts attacking the moderators' authority that were removed by the moderators of this meta community.

Non-English posts (MC3): Most of the subreddits in this meta community have a norm of removing non-English posts.

Unexplained removal of posts containing Facebook links (MC4): Posts containing Facebook links were removed by AutoModerator. None of the sanctioned posts were accompanied by a reason for removal.

Phatic Talk (MC4): Several comments that add little value to the conversation were removed by AutoModerator. However, we were unable to identify the exact basis on which content was classified as phatic and low quality. AutoModerator neither left a reason for removal as a reply to the deleted comment, nor did we find any accompanying description in the modlog dataset.

Lead by Example: Moderators posting and deleting comments that violate the subreddit’s rules (MC4): We found moderators removing their own comments. These comments were demeaning in nature and clearly violated the rules of the subreddit. For example, consider the comment “you do know that I and some pals created all of this, right? Do you feel silly now? You should. Worry not, I’ll be abandoning this username soon. I’m tired of hearing nonsense from fucking delicate idiots with misunderstood shill hysteria. What is All That? Are you a movie for tweens? How about I change my shit to Hannibal? Am I now a serial killer? Come back when you have an apology at the ready and you pick your head up from the dirt, dumbass.”

The findings from our qualitative coding raised several questions about the widespread lack of transparency in subreddit communities. Several aspects of transparency are violated. For example, posts and comments are silently removed without notifying the user of the reason for removal, and in most cases rule enforcement is hidden from the community. We summarize the SCP guidelines and the corresponding violations in Table 3.

6 RQ2: HOW DO MODERATORS VIEW THE VARIOUS FACETS OF TRANSPARENCY?

By delving into the moderators’ side of the story, RQ2 helps in understanding the reasons behind

the widespread transparency violations.

6.1 Method: Interview with moderators

We conducted semi-structured interviews with 13 Reddit moderators between January and May 2019. Eleven of them moderate communities present in our public modlog dataset. A semi-structured interview script was designed to understand the rationale behind the widespread transparency violations summarized in Table 3. The script was revised multiple times based on feedback from four researchers. To gain additional insights about moderation practices, we first asked moderators about their background, the moderation process they followed in their respective subreddits, and the rules and norms of those subreddits. We probed them about the design of certain rules. We inquired about the use of AutoModerator and the appeal process against content removals and bans. We also discussed how a user is notified about a rule violation. Finally, we asked them how Reddit could help them through policy changes, interface



Interviewee | Subreddit Topic | Moderation Experience (in months) | Gender | Country
P1* | censorship | 12 | M | USA
P2* | archive | 84 | M | USA
P3 | school admissions | 12 | M | USA
P4* | entertainment, niche interests | 156 | M | USA
P5 | news sharing | 48 | F | USA
P6* | memes | 20 | F | FRA
P7* | country-related | 24 | M | AUS
P8* | cryptocurrency-related | 72 | M | USA
P9* | health | 60 | M | USA
P10* | sexual fetish | 12 | M | USA
P11* | data & analytics, technology | 48 | M | USA
P12* | memes | 53 | F | USA
P13* | tv show | 36 | F | USA

Table 5. Moderators’ characteristics. A * in the Interviewee column indicates that the moderator is moderating a subreddit present in our public modlog dataset. We refrain from specifying the subreddit names in order to protect the identity of the moderators. Instead we present high-level community topics that describe the subreddits.

and tools to make their job easier and more effective. It is important to note that, to elicit more detailed responses, we did not include direct questions about “transparency” and the “SCP”. The transparency theme emerged on its own from moderators’ detailed narratives in response to interview questions such as: Do you have a list of words whose use is prohibited in the subreddit? Have you made it public, i.e., specified it as a part of any of the rules? Why/why not? How does a user get notified that his comment has been deleted or he has been banned? How does the user learn why his content was deleted? Appendix C lists the complete interview protocol.

We adopted convenience sampling to recruit our subjects. We posted recruitment messages on

several subreddits that accept surveys, polls, and interview calls, such as r/SampleSize and r/Favors.

We also posted these messages on subreddits that are run specifically for moderators, for example,

r/modhelp, r/modclub, and r/AskModerators. We also sent out personal messages to moderators

who are moderating subreddits that are part of our modlog dataset.

The interviews lasted between 30 and 180 minutes, varying with the medium of the interview and with how active and how strictly moderated the community was. Interviews conducted through text-based chat took longer, and moderators governing highly active subreddits with stricter moderation principles had more to say. The duration also depended on how many subreddits a moderator was managing; some moderators brought insights from multiple subreddits. The minimum subscriber count of a subreddit moderated by our interviewees was 79 and the maximum was 21.3 million as of May 2019. Table 5 summarizes our study participants, the nature of the communities they moderate, the length of their moderation experience, their gender, and their country. To protect the moderators' privacy, we do not reveal the names of their corresponding subreddits, and we use the term subreddit_name whenever a moderator refers to their subreddit in any of their quotes. All interviewees received $15 as compensation for their participation. We used three modes to conduct the interviews according to each interviewee’s preference: audio/video, chat, and email. The audio/video interviews were recorded using the built-in features of Skype/Zoom or the recording features on our mobile phones. After transcribing the interviews, the authors manually coded them to determine common themes. All conflicts were resolved through discussions. Once both authors agreed on the codes, we performed axial coding to deduce relationships among themes.



6.2 RQ2 Results

Interviewing moderators helped us unpack the various facets of transparency, including silent

removal of content, removals without specifying reasons, enforcement and operationalization of

rules, and the appeal process.

6.2.1 Silent Removals: No notifications about post/comment removals. After talking to moderators, we discovered that Reddit, as a platform, does not notify users when their comment gets deleted. Moderators told us that users can figure out the deletion once they either reload the page containing the deleted content (P5), log out and log back in to Reddit (P2), or check the public modlogs (P4, P13). They informed us that it is up to the human moderators to either send the user a private message or leave a comment as a “Reply” to the deleted content detailing the reason for removal. Configuring AutoModerator to notify a user about a content removal and to state the reason for that removal is likewise left to the discretion of the subreddit's human moderators. User bans, on the other hand, are always issued with a message that includes a link to ModMail (a messaging system used to communicate with the moderator team) along with instructions about the appeal process.
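Since notifying the user is left to moderators, the reply or private message they currently write by hand could be templated. The Python sketch below is a hypothetical template, not a Reddit or AutoModerator feature; the function name and its parameters are invented. It names the rule, optionally the filter term that matched, and points to ModMail for appeals.

```python
from typing import Optional


def removal_notice(username: str, rule_id: int, rule_text: str,
                   matched_term: Optional[str] = None) -> str:
    """Compose a removal reply/private message stating why content was removed."""
    parts = [f"Hi u/{username}, your comment was removed under rule {rule_id}: {rule_text}."]
    if matched_term:
        # Disclosing the matched term addresses the hidden word filters discussed earlier.
        parts.append(f"It matched the filtered term “{matched_term}”.")
    parts.append("If you believe this was a mistake, you can appeal by messaging "
                 "the moderators via ModMail.")
    return "\n\n".join(parts)


print(removal_notice("example_user", 3, "No low-effort posts", matched_term="shill me"))
```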

“Comment removals don’t generate a removal notice in any of the subreddits. Bans always are issued with a message that includes a link to ModMail and instructions.” - P10

“I don’t think people can get customized notifications for comments until they configure the auto moderator system.” - P10

“Reddit doesn’t even have a system in place for notifying either. They (subreddit members) notice it (post/comment) disappeared if they log out and don’t see the comment. Bans are very different. Notifications of a ban are built into reddit. the exact details/reasons are for us to provide in that process.” - P2

Moderators did not reach a consensus on silent removals. Equal numbers of moderators in our dataset were for (n=5) and against (n=5) silent removals, while the remaining moderators (n=3) believed that silent removals should be applied depending on the situation.

A few moderators shared that users would be irked by multiple notifications and private messages.

“People would hate getting that many PMs (private messages) about removed comments, most of

Reddit operates the way we do.” - P12

“And I don’t really think that (notifications) would be very necessary, right, because people leave hundreds of comments a day, So it kind of makes Reddit a very bloated system.” - P10

One moderator shared that explicitly pointing out removals could make users belligerent towards

that moderator and could lead to users exhibiting more bad behavior.

“So I find that silent removal, um, both visually and policy wise is the best course of action. If you

negatively reinforce people, they tend to be more pigheaded about it. They tend to be more stubborn

and they will out of spite, try and continue this negative behavior.” - P11

Moderators who opposed silent removals claimed that this practice is pro-censorship and can decrease users’ willingness to participate in the subreddits. For example,

“We message that user and tell them, Hey, we had to remove this. Here is why, or we removed the

comment. We have to do that because the subreddit is devoted to censorship and pointing that out.” -

P1

A few moderators were on the fence about notifying users. One moderator indicated that he notified users only when he could, as the process was labor-intensive.

“I notify people like here is exactly why you’re being punished. But other times I’m just completely

silent and just let them be confused. I’m generally not very consistent cause I’m just like one person,

you know. So if I had to write down a special message for every single person, I banned it would

take up a lot of time.” - P10

PACM on Human-Computer Interaction, Vol. 4, No. GROUP, Article 17. Publication date: January 2019.

17:18 Prerna Juneja, Deepika Rama Subramanian, and Tanushree Mitra

Another point of view that emerged was that silent removals were suitable in certain cases. For

example, one moderator shared that he selectively notifies generally rule-abiding users.

“I think it depends if it’s somebody who’s clearly like a participant in the community, and maybe they, like, break the rules once or twice. Then I think it’s very appropriate to say, Hey, I removed your comment with a public post. Hey, I removed your comment for breaking this rule. Um, if it’s things like cleaning up spam links, I don’t really feel any obligation, to reply to all of those and say I removed it for being spam.” - P8

In summary, while the practice of silently removing content directly violates the SCP transparency guidelines, which ask social media platforms to notify users when their content is taken down, interviews revealed the other side of the picture: the lack of proper infrastructure, coupled with millions of users leaving millions of comments, makes it infeasible for moderators to notify each and every user about content removal. As a result, a few moderators provide feedback selectively to rule-abiding participants.

6.2.2 Unexplained Censorship: Removing posts/comments without specifying a rule/reason. While

not notifying users about content removal is one transparency violation, not including specific reasons for removals in those notifications is another. The SCP clearly states that the minimum level of information required for a notice to be considered adequate includes the “specific clause of the guidelines that the content was found to violate” [49]. During the qualitative analysis, we discovered that moderators seldom leave reasons or point to community rules while removing content. Our interviews showed that moderators’ stance on unexplained censorship was divided.

Five moderators believed that specifying a rule/reason while removing content is helpful for the

community. They pointed out that informing users about the rules they have previously broken serves as a learning opportunity to avoid such behavior in the future.

“We remove and we inform userbase by leaving a comment on why it was removed, it sorts of

educates other users who go through the comments that this is what the moderators remove.” - P7

“We always post a comment specifying which rule was broken and distinguish+sticky it after we’re done with the removal. It is incredibly helpful. Other communities just remove posts ‘learned the lesson or not’. By showing our users which rule they’ve exactly broken, they can learn from it, and avoid specific behavior next time.” - P6

One moderator stated that explanations will improve the image of the moderators and the

subreddit.

“I think it’s helpful, and it makes us look better, right.” - P3

Another moderator recounted that his past experience as a Reddit user shaped his actions as a moderator. Silent removals without reasons had decreased his willingness to participate in those subreddits. Thus, as a moderator, he notified users of his subreddit about content removal and also specified the rules that they had violated.

“Well, those kind of practices, they extremely decreased my willingness to participate in those subs. Now that I found out.... like I didn’t even realize it was happening because you never know your content is being removed. So after I figured I just stopped participating in subreddits that silently remove content, which is a lot of them.” - P9

Five moderators were on the other end of the spectrum and believed that specifying a rule or

reason for removal is not helpful for the community. Some believed that people who sincerely

exchange ideas do not need to be reminded of the rules.

“When dealing with these people, that value their account, and sincerely want to exchange ideas...

people that are of value to us...they don’t need to be told to act civilized...the rules lawyers that

demand a list of forbidden words to avoid are there to use our rules as a game” - P2


Through the Looking Glass: Study of Transparency in Reddit’s Moderation Practices 17:19

Other moderators shared similar sentiments about posting rules. They reported that miscreants

will not change their behavior and will continue to break the subreddit’s rules despite transparent

moderation practices.

“Because the people who are going to violate the rules, they’re going to violate the rules, no matter

what you tell them. And those who really are making a mistake and are in good faith are going to

appeal. Moderators explain why the message was removed very rarely because the type of comments

we remove are only those which are bigoted and that almost ninety nine percent of the time comes

with an instant permanent ban” - P4

One moderator informed us that most of the traffic on Reddit comes from the smartphone app. While the sidebar hosts subreddit rules on Reddit’s desktop client, it is not visible on Reddit’s mobile application. Thus, he did not see any value in mentioning the violated rules.

“Well... funny thing about the sidebar... 80% of the traffic never sees the sidebar (rules) anyway.

mobile users don’t” - P2

Another participant (P5) told us that negative reinforcement sometimes makes the user aggressive, which leads to arguments with the moderator and further removals of the user’s content. At other times, community members supporting the moderator’s decision also start down-voting the offender’s other posted content.

“A comment removal reasons are generally unhelpful because they would be posted in the same

thread as the removed comment, not sent separately. I’ve modded one sub where some of the mods

posted comment removal comments. It never worked out well. Either the user was belligerent about

having one comment removed and proceeded to fight with the removing mod leading to more removed comments. Or other users agree with the mod and then find the person with the removed

comments’ other submissions and downvote them.” - P5

Both P5 and P10 asserted that it is too much of a manual effort to provide reasons for each and every removal, since hundreds of posts and comments get posted on the subreddits. Similarly, P11 shared that he does not find writing reasons worthwhile, since the majority of posts removed from his subreddit are spam.

“Mods are free to send an individual removal PM (personal message) but I don’t know that many do this. Removal reasons in threads are not something that can be handed smoothly now that Reddit has millions of users.” - P5

“There are only a handful of genuine posts that get removed and those people do not learn the reason.

But such posts are in minority and it’s not worth the effort to write reasons and notify users about

every removal because most of them are spam or actually bad posts” - P11

Other moderators selectively provide reasons to users they believe were participating in good

faith. One participant (P8) told us that he provides reasons for removals only to community members

who have broken rules either once or twice. Two moderators revealed that, because they consider posts more important than comments, the moderators of their subreddits provide reasons only for post removals. For example,

“The post are the meat of the subreddit, right? The posts are what’s going to get pushed up to the

front page or what’s going to get seen. We’re less concerned about the comments. ” - P1

Although some moderators favored either selective or no explanations for content removal, a few of them admitted that they would be open to providing reasons if Reddit automated the process. For example,

“I just don’t do that because I would have to, like, manually type message. Um, but if it was just, like a pop up when I clicked, removed post and said, Please select why you’re removing this comment, and then it automatically posted a message. I would use that.” - P8


6.2.3 Vague Rules. Previous studies have shown that community policies and rules can be

unclear and vaguely worded [20, 47], making it difficult for users to understand them. While

mapping posts/comments to rules during our qualitative coding process (RQ1 phase), we discovered