PostgreSQL 7.3.2 Administrator’s Guide

The PostgreSQL Global Development Group

Copyright © 1996-2002 by The PostgreSQL Global Development Group

Legal Notice

PostgreSQL is Copyright © 1996-2002 by the PostgreSQL Global Development Group and is distributed under the terms of the license of the University of California below.

Postgres95 is Copyright © 1994-5 by the Regents of the University of California.

Permission to use, copy, modify, and distribute this software and its documentation for any purpose, without fee, and without a written agreement is hereby granted, provided that the above copyright notice and this paragraph and the following two paragraphs appear in all copies.

IN NO EVENT SHALL THE UNIVERSITY OF CALIFORNIA BE LIABLE TO ANY PARTY FOR DIRECT, INDIRECT, SPECIAL, INCIDENTAL, OR CONSEQUENTIAL DAMAGES, INCLUDING LOST PROFITS, ARISING OUT OF THE USE OF THIS SOFTWARE AND ITS DOCUMENTATION, EVEN IF THE UNIVERSITY OF CALIFORNIA HAS BEEN ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

THE UNIVERSITY OF CALIFORNIA SPECIFICALLY DISCLAIMS ANY WARRANTIES, INCLUDING, BUT NOT LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR A PARTICULAR PURPOSE. THE SOFTWARE PROVIDED HEREUNDER IS ON AN “AS-IS” BASIS, AND THE UNIVERSITY OF CALIFORNIA HAS NO OBLIGATIONS TO PROVIDE MAINTENANCE, SUPPORT, UPDATES, ENHANCEMENTS, OR MODIFICATIONS.

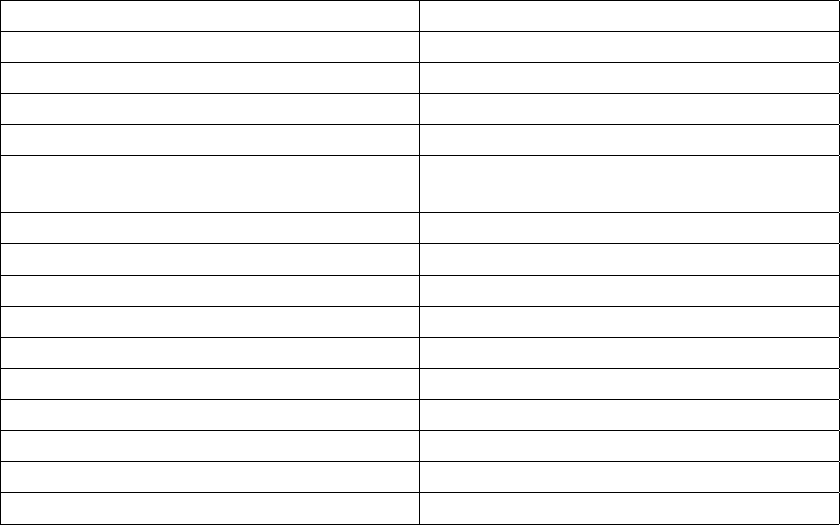

Table of Contents

Preface

1. What is PostgreSQL?

2. A Short History of PostgreSQL

2.1. The Berkeley POSTGRES Project

2.2. Postgres95

2.3. PostgreSQL

3. What’s In This Book

4. Overview of Documentation Resources

5. Terminology and Notation

6. Bug Reporting Guidelines

6.1. Identifying Bugs

6.2. What to report

6.3. Where to report bugs

1. Installation Instructions

1.1. Short Version

1.2. Requirements

1.3. Getting The Source

1.4. If You Are Upgrading

1.5. Installation Procedure

1.6. Post-Installation Setup

1.6.1. Shared Libraries

1.6.2. Environment Variables

1.7. Supported Platforms

2. Installation on Windows

3. Server Run-time Environment

3.1. The PostgreSQL User Account

3.2. Creating a Database Cluster

3.3. Starting the Database Server

3.3.1. Server Start-up Failures

3.3.2. Client Connection Problems

3.4. Run-time Configuration

3.4.1. pg_settings

3.4.2. Planner and Optimizer Tuning

3.4.3. Logging and Debugging

3.4.4. General Operation

3.4.5. WAL

3.4.6. Short Options

3.5. Managing Kernel Resources

3.5.1. Shared Memory and Semaphores

3.5.2. Resource Limits

3.6. Shutting Down the Server

3.7. Secure TCP/IP Connections with SSL

3.8. Secure TCP/IP Connections with SSH Tunnels

4. Database Users and Privileges

4.1. Database Users

4.2. User Attributes

4.3. Groups

4.4. Privileges

4.5. Functions and Triggers

5. Managing Databases

5.1. Overview

5.2. Creating a Database

5.3. Template Databases

5.4. Database Configuration

5.5. Alternative Locations

5.6. Destroying a Database

6. Client Authentication

6.1. The pg_hba.conf file

6.2. Authentication methods

6.2.1. Trust authentication

6.2.2. Password authentication

6.2.3. Kerberos authentication

6.2.4. Ident-based authentication

6.2.4.1. Ident Authentication over TCP/IP

6.2.4.2. Ident Authentication over Local Sockets

6.2.4.3. Ident Maps

6.2.5. PAM Authentication

6.3. Authentication problems

7. Localization

7.1. Locale Support

7.1.1. Overview

7.1.2. Benefits

7.1.3. Problems

7.2. Multibyte Support

7.2.1. Supported character set encodings

7.2.2. Setting the Encoding

7.2.3. Automatic encoding conversion between server and client

7.2.4. What happens if the translation is not possible?

7.2.5. References

7.2.6. History

7.2.7. WIN1250 on Windows/ODBC

7.3. Single-byte character set recoding

8. Routine Database Maintenance Tasks

8.1. General Discussion

8.2. Routine Vacuuming

8.2.1. Recovering disk space

8.2.2. Updating planner statistics

8.2.3. Preventing transaction ID wraparound failures

8.3. Routine Reindexing

8.4. Log File Maintenance

9. Backup and Restore

9.1. SQL Dump

9.1.1. Restoring the dump

9.1.2. Using pg_dumpall

9.1.3. Large Databases

9.1.4. Caveats

9.2. File system level backup

9.3. Migration between releases

10. Monitoring Database Activity

10.1. Standard Unix Tools

10.2. Statistics Collector

10.2.1. Statistics Collection Configuration

10.2.2. Viewing Collected Statistics

10.3. Viewing Locks

11. Monitoring Disk Usage

11.1. Determining Disk Usage

11.2. Disk Full Failure

12. Write-Ahead Logging (WAL)

12.1. General Description

12.1.1. Immediate Benefits of WAL

12.1.2. Future Benefits

12.2. Implementation

12.2.1. Database Recovery with WAL

12.3. WAL Configuration

13. Regression Tests

13.1. Introduction

13.2. Running the Tests

13.3. Test Evaluation

13.3.1. Error message differences

13.3.2. Locale differences

13.3.3. Date and time differences

13.3.4. Floating-point differences

13.3.5. Polygon differences

13.3.6. Row ordering differences

13.3.7. The “random” test

13.4. Platform-specific comparison files

A. Release Notes

A.1. Release 7.3.2

A.1.1. Migration to version 7.3.2

A.1.2. Changes

A.2. Release 7.3.1

A.2.1. Migration to version 7.3.1

A.2.2. Changes

A.3. Release 7.3

A.3.1. Overview

A.3.2. Migration to version 7.3

A.3.3. Changes

A.3.3.1. Server Operation

A.3.3.2. Performance

A.3.3.3. Privileges

A.3.3.4. Server Configuration

A.3.3.5. Queries

A.3.3.6. Object Manipulation

A.3.3.7. Utility Commands

A.3.3.8. Data Types and Functions

A.3.3.9. Internationalization

A.3.3.10. Server-side Languages

A.3.3.11. Psql

A.3.3.12. Libpq

A.3.3.13. JDBC

A.3.3.14. Miscellaneous Interfaces

A.3.3.15. Source Code

A.3.3.16. Contrib

A.4. Release 7.2.4

A.4.1. Migration to version 7.2.4

A.4.2. Changes

A.5. Release 7.2.3

A.5.1. Migration to version 7.2.3

A.5.2. Changes

A.6. Release 7.2.2

A.6.1. Migration to version 7.2.2

A.6.2. Changes

A.7. Release 7.2.1

A.7.1. Migration to version 7.2.1

A.7.2. Changes

A.8. Release 7.2

A.8.1. Overview

A.8.2. Migration to version 7.2

A.8.3. Changes

A.8.3.1. Server Operation

A.8.3.2. Performance

A.8.3.3. Privileges

A.8.3.4. Client Authentication

A.8.3.5. Server Configuration

A.8.3.6. Queries

A.8.3.7. Schema Manipulation

A.8.3.8. Utility Commands

A.8.3.9. Data Types and Functions

A.8.3.10. Internationalization

A.8.3.11. PL/pgSQL

A.8.3.12. PL/Perl

A.8.3.13. PL/Tcl

A.8.3.14. PL/Python

A.8.3.15. Psql

A.8.3.16. Libpq

A.8.3.17. JDBC

A.8.3.18. ODBC

A.8.3.19. ECPG

A.8.3.20. Misc. Interfaces

A.8.3.21. Build and Install

A.8.3.22. Source Code

A.8.3.23. Contrib

A.9. Release 7.1.3

A.9.1. Migration to version 7.1.3

A.9.2. Changes

A.10. Release 7.1.2

A.10.1. Migration to version 7.1.2

A.10.2. Changes

A.11. Release 7.1.1

A.11.1. Migration to version 7.1.1

A.11.2. Changes

A.12. Release 7.1

A.12.1. Migration to version 7.1

A.12.2. Changes

A.13. Release 7.0.3

A.13.1. Migration to version 7.0.3

A.13.2. Changes

A.14. Release 7.0.2

A.14.1. Migration to version 7.0.2

A.14.2. Changes

A.15. Release 7.0.1

A.15.1. Migration to version 7.0.1

A.15.2. Changes

A.16. Release 7.0

A.16.1. Migration to version 7.0

A.16.2. Changes

A.17. Release 6.5.3

A.17.1. Migration to version 6.5.3

A.17.2. Changes

A.18. Release 6.5.2

A.18.1. Migration to version 6.5.2

A.18.2. Changes

A.19. Release 6.5.1

A.19.1. Migration to version 6.5.1

A.19.2. Changes

A.20. Release 6.5

A.20.1. Migration to version 6.5

A.20.1.1. Multiversion Concurrency Control

A.20.2. Changes

A.21. Release 6.4.2

A.21.1. Migration to version 6.4.2

A.21.2. Changes

A.22. Release 6.4.1

A.22.1. Migration to version 6.4.1

A.22.2. Changes

A.23. Release 6.4

A.23.1. Migration to version 6.4

A.23.2. Changes

A.24. Release 6.3.2

A.24.1. Changes

A.25. Release 6.3.1

A.25.1. Changes

A.26. Release 6.3

A.26.1. Migration to version 6.3

A.26.2. Changes

A.27. Release 6.2.1

A.27.1. Migration from version 6.2 to version 6.2.1

A.27.2. Changes

A.28. Release 6.2

A.28.1. Migration from version 6.1 to version 6.2

A.28.2. Migration from version 1.x to version 6.2

A.28.3. Changes

A.29. Release 6.1.1

A.29.1. Migration from version 6.1 to version 6.1.1

A.29.2. Changes

A.30. Release 6.1

A.30.1. Migration to version 6.1

A.30.2. Changes

A.31. Release 6.0

A.31.1. Migration from version 1.09 to version 6.0

A.31.2. Migration from pre-1.09 to version 6.0

A.31.3. Changes

A.32. Release 1.09

A.33. Release 1.02

A.33.1. Migration from version 1.02 to version 1.02.1

A.33.2. Dump/Reload Procedure

A.33.3. Changes

A.34. Release 1.01

A.34.1. Migration from version 1.0 to version 1.01

A.34.2. Changes

A.35. Release 1.0

A.35.1. Changes

A.36. Postgres95 Release 0.03

A.36.1. Changes

A.37. Postgres95 Release 0.02

A.37.1. Changes

A.38. Postgres95 Release 0.01

Bibliography

Index

List of Tables

3-1. pg_settings Columns

3-2. Short option key

3-3. System V IPC parameters

7-1. Character Set Encodings

7-2. Client/Server Character Set Encodings

10-1. Standard Statistics Views

10-2. Statistics Access Functions

10-3. Lock Status System View

List of Examples

6-1. An example pg_hba.conf file

6-2. An example pg_ident.conf file

Preface

1. What is PostgreSQL?

PostgreSQL is an object-relational database management system (ORDBMS) based on POSTGRES,

Version 4.2 [1], developed at the University of California at Berkeley Computer Science Department. The

POSTGRES project, led by Professor Michael Stonebraker, was sponsored by the Defense Advanced

Research Projects Agency (DARPA), the Army Research Office (ARO), the National Science Foundation

(NSF), and ESL, Inc.

PostgreSQL is an open-source descendant of this original Berkeley code. It provides SQL92/SQL99 lan-

guage support and other modern features.

POSTGRES pioneered many of the object-relational concepts now becoming available in some commer-

cial databases. Traditional relational database management systems (RDBMS) support a data model con-

sisting of a collection of named relations, containing attributes of a specific type. In current commercial

systems, possible types include floating point numbers, integers, character strings, money, and dates. It is

commonly recognized that this model is inadequate for future data-processing applications. The relational

model successfully replaced previous models in part because of its “Spartan simplicity”. However, this

simplicity makes the implementation of certain applications very difficult. PostgreSQL offers substantial

additional power by incorporating the following additional concepts in such a way that users can easily

extend the system:

• inheritance

• data types

• functions

Other features provide additional power and flexibility:

• constraints

• triggers

• rules

• transactional integrity

These features put PostgreSQL into the category of databases referred to as object-relational. Note that

this is distinct from those referred to as object-oriented, which in general are not as well suited to support-

ing traditional relational database languages. So, although PostgreSQL has some object-oriented features,

it is firmly in the relational database world. In fact, some commercial databases have recently incorporated

features pioneered by PostgreSQL.

1. http://s2k-ftp.CS.Berkeley.EDU:8000/postgres/postgres.html

2. A Short History of PostgreSQL

The object-relational database management system now known as PostgreSQL (and briefly called Post-

gres95) is derived from the POSTGRES package written at the University of California at Berkeley. With

over a decade of development behind it, PostgreSQL is the most advanced open-source database available

anywhere, offering multiversion concurrency control, supporting almost all SQL constructs (including

subselects, transactions, and user-defined types and functions), and having a wide range of language bind-

ings available (including C, C++, Java, Perl, Tcl, and Python).

2.1. The Berkeley POSTGRES Project

Implementation of the POSTGRES DBMS began in 1986. The initial concepts for the system were pre-

sented in The design of POSTGRES and the definition of the initial data model appeared in The POST-

GRES data model. The design of the rule system at that time was described in The design of the POST-

GRES rules system. The rationale and architecture of the storage manager were detailed in The design of

the POSTGRES storage system.

Postgres has undergone several major releases since then. The first “demoware” system became opera-

tional in 1987 and was shown at the 1988 ACM-SIGMOD Conference. Version 1, described in The im-

plementation of POSTGRES, was released to a few external users in June 1989. In response to a critique

of the first rule system (A commentary on the POSTGRES rules system), the rule system was redesigned

(On Rules, Procedures, Caching and Views in Database Systems) and Version 2 was released in June 1990

with the new rule system. Version 3 appeared in 1991 and added support for multiple storage managers,

an improved query executor, and a rewritten rewrite rule system. For the most part, subsequent releases

until Postgres95 (see below) focused on portability and reliability.

POSTGRES has been used to implement many different research and production applications. These in-

clude: a financial data analysis system, a jet engine performance monitoring package, an asteroid tracking

database, a medical information database, and several geographic information systems. POSTGRES has

also been used as an educational tool at several universities. Finally, Illustra Information Technologies

(later merged into Informix [2], which is now owned by IBM [3]) picked up the code and commercialized it.

POSTGRES became the primary data manager for the Sequoia 2000 [4] scientific computing project in late

1992.

The size of the external user community nearly doubled during 1993. It became increasingly obvious that

maintenance of the prototype code and support was taking up large amounts of time that should have been

devoted to database research. In an effort to reduce this support burden, the Berkeley POSTGRES project

officially ended with Version 4.2.

2. http://www.informix.com/

3. http://www.ibm.com/

4. http://meteora.ucsd.edu/s2k/s2k_home.html

2.2. Postgres95

In 1994, Andrew Yu and Jolly Chen added a SQL language interpreter to POSTGRES. Postgres95 was

subsequently released to the Web to find its own way in the world as an open-source descendant of the

original POSTGRES Berkeley code.

Postgres95 code was completely ANSI C and trimmed in size by 25%. Many internal changes improved

performance and maintainability. Postgres95 release 1.0.x ran about 30-50% faster on the Wisconsin

Benchmark compared to POSTGRES, Version 4.2. Apart from bug fixes, the following were the major

enhancements:

• The query language PostQUEL was replaced with SQL (implemented in the server). Subqueries were

not supported until PostgreSQL (see below), but they could be imitated in Postgres95 with user-defined

SQL functions. Aggregates were re-implemented. Support for the GROUP BY query clause was also

added. The libpq interface remained available for C programs.

• In addition to the monitor program, a new program (psql) was provided for interactive SQL queries

using GNU Readline.

• A new front-end library, libpgtcl, supported Tcl-based clients. A sample shell, pgtclsh, provided

new Tcl commands to interface Tcl programs with the Postgres95 backend.

• The large-object interface was overhauled. The Inversion large objects were the only mechanism for

storing large objects. (The Inversion file system was removed.)

• The instance-level rule system was removed. Rules were still available as rewrite rules.

• A short tutorial introducing regular SQL features as well as those of Postgres95 was distributed with

the source code.

• GNU make (instead of BSD make) was used for the build. Also, Postgres95 could be compiled with an

unpatched GCC (data alignment of doubles was fixed).

2.3. PostgreSQL

By 1996, it became clear that the name “Postgres95” would not stand the test of time. We chose a new

name, PostgreSQL, to reflect the relationship between the original POSTGRES and the more recent ver-

sions with SQL capability. At the same time, we set the version numbering to start at 6.0, putting the

numbers back into the sequence originally begun by the Berkeley POSTGRES project.

The emphasis during development of Postgres95 was on identifying and understanding existing problems

in the backend code. With PostgreSQL, the emphasis has shifted to augmenting features and capabilities,

although work continues in all areas.

Major enhancements in PostgreSQL include:

• Table-level locking has been replaced by multiversion concurrency control, which allows readers to

continue reading consistent data during writer activity and enables hot backups from pg_dump while

the database stays available for queries.

• Important backend features, including subselects, defaults, constraints, and triggers, have been imple-

mented.

• Additional SQL92-compliant language features have been added, including primary keys, quoted iden-

tifiers, literal string type coercion, type casting, and binary and hexadecimal integer input.

• Built-in types have been improved, including new wide-range date/time types and additional geometric

type support.

• Overall backend code speed has been increased by approximately 20-40%, and backend start-up time

has decreased by 80% since version 6.0 was released.

3. What’s In This Book

This book covers topics that are of interest to a PostgreSQL database administrator, including installation

of the software, setup and configuration of the server, management of users and databases,

and maintenance tasks. Anyone who runs a PostgreSQL server, whether for personal use or, especially, in

production, should be familiar with the topics covered in this book.

The information in this book is arranged approximately in the order in which a new user should read it.

But the chapters are self-contained and can be read individually as desired. The information in this book is

presented in a narrative fashion in topical units. Readers looking for a complete description of a particular

command should look into the PostgreSQL Reference Manual.

The first few chapters are written so that they can be understood without prerequisite knowledge, so that

new users who need to set up their own server can begin their exploration with this book. The rest of this

book, which is about tuning and management, presupposes that the reader is familiar with the general use

of the PostgreSQL database system. Readers are encouraged to look at the PostgreSQL Tutorial and the

PostgreSQL User’s Guide for additional information.

This book covers PostgreSQL 7.3.2 only. For information on other versions, please read the documentation

that accompanies that release.

4. Overview of Documentation Resources

The PostgreSQL documentation is organized into several books:

PostgreSQL Tutorial

An informal introduction for new users.

PostgreSQL User’s Guide

Documents the SQL query language environment, including data types and functions, as well as

user-level performance tuning. Every PostgreSQL user should read this.

PostgreSQL Administrator’s Guide

Installation and server management information. Everyone who runs a PostgreSQL server, either for

personal use or for other users, needs to read this.

PostgreSQL Programmer’s Guide

Advanced information for application programmers. Topics include type and function extensibility,

library interfaces, and application design issues.

PostgreSQL Reference Manual

Reference pages for SQL command syntax, and client and server programs. This book is auxiliary to

the User’s, Administrator’s, and Programmer’s Guides.

PostgreSQL Developer’s Guide

Information for PostgreSQL developers. This is intended for those who are contributing to the Post-

greSQL project; application development information appears in the Programmer’s Guide.

In addition to this manual set, there are other resources to help you with PostgreSQL installation and use:

man pages

The Reference Manual’s pages in the traditional Unix man format. There is no difference in content.

FAQs

Frequently Asked Questions (FAQ) lists document both general issues and some platform-specific

issues.

READMEs

README files are available for some contributed packages.

Web Site

The PostgreSQL web site [5] carries details on the latest release, upcoming features, and other

information to make your work or play with PostgreSQL more productive.

Mailing Lists

The mailing lists are a good place to have your questions answered, to share experiences with other

users, and to contact the developers. Consult the User’s Lounge [6] section of the PostgreSQL web site

for details.

Yourself!

PostgreSQL is an open-source effort. As such, it depends on the user community for ongoing support.

As you begin to use PostgreSQL, you will rely on others for help, either through the documentation or

through the mailing lists. Consider contributing your knowledge back. If you learn something which

is not in the documentation, write it up and contribute it. If you add features to the code, contribute

them.

Even those without a lot of experience can provide corrections and minor changes in the documentation,

and that is a good way to start. The documentation mailing list is the place to get going.

5. Terminology and Notation

An administrator is generally a person who is in charge of installing and running the server. A user

could be anyone who is using, or wants to use, any part of the PostgreSQL system. These terms should

not be interpreted too narrowly; this documentation set does not have fixed presumptions about system

administration procedures.

We use /usr/local/pgsql/ as the root directory of the installation and /usr/local/pgsql/data as

the directory with the database files. These directories may vary on your site; details can be found in the

Administrator’s Guide.

5. http://www.postgresql.org

6. http://www.postgresql.org/users-lounge/

In a command synopsis, brackets ([ and ]) indicate an optional phrase or keyword. Anything in braces ({

and }) and containing vertical bars (|) indicates that you must choose one alternative.
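As an illustration of this notation, the following shell sketch checks arguments against a synopsis for a made-up wrapper script. The program name backup_db, its -v flag, and the plain and tar alternatives are invented for this example and are not part of PostgreSQL.

```shell
# Hypothetical example: "backup_db" and its arguments are invented to
# illustrate the notation; no such program ships with PostgreSQL.
#
# Synopsis:  backup_db [-v] {plain|tar} dbname
#   [-v]          brackets: the flag may be omitted
#   {plain|tar}   braces with a bar: exactly one alternative is required

# Return 0 if the arguments match the synopsis, 1 otherwise.
backup_db_check() {
    if [ "$1" = "-v" ]; then
        shift                       # consume the optional flag
    fi
    [ "$#" -eq 2 ] || return 1      # a format and a dbname must remain
    case "$1" in
        plain|tar) ;;               # one of the alternatives was chosen
        *) return 1 ;;              # neither alternative given
    esac
    [ -n "$2" ]                     # dbname must not be empty
}

backup_db_check -v tar mydb && echo "accepted: backup_db -v tar mydb"
backup_db_check plain mydb  && echo "accepted: backup_db plain mydb"
backup_db_check mydb        || echo "rejected: backup_db mydb"
```

The last call is rejected because neither of the alternatives in braces was supplied, while the first two show that the bracketed flag can be given or left out.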

Examples will show commands executed from various accounts and programs. Commands executed from

a Unix shell may be preceded with a dollar sign (“$”). Commands executed from particular user accounts

such as root or postgres are specially flagged and explained. SQL commands may be preceded with “=>”

or will have no leading prompt, depending on the context.

Note: The notation for flagging commands is not universally consistent throughout the documentation

set. Please report problems to the documentation mailing list <[email protected]>.

6. Bug Reporting Guidelines

When you find a bug in PostgreSQL, we want to hear about it. Your bug reports play an important part

in making PostgreSQL more reliable because even the utmost care cannot guarantee that every part of

PostgreSQL will work on every platform under every circumstance.

The following suggestions are intended to assist you in forming bug reports that can be handled in an

effective fashion. No one is required to follow them, but doing so tends to be to everyone’s advantage.

We cannot promise to fix every bug right away. If the bug is obvious, critical, or affects a lot of users,

chances are good that someone will look into it. It could also happen that we tell you to update to a newer

version to see if the bug happens there. Or we might decide that the bug cannot be fixed before some

major rewrite we might be planning is done. Or perhaps it is simply too hard and there are more important

things on the agenda. If you need help immediately, consider obtaining a commercial support contract.

6.1. Identifying Bugs

Before you report a bug, please read and re-read the documentation to verify that you can really do

whatever it is you are trying. If it is not clear from the documentation whether you can do something or

not, please report that too; it is a bug in the documentation. If it turns out that the program does something

different from what the documentation says, that is a bug. That might include, but is not limited to, the

following circumstances:

• A program terminates with a fatal signal or an operating system error message that would point to a

problem in the program. (A counterexample might be a “disk full” message, since you have to fix that

yourself.)

• A program produces the wrong output for any given input.

• A program refuses to accept valid input (as defined in the documentation).

• A program accepts invalid input without a notice or error message. But keep in mind that your idea of

invalid input might be our idea of an extension or compatibility with traditional practice.

• PostgreSQL fails to compile, build, or install according to the instructions on supported platforms.

Here “program” refers to any executable, not only the backend server.

Being slow or resource-hogging is not necessarily a bug. Read the documentation or ask on one of the

mailing lists for help in tuning your applications. Failing to comply with the SQL standard is not necessarily

a bug either, unless compliance for the specific feature is explicitly claimed.

Before you continue, check on the TODO list and in the FAQ to see if your bug is already known. If you

cannot decode the information on the TODO list, report your problem. The least we can do is make the

TODO list clearer.

6.2. What to report

The most important thing to remember about bug reporting is to state all the facts and only facts. Do not

speculate about what you think went wrong, what “it seemed to do”, or which part of the program has a fault.

If you are not familiar with the implementation you would probably guess wrong and not help us a bit.

And even if you are, educated explanations are a great supplement to but no substitute for facts. If we are

going to fix the bug we still have to see it happen for ourselves first. Reporting the bare facts is relatively

straightforward (you can probably copy and paste them from the screen) but all too often important details

are left out because someone thought they did not matter or that the report would be understood anyway.

The following items should be contained in every bug report:

• The exact sequence of steps from program start-up necessary to reproduce the problem. This should

be self-contained; it is not enough to send in a bare select statement without the preceding create table

and insert statements, if the output should depend on the data in the tables. We do not have the time

to reverse-engineer your database schema, and if we are supposed to make up our own data we would

probably miss the problem. The best format for a test case for query-language related problems is a file

that can be run through the psql frontend that shows the problem. (Be sure to not have anything in your

~/.psqlrc start-up file.) An easy start at this file is to use pg_dump to dump out the table declarations

and data needed to set the scene, then add the problem query. You are encouraged to minimize the size

of your example, but this is not absolutely necessary. If the bug is reproducible, we will find it either

way.

If your application uses some other client interface, such as PHP, then please try to isolate the offending

queries. We will probably not set up a web server to reproduce your problem. In any case, remember

to provide the exact input files; do not guess that the problem happens for “large files” or “mid-size

databases”, etc., since this information is too inexact to be of use.

• The output you got. Please do not say that it “didn’t work” or “crashed”. If there is an error message,

show it, even if you do not understand it. If the program terminates with an operating system error,

say which. If nothing at all happens, say so. Even if the result of your test case is a program crash or

otherwise obvious, it might not happen on our platform. The easiest thing is to copy the output from the

terminal, if possible.

Note: In case of fatal errors, the error message reported by the client might not contain all the

information available. Please also look at the log output of the database server. If you do not keep

your server’s log output, this would be a good time to start doing so.

• The output you expected is very important to state. If you just write “This command gives me that

output.” or “This is not what I expected.”, we might run it ourselves, scan the output, and think it

looks OK and is exactly what we expected. We should not have to spend the time to decode the exact

semantics behind your commands. Especially refrain from merely saying that “This is not what SQL

says/Oracle does.” Digging out the correct behavior from SQL is not a fun undertaking, nor do we all

know how all the other relational databases out there behave. (If your problem is a program crash, you

can obviously omit this item.)

• Any command line options and other start-up options, including relevant environment variables or

configuration files that you changed from the default. Again, be exact. If you are using a prepackaged

distribution that starts the database server at boot time, you should try to find out how that is done.

• Anything you did at all differently from the installation instructions.

• The PostgreSQL version. You can run the command SELECT version(); to find out the version of

the server you are connected to. Most executable programs also support a --version option; at least

postmaster --version and psql --version should work. If the function or the options do not

exist then your version is more than old enough to warrant an upgrade. You can also look into the

README file in the source directory or at the name of your distribution file or package name. If you run

a prepackaged version, such as RPMs, say so, including any subversion the package may have. If you

are talking about a CVS snapshot, mention that, including its date and time.

If your version is older than 7.3.2, we will almost certainly tell you to upgrade. There are tons of bug

fixes in each new release; that is why we make new releases.

• Platform information. This includes the kernel name and version, C library, processor, and memory

information. In most cases it is sufficient to report the vendor and version, but do not assume everyone knows

what exactly “Debian” contains or that everyone runs on Pentiums. If you have installation problems

then information about compilers, make, etc. is also necessary.
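The items above can be gathered with a short shell script. The following is only a sketch under stated assumptions: the file names testcase.sql and bugreport.txt, the table t, and the sample query are hypothetical placeholders, and the version commands are run only if the programs are found on the search path.

```shell
#!/bin/sh
# Sketch: collect the facts for a bug report. The file names, the table
# "t", and the query are hypothetical placeholders; substitute your own.
workdir=${TMPDIR:-/tmp}/pgbug.$$
mkdir -p "$workdir"

# A self-contained test case: server version, schema, data, and then
# the query that shows the problem.
cat > "$workdir/testcase.sql" <<'EOF'
SELECT version();
CREATE TABLE t (id integer, name text);
INSERT INTO t VALUES (1, 'one');
SELECT * FROM t WHERE id = 1;   -- replace with the problem query
EOF

{
    # Platform information: kernel name and version, machine type.
    echo "== Platform =="
    uname -a

    # Program versions, for whichever programs are installed.
    echo "== Versions =="
    for prog in psql postmaster; do
        if command -v "$prog" >/dev/null 2>&1; then
            "$prog" --version
        else
            echo "$prog not found on PATH"
        fi
    done
} > "$workdir/bugreport.txt"

echo "Report files written to $workdir"
```

The test case can then be run with psql -X -f testcase.sql dbname, where dbname stands for a scratch database of your choice. The -X option makes psql skip the ~/.psqlrc start-up file. Add the output you got and the output you expected to the report by hand.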

Do not be afraid if your bug report becomes rather lengthy. That is a fact of life. It is better to report

everything the first time than for us to have to squeeze the facts out of you. On the other hand, if your input

files are huge, it is fair to ask first whether somebody is interested in looking into it.

Do not spend all your time figuring out which changes in the input make the problem go away. This will

probably not help solve it. If it turns out that the bug cannot be fixed right away, you will still have time

to find and share your work-around. Also, once again, do not waste your time guessing why the bug exists.

We will find that out soon enough.

When writing a bug report, please choose non-confusing terminology. The software package in total is

called “PostgreSQL”, sometimes “Postgres” for short. If you are specifically talking about the backend

server, mention that; do not just say “PostgreSQL crashes”. A crash of a single backend server process is

quite different from a crash of the parent “postmaster” process; please don’t say “the postmaster crashed”

when you mean a single backend went down, nor vice versa. Also, client programs such as the interactive

frontend “psql” are completely separate from the backend. Please try to be specific about whether the

problem is on the client or server side.

6.3. Where to report bugs

In general, send bug reports to the bug report mailing list. You are requested to use a descriptive

subject for your email message, perhaps parts of the error message.

Another method is to fill in the bug report web-form available at the project’s web site

http://www.postgresql.org/. Entering a bug report this way causes it to be mailed to the

<[email protected]> mailing list.

Do not send bug reports to any of the user mailing lists. These mailing lists are for answering user

questions and their subscribers normally do not wish to receive bug reports. More importantly, they are

unlikely to fix them.

Also, please do not send reports to the developers’ mailing list, pgsql-hackers. This list is for discussing

the development of PostgreSQL and it would be nice if we could keep the bug reports separate. We might

choose to take up a discussion about your bug report on pgsql-hackers, if the problem needs more review.

If you have a problem with the documentation, the best place to report it is the documentation mailing

list. Please be specific about what part of the documentation you are unhappy with.

If your bug is a portability problem on a non-supported platform, send mail to the porting mailing list,

so we (and you) can work on porting PostgreSQL to your platform.

Note: Due to the unfortunate amount of spam going around, all of the above email addresses are

closed mailing lists. That is, you need to be subscribed to a list to be allowed to post on it. (You need

not be subscribed to use the bug report web-form, however.) If you would like to send mail but do not

want to receive list traffic, you can subscribe and set your subscription option to nomail. For more

information send mail to <[email protected]> with the single word help in the body of the

message.

Chapter 1. Installation Instructions

This chapter describes the installation of PostgreSQL from the source code distribution.

1.1. Short Version

./configure

gmake

su

gmake install

adduser postgres

mkdir /usr/local/pgsql/data

chown postgres /usr/local/pgsql/data

su - postgres

/usr/local/pgsql/bin/initdb -D /usr/local/pgsql/data

/usr/local/pgsql/bin/postmaster -D /usr/local/pgsql/data >logfile 2>&1 &

/usr/local/pgsql/bin/createdb test

/usr/local/pgsql/bin/psql test

The long version is the rest of this chapter.

1.2. Requirements

In general, a modern Unix-compatible platform should be able to run PostgreSQL. The platforms that had

received specific testing at the time of release are listed in Section 1.7 below. In the doc subdirectory of

the distribution there are several platform-specific FAQ documents you might wish to consult if you are

having trouble.

The following software packages are required for building PostgreSQL:

• GNU make is required; other make programs will not work. GNU make is often installed under the

name gmake; this document will always refer to it by that name. (On some systems GNU make is the

default tool with the name make.) To test for GNU make enter

gmake --version

It is recommended to use version 3.76.1 or later.

• You need an ISO/ANSI C compiler. Recent versions of GCC are recommended, but PostgreSQL is

known to build with a wide variety of compilers from different vendors.

• gzip is needed to unpack the distribution in the first place. If you are reading this, you probably already

got past that hurdle.

• The GNU Readline library (for comfortable line editing and command history retrieval) will be used

by default. If you don’t want to use it then you must specify the --without-readline option for

configure. (On NetBSD, the libedit library is readline-compatible and is used if libreadline is

not found.)

• To build on Windows NT or Windows 2000 you need the Cygwin and cygipc packages. See the file

doc/FAQ_MSWIN for details.
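
The required tools listed above can be checked before running configure. The following is only a minimal sketch, not part of the PostgreSQL distribution: the function names are made up for illustration, and it assumes a POSIX shell in which command -v is available. It reports whether GNU make (under the name gmake or make), a C compiler named gcc or cc, and gzip are on the PATH. It does not check the version of any tool, nor the Readline library.

```shell
#!/bin/sh
# Sketch: report which build prerequisites are on the PATH.
# All function names here are illustrative, not PostgreSQL tools.

# Print the name of the first command in the argument list that exists.
first_available() {
    for cmd in "$@"; do
        if command -v "$cmd" >/dev/null 2>&1; then
            echo "$cmd"
            return 0
        fi
    done
    return 1
}

# GNU make may be installed as gmake or as make; accept a candidate only
# if its --version output identifies it as GNU Make.
find_gnu_make() {
    for cmd in gmake make; do
        if command -v "$cmd" >/dev/null 2>&1 &&
           "$cmd" --version 2>/dev/null | grep -q 'GNU Make'; then
            echo "$cmd"
            return 0
        fi
    done
    return 1
}

check_prerequisites() {
    if make_cmd=$(find_gnu_make); then
        echo "GNU make: $make_cmd"
    else
        echo "GNU make: not found"
    fi
    if cc_cmd=$(first_available gcc cc); then
        echo "C compiler: $cc_cmd"
    else
        echo "C compiler: not found"
    fi
    if first_available gzip >/dev/null; then
        echo "gzip: found"
    else
        echo "gzip: not found"
    fi
}

check_prerequisites
```

A compiler installed under another name will be reported as not found, so treat the output as a hint rather than a verdict.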

The following packages are optional. They are not required in the default configuration, but they are

needed when certain build options are enabled, as explained below.

• To build the server programming language PL/Perl you need a full Perl installation, including the

libperl library and the header files. Since PL/Perl will be a shared library, the libperl library

must be a shared library also on most platforms. This appears to be the default in recent Perl versions,

but it was not in earlier versions, and in general it is the choice of whoever installed Perl at your site.

If you don’t have the shared library but you need one, a message like this will appear during the build

to point out this fact:

*** Cannot build PL/Perl because libperl is not a shared library.

*** You might have to rebuild your Perl installation. Refer to

*** the documentation for details.

(If you don’t follow the on-screen output you will merely notice that the PL/Perl library object,

plperl.so or similar, will not be installed.) If you see this, you will have to rebuild and install Perl

manually to be able to build PL/Perl. During the configuration process for Perl, request a shared

library.

• To build the Python interface module or the PL/Python server programming language, you need a

Python installation, including the header files. Since PL/Python will be a shared library, the libpython

library must be a shared library also on most platforms. This is not the case in a default Python installation.

If after building and installing you have a file called plpython.so (possibly a different extension),

then everything went well. Otherwise you should have seen a notice like this flying by:

*** Cannot build PL/Python because libpython is not a shared library.

*** You might have to rebuild your Python installation. Refer to

*** the documentation for details.

That means you have to rebuild (part of) your Python installation to supply this shared library.

The catch is that the Python distribution or the Python maintainers do not provide any direct way to do

this. The closest thing we can offer you is the information in Python FAQ 3.30 [1]. On some operating

systems you don’t really have to build a shared library, but then you will have to convince the PostgreSQL

build system of this. Consult the Makefile in the src/pl/plpython directory for details.

• If you want to build Tcl or Tk components (clients and the PL/Tcl language) you of course need a Tcl

installation.

• To build the JDBC driver, you need Ant 1.5 or higher and a JDK. Ant is a special tool for building

Java-based packages. It can be downloaded from the Ant web site [2].

1. http://www.python.org/doc/FAQ.html#3.30

2. http://jakarta.apache.org/ant/index.html

If you have several Java compilers installed, it depends on the Ant configuration which one gets used.

Precompiled Ant distributions are typically set up to read a file .antrc in the current user’s home

directory for configuration. For example, to use a different JDK than the default, this may work:

JAVA_HOME=/usr/local/sun-jdk1.3

JAVACMD=$JAVA_HOME/bin/java

Note: Do not try to build the driver by calling ant or even javac directly. This will not work. Run

gmake normally as described below.

• To enable Native Language Support (NLS), that is, the ability to display a program’s messages in a

language other than English, you need an implementation of the Gettext API. Some operating systems

have this built-in (e.g., Linux, NetBSD, Solaris); for other systems you can download an add-on

package from here: http://www.postgresql.org/~petere/gettext.html. If you are using the gettext

implementation in the GNU C library then you will additionally need the GNU Gettext package for some

utility programs. For any of the other implementations you will not need it.

• Kerberos, OpenSSL, or PAM, if you want to support authentication using these services.

If you are building from a CVS tree instead of using a released source package, or if you want to do

development, you also need the following packages:

• Flex and Bison are needed to build a CVS checkout or if you changed the actual scanner and parser

definition files. If you need them, be sure to get Flex 2.5.4 or later and Bison 1.50 or later. Other yacc

programs can sometimes be used, but doing so requires extra effort and is not recommended. Other lex

programs will definitely not work.

If you need to get a GNU package, you can find it at your local GNU mirror site (see

http://www.gnu.org/order/ftp.html for a list) or at ftp://ftp.gnu.org/gnu/.

Also check that you have sufficient disk space. You will need about 65 MB for the source tree during

compilation and about 15 MB for the installation directory. An empty database cluster takes about 25

MB; databases take about five times the amount of space that a flat text file with the same data would take.

If you are going to run the regression tests you will temporarily need up to an extra 90 MB. Use the df

command to check for disk space.
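
The estimates above can be added up and compared with the output of df. The following is a rough sketch only: the function names are illustrative, and it assumes that the source tree, the installation directory, and the database cluster all live on the same file system, which need not be true at your site. The space for your own data (about five times the flat-text size) is not included.

```shell
#!/bin/sh
# Sketch: compare free disk space with the approximate figures from the text.
# The figures are estimates; the function names are illustrative.

SOURCE_MB=65     # source tree during compilation
INSTALL_MB=15    # installation directory
CLUSTER_MB=25    # empty database cluster
REGRESS_MB=90    # temporary extra space for the regression tests

# Total estimate in MB; pass "yes" to include the regression tests.
required_mb() {
    total=$((SOURCE_MB + INSTALL_MB + CLUSTER_MB))
    if [ "${1:-no}" = "yes" ]; then
        total=$((total + REGRESS_MB))
    fi
    echo "$total"
}

# Free space in MB on the file system that holds the given directory.
# "df -P -k" prints one line per file system in 1024-byte blocks;
# the fourth column is the available space.
free_mb() {
    df -P -k "$1" | awk 'NR == 2 { print int($4 / 1024) }'
}

need=$(required_mb yes)
have=$(free_mb .)
if [ "$have" -ge "$need" ]; then
    echo "enough space: ${have} MB free, about ${need} MB needed"
else
    echo "possibly too little space: ${have} MB free, about ${need} MB needed"
fi
```

Without the regression tests the estimate is 65 + 15 + 25 = 105 MB; with them it is 195 MB.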

1.3. Getting The Source

The PostgreSQL 7.3.2 sources can be obtained by anonymous FTP from

ftp://ftp.postgresql.org/pub/postgresql-7.3.2.tar.gz. Use a mirror if possible. After you have obtained the

file, unpack it:

gunzip postgresql-7.3.2.tar.gz

tar xf postgresql-7.3.2.tar

This will create a directory postgresql-7.3.2 under the current directory with the PostgreSQL sources.

Change into that directory for the rest of the installation procedure.
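
If you would rather keep the compressed file (the gunzip command shown above replaces it with the uncompressed tar file), the two steps can be combined in a pipeline. This is a small sketch; unpack_keep is an illustrative helper name, not a tool shipped with PostgreSQL.

```shell
#!/bin/sh
# Sketch: unpack a gzip-compressed tar archive and keep the .tar.gz file.
# unpack_keep is an illustrative helper name.
unpack_keep() {
    # "gunzip -c" writes the decompressed data to standard output and
    # leaves the input file in place; "tar xf -" reads the archive from
    # standard input and extracts it into the current directory.
    gunzip -c "$1" | tar xf -
}

# Example, assuming the file has been downloaded to the current directory:
#   unpack_keep postgresql-7.3.2.tar.gz
#   cd postgresql-7.3.2
```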

1.4. If You Are Upgrading

The internal data storage format changes with new releases of PostgreSQL. Therefore, if you are upgrading

an existing installation that does not have a version number “7.3.x”, you must back up and

restore your data as shown here. These instructions assume that your existing installation is under the

/usr/local/pgsql directory, and that the data area is in /usr/local/pgsql/data. Substitute your

paths appropriately.

1. Make sure that your database is not updated during or after the backup. This does not affect the

integrity of the backup, but the changed data would of course not be included. If necessary, edit the

permissions in the file /usr/local/pgsql/data/pg_hba.conf (or equivalent) to disallow access

from everyone except you.

2. To back up your database installation, type:

pg_dumpall > outputfile

If you need to preserve OIDs (such as when using them as foreign keys), then use the -o option when

running pg_dumpall.

pg_dumpall does not save large objects. Check Section 9.1.4 if you need to do this.

To make the backup, you can use the pg_dumpall command from the version you are currently

running. For best results, however, try to use the pg_dumpall command from PostgreSQL 7.3.2,

since this version contains bug fixes and improvements over older versions. While this advice might

seem idiosyncratic since you haven’t installed the new version yet, it is advisable to follow it if you

plan to install the new version in parallel with the old version. In that case you can complete the

installation normally and transfer the data later. This will also decrease the downtime.

3. If you are installing the new version at the same location as the old one then shut down the old server,

at the latest before you install the new files:

kill -INT `cat /usr/local/pgsql/data/postmaster.pid`

Versions prior to 7.0 do not have this postmaster.pid file. If you are using such a version you must

find out the process id of the server yourself, for example by typing ps ax | grep postmaster,

and supply it to the kill command.

On systems that have PostgreSQL started at boot time, there is probably a start-up file that will

accomplish the same thing. For example, on a Red Hat Linux system one might find that

/etc/rc.d/init.d/postgresql stop

works. Another possibility is pg_ctl stop.

4. If you are installing in the same place as the old version then it is also a good idea to move the old

installation out of the way, in case you have trouble and need to revert to it. Use a command like this:

mv /usr/local/pgsql /usr/local/pgsql.old

After you have installed PostgreSQL 7.3.2, create a new database directory and start the new server.

Remember that you must execute these commands while logged in to the special database user account

(which you already have if you are upgrading).

/usr/local/pgsql/bin/initdb -D /usr/local/pgsql/data

/usr/local/pgsql/bin/postmaster -D /usr/local/pgsql/data

Finally, restore your data with

/usr/local/pgsql/bin/psql -d template1 -f outputfile

using the new psql.

These topics are discussed at length in Section 9.3, which you are encouraged to read in any case.
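
Since the paths in this section must be substituted for your site, it can help to print the whole sequence with your own paths before typing anything. The following sketch does only that: it executes none of the commands, and upgrade_checklist and its two arguments are illustrative names rather than part of PostgreSQL. It assumes the new version is installed in the same place as the old one, with the data area in the data subdirectory of the installation prefix.

```shell
#!/bin/sh
# Sketch: print the upgrade commands from this section for a given
# installation prefix and dump file. Nothing is executed.
# upgrade_checklist is an illustrative name.
upgrade_checklist() {
    prefix=${1:-/usr/local/pgsql}
    dumpfile=${2:-outputfile}
    # In an unquoted here-document, \` produces a literal backquote.
    cat <<EOF
pg_dumpall > $dumpfile
kill -INT \`cat $prefix/data/postmaster.pid\`
mv $prefix $prefix.old
# ... install PostgreSQL 7.3.2 here, then as the database user:
$prefix/bin/initdb -D $prefix/data
$prefix/bin/postmaster -D $prefix/data
$prefix/bin/psql -d template1 -f $dumpfile
EOF
}

upgrade_checklist /usr/local/pgsql outputfile
```

If you install the new version in parallel with the old one, the kill and mv lines do not apply as printed.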

1.5. Installation Procedure

1. Configuration

The first step of the installation procedure is to configure the source tree for your system and choose