
Introduction

This document describes the command-line tools included with SMRT Link v8.0. These tools are for use by bioinformaticians working with secondary analysis results.

• The command-line tools are located in the $SMRT_ROOT/smrtlink/smrtcmds/bin subdirectory.

Installation

The command-line tools are installed as an integral component of the SMRT Link software. For installation details, see SMRT Link Software Installation (v8.0).

• To install only the command-line tools, use the --smrttools-only option with the installation command, whether for a new installation or an upgrade. Examples:

smrtlink-*.run --rootdir smrtlink --smrttools-only

smrtlink-*.run --rootdir smrtlink --smrttools-only --upgrade

Pacific Biosciences Command-Line Tools

The following sections describe the Pacific Biosciences-supplied command-line tools included in the installation. Third-party tools installed are described at the end of the document.

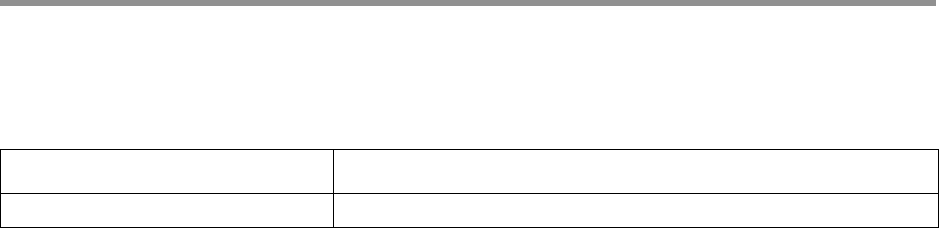

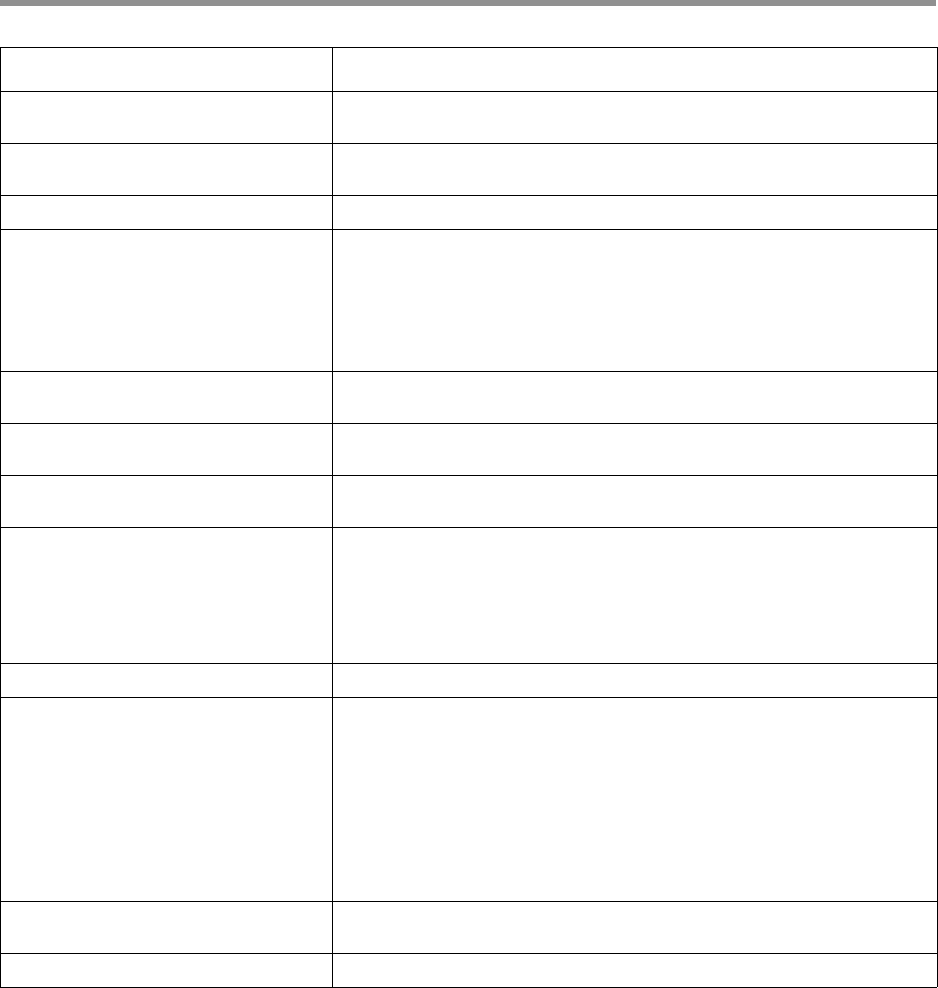

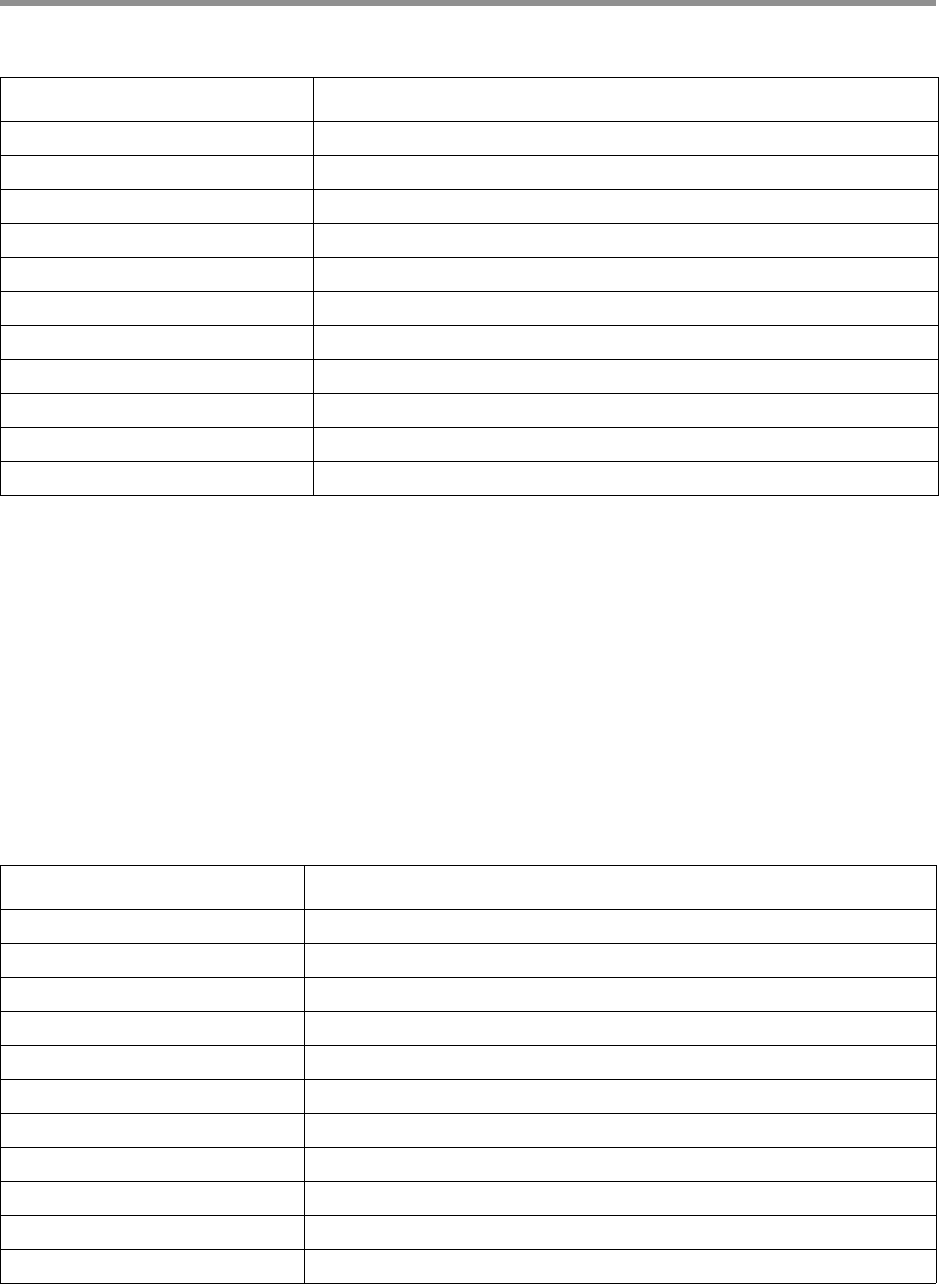

Tool Description

bam2fasta/

bam2fastq

Converts PacBio

®

BAM files into gzipped FASTA and FASTQ files.

See “bam2fasta/bam2fastq” on page 2.

bamsieve Generates a subset of a BAM or PacBio Data Set file based on either a

whitelist of hole numbers, or a percentage of reads to be randomly selected.

See “bamsieve” on page 3.

blasr Aligns long reads against a reference sequence. See “blasr” on page 5.

ccs Calculates consensus sequences from multiple “passes” around a circularized

single DNA molecule (SMRTbell

®

template). See “ccs” on page 10.

dataset Creates, opens, manipulates and writes Data Set XML files.

See “dataset” on page 15.

Demultiplex

Barcodes

Identifies barcode sequences in PacBio single-molecule sequencing data. See

“Demultiplex Barcodes” on page 21.

gcpp Variant-calling tool which provides several variant-calling algorithms for PacBio

sequencing data. See

“gcpp” on page 32.

ipdSummary Detects DNA base-modifications from kinetic signatures.

See “ipdSummary” on page 34.

SMRT

®

Tools Reference Guide

Page 2

bam2fasta/

bam2fastq

The bam2fastx tools convert PacBio BAM files into gzipped FASTA and

FASTQ files, including demultiplexing of barcoded data.

Usage

Both tools have an identical interface and take BAM and/or Data Set files

as input.

Examples

bam2fasta -o projectName m54008_160330_053509.subreads.bam

bam2fastq -o myEcoliRuns m54008_160330_053509.subreads.bam

m54008_160331_235636.subreads.bam

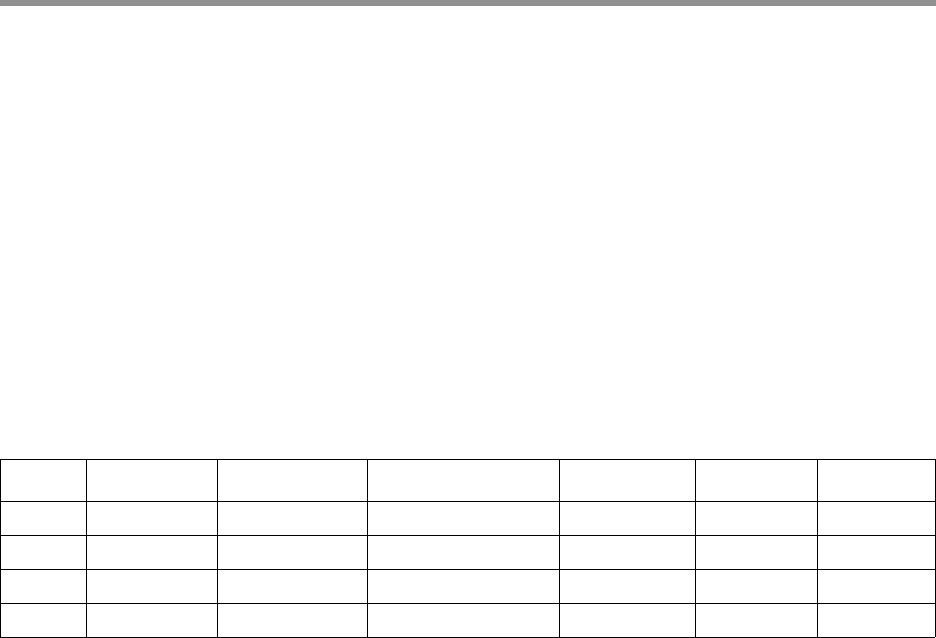

isoseq3 Characterizes full-length transcripts and generates full-length transcript

isoforms, eliminating the need for computational reconstruction.

See “isoseq3” on page 38.

juliet A general-purpose minor variant caller that identifies and phases minor single

nucleotide substitution variants in complex populations.

See “juliet” on page 41.

laa Finds phased consensus sequences from a pooled set of amplicons

sequenced with Pacific Biosciences’ SMRT technology. See

“laa” on page 49.

motifMaker Identifies motifs associated with DNA modifications in prokaryotic genomes.

See “motifMaker” on page 55.

pbalign Aligns PacBio reads to reference sequences; filters aligned reads according to

user-specified filtering criteria; and converts the output to PacBio BAM, SAM,

or PacBio DataSet format. See

“pbalign” on page 57.

pbcromwell Pacific Biosciences’ wrapper for the cromwell scientific workflow engine

used to power SMRT Link. For details on how to use pbcromwell to run

workflows, see

“pbcromwell” on page 60.

pbdagcon Implements DAGCon (Directed Acyclic Graph Consensus); a sequence

consensus algorithm based on using directed acyclic graphs to encode

multiple sequence alignments. See

“pbdagcon” on page 63.

pbindex Creates an index file that enables random access to PacBio-specific data in

BAM files. See

“pbindex” on page 64.

pbmm2 Aligns PacBio reads to reference sequences. A SMRT wrapper for minimap2,

and the successor to blasr and pbalign. See

“pbmm2” on page 65.

pbservice Performs a variety of useful tasks within SMRT Link.

See “pbservice” on page 71.

pbsv Structural variant caller for PacBio reads. See “pbsv” on page 75.

pbvalidate Validates that files produced by PacBio software are compliant with Pacific

Biosciences’ own internal specifications. See

“pbvalidate” on page 79.

sawriter Generates a suffix array file from an input FASTA file.

See “sawriter” on page 81.

summarizeModifica

tions

Generates a GFF summary file from the output of base modification analysis

combined with the coverage summary GFF generated by resequencing

pipelines. See

“summarize Modifications” on page 82.

Tool Description

Page 3

bam2fasta -o myHumanGenomem54012_160401_000001.subreadset.xml

Input Files

• One or more *.bam files

• *.subreadset.xml file (Data Set file)

Output Files

• *.fasta.gz

• *.fastq.gz

bamsieve

The bamsieve tool creates a subset of a BAM or PacBio Data Set file based on either a whitelist of hole numbers, or a percentage of reads to be randomly selected, while keeping all subreads within a read together. Although bamsieve is BAM-centric, it has some support for dataset XML and will propagate metadata, as well as scraps BAM files in the special case of SubreadSets. bamsieve is useful for generating minimal test Data Sets containing a handful of reads.

bamsieve operates in two modes: whitelist/blacklist mode, where the ZMWs to keep or discard are explicitly specified, or percentage/count mode, where a fraction of the ZMWs is randomly selected.

ZMWs may be whitelisted or blacklisted in one of several ways:

• As a comma-separated list on the command line.

• As a flat text file, one ZMW per line.

• As another PacBio BAM or Data Set of any type.
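
The first two whitelist forms are easy to produce from a script. The following is a minimal Python sketch, not part of SMRT Link: the helper names write_zmw_whitelist and as_comma_list are hypothetical, and the only assumption taken from this guide is the format itself (a flat text file with one hole number per line, or a comma-separated list).

```python
from pathlib import Path
import tempfile


def write_zmw_whitelist(hole_numbers, path):
    """Write unique ZMW hole numbers to a flat text file, one per line.

    Hypothetical helper: it only prepares a file in the 'one ZMW per line'
    form described above; it does not call bamsieve.
    """
    unique_sorted = sorted({int(h) for h in hole_numbers})
    Path(path).write_text("".join(f"{h}\n" for h in unique_sorted))
    return unique_sorted


def as_comma_list(hole_numbers):
    """Format hole numbers as a comma-separated list for the command line."""
    return ",".join(str(h) for h in sorted({int(h) for h in hole_numbers}))


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        target = Path(tmp) / "zmws.txt"
        write_zmw_whitelist([222222, 111111, 222222], target)
        print(target.read_text(), end="")
    print(as_comma_list([222222, 111111]))
```

The resulting file or string can then be passed to the --whitelist or --blacklist option.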

Usage

bamsieve [-h] [--version] [--log-file LOG_FILE]
         [--log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL} | --debug | --quiet | -v]
         [--show-zmws] [--whitelist WHITELIST] [--blacklist BLACKLIST]
         [--percentage PERCENTAGE] [-n COUNT] [-s SEED]
         [--ignore-metadata] [--barcodes]
         input_bam [output_bam]

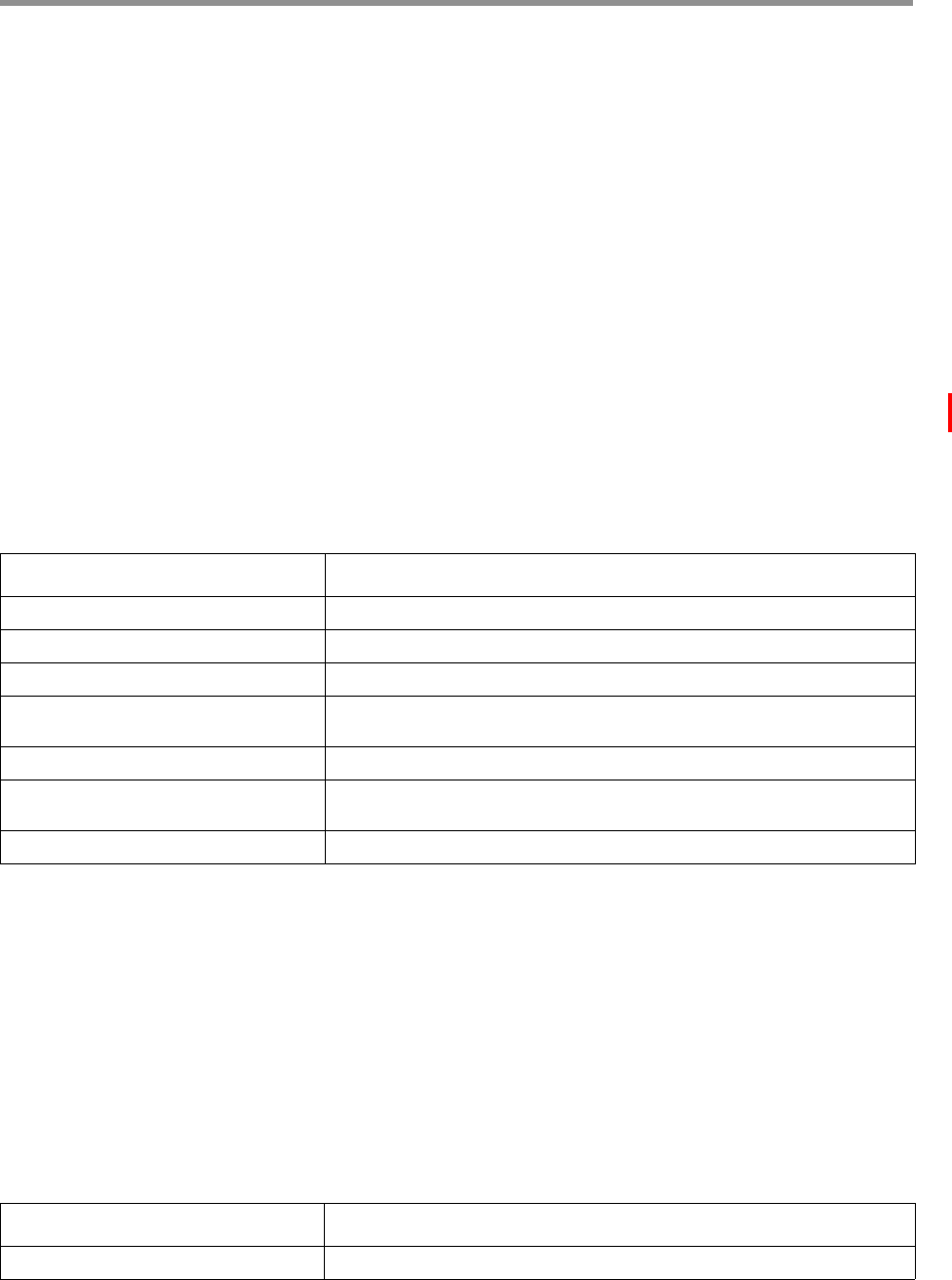

| Required | Description |
| --- | --- |
| input_bam | The name of the input BAM file or Data Set from which reads will be read. |
| output_bam | The name of the output BAM file or Data Set where filtered reads will be written to. (Default = None) |

| Options | Description |
| --- | --- |
| -h, --help | Displays help information and exits. |
| --version | Displays program version number and exits. |
| --log-file LOG_FILE | Writes the log to file. (Default = None, writes to stdout.) |
| --log-level | Specifies the log level; values are [DEBUG, INFO, WARNING, ERROR, CRITICAL]. (Default = WARNING) |
| --debug | Alias for setting the log level to DEBUG. (Default = False) |
| --quiet | Alias for setting the log level to CRITICAL to suppress output. (Default = False) |
| -v, --verbose | Sets the verbosity level. (Default = NONE) |
| --show-zmws | Prints a list of ZMWs and exits. (Default = False) |
| --whitelist WHITELIST | Specifies the ZMWs to include in the output. This can be a comma-separated list of ZMWs, or a file containing a list of ZMWs (one hole number per line), or a BAM/Data Set file. (Default = NONE) |
| --blacklist BLACKLIST | Specifies the ZMWs to exclude from the output. This can be a comma-separated list of ZMWs, or a file containing a list of ZMWs (one hole number per line), or a BAM/Data Set file that specifies ZMWs. (Default = NONE) |
| --percentage PERCENTAGE | Specifies a percentage of a SMRT Cell to recover (Range = 1-100) rather than a specific list of reads. (Default = NONE) |
| -n COUNT, --count COUNT | Specifies a specific number of ZMWs picked at random to recover. (Default = NONE) |
| -s SEED, --seed SEED | Specifies a random seed for selecting a percentage of reads. (Default = NONE) |
| --ignore-metadata | Discards the input Data Set metadata. (Default = False) |
| --barcodes | Specifies that the whitelist or blacklist contains barcode indices instead of ZMW numbers. (Default = False) |

Examples

Pulling out two ZMWs from a BAM file:

$ bamsieve --whitelist 111111,222222 full.subreads.bam sample.subreads.bam

Pulling out two ZMWs from a Data Set file:

$ bamsieve --whitelist 111111,222222 full.subreadset.xml sample.subreadset.xml

Using a text whitelist:

$ bamsieve --whitelist zmws.txt full.subreads.bam sample.subreads.bam

Using another BAM or Data Set as a whitelist:

$ bamsieve --whitelist mapped.alignmentset.xml full.subreads.bam mappable.subreads.bam

Generating a whitelist from a Data Set:

$ bamsieve --show-zmws mapped.alignmentset.xml > mapped_zmws.txt

Anonymizing a Data Set:

$ bamsieve --whitelist zmws.txt --ignore-metadata --anonymize full.subreadset.xml anonymous_sample.subreadset.xml

Removing a read:


$ bamsieve --blacklist 111111 full.subreadset.xml filtered.subreadset.xml

Selecting 0.1% of reads:

$ bamsieve --percentage 0.1 full.subreads.bam random_sample.subreads.bam

Selecting a different 0.1% of reads:

$ bamsieve --percentage 0.1 --seed 98765 full.subreads.bam random_sample.subreads.bam

Selecting just two ZMWs/reads at random:

$ bamsieve --count 2 full.subreads.bam two_reads.subreads.bam

Selecting by barcode:

$ bamsieve --barcodes --whitelist 4,7 full.subreads.bam two_barcodes.subreads.bam

Generating a tiny BAM file that contains only mappable reads:

$ bamsieve --whitelist mapped.subreads.bam full.subreads.bam mappable.subreads.bam

$ bamsieve --count 4 mappable.subreads.bam tiny.subreads.bam

Splitting a Data Set into two halves:

$ bamsieve --percentage 50 full.subreadset.xml split.1of2.subreadset.xml

$ bamsieve --blacklist split.1of2.subreadset.xml full.subreadset.xml split.2of2.subreadset.xml

Extracting unmapped reads:

$ bamsieve --blacklist mapped.alignmentset.xml movie.subreadset.xml unmapped.subreadset.xml
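
The "splitting into two halves" recipe above works because a random selection followed by a blacklist of that selection yields two disjoint sets that together cover the input. The Python sketch below illustrates only that set logic; it is not bamsieve's actual sampling code, and the function name split_zmws and the rounding rule are assumptions made for the illustration.

```python
import random


def split_zmws(zmws, percentage, seed=None):
    """Randomly select `percentage` percent of ZMWs; return (selected, remainder).

    Illustration only: mimics 'select N percent' followed by 'blacklist the
    selection'. The rounding of the sample size is an assumption.
    """
    unique = sorted(set(zmws))
    rng = random.Random(seed)  # a fixed seed makes the selection repeatable
    sample_size = round(len(unique) * percentage / 100.0)
    selected = set(rng.sample(unique, sample_size))
    remainder = set(unique) - selected  # what a blacklist of `selected` keeps
    return selected, remainder


if __name__ == "__main__":
    first_half, second_half = split_zmws(range(1000, 1100), 50, seed=98765)
    print(len(first_half), len(second_half))
    print(first_half.isdisjoint(second_half))
```

Using the same seed reproduces the same selection, which is why changing --seed in the examples above selects a different subset.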

blasr

The blasr tool aligns long reads against a reference sequence, possibly a multi-contig reference.

blasr maps reads to genomes by finding the highest scoring local alignment or set of local alignments between the read and the genome. The initial set of candidate alignments is found by querying a rapidly-searched precomputed index of the reference genome, and then refining until only high-scoring alignments are kept. The base assignment in alignments is optimized and scored using all available quality information, such as insertion and deletion quality values.

Because alignment approximates an exhaustive search, alignment significance is computed by comparing the optimal alignment score to the distribution of all other significant alignment scores.

Usage

blasr {subreads|ccs}.bam genome.fasta --bam --out aligned.bam [--options]

blasr {subreadset|consensusreadset}.xml genome.fasta --bam --out aligned.bam [--options]

blasr reads.fasta genome.fasta [--options]

Input Files

• {subreads|ccs}.bam is in PacBio BAM format, which is the native Sequel®/Sequel II System output format of SMRT reads. PacBio BAM files carry rich quality information (such as insertion, deletion, and substitution quality values) needed for mapping, consensus calling and variant detection. For the PacBio BAM format specifications, see http://pacbiofileformats.readthedocs.io/en/5.1/BAM.html.

• {subreadset|consensusreadset}.xml is in PacBio Data Set format. For the PacBio Data Set format specifications, see http://pacbiofileformats.readthedocs.io/en/5.1/DataSet.html.

• reads.fasta: A multi-FASTA file of reads. While any FASTA file is valid input, BAM or Data Set files are preferable as they contain richer quality value information.

• genome.fasta: A FASTA file to which reads should map, usually containing reference sequences.

Output Files

• aligned.bam: The pairwise alignments for each read, in PacBio BAM format.

Input Options

| Options | Description |
| --- | --- |
| --sa suffixArrayFile | Uses the suffix array sa for detecting matches between the reads and the reference. (The suffix array is prepared by the sawriter program.) |
| --ctab tab | Specifies a table of tuple counts used to estimate match significance, created by printTupleCountTable. While it is quick to generate on the fly, if there are many invocations of blasr, it is useful to precompute the ctab. |
| --regionTable table | Specifies a read-region table in HDF format for masking portions of reads. This may be a single table if there is just one input file, or a fofn (file-of-file names). When a region table is specified, any region table inside the reads.plx.h5 or reads.bax.h5 files is ignored. Note: This option works only with PacBio RS II HDF5 files. |
| --noSplitSubreads | Does not split subreads at adapters. This is typically only useful when the genome is an unrolled version of a known template, and contains template-adapter-reverse-template sequences. (Default = False) |

Options for Aligning Output

| Options | Description |
| --- | --- |
| --bestn n | Provides the top n alignments for the hit policy to select from. (Default = 10) |
| --sam | Writes output in SAM format. |
| --bam | Writes output in PacBio BAM format. |
| --clipping | Uses no/hard/soft clipping for SAM output. (Default = none) |
| --out file | Writes output to file. (Default = terminal) |
| --unaligned file | Outputs reads that are not aligned to file. |
| --m t | If not printing SAM, modifies the output of the alignment. t=0: Prints blast-like output with \|'s connecting matched nucleotides. 1: Prints only a summary: score and position. 2: Prints in Compare.xml format. 3: Prints in vulgar format (Deprecated). 4: Prints a longer tabular version of the alignment. 5: Prints in a machine-parsable format that is read by compareSequences.py. |
| --noSortRefinedAlignments | Once candidate alignments are generated and scored via sparse dynamic programming, they are rescored using local alignment that accounts for different error profiles. Resorting based on the local alignment may change the order in which the hits are returned. (Default = False) |
| --allowAdjacentIndels | Allows adjacent insertions or deletions. Otherwise, adjacent insertions and deletions are merged into one operation. Using quality values to guide pairwise alignments may dictate that the higher probability alignment contains adjacent insertions or deletions. Tools such as GATK do not permit this and so they are not reported by default. |
| --header | Prints a header as the first line of the output file describing the contents of each column. |
| --titleTable tab | Builds a table of reference sequence titles. The reference sequences are enumerated by row, 0,1,... The reference index is printed in alignment results rather than the full reference name. This makes output concise, particularly when very verbose titles exist in reference names. (Default = NULL) |
| --minPctSimilarity p | Reports alignments only if they are greater than p percent identity. (Default = 0) |
| --holeNumbers LIST | Aligns only reads whose ZMW hole numbers are in LIST. LIST is a comma-delimited string of ranges, such as 1,2,3,10-13. This option only works when reads are in base or pulse h5 format. |
| --hitPolicy policy | Specifies how blasr treats multiple hits. all: Reports all alignments. allbest: Reports all equally top-scoring alignments. random: Reports a single random alignment. randombest: Reports a single random alignment from multiple equally top-scoring alignments. leftmost: Reports an alignment which has the best alignment score and has the smallest mapping coordinates in any reference. |

Options for Anchoring Alignment Regions

• These options will have the greatest effects on speed and sensitivity.

| Options | Description |
| --- | --- |
| --minMatch m | Specifies the minimum seed length. A higher value will speed up alignment, but decrease sensitivity. (Default = 12) |
| --maxMatch m, --maxLCPLength m | Stops mapping a read to the genome when the LCP length reaches m. This is useful when the query is part of the reference, for example when constructing pairwise alignments for de novo assembly. (Both options work the same.) |
| --maxAnchorsPerPosition m | Does not add anchors from a position if it matches more than m locations in the target. |
| --advanceExactMatches E | Speeds up alignments by using fewer anchors. Rather than finding anchors between the read and the genome at every position in the read, when an anchor is found at position i in a read of length L, the next position in a read to find an anchor is at i+L-E. Use this when aligning already assembled contigs. (Default = 0) |
| --nCandidates n | Keeps up to n candidates for the best alignment. A large value will slow mapping as the slower dynamic programming steps are applied to more clusters of anchors; this can be a rate-limiting step when reads are very long. (Default = 10) |
| --concordant | Maps all subreads of a ZMW (hole) to where the longest full pass subread of the ZMW aligned to. This requires using the region table and hq regions. This option only works when reads are in base or pulse h5 format. (Default = False) |
| --placeGapConsistently | Produces alignments with gaps placed consistently for better variant calling. See “Gaps When Aligning” for details. |

Options for Refining Hits

| Options | Description |
| --- | --- |
| --refineConcordantAlignments | Refines concordant alignments. This slightly increases alignment accuracy at the cost of time. This option is ignored if --concordant is not set. (Default = False) |
| --sdpTupleSize K | Uses matches of length K to speed dynamic programming alignments. This option controls accuracy of assigning gaps in pairwise alignments once a mapping has been found, rather than mapping sensitivity itself. (Default = 11) |
| --scoreMatrix "score matrix string" | Specifies an alternative score matrix for scoring FASTA reads. The matrix rows and columns are ordered A, C, G, T, N, and the entries a...y are read row by row (row A = a b c d e, row C = f g h i j, row G = k l m n o, row T = p q r s t, row N = u v w x y). The values a...y should be input as a quoted, space-separated string: "a b c ... y". Lower scores are better, so matches should score less than mismatches; for example a,g,m,s = -5 (match), mismatch = 6. |
| --affineOpen value | Sets the penalty for opening an affine alignment. (Default = 10) |
| --affineExtend a | Changes affine (extension) gap penalty. Lower value allows more gaps. (Default = 0) |

Options for Overlap/Dynamic Programming Alignments and Pairwise Overlap for de novo Assembly

| Options | Description |
| --- | --- |
| --useQuality | Uses substitution/insertion/deletion/merge quality values to score gap and mismatch penalties in pairwise alignments. As the insertion and deletion rates are much higher than substitution, this makes many alignments favor an insertion/deletion over a substitution. Naive consensus-calling methods will then often miss substitution polymorphisms. Use this option when calling consensus using the Quiver method. Note: When not using quality values to score alignments, there will be a lower consensus accuracy in homopolymer regions. (Default = False) |
| --affineAlign | Refines alignment using affine guided align. (Default = False) |
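
Typing the 25 values for --scoreMatrix by hand is error-prone, so it can help to generate the string. The following Python sketch is an illustration under stated assumptions, not part of blasr: the function name score_matrix_string is hypothetical, the row/column order A, C, G, T, N and the example values (-5 for a match, 6 for a mismatch) come from the description above, and treating every entry that involves N as a mismatch is an assumption drawn from the "a,g,m,s" example.

```python
BASES = "ACGTN"  # row and column order given in the --scoreMatrix description


def score_matrix_string(match=-5, mismatch=6):
    """Build the quoted-string payload "a b c ... y" for --scoreMatrix.

    Assumption: only A/A, C/C, G/G and T/T (entries a, g, m, s) count as
    matches; every entry involving N is scored as a mismatch.
    """
    values = []
    for row_base in BASES:
        for col_base in BASES:
            is_match = row_base == col_base and row_base != "N"
            values.append(match if is_match else mismatch)
    return " ".join(str(v) for v in values)


if __name__ == "__main__":
    # Wrap the result in double quotes when placing it on the command line.
    print(f'--scoreMatrix "{score_matrix_string()}"')
```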


Options for Filtering Reads

| Options | Description |
| --- | --- |
| --minReadLength l | Ignores reads that have a full length less than l. Subreads may be shorter. (Default = 50) |
| --minSubreadLength l | Does not align subreads of length less than l. (Default = 0) |
| --minAlnLength | Reports alignments only if their lengths are greater than this value. (Default = 0) |

Options for Parallel Alignment

| Options | Description |
| --- | --- |
| --nproc N | Aligns using N processes. All large data structures such as the suffix array and tuple count table are shared. (Default = 1) |
| --start S | Index of the first read to begin aligning. This is useful when multiple instances are running on the same data; for example when on a multi-rack cluster. (Default = 0) |
| --stride S | Aligns one read every S reads. (Default = 1) |

Options for Subsampling Reads

| Options | Description |
| --- | --- |
| --subsample p | Proportion p of reads to randomly subsample and align; expressed as a decimal. (Default = 0) |
| --help | Displays help information and exits. |
| --version | Displays version information using the format MajorVersion.Subversion.SHA1 (Example: 5.3.abcd123) and exits. |

Examples

To align reads from reads.bam to the ecoli_K12 genome, and output in PacBio BAM format:

blasr reads.bam ecoli_K12.fasta --bam --out ecoli_aligned.bam

To use multiple threads:

blasr reads.bam ecoli_K12.fasta --bam --out ecoli_aligned.bam --nproc 16

To use a larger minimum match length, for faster but less sensitive alignments:

blasr reads.bam ecoli_K12.fasta --bam --out ecoli_aligned.bam --nproc 16 --minMatch 15

To produce alignments in a pairwise human-readable format:

blasr reads.bam ecoli_K12.fasta -m 0

To use a precomputed suffix array for faster startup:

sawriter hg19.fasta.sa hg19.fasta #First precompute the suffix array

blasr reads.bam hg19.fasta --sa hg19.fasta.sa
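
The --start and --stride options are described as useful when several blasr instances run on the same data. One plausible way to combine them is to give instance k of N the values --start k --stride N. The Python sketch below checks that this scheme covers every read exactly once; it assumes zero-based read indices (consistent with Default = 0) and that the stride is counted from the start index. The function names and the aligned.K.bam output names are hypothetical.

```python
def read_indices(total_reads, start, stride):
    """Indices visited when aligning every `stride`-th read from `start`.

    Assumption: indices are zero-based and the stride is applied from `start`.
    """
    return list(range(start, total_reads, stride))


def partition_commands(n_instances, reads="reads.bam", genome="genome.fasta"):
    """Build one blasr command line per instance (output names are made up)."""
    return [
        f"blasr {reads} {genome} --bam --out aligned.{k}.bam "
        f"--start {k} --stride {n_instances}"
        for k in range(n_instances)
    ]


if __name__ == "__main__":
    for command in partition_commands(4):
        print(command)
    # Every read index appears in exactly one instance's share.
    covered = sorted(i for k in range(4) for i in read_indices(10, k, 4))
    print(covered == list(range(10)))
```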


Gaps When Aligning

By default, blasr places gaps inconsistently when aligning a sequence and its reverse-complement sequence. It is preferable to place gaps consistently when calling a consensus sequence from multiple alignments or calling single nucleotide variants (SNPs), as consistent alignments make it easier for variant callers to call variants.

Example:

REF  : TTTTTTAAACCCC
READ1: TTTTTTACCCC
READ2: GGGGTAAAAAA

where READ1 and READ2 are reverse-complementary to each other.

In the following alignments, gaps are placed inconsistently:

REF           : TTTTTTAAACCCC
READ1         : TTTTTTA--CCCC
RevComp(READ2): TTTTTT--ACCCC

In the following alignments, gaps are placed consistently, with --placeGapConsistently specified:

REF           : TTTTTTAAACCCC
READ1         : TTTTTTA--CCCC
RevComp(READ2): TTTTTTA--CCCC

To produce alignments with gaps placed consistently for better variant calling, use the --placeGapConsistently option:

blasr query.bam target.fasta --out outfile.bam --bam --placeGapConsistently
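
The example above can be verified with a few lines of Python. This sketch is only a check of the sequences shown in this section, not blasr code: it confirms that READ2 is the reverse complement of READ1, and that both gap placements spell the same read, which is why the aligner is free to choose either one unless told to be consistent. The helper names are hypothetical.

```python
COMPLEMENT = {"A": "T", "C": "G", "G": "C", "T": "A", "N": "N"}


def reverse_complement(seq):
    """Return the reverse complement of a DNA sequence."""
    return "".join(COMPLEMENT[base] for base in reversed(seq))


def ungap(aligned):
    """Remove gap characters from an aligned sequence row."""
    return aligned.replace("-", "")


if __name__ == "__main__":
    read1 = "TTTTTTACCCC"
    read2 = "GGGGTAAAAAA"
    print(reverse_complement(read2) == read1)
    # Both gapped rows from the example encode the same ungapped read.
    print(ungap("TTTTTTA--CCCC") == ungap("TTTTTT--ACCCC") == read1)
```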

ccs

Circular Consensus Sequencing (CCS) calculates consensus sequences from multiple “passes” around a circularized single DNA molecule (SMRTbell® template). CCS uses the Arrow framework to achieve optimal consensus results given the number of passes available.


Input Files

• One .subreads.bam file containing the subreads for each SMRTbell® template sequenced.

Output Files

• A BAM file with one entry for each consensus sequence derived from a ZMW. BAM is a general file format for storing sequence data, which is described fully by the SAM/BAM working group. The CCS output format is a version of this general format, where the consensus sequence is represented by the "Query Sequence". Several tags were added to provide additional meta information. An example BAM entry for a consensus as seen by samtools is shown below.

m141008_060349_42194_c100704972550000001823137703241586_s1_p0/63/ccs 4 * 0 255 * * 0 0 CCCGGGGATCCTCTAGAATGC ~~~~~~~~~~~~~~~~~~~~~ RG:Z:83ba013f np:i:35 rq:f:0.999682 sn:B:f,11.3175,6.64119,11.6261,14.5199 zm:i:63

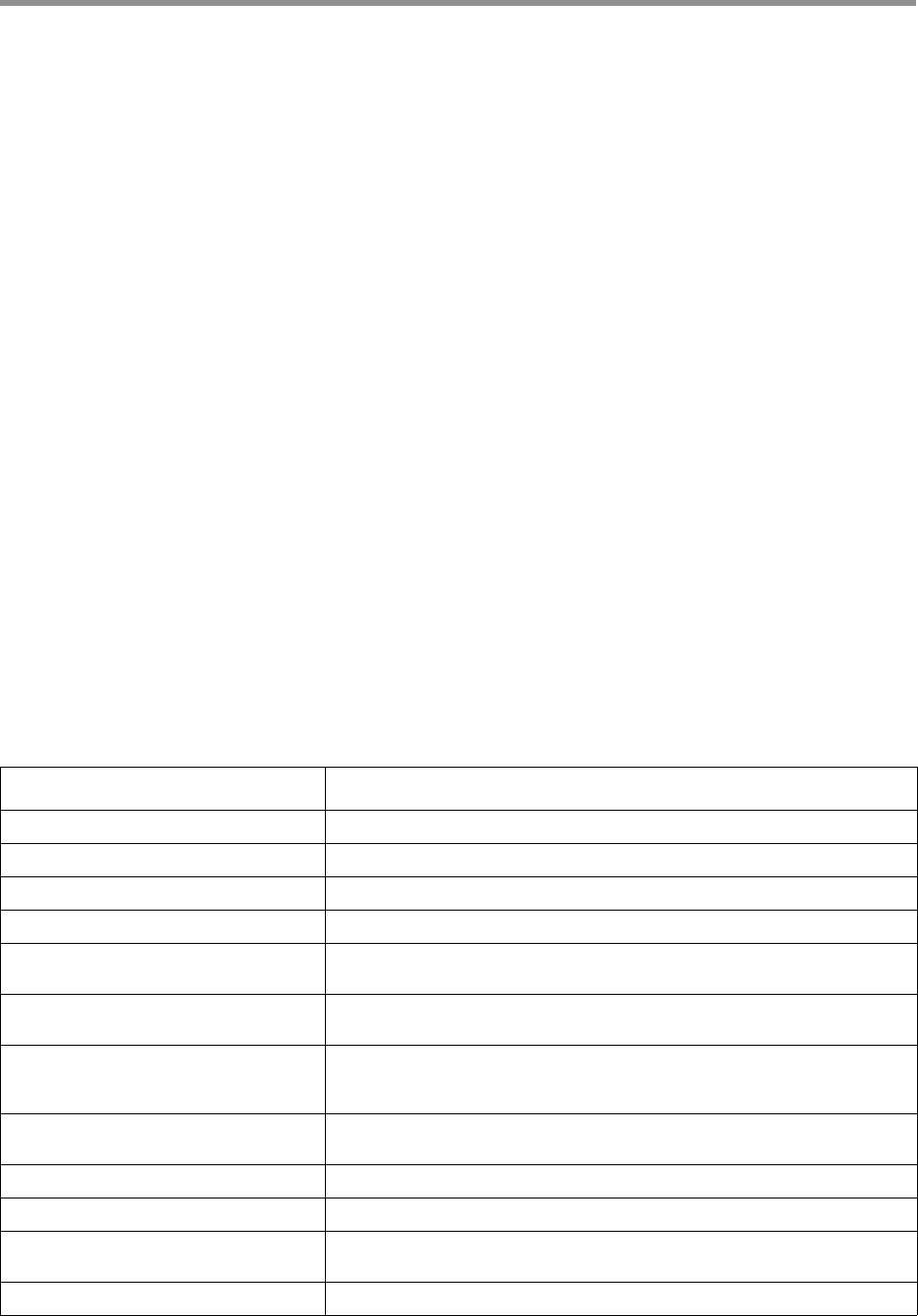

Following are some of the common fields contained in the output BAM file:

| Field | Description |
| --- | --- |
| Query Name | Movie Name / ZMW # /ccs |
| FLAG | Required by the format but meaningless in this context. Always set to 4 to indicate the read is unmapped. |
| Reference Name | Required by the format but meaningless in this context. Always set to *. |
| Mapping Start | Required by the format but meaningless in this context. Always set to 0. |
| Mapping Quality | Required by the format but meaningless in this context. Always set to 255. |
| CIGAR | Required by the format but meaningless in this context. Always set to *. |
| RNEXT | Required by the format but meaningless in this context. Always set to *. |
| PNEXT | Required by the format but meaningless in this context. Always set to 0. |
| TLEN | Required by the format but meaningless in this context. Always set to 0. |
| Consensus Sequence | The consensus sequence generated. |
| Quality Values | The per-base parametric quality metric. For details see “Interpreting QUAL Values”. |
| RG Tag | The read group identifier. |
| bc Tag | A 2-entry array of upstream-provided barcode calls for this ZMW. |
| bq Tag | The quality of the barcode call. (Optional: Depends on barcoded inputs.) |
| np Tag | The number of full passes that went into the subread. (Optional: Depends on barcoded inputs.) |
| rq Tag | The predicted read quality. |
| t2 Tag | The time (in seconds) spent aligning subreads to the draft consensus, prior to polishing. |
| t3 Tag | The time (in seconds) spent polishing the draft consensus, not counting retries. |
| zm Tag | The ZMW hole number. |

Usage

ccs [OPTIONS] INPUT OUTPUT

Example

ccs --min-length 100 myData.subreads.bam myResult.bam

| Required | Description |
| --- | --- |
| Input File Name | The name of a single subreads.bam or a subreadset.xml file to be processed. (Example = myData.subreads.bam) |
| Output File Name | The name of the output BAM file; comes after all other options listed. Valid output files are the BAM and the Dataset .xml formats. (Example = myResult.bam) |

| Options | Description |
| --- | --- |
| --version | Prints the version number. |
| --report-file | Contains a result tally of the outcomes for all ZMWs that were processed. If no file name is given, the report is output to the file ccs_report.txt. In addition to the count of successfully-produced consensus sequences, this file lists how many ZMWs failed various data quality filters (SNR too low, not enough full passes, and so on) and is useful for diagnosing unexpected drops in yield. |
| --min-snr | Removes data that is likely to contain deletions. SNR is a measure of the strength of signal for all 4 channels (A, C, G, T) used to detect base pair incorporation. This value sets the threshold for minimum required SNR for any of the four channels. Data with SNR < 2.5 is typically considered lower quality. (Default = 2.5) |
| --min-length | Specifies the minimum length of the draft consensus to be used for further polishing. If the targeted template is known to be a particular size range, this can filter out alternative DNA templates. (Default = 10) |
| --max-length | Specifies the maximum length of the draft consensus to be used for further polishing. For robust results while avoiding unnecessary computation on unusual data, set to ~20% above the largest expected insert size. (Default = 50000) |
| --min-passes | Specifies the minimum number of passes for a ZMW to be emitted. This is the number of full passes. Full passes must have an adapter hit before and after the insert sequence and so do not include any partial passes at the start and end of the sequencing reaction. It is computed as the mode of the number of passes across all windows. (Default = 3) |
| --min-rq | Specifies the minimum predicted accuracy of a read. ccs generates an accuracy prediction for each read, defined as the expected percentage of matches in an alignment of the consensus sequence to the true read. A value of 0.99 indicates that only reads expected to be 99% accurate are emitted. (Default = 0.99) |
| --num-threads | Specifies how many threads to use while processing. By default, ccs will use as many threads as there are available cores to minimize processing time, but fewer threads can be specified here. |
| --log-file | The name of a log file to use. If none is given, the logging information is printed to STDERR. (Example: mylog.txt) |
| --log-level | Specifies verbosity of log data to produce. By setting --log-level=DEBUG, you can obtain detailed information on what ZMWs were dropped during processing, as well as any errors which may have appeared. (Default = INFO) |
| --skip-polish | After constructing the draft consensus, does not proceed with the polishing steps. This is significantly faster, but generates less accurate data with no RQ or QUAL values associated with each base. |
| --by-strand | Separately generates a consensus sequence from the forward and reverse strands. Useful for identifying heteroduplexes formed during sample preparation. |
| --chunk | Operates on a single chunk. Format i/N, where i in [1,N]. Examples: 3/24 or 9/9. |
| --max-chunks | Determines the maximum number of chunks, given an input file. |
| --modelPath | Specifies the path to a model file or directory containing model files. |
| --modelSpec | Specifies the name of the chemistry or model to use, overriding the default selection. |

Interpreting QUAL Values

The QUAL value of a read is a measure of the posterior likelihood of an error at a particular position. Increasing QUAL values are associated with a decreasing probability of error. For indels and homopolymers, there is ambiguity as to which QUAL value is associated with the error probability. Shown below are different types of alignment errors, with a * indicating which sequence base should be associated with the alignment error.

Mismatch

       *
ccs: ACGTATA
ref: ACATATA

Deletion

        *
ccs: AC-TATA
ref: ACATATA


Insertion

       *
ccs: ACGTATA
ref: AC-TATA

Homopolymer Insertion or Deletion

Indels should always be left-aligned, and the error probability is only given for the first base in a homopolymer.

       *                    *
ccs: ACGGGGTATA     ccs: AC-GGGTATA
ref: AC-GGGTATA     ref: ACGGGGTATA
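
To relate QUAL characters and the rq tag to error probabilities, the following Python sketch may help. It assumes the standard SAM text convention (each QUAL character is the Phred value plus 33, and a Phred value Q corresponds to an error probability of 10^(-Q/10)), which is how samtools prints the QUAL column; the function names are hypothetical and nothing here is taken from the ccs source code.

```python
import math


def qual_char_to_phred(ch):
    """Decode one QUAL character as printed by samtools (Phred+33)."""
    return ord(ch) - 33


def phred_to_error_probability(q):
    """Convert a Phred-scaled quality value to an error probability."""
    return 10 ** (-q / 10)


def accuracy_to_phred(rq):
    """Express a predicted accuracy (such as the rq tag) on the Phred scale."""
    return -10 * math.log10(1 - rq)


if __name__ == "__main__":
    # '~' is the QUAL character used in the example BAM entry earlier.
    q = qual_char_to_phred("~")
    print(q, phred_to_error_probability(q))
    # rq value taken from the same example entry.
    print(round(accuracy_to_phred(0.999682), 2))
```

Higher QUAL values map to smaller error probabilities, matching the statement that increasing QUAL values are associated with a decreasing probability of error.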

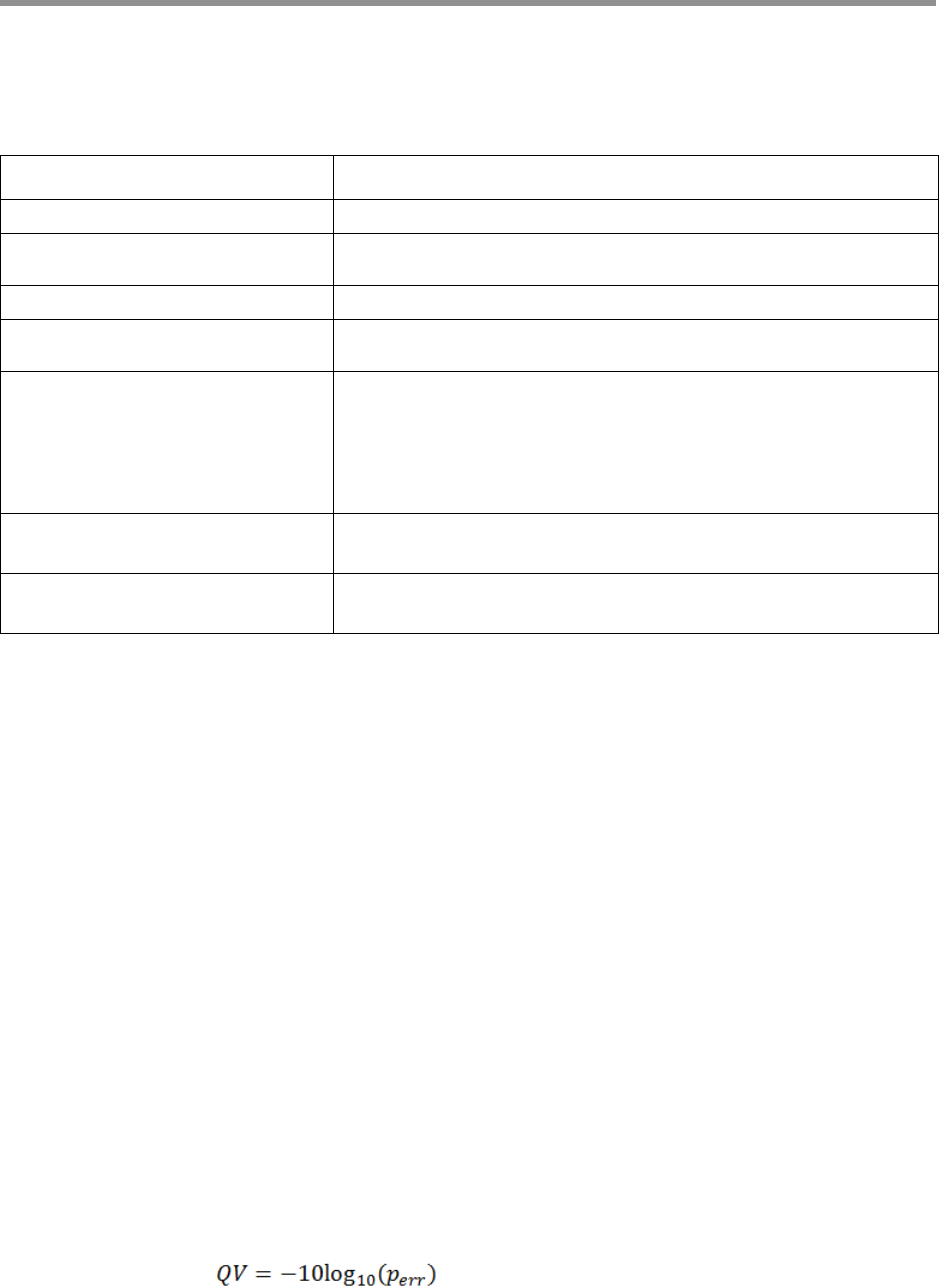

CCS Yield Report

The CCS Report specifies the number of ZMWs that successfully produced consensus sequences, as well as a count of how many ZMWs did not produce a consensus sequence for various reasons. The entries in this report, as well as parameters used to increase or decrease the number of ZMWs that pass various filters, are shown in the table below.

The first part is a summary of inputs and outputs:

The second part explains in details the exclusive ZMW count for (C),

those ZMWs that were filtered:

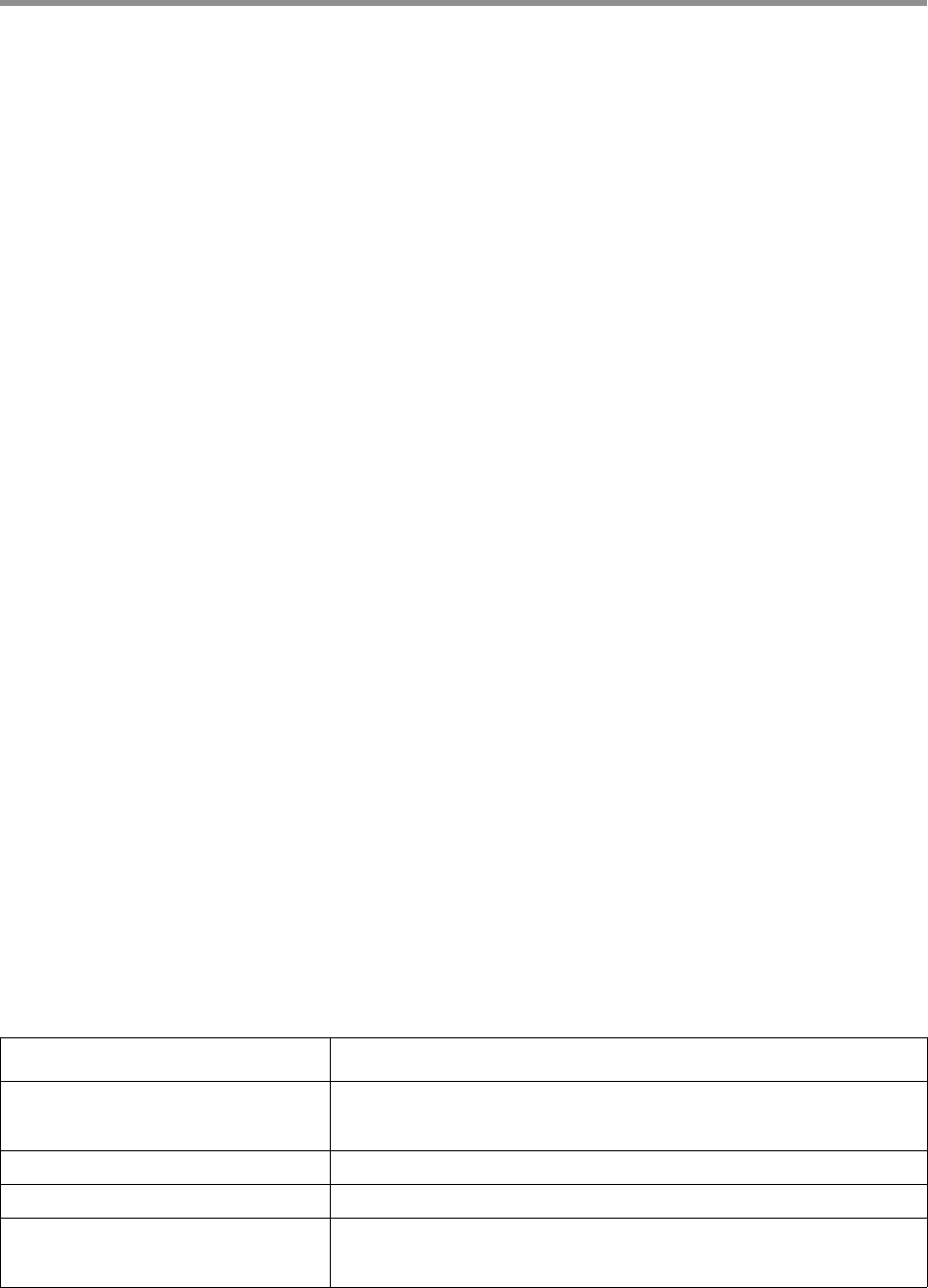

ZMW Results

Parameters Affecting

Results

Description

ZMWs input (A) None The number of input ZMWs.

ZMWs generating CCS (B) All custom processing settings The number of CCS reads successfully produced on

the first attempt, using the fast windowed approach.

ZMWs filtered (C) All custom processing settings The number of ZMWs reads that failed producing a

CCS read.

ZMW Results

Parameters Affecting

Results

Description

No usable subreads --minReadScore,

--minLength,

--maxLength

The ZMW had no usable subreads. Either there were no

subreads, or all subreads had lengths outside the range

<50% or >200% of the median subread length.

Below SNR threshold --min-snr The ZMW had at least one channel's SNR below the

minimum threshold.

Lacking full passes --min-passes There were not enough subreads that had an adapter at

the start and end of the subread (a "full pass").

Heteroduplexes None The SMRTbell contains a heteroduplex. In this case, it is

not clear what the consensus should be and so the ZMW is

dropped.

Min coverage violation None The ZMW is damaged on one strand and cannot be

polished reliably.

Page 15

dataset

The dataset tool creates, opens, manipulates and writes Data Set XML

files. The commands allow you to perform operations on the various types

of data held by a Data Set XML: Merge, split, write, and so on.

Usage

dataset [-h] [--version] [--log-file LOG_FILE]

[--log-level {DEBUG,INFO,WARNING,ERROR,CRITICAL} | --debug | --quiet | -v]

[--strict] [--skipCounts]

{create,filter,merge,split,validate,summarize,consolidate,loadstats,newuuid,loadmetada

ta,copyto,absolutize,relativize}

create Command: Create an XML file from a fofn (file-of-file names) or

BAM file. Possible types:

SubreadSet, AlignmentSet, ReferenceSet,

Draft generation error None Subreads do not match the generated draft sequence,

even after multiple tries.

Draft above --max-

length

--max-length The draft sequence was above the maximum length

threshold.

Draft below --min-

length

--min-length The draft sequence was below the minimum length

threshold.

Lacking usable

subreads

None Too many subreads were dropped while polishing

CCS did not converge None The consensus sequence did not converge after the

maximum number of allowed rounds of polishing.

CCS below minimum

predicted accuracy

--min-rq Each CCS read has a predicted level of accuracy

associated with it. Reads that are below the minimum

specified threshold are removed.

Unknown error during

processing

None These should not occur.

ZMW Results

Parameters Affecting

Results

Description

Options Description

-h, --help Displays help information and exits.

<Command> -h Displays help for a specific command.

-v, --version Displays program version number and exits.

--log-file LOG_FILE Writes the log to file. (Default = None, writes to stdout.)

--log-level Specifies the log level; values are [DEBUG, INFO, WARNING, ERROR,

CRITICAL]. (Default = INFO)

--debug Alias for setting the log level to DEBUG. (Default = False)

--quiet Alias for setting the log level to CRITICAL to suppress output.

(Default = False)

-v Sets the verbosity level. (Default = NONE)

--strict Turns on strict tests and display all errors. (Default = False)

--skipCounts Skips updating NumRecords and TotalLength counts.

(Default = False)

Page 16

HdfSubreadSet, BarcodeSet, ConsensusAlignmentSet,

ConsensusReadSet, ContigSet

.

dataset create [-h] [--type DSTYPE] [--name DSNAME] [--generateIndices]
               [--metadata METADATA] [--novalidate] [--relative]
               outfile infile [infile ...]

Required    Description
outfile    The name of the XML file to create.
infile    The fofn (file-of-file-names) or BAM file(s) to convert into an XML file.

Options    Description
--type DSTYPE    Specifies the type of XML file to create. (Default = NONE)
--name DSNAME    The name of the new Data Set XML file.
--generateIndices    Generates index files (.pbi and .bai for BAM, .fai for FASTA). Requires samtools/pysam and pbindex. (Default = FALSE)
--metadata METADATA    A metadata.xml file (or Data Set XML) to supply metadata. (Default = NONE)
--novalidate    Specifies not to validate the resulting XML. Leaves the paths as they are.
--relative    Makes the included paths relative instead of absolute. This is not compatible with --novalidate.

Example

The following example shows how to use the dataset create command
to create a barcode file:

$ dataset create --generateIndices --name my_barcodes --type BarcodeSet \
    my_barcodes.barcodeset.xml my_barcodes.fasta

filter Command: Filter an XML file using filters and threshold values.

• Suggested filters: accuracy, bc, bcf, bcq, bcr, bq, cx, length, movie,
  n_subreads, pos, qend, qname, qstart, readstart, rname, rq, tend,
  tstart, zm.
• More resource-intensive filter: [qs]

Note: Multiple filters with different names are ANDed together. Multiple
filters with the same name are ORed together, duplicating existing
requirements.

dataset filter [-h] infile outfile filters [filters ...]

Required    Description
infile    The name of the XML file to filter.
outfile    The name of the output filtered XML file.
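
The AND/OR rule in the note for the filter command can be illustrated with a small Python sketch. This is not how the dataset tool is implemented; the record layout (a dict per read), the `(name, operator, value)` tuple form and the operator set are assumptions made purely for illustration.

```python
import operator
from collections import defaultdict

# Comparison operators assumed for this sketch (not the tool's full grammar).
OPS = {">": operator.gt, "<": operator.lt, ">=": operator.ge,
       "<=": operator.le, "=": operator.eq, "!=": operator.ne}


def record_passes(record, filters):
    """Return True if `record` satisfies `filters`.

    `filters` is a list of (name, op, value) tuples. Filters that share a
    name are ORed together; the per-name groups are then ANDed.
    """
    groups = defaultdict(list)
    for name, op, value in filters:
        groups[name].append(OPS[op](record[name], value))
    return all(any(results) for results in groups.values())


# Hypothetical reads described only by the fields being filtered on.
reads = [
    {"rq": 0.90, "length": 1200},
    {"rq": 0.80, "length": 1200},
    {"rq": 0.90, "length": 300},
]
filters = [("rq", ">", 0.85), ("length", ">", 1000)]
kept = [r for r in reads if record_passes(r, filters)]
```

With two differently named filters, a read is kept only if it passes both; two `length` filters would instead keep a read that passes either one.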

Page 17

Required    Description (dataset filter, continued)
filters    The values to filter on. (Example: rq>0.85)

merge Command: Combine XML files.

dataset merge [-h] outfile infiles [infiles ...]

Required    Description
infiles    The names of the XML files to merge.
outfile    The name of the output XML file.

split Command: Split a Data Set XML file.

dataset split [-h] [--contigs] [--barcodes] [--zmws] [--byRefLength]
              [--noCounts] [--chunks CHUNKS] [--maxChunks MAXCHUNKS]
              [--targetSize TARGETSIZE] [--breakContigs]
              [--subdatasets] [--outdir OUTDIR]
              infile [outfiles...]

Required    Description
infile    The name of the XML file to split.

Options    Description
outfiles    The names of the resulting XML files.
--contigs    Splits the XML file based on contigs. (Default = FALSE)
--barcodes    Splits the XML file based on barcodes. (Default = FALSE)
--zmws    Splits the XML file based on ZMWs. (Default = FALSE)
--byRefLength    Splits contigs by contig length. (Default = TRUE)
--noCounts    Updates the Data Set counts after the split. (Default = FALSE)
--chunks x    Splits contigs into x total windows. (Default = 0)
--maxChunks x    Splits the contig list into at most x groups. (Default = 0)
--targetSize x    Specifies the minimum number of records per chunk. (Default = 5000)
--breakContigs    Breaks contigs to get closer to maxCounts. (Default = False)
--subdatasets    Splits the XML file based on subdatasets. (Default = False)
--outdir OUTDIR    Specifies an output directory for the resulting XML files. (Default = <in-place>, not the current working directory.)

validate Command: Validate XML and ResourceId files. (This is an
internal testing functionality that may be useful.)

Note: This command requires that pyxb (not distributed with SMRT Link)
be installed. If not installed, validate simply checks that the files
pointed to in ResourceIds exist.

Page 18

dataset validate [-h] [--skipFiles] infile

Required    Description
infile    The name of the XML file to validate.

Options    Description
--skipFiles    Skips validating external resources. (Default = False)

summarize Command: Summarize a Data Set XML file.

dataset summarize [-h] infile

Required    Description
infile    The name of the XML file to summarize.

consolidate Command: Consolidate XML files.

dataset consolidate [-h] [--numFiles NUMFILES] [--noTmp]
                    infile datafile xmlfile

Required    Description
infile    The name of the XML file to consolidate.
datafile    The name of the resulting data file.
xmlfile    The name of the resulting XML file.

Options    Description
--numFiles x    Specifies the number of data files to produce. (Default = 1)
--noTmp    Does not copy to a temporary location to ensure local disk use. (Default = False)

loadstats Command: Load an sts.xml file containing pipeline statistics
into a Data Set XML file.

dataset loadstats [-h] [--outfile OUTFILE] infile statsfile

Required    Description
infile    The name of the Data Set XML file to modify.
statsfile    The name of the .sts.xml file to load.

Options    Description
--outfile OUTFILE    The name of the XML file to output. (Default = None)

Page 19

newuuid Command: Refresh a Data Set's Unique ID.

dataset newuuid [-h] [--random] infile

Required    Description
infile    The name of the XML file to refresh.

Options    Description
--random    Generates a random UUID, instead of a hash. (Default = False)

loadmetadata Command: Load a .metadata.xml file into a Data Set
XML file.

dataset loadmetadata [-h] [--outfile OUTFILE] infile metadata

Required    Description
infile    The name of the Data Set XML file to modify.
metadata    The .metadata.xml file to load, or Data Set to borrow from.

Options    Description
--outfile OUTFILE    Specifies the XML file to output. (Default = None)

copyto Command: Copy a Data Set and resources to a new location.

dataset copyto [-h] [--relative] infile outdir

Required    Description
infile    The name of the XML file to copy.
outdir    The directory to copy to.

Options    Description
--relative    Makes the included paths relative instead of absolute. (Default = False)

absolutize Command: Make the paths in an XML file absolute.

dataset absolutize [-h] [--outdir OUTDIR] infile

Required    Description
infile    The name of the XML file whose paths should be absolute.

Options    Description
--outdir OUTDIR    Specifies an optional output directory. (Default = None)

Page 20

relativize Command: Make the paths in an XML file relative.

dataset relativize [-h] infile

Required    Description
infile    The name of the XML file whose paths should be relative.

Example - Filter Reads

To filter one or more BAM files' worth of subreads, aligned or otherwise,
and then place them into a single BAM file:

# usage: dataset filter <in_fn.xml> <out_fn.xml> <filters>
dataset filter in_fn.subreadset.xml filtered_fn.subreadset.xml 'rq>0.85'

# usage: dataset consolidate <in_fn.xml> <out_data_fn.bam> <out_fn.xml>
dataset consolidate filtered_fn.subreadset.xml consolidate.subreads.bam \
    out_fn.subreadset.xml

The filtered Data Set and the consolidated Data Set should be
read-for-read equivalent when used with SMRT® Analysis software.

Example - Resequencing Pipeline

• Align two movies' worth of subreads in two SubreadSets to a reference.
• Merge the subreads together.
• Split the subreads into Data Set chunks by contig.
• Process using gcpp on a chunkwise basis (in parallel).

1. Align each movie to the reference, producing a Data Set with one BAM
file for each execution:

pbalign movie1.subreadset.xml referenceset.xml movie1.alignmentset.xml
pbalign movie2.subreadset.xml referenceset.xml movie2.alignmentset.xml

2. Merge the files into a FOFN-like Data Set; BAMs are not touched:

# dataset merge <out_fn> <in_fn> [<in_fn> <in_fn> ...]
dataset merge merged.alignmentset.xml movie1.alignmentset.xml movie2.alignmentset.xml

3. Split the Data Set into chunks by contig name; BAMs are not touched:

– Note that supplying output files splits the Data Set into that many
  output files (up to the number of contigs), with multiple contigs per
  file.
– Not supplying output files splits the Data Set into one output file per
  contig, named automatically.
– Specifying a number of chunks instead will produce that many files,
  with contig or even subcontig (reference window) splitting.

dataset split --contigs --chunks 8 merged.alignmentset.xml

Page 21

4. Process the chunks:

gcpp --reference referenceset.xml --output \
    chunk1consensus.fasta,chunk1consensus.fastq,chunk1consensus.vcf,chunk1consensus.gff \
    chunk1contigs.alignmentset.xml

The chunking works by duplicating the original merged Data Set (no BAM

duplication) and adding filters to each duplicate such that only reads

belonging to the appropriate contigs are emitted. The contigs are

distributed among the output files in such a way that the total number of

records per chunk is about even.
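
The text does not say which algorithm dataset split uses to even out the record counts. As an illustration only, the following Python sketch shows one common way to get roughly balanced chunks: a greedy assignment that places the largest contig first into whichever chunk is currently lightest. The contig names and counts are hypothetical.

```python
import heapq


def distribute_contigs(record_counts, n_chunks):
    """Greedy balancing: largest contig first, into the lightest chunk so far.

    `record_counts` maps contig name -> number of records. Returns a list of
    `n_chunks` lists of contig names. Illustrative only; the real tool may
    use a different strategy.
    """
    heap = [(0, i) for i in range(n_chunks)]  # (records so far, chunk index)
    heapq.heapify(heap)
    chunks = [[] for _ in range(n_chunks)]
    ordered = sorted(record_counts.items(), key=lambda kv: (-kv[1], kv[0]))
    for name, count in ordered:
        total, idx = heapq.heappop(heap)
        chunks[idx].append(name)
        heapq.heappush(heap, (total + count, idx))
    return chunks


# Hypothetical per-contig record counts.
counts = {"contigA": 900, "contigB": 500, "contigC": 400, "contigD": 100}
chunks = distribute_contigs(counts, 2)
totals = [sum(counts[c] for c in chunk) for chunk in chunks]
```

In the real tool each chunk is a copy of the merged Data Set with contig filters attached, so no BAM data is moved; the sketch covers only the assignment of contigs to chunks.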

Demultiplex Barcodes

The Demultiplex Barcodes application identifies barcode sequences in
PacBio single-molecule sequencing data. It replaced pbbarcode and
bam2bam for demultiplexing, starting with SMRT® Analysis v5.1.0.

Demultiplex Barcodes can demultiplex samples that have a unique per-

sample barcode pair and were pooled and sequenced on the same SMRT

Cell. There are four different methods for barcoding samples with PacBio

technology:

1. Sequence-specific primers

2. Barcoded universal primers

3. Barcoded adapters

4. Linear Barcoded Adapters for Probe-based Captures

Page 22

In addition, there are three different barcode library designs. As

Demultiplex Barcodes supports raw subread and CCS read

demultiplexing, the following terminology is based on the per (sub-) read

view.

Page 23

In the overview above, the input sequence is flanked by adapters on both

sides. The bases adjacent to an adapter are barcode regions. A read can

have up to two barcode regions, leading and trailing. Either or both
adapters can be missing, in which case the leading and/or trailing region
is not identified.

For symmetric and tailed library designs, the same barcode is attached

to both sides of the insert sequence of interest. The only difference is the

orientation of the trailing barcode. For barcode identification, one read with

a single barcode region is sufficient.

For the asymmetric design, different barcodes are attached to the sides

of the insert sequence of interest. To identify the different barcodes, a read

with leading and trailing barcode regions is required.

Output barcode pairs are generated from the identified barcodes. The
barcode names are combined using "--", for example bc1002--bc1054. The
sort order is defined by the barcode indices, starting with the lowest.
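
The naming rule for output barcode pairs can be sketched in a few lines of Python. The function name and the index-to-name list below are hypothetical; only the "--" separator and the lowest-index-first ordering come from the text.

```python
def barcode_pair_name(names, idx_a, idx_b):
    """Join two barcode names with "--", lower barcode index first.

    `names` is the list of barcode names in input-file order, so the list
    position of a name is its barcode index.
    """
    low, high = sorted((idx_a, idx_b))
    return f"{names[low]}--{names[high]}"


# Hypothetical barcode set; indices follow the order in the barcode file.
names = ["bc1001", "bc1002", "bc1054"]
pair = barcode_pair_name(names, 2, 1)
```

Sorting by index rather than by name is why the note on barcode naming later in this section recommends an alphabetic prefix: it keeps names and indices from being confused.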

Workflow

By default, Demultiplex Barcodes processes input reads grouped by ZMW,
unless the --per-read option is used. All barcode regions along the read
are processed individually. The final per-ZMW result is a summary over all
barcode regions. Each ZMW is assigned to a pair of selected barcodes from
the provided set of candidate barcodes. Subreads from the same ZMW will
have the same barcode and barcode quality. For a particular target barcode
region, every barcode sequence is aligned as given and as its reverse
complement, and the higher-scoring orientation is chosen. This results in
a list of scores over all candidate barcodes.

• If only same barcode pairs are of interest (symmetric/tailed), use the

--same option to filter out different barcode pairs.

• If only different barcode pairs are of interest (asymmetric), use the

--different option to require at least two barcodes to be read, and

remove pairs with the same barcode.
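
The orientation step in the workflow (score each candidate as given and as its reverse complement, keep the better one) can be sketched as follows. The scoring function here is a deliberately simple stand-in that counts matching bases over ungapped placements; it is not the alignment score that lima computes, and all function names are hypothetical.

```python
_COMPLEMENT = str.maketrans("ACGT", "TGCA")


def reverse_complement(seq):
    """Reverse complement of an upper-case DNA sequence."""
    return seq.translate(_COMPLEMENT)[::-1]


def toy_score(barcode, region):
    """Stand-in score: best count of matching bases over ungapped placements."""
    best = 0
    for offset in range(len(region) - len(barcode) + 1):
        window = region[offset:offset + len(barcode)]
        best = max(best, sum(a == b for a, b in zip(barcode, window)))
    return best


def best_orientation(barcode, region):
    """Score both orientations and return (orientation, score) of the better one."""
    forward = toy_score(barcode, region)
    reverse = toy_score(reverse_complement(barcode), region)
    if forward >= reverse:
        return ("forward", forward)
    return ("reverse-complement", reverse)


def score_candidates(barcodes, region):
    """Return {name: (orientation, score)} over all candidate barcodes."""
    return {name: best_orientation(seq, region) for name, seq in barcodes.items()}


barcodes = {"bc1000": "CTCTACTTACTTACTG", "bc1001": "GTCGTATCATCATGTA"}
# Hypothetical barcode region containing bc1000 in reverse-complement form.
region = "AA" + reverse_complement(barcodes["bc1000"]) + "TT"
scores = score_candidates(barcodes, region)
best_name = max(scores, key=lambda name: scores[name][1])
```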

Page 24

Half Adapters

An adapter call with only one barcode region arises when the high-quality
region finder cuts right through the adapter: either the preceding or
succeeding subread was too short and was removed, or the sequencing
reaction started or stopped there. This is called a half adapter. Thus,
there are also 1.5, 2.5, ..., N+0.5 adapter calls.

ZMWs with half or only one adapter can be used to identify the same

barcode pairs; positive-predictive value might be reduced compared to

high adapter calls. For asymmetric designs with different barcodes in a

pair, at least a single full-pass read is required; this can be two adapters,

two half adapters, or a combination.

Usage:

• Any existing output files are overwritten after execution.

• Always use --peek-guess to remove spurious barcode hits.

Analysis of subread data:

lima movie.subreads.bam barcodes.fasta prefix.bam

lima movie.subreadset.xml barcodes.barcodeset.xml prefix.subreadset.xml

Analysis of CCS data:

lima --ccs movie.ccs.bam barcodes.fasta prefix.bam

lima --ccs movie.consensusreadset.xml barcodes.barcodeset.xml \
    prefix.consensusreadset.xml

If you do not need to import the demultiplexed data into SMRT Link, use
the --no-pbi option to minimize memory consumption and run time.

Symmetric or Tailed options:

Raw: --same

CCS: --same --ccs

Asymmetric options:

Raw: --different

CCS: --different --ccs

Example Execution:

lima m54317_180718_075644.subreadset.xml \

Sequel_RSII_384_barcodes_v1.barcodeset.xml \

m54317_180718_075644.demux.subreadset.xml \

--different --peek-guess

Options Description

--same Retains only reads with the same barcodes on both ends of the insert

sequence, such as symmetric and tailed designs.

--different Retains only reads with different barcodes on both ends of the insert

sequence, asymmetric designs. Enforces --min-passes ≥ 1.

Page 25

--min-length n Omits reads with lengths below n base pairs after demultiplexing. ZMWs

with no reads passing are omitted. (Default = 50)

--max-input-length n Omits reads with lengths above n base pairs for scoring in the

demultiplexing step. (Default = 0, deactivated)

--min-score n Omits ZMWs with average barcode scores below n. A barcode score

measures the alignment between a barcode attached to a read and an

ideal barcode sequence, and is an indicator how well the chosen barcode

pair matches. It is normalized to a range between 0 (no hit) and 100 (a

perfect match).

(Default = 0, Pacific Biosciences recommends setting it to 26.)

--min-end-score n Specifies the minimum end barcode score threshold applied to the

individual leading and trailing ends. (Default = 0)

--min-passes n    Omits ZMWs with fewer than n full passes, where a full pass is a read with a leading and trailing adapter. (Default = 0, no full pass needed) Example:
    0 pass  : insert - adapter - insert
    1 pass  : insert - adapter - INSERT - adapter - insert
    2 passes: insert - adapter - INSERT - adapter - INSERT - adapter - insert

--score-full-pass Uses only reads flanked by adapters on both sides (full-pass reads) for

barcode identification.

--min-ref-span Specifies the minimum reference span relative to the barcode length.

(Default = 0.5)

--per-read Scores and tags per subread, instead of per ZMW.

--ccs Sets defaults to -A 1 -B 4 -D 3 -I 3 -X 1.

--peek n    Looks at the first n ZMWs of the input and returns the mean. This lets you test multiple barcode.fasta files and see which set of barcodes was used.

--guess n This performs demultiplexing twice. In the first iteration, all barcodes are

tested per ZMW. Afterwards, the barcode occurrences are counted and

their mean is tested against the threshold n; only those barcode pairs that

pass this threshold are used in the second iteration to produce the final

demultiplexed output. A prefix.lima.guess file shows the decision

process; --same is being respected.

--guess-min-count Specifies the minimum ZMW count to whitelist a barcode. This filter is

ANDed with the minimum barcode score specified by --guess.

(Default = 0)

--peek-guess Equivalent to the Infer Barcodes Used parameter option in SMRT Link.

Sets the following options:

--peek 50000 --guess 45 --guess-min-count 10.

Demultiplex Barcodes will run twice on the input data. For the first 50,000

ZMWs, it will guess the barcodes and store the mask of identified

barcodes. In the second run, the barcode mask is used to demultiplex all

ZMWs.

If combined with --ccs, the barcode score threshold is increased to 75
(--guess 75).

--single-side Identifies barcodes in molecules that only have barcodes adjacent to one

adapter.

--window-size-mult, --window-size-bp    The candidate region size multiplier: barcode_length * multiplier. (Default = 3) Optionally, you can specify the region size in base pairs using --window-size-bp. If set, --window-size-mult is ignored.

Page 26

Options    Description
--num-threads n    Spawns n threads; 0 means use all available cores. This option also controls the number of threads used for BAM and PBI compression. (Default = 0)
--chunk-size n    Specifies that each thread consumes n ZMWs per chunk for processing. (Default = 10)
--no-bam    Does not produce BAM output. Useful if only reports are of interest, as run time is shorter.
--no-pbi    Does not produce a .bam.pbi index file. The on-the-fly .bam.pbi file generation buffers the output data. If you do not need a .bam.pbi index file for SMRT Link import, use this option to decrease memory usage to a minimum and shorten the run time.
--no-reports    Does not produce any reports. Useful if only demultiplexed BAM files are needed.
--dump-clips    Outputs all clipped barcode regions generated to the <prefix>.lima.clips file.
--dump-removed    Outputs all records that did not pass the specified thresholds, or are without barcodes, to the <prefix>.lima.removed.bam file.
--split-bam, --split-bam-named    Specifies that each barcode has its own BAM file called prefix.idxBest-idxCombined.bam, such as prefix.0-0.bam. Optionally, --split-bam-named names the files by their barcode names instead of their barcode indices.
--isoseq    Removes primers as part of the Iso-Seq pipeline. See “Demultiplexing Iso-Seq Data” on page 31 for details.
--bad-adapter-ratio n    Specifies the maximum ratio of bad adapters. (Default = 0)

Input Files:

Input data in PacBio-enhanced BAM format is either:

• Sequence data - Unaligned subreads, directly from a Sequel/Sequel II
  System, or
• Unaligned CCS reads, generated by CCS 2.

Note: To demultiplex PacBio RS II data, use SMRT Link or bax2bam to
convert .h5 files to BAM format.

Barcodes are provided as a FASTA file or BarcodeSet file:

• One entry per barcode sequence.
• No duplicate sequences.
• All bases must be in upper-case.
• Orientation-agnostic (forward or reverse-complement, but not
  reversed.)

Example:

>bc1000
CTCTACTTACTTACTG
>bc1001
GTCGTATCATCATGTA
>bc1002
AATATACCTATCATTA

Page 27

Note: Name barcodes using an alphabetic character prefix to avoid later

barcode name/index confusion.
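
The requirements on the barcode FASTA file (no duplicate sequences, upper-case bases, names starting with an alphabetic character) can be checked before running lima. The following Python sketch is a hypothetical pre-flight check, not part of SMRT Link; the function names and message wording are assumptions.

```python
def read_fasta(text):
    """Parse FASTA text into a list of (name, sequence) tuples."""
    records, name, chunks = [], None, []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        if line.startswith(">"):
            if name is not None:
                records.append((name, "".join(chunks)))
            name, chunks = line[1:].strip(), []
        else:
            chunks.append(line)
    if name is not None:
        records.append((name, "".join(chunks)))
    return records


def check_barcodes(records):
    """Return a list of human-readable problems; an empty list means none found."""
    problems = []
    first_seen = {}  # sequence -> name of the first entry that used it
    for name, seq in records:
        if not name[:1].isalpha():
            problems.append(f"{name!r}: name should start with a letter")
        if seq != seq.upper():
            problems.append(f"{name!r}: bases must be upper-case")
        if seq in first_seen:
            problems.append(f"{name!r}: duplicate of {first_seen[seq]!r}")
        first_seen.setdefault(seq, name)
    return problems


good = ">bc1000\nCTCTACTTACTTACTG\n>bc1001\nGTCGTATCATCATGTA\n>bc1002\nAATATACCTATCATTA\n"
bad = ">1\nacgt\n>bcA\nACGT\n>bcB\nACGT\n"
good_problems = check_barcodes(read_fasta(good))
bad_problems = check_barcodes(read_fasta(bad))
```

The check compares sequences exactly as written. Whether a barcode and its reverse complement should also count as duplicates is not stated in this section, so the sketch does not test for that.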

Output Files:

Demultiplex Barcodes generates multiple output files by default, all
starting with the same prefix as the output file, using the suffixes
.bam, .subreadset.xml, and .consensusreadset.xml. The report prefix is
lima. Example:

lima m54007_170702_064558.subreads.bam barcode.fasta \
    /my/path/m54007_170702_064558_demux.subreadset.xml

For all output files, the prefix is
/my/path/m54007_170702_064558_demux.

• <prefix>.bam: Contains clipped records, annotated with barcode
  tags, that passed filters and respect the --same option.
• <prefix>.lima.report: A tab-separated file describing each ZMW,
  unfiltered. This is useful information for investigating the
  demultiplexing process and the underlying data. A single row contains
  all reads from a single ZMW. For --per-read, each row contains one
  subread, and ZMWs might span multiple rows.

• <prefix>.lima.summary: Lists how many ZMWs were filtered, how

many ZMWs are the same or different, and how many reads were

filtered.

(1)

ZMWs input (A) : 213120

ZMWs above all thresholds (B) : 176356 (83%)

ZMWs below any threshold (C) : 36764 (17%)

(2)

ZMW Marginals for (C) :

Below min length : 26 (0%)

Below min score : 0 (0%)

Below min end score : 5138 (13%)

Below min passes : 0 (0%)

Below min score lead : 11656 (32%)

Below min ref span : 3124 (8%)

Without adapter : 25094 (68%)

With bad adapter : 10349 (28%) <- Only with --bad-adapter-ratio

Undesired hybrids : xxx (xx%) <- Only with --peek-guess

Undesired same barcode pairs : xxx (xx%) <- Only with --different

Undesired diff barcode pairs : xxx (xx%) <- Only with --same

Undesired 5p--5p pairs : xxx (xx%) <- Only with --isoseq

Undesired 3p--3p pairs : xxx (xx%) <- Only with --isoseq

Undesired single side : xxx (xx%) <- Only with --isoseq

Undesired no hit : xxx (xx%) <- Only with --isoseq

Page 28

(3)

ZMWs for (B):

With same barcode : 162244 (92%)

With different barcodes : 14112 (8%)

Coefficient of correlation : 32.79%

(4)

ZMWs for (A):

Allow diff barcode pair : 157264 (74%)

Allow same barcode pair : 188026 (88%)

Bad adapter yield loss : 10112 (5%) <- Only with --bad-adapter-ratio

Bad adapter impurity : 10348 (5%) <- Only without --bad-adapter-ratio

(5)

Reads for (B):

Above length : 1278461 (100%)

Below length : 2787 (0%)

Explanation of each block:

1. Number of ZMWs that went into lima, how many ZMWs were passed
   to the output file, and how many did not qualify.
2. For those ZMWs that did not qualify: The marginal counts of each filter.
   (Filters are described in the Options table.)
   When running with --peek-guess or a similar manual option combination
   and different barcode pairs are found during peek, the full SMRT Cell
   may contain low-abundance different barcode pairs that were identified
   during peek individually, but not as a pair. Those unwanted barcode
   pairs are called hybrids.
3. For those ZMWs that passed: How many were flagged as having the
   same or different barcode pair, as well as the coefficient of variation
   for the barcode ZMW yield distribution in percent.
4. For all input ZMWs: How many allow calling the same or different
   barcode pair. This is a simplified version of how many ZMWs have at
   least one full pass to allow a different barcode pair call and how many
   ZMWs have at least half an adapter, allowing the same barcode pair
   call.
5. For those ZMWs that qualified: The number of reads that are above
   and below the specified --min-length threshold.

• <prefix>.lima.counts: A .tsv file listing the counts of each

observed barcode pair. Only passing ZMWs are counted. Example:

$ column -t prefix.lima.counts

IdxFirst IdxCombined IdxFirstNamed IdxCombinedNamed Counts MeanScore

0 0 bc1001 bc1001 1145 68

1 1 bc1002 bc1002 974 69

2 2 bc1003 bc1003 1087 68
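
As a usage illustration, the counts file can be read with a few lines of Python to compute a coefficient of variation of the per-barcode ZMW yield, similar in spirit to the value reported in the summary file. This is a sketch under assumptions: it uses the population standard deviation, which may differ from what lima uses, and it splits on whitespace, which only works when barcode names contain no spaces.

```python
import statistics

# The example rows shown above, as they appear after `column -t`.
COUNTS_TEXT = """\
IdxFirst IdxCombined IdxFirstNamed IdxCombinedNamed Counts MeanScore
0 0 bc1001 bc1001 1145 68
1 1 bc1002 bc1002 974 69
2 2 bc1003 bc1003 1087 68
"""


def read_counts(text):
    """Parse the counts table into a list of dicts keyed by column name."""
    lines = [line.split() for line in text.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def yield_cv_percent(rows):
    """Coefficient of variation of the Counts column, in percent."""
    counts = [int(row["Counts"]) for row in rows]
    return 100.0 * statistics.pstdev(counts) / statistics.mean(counts)


rows = read_counts(COUNTS_TEXT)
total_zmws = sum(int(row["Counts"]) for row in rows)
cv = yield_cv_percent(rows)
```

A low value indicates that the barcoded samples yielded similar numbers of passing ZMWs.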

Page 29

• <prefix>.lima.clips: Contains clipped barcode regions generated

using the

--dump-clips option. Example:

$ head -n 6 prefix.lima.clips

>m54007_170702_064558/4850602/6488_6512 bq:34 bc:11

CATGTCCCCTCAGTTAAGTTACAA

>m54007_170702_064558/4850602/6582_6605 bq:37 bc:11

TTTTGACTAACTGATACCAATAG

>m54007_170702_064558/4916040/4801_4816 bq:93 bc:10

• <prefix>.lima.removed.bam: Contains records that did not pass the

specified thresholds, or are without barcodes, using the option

--dump-removed.

lima does not generate a .pbi, nor Data Set for this file. This option

cannot be used with any splitting option.

• <prefix>.lima.guess: A .tsv file that describes the barcode
  subsetting process activated using the --peek and --guess options.
  Example:

IdxFirst IdxCombined IdxFirstNamed IdxCombinedNamed NumZMWs MeanScore Picked
0 0 bc1001t bc1001t 1008 50 1
1 1 bc1002t bc1002t 1005 60 1
2 2 bc1003t bc1003t 5 24 0
3 3 bc1004t bc1004t 555 61 1

• One Data Set file (.subreadset.xml or .consensusreadset.xml) is
  generated per output BAM file.
• .pbi: One PBI file is generated per output BAM file.

What is a universal spacer sequence and how does it affect
demultiplexing?

For library designs that include an identical sequence between adapter
and barcode, such as probe-based linear barcoded adapter samples,
Demultiplex Barcodes offers a special mode that is activated if it finds a
shared prefix sequence among all provided barcode sequences.

Example:

>custombc1
ACATGACTGTGACTATCTCACACATATCAGAGTGCG
>custombc2
ACATGACTGTGACTATCTCAACACACAGACTGTGAG

In this case, Demultiplex Barcodes detects the shared prefix
ACATGACTGTGACTATCTCA and removes it internally from all barcodes.
Subsequently, it increases the window size by the length L of the prefix
sequence.

• If --window-size-bp N is used, the actual window size is L + N.

Page 30

• If --window-size-mult M is used, the actual window size is

(L + |bc|) * M.

Because the alignment is semi-global, a leading reference gap can be

added without any penalty to the barcode score.
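
The shared-prefix detection and the resulting window sizes can be reproduced with a short Python sketch. It is only an illustration of the arithmetic described above: the function names are hypothetical, and |bc| is taken to be the barcode length after the prefix has been removed, which is an assumption.

```python
import os


def strip_shared_prefix(barcodes):
    """Return (shared_prefix, barcodes_without_prefix)."""
    prefix = os.path.commonprefix(list(barcodes.values()))
    trimmed = {name: seq[len(prefix):] for name, seq in barcodes.items()}
    return prefix, trimmed


def window_size(prefix_len, barcode_len, mult=3, bp=None):
    """Window size per the two rules above; a bp value overrides the multiplier."""
    if bp is not None:
        return prefix_len + bp                  # L + N
    return (prefix_len + barcode_len) * mult    # (L + |bc|) * M


barcodes = {
    "custombc1": "ACATGACTGTGACTATCTCACACATATCAGAGTGCG",
    "custombc2": "ACATGACTGTGACTATCTCAACACACAGACTGTGAG",
}
prefix, trimmed = strip_shared_prefix(barcodes)
L = len(prefix)
bc_len = len(trimmed["custombc1"])
size_mult = window_size(L, bc_len, mult=3)
size_bp = window_size(L, bc_len, bp=50)
```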

What are bad adapters?

In the subreads.bam file, each subread has a context flag cx. The flag
specifies, among other things, whether a subread has flanking adapters,
before and/or after. Adapter-finding was improved and can also find
molecularly-missing adapters, or those obscured by a local decrease in
accuracy. This may lead to missing or obscured bases in the flanking
barcode. Such adapters are labelled "bad", as they don't align with the
adapter reference sequence(s). Regions flanking those bad adapters are
problematic, because they can fully or partially miss the barcode bases,
leading to wrong classification of the molecule. lima can handle those
adapters by ignoring regions flanking bad adapters. For this, lima
computes the ratio of the number of bad adapters divided by the number of
all adapters.

By default, --bad-adapter-ratio is set to 0 and does not perform any

filtering. In this mode, bad adapters are handled just like good adapters.

But the *.lima.summary file contains one row with the number of ZMWs

that have at least 25% bad adapters, but otherwise pass all other filters.

This metric can be used as a diagnostic to assess library preparation.

If --bad-adapter-ratio is set to a non-zero positive value (0,1), barcode
regions flanking bad adapters are treated as missing. If a ZMW has a higher
ratio of bad adapters than provided, the ZMW is filtered and consequently
removed from the output. The *.lima.summary file contains two
additional rows:

With bad adapter : 10349 (28%)
Bad adapter yield loss : 10112 (5%)

The first row counts the number of ZMWs that have bad adapter ratios that
are too high; the percentage is with respect to the number of all ZMWs not
passing. The second row counts the number of ZMWs that are removed
solely due to bad adapter ratios that are too high; the percentage is with
respect to the number of all input ZMWs and consequently is the effective
yield loss caused by bad adapters.

If a ZMW has ~50% bad adapters, one side of the molecule is molecularly
missing an adapter. For 100% bad adapters, both sides are missing
adapters. A percentage lower than ~40% indicates decreased local
accuracy during sequencing, leading to adapter sequences not being
found. If a high percentage of ZMWs is molecularly missing adapters, you
should improve library preparation.
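
The ratio and the filtering rule can be written down in a few lines of Python. This is a sketch of the rule as described above, not lima's code; representing each ZMW as a list of booleans (one per adapter call, True meaning "bad") is an assumption made for illustration.

```python
def bad_adapter_ratio(adapter_is_bad):
    """Number of bad adapters divided by the number of all adapters."""
    if not adapter_is_bad:
        return 0.0
    return sum(adapter_is_bad) / len(adapter_is_bad)


def keep_zmw(adapter_is_bad, max_ratio):
    """Apply the --bad-adapter-ratio rule to one ZMW.

    A max_ratio of 0 mirrors the default, where no filtering is performed.
    Otherwise the ZMW is removed if its ratio is higher than max_ratio.
    """
    if max_ratio == 0:
        return True
    return bad_adapter_ratio(adapter_is_bad) <= max_ratio


# Hypothetical ZMW with four adapter calls, two of them bad (~50%: one side
# of the molecule is probably missing its adapter).
zmw = [True, False, True, False]
ratio = bad_adapter_ratio(zmw)
kept_default = keep_zmw(zmw, 0)
kept_strict = keep_zmw(zmw, 0.25)
```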

Page 31

Demultiplexing Iso-Seq Data

Demultiplex Barcodes is used to identify and remove Iso-Seq cDNA
primers. If the Iso-Seq sample is barcoded, the barcodes should be
included as part of the primer. Note: To demultiplex Iso-Seq samples in the
SMRT Link GUI, always choose the Iso-Seq Analysis or Iso-Seq
Analysis with Mapping applications, not the Demultiplex Barcodes
application. Only on the command line can users run lima with the
--isoseq option for demultiplexing Iso-Seq data.

The input Iso-Seq data format for demultiplexing is .ccs.bam. Users must
first generate a CCS BAM file for an Iso-Seq Data Set before running
lima. The recommended parameters for running CCS for Iso-Seq are
min-pass=1, min accuracy=0.8, and turning Polish to OFF.

1. Primer IDs must be specified using the suffix _5p to indicate 5’ cDNA
   primers and the suffix _3p to indicate 3’ cDNA primers. The 3’ cDNA
   primer should not include the Ts and is written in reverse complement.
2. Below are two example primer sets. The first is unbarcoded, the
   second has barcodes (shown in lower case) adjacent to the 3’ primer.

Example 1: The IsoSeq v2 primer set.

>NEB_5p

GCAATGAAGTCGCAGGGTTGGG

>Clontech_5p

AAGCAGTGGTATCAACGCAGAGTACATGGGG

>NEB_Clontech_3p

GTACTCTGCGTTGATACCACTGCTT

Example 2: 4 tissues were multiplexed using barcodes on the 3’ end

only.

>5p

AAGCAGTGGTATCAACGCAGAGTACATGGGG

>tissue1_3p

atgacgcatcgtctgaGTACTCTGCGTTGATACCACTGCTT

>tissue2_3p

gcagagtcatgtatagGTACTCTGCGTTGATACCACTGCTT

>tissue3_3p

gagtgctactctagtaGTACTCTGCGTTGATACCACTGCTT

>tissue4_3p

catgtactgatacacaGTACTCTGCGTTGATACCACTGCTT

3. Use the --isoseq mode. Note that this cannot be combined with the

--guess option.

4. The output will be only different pairs with a 5p and 3p combination:

demux.5p--tissue1_3p.bam

demux.5p--tissue2_3p.bam

The --isoseq parameter set is very conservative, removing any
spurious and ambiguous calls, and guarantees that only proper
asymmetric (barcoded) primers are used in downstream analyses. Good
libraries reach >75% CCS reads passing the Demultiplex Barcodes filters.
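
The primer naming convention and the "5p with 3p only" rule can be expressed as a small Python sketch. The function names are hypothetical. Because Example 2 above uses the bare ID 5p, the sketch accepts both a bare 5p/3p and the _5p/_3p suffix; that leniency is an assumption, not documented behavior.

```python
def primer_end(primer_id):
    """Return "5p" or "3p" based on the primer ID, or None if neither."""
    for end in ("5p", "3p"):
        if primer_id == end or primer_id.endswith("_" + end):
            return end
    return None


def is_desired_pair(first_id, second_id):
    """True only for a combination of one 5p primer and one 3p primer."""
    return {primer_end(first_id), primer_end(second_id)} == {"5p", "3p"}


def output_name(prefix, first_id, second_id):
    """File name in the form shown in step 4 above."""
    return f"{prefix}.{first_id}--{second_id}.bam"


pairs = [("5p", "tissue1_3p"), ("NEB_5p", "Clontech_5p"), ("tissue1_3p", "tissue2_3p")]
desired = [pair for pair in pairs if is_desired_pair(*pair)]
name = output_name("demux", *desired[0])
```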

Page 32

gcpp

gcpp is a variant-calling tool provided by the GCpp package which provides

several variant-calling algorithms for PacBio sequencing data.

Usage

gcpp -j8 --algorithm=arrow \

-r lambdaNEB.fa \

-o variants.gff \

aligned_subreads.bam

This example requests variant-calling, using 8 worker processes and the
Arrow algorithm, taking input from the file aligned_subreads.bam, using
the FASTA file lambdaNEB.fa as the reference, and writing output to
variants.gff.

A particularly useful option is --referenceWindow/-w, which allows the
variant-calling to be performed exclusively on a window of the reference
genome.

Input Files

• A sorted file of reference-aligned reads in Pacific Biosciences’

standard BAM format.

• A FASTA file that follows the Pacific Biosciences FASTA file

convention.

Note: The --algorithm=arrow option requires that certain metrics be in
place in the input BAM file. It requires per-read SNR metrics, and the
per-base PulseWidth metric for Sequel data.

The selected algorithm will stop with an error message if any features that

it requires are unavailable.

Output Files

Output files are specified as comma-separated arguments to the -o flag.
The file name extension provided to the -o flag is meaningful, as it
determines the output file format. For example:

gcpp aligned_subreads.bam -r lambda.fa -o myVariants.gff,myConsensus.fasta

will read input from aligned_subreads.bam, using the reference
lambda.fa, and send variant call output to the file myVariants.gff, and
consensus output to myConsensus.fasta.

The file formats currently supported (using extensions) are:

• .gff: PacBio GFFv3 variants format; convertible to BED.

• .vcf: VCF 4.2 variants format (compatible with v4.3).

• .fasta: FASTA file recording the consensus sequence calculated for

each reference contig.

Page 33

• .fastq: FASTQ file recording the consensus sequence calculated for

each reference contig, as well as per-base confidence scores.

Available Algorithms

At this time there are three algorithms available for variant calling:

plurality, poa and arrow.

• plurality is a simple and very fast procedure that merely tallies the

most frequent read base or bases found in alignment with each

reference base, and reports deviations from the reference as potential

variants. This is a very insensitive and flawed approach for PacBio

sequence data, and is prone to insertion and deletion errors.

• poa uses the partial order alignment algorithm to determine the

consensus sequence. It is a heuristic algorithm that approximates a

multiple sequence alignment by progressively aligning sequences to

an existing set of alignments.

• arrow uses the per-read SNR metric and the per-pulse pulsewidth

metric as part of its likelihood model.
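To make the plurality idea concrete, here is a minimal sketch under simplifying assumptions: reads are given as gapped strings already aligned column by column to the reference, "-" marks a deleted base, and insertions are not represented. The function name and input layout are hypothetical and do not reflect how gcpp reads BAM data.

```python
from collections import Counter


def plurality_consensus(reference, aligned_reads):
    """Toy plurality call: take the most frequent read base in each column.

    Assumes every read string has the same length as the reference and that
    "-" marks a deletion. Ties go to the base seen first in the column.
    """
    consensus = []
    variants = []
    for pos, ref_base in enumerate(reference):
        column = [read[pos] for read in aligned_reads]
        base, count = Counter(column).most_common(1)[0]
        consensus.append(base)
        if base != ref_base:
            # Report position, reference base, called base, and support.
            variants.append((pos, ref_base, base, count, len(column)))
    return "".join(consensus), variants


reference = "ACGTAC"
reads = ["ACGTAC", "ACCTAC", "ACCTAC", "ACCT-C"]
consensus, variants = plurality_consensus(reference, reads)
```

In this toy input, three of four reads carry C at position 2, so the sketch reports a G-to-C deviation there, while the single deletion at position 4 is outvoted.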

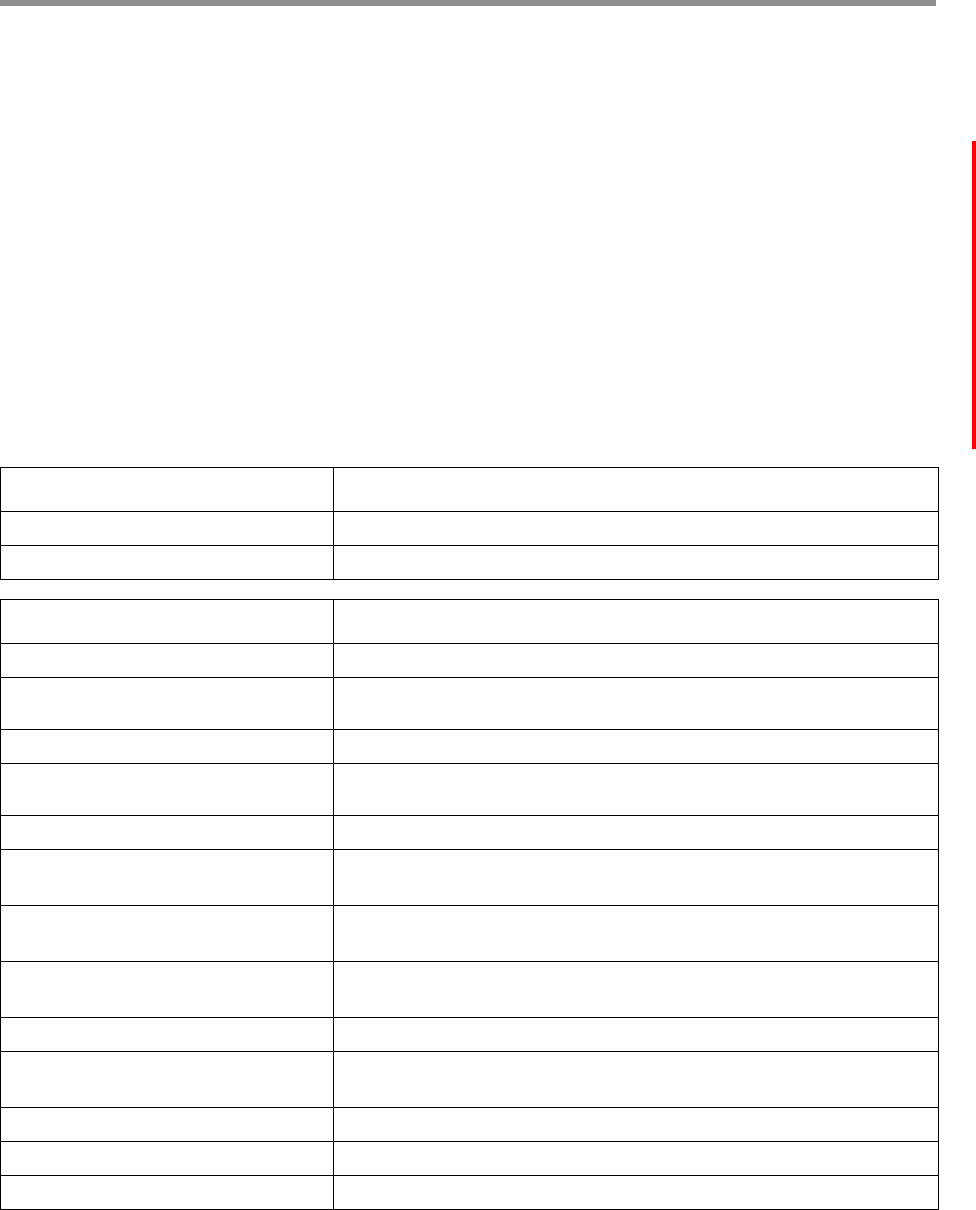

Confidence Values

The arrow and plurality algorithms make a confidence metric available

for every position of the consensus sequence. The confidence should be

interpreted as a phred-transformed posterior probability that the

consensus call is incorrect; that is, QV = -10 * log10(p_err).

| Options | Description |
| --- | --- |
| -j | Specifies the number of worker processes to use. |
| --algorithm= | Specifies the variant-calling algorithm to use; values are plurality and arrow. |
| -r | Specifies the FASTA reference file to use. |
| -o | Specifies the output file format; values are .gff, .vcf, .fasta, and .fastq. |
| --maskRadius | When using the arrow algorithm, setting this option to a value N greater than 0 causes gcpp to pass over the data a second time after masking out regions of reads that have >70% errors in 2*N+1 bases. This setting has little to no effect at low coverage, but for high-coverage datasets (>50X), setting this parameter to 3 may improve final consensus accuracy. In rare circumstances, such as misassembly or mapping to the wrong reference, enabling this parameter may cause worse performance. |
| --minConfidence MINCONFIDENCE, -q MINCONFIDENCE | Specifies the minimum confidence for a variant call to be output to variants.{gff,vcf} (Default = 40) |
| --minCoverage MINCOVERAGE, -x MINCOVERAGE | Specifies the minimum site coverage for variant calls and consensus to be calculated for a site. (Default = 5) |

gcpp clips reported QV values at 93; larger values cannot be encoded in a

standard FASTQ file.
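The phred transformation and the clipping at 93 can be sketched as below. The function name is hypothetical, and rounding to the nearest integer is an assumption; the source does not say how gcpp rounds.

```python
import math

MAX_QV = 93  # cap stated in the text above


def confidence_qv(p_error):
    """Phred-transform an error probability and clip the result at MAX_QV.

    Rounding to the nearest integer is an assumption made for this sketch.
    """
    if not 0.0 <= p_error <= 1.0:
        raise ValueError("p_error must be a probability")
    if p_error == 0.0:
        return MAX_QV
    return min(MAX_QV, round(-10 * math.log10(p_error)))


qv_typical = confidence_qv(0.0001)  # error probability of 1 in 10,000
qv_clipped = confidence_qv(1e-12)   # unclipped value would be 120
# FASTQ stores a quality as one character with ASCII offset 33, and 33 + 93
# is "~", the last printable ASCII character.
highest_symbol = chr(33 + MAX_QV)
```

An error probability of 1 in 10,000 corresponds to QV 40, which is also the default --minConfidence threshold.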

Chemistry Specificity

The arrow algorithm (--algorithm=arrow) is trained per chemistry. arrow

identifies the sequencing chemistry used for each run by looking at

metadata contained in the input BAM data file. This behavior can be

overridden by a command-line option.

When multiple chemistries are represented in the reads in the input file,

arrow models each read using the parameter set for that read's

chemistry, thus yielding optimal results.

ipdSummary

The ipdSummary tool detects DNA base-modifications from kinetic

signatures. It is part of the kineticsTool package.

kineticsTool loads IPDs observed at each position in the genome,

compares those IPDs to the value expected for unmodified DNA, and outputs

the result of this statistical test. The expected IPD value for unmodified

DNA can come from either an in-silico control or an amplified control. The

in-silico control is trained by Pacific Biosciences and shipped with the

package. It predicts the IPD using the local sequence context around the

current position. An amplified control Data Set is generated by sequencing

unmodified DNA with the same sequence as the test sample. An amplified

control sample is usually generated by whole-genome amplification of the

original sample.

Modification Detection

The basic mode of kineticsTool does an independent comparison of IPDs

at each position on the genome, for each strand, and outputs various

statistics to CSV and GFF files (after applying a significance filter).

Modification Identification

kineticsTool also has a Modification Identification mode that can decode

multi-site IPD “fingerprints” into a reduced set of calls of specific

modifications. This feature has the following benefits:

• Different modifications occurring on the same base can be

distinguished; for example, 5mC and 4mC.

• The signal from one modification is combined into one statistic,

improving sensitivity, removing extra peaks, and correctly centering the

call.

Algorithm: Synthetic Control

Studies of the relationship between IPD and sequence context reveal that

most of the variation in mean IPD across a genome can be predicted from

a 12-base sequence context surrounding the active site of the DNA

polymerase. The bounds of the relevant context window correspond to the

window of DNA in contact with the polymerase, as seen in DNA/

polymerase crystal structures. To simplify the process of finding DNA

modifications with PacBio data, the tool includes a pre-trained lookup table

mapping 12-mer DNA sequences to mean IPDs observed in C2 chemistry.

Algorithm: Filtering and Trimming

kineticsTool uses the Mapping QV generated by blasr and stored in the

cmp.h5 or BAM file (or AlignmentSet) to ignore reads that are not

confidently mapped. The default minimum Mapping QV required is 10,

implying that blasr has 90% confidence that the read is correctly mapped.

Because of the range of read lengths inherent in PacBio data, this can be

changed using the --mapQvThreshold option.
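The relationship between a Mapping QV and mapping confidence, and the effect of the threshold, can be illustrated with a short sketch. The function names and the (read name, Mapping QV) tuples are hypothetical, and treating the threshold as inclusive is an assumption based on the word "minimum" above.

```python
def mapping_confidence(map_qv):
    """Probability that a read is correctly mapped, given its Mapping QV."""
    return 1.0 - 10 ** (-map_qv / 10)


def confidently_mapped(reads, map_qv_threshold=10):
    """Keep (name, map_qv) pairs whose Mapping QV meets the threshold."""
    return [read for read in reads if read[1] >= map_qv_threshold]


# Hypothetical reads as (name, Mapping QV) pairs.
reads = [("read_a", 3), ("read_b", 10), ("read_c", 254)]
kept = confidently_mapped(reads)
confidence_at_default = mapping_confidence(10)
```

With the default threshold of 10, read_a is dropped, and the confidence at the threshold itself works out to 90%, as stated above.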

There are a few features of PacBio data that require special attention to

achieve good modification detection performance.

kineticsTool inspects

the alignment between the observed bases and the reference sequence

for an IPD measurement to be included in the analysis. The PacBio read

sequence must match the reference sequence for k bases around the cognate

base. In the current module, k=1. The IPD distribution at some locus can

be thought of as a mixture between the “normal” incorporation process

IPD, which is sensitive to the local sequence context and DNA

modifications, and a contaminating “pause” process IPD, which has a

much longer duration (mean > 10 times longer than normal), but happens

rarely (~1% of IPDs).

Note: Our current understanding is that pauses do not carry useful

information about the methylation state of the DNA; however a more

careful analysis may be warranted. Also note that roughly 1% of observed

IPDs are generated by pause events. Capping observed IPDs at the global

99th percentile is motivated by theory from robust hypothesis testing.

Some sequence

contexts may have naturally longer IPDs; to avoid capping too much data

at those contexts, the cap threshold is adjusted per context as follows:

capThreshold = max(global99, 5*modelPrediction, percentile(ipdObservations, 75))
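A minimal sketch of this capping rule is shown below. The helper names are hypothetical, and the linear-interpolation percentile is an assumed definition; the source does not specify which percentile estimator kineticsTool uses.

```python
def percentile(values, q):
    """Percentile by linear interpolation (q in 0..100); assumed definition."""
    ordered = sorted(values)
    if not ordered:
        raise ValueError("percentile of empty data")
    rank = (len(ordered) - 1) * q / 100
    lower = int(rank)
    upper = min(lower + 1, len(ordered) - 1)
    return ordered[lower] + (ordered[upper] - ordered[lower]) * (rank - lower)


def cap_ipds(ipd_observations, global99, model_prediction):
    """Apply the per-context cap threshold from the formula above."""
    cap_threshold = max(
        global99,
        5 * model_prediction,
        percentile(ipd_observations, 75),
    )
    capped = [min(ipd, cap_threshold) for ipd in ipd_observations]
    return cap_threshold, capped


# Hypothetical IPDs at one locus; 30.0 stands in for a pause event.
ipds = [0.5, 0.8, 1.0, 1.2, 30.0]
threshold, capped = cap_ipds(ipds, global99=4.0, model_prediction=1.0)
```

In this example the model prediction term (5 * 1.0) is the largest of the three, so the pause-like observation is capped at 5.0 and the other values pass through unchanged.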

Algorithm: Statistical Testing

We test the hypothesis that IPDs observed at a particular locus in the

sample have longer means than IPDs observed at the same locus in

unmodified DNA. If we have generated a Whole Genome Amplified Data

Set, which removes DNA modifications, we use a case-control, two-sample

t-test. This tool also provides a pre-calibrated “synthetic control”

model which predicts the unmodified IPD, given a 12-base sequence

context. In the synthetic control case we use a one-sample t-test, with an

adjustment to account for error in the synthetic control model.
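The two test statistics can be sketched as follows. This is an illustration only: the two-sample version uses Welch's unequal-variance form, which is an assumption, and the one-sample version omits the adjustment for synthetic control model error because the source does not specify it. The function names and IPD values are hypothetical.

```python
from math import sqrt
from statistics import mean, variance


def two_sample_t(case_ipds, control_ipds):
    """Welch's t statistic for case vs. amplified-control IPDs (assumed form)."""
    standard_error = sqrt(
        variance(case_ipds) / len(case_ipds)
        + variance(control_ipds) / len(control_ipds)
    )
    return (mean(case_ipds) - mean(control_ipds)) / standard_error


def one_sample_t(case_ipds, predicted_ipd):
    """One-sample t statistic against a synthetic-control prediction.

    The model-error adjustment mentioned in the text is not included here.
    """
    standard_error = sqrt(variance(case_ipds) / len(case_ipds))
    return (mean(case_ipds) - predicted_ipd) / standard_error


# Hypothetical IPDs observed at one locus and strand.
case = [2.0, 2.2, 1.8, 2.4, 2.1]
control = [1.0, 1.1, 0.9, 1.2, 1.0]
t_case_control = two_sample_t(case, control)
t_synthetic = one_sample_t(case, predicted_ipd=1.0)
```

A large positive statistic indicates that the sample IPDs are longer than expected for unmodified DNA, which is the direction the hypothesis above tests.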

Usage

To run using a BAM input, and output GFF and HDF5 files:

ipdSummary aligned.bam --reference ref.fasta --identify m6A,m4C --gff basemods.gff \
    --csv_h5 kinetics.h5

To run using cmp.h5 input, perform methyl fraction calculation, and output

GFF and CSV files:

ipdSummary aligned.cmp.h5 --reference ref.fasta --identify m6A,m4C --methylFraction \
    --gff basemods.gff --csv kinetics.csv

Input Files

• A standard PacBio alignment file (AlignmentSet XML, BAM, or cmp.h5)

containing alignments and IPD information.

• Reference sequence used to perform alignments. This can be either a

FASTA file or a ReferenceSet XML.

Output Files

The tool provides results in a variety of formats suitable for in-depth

statistical analysis, quick reference, and consumption by visualization

tools such as SMRT View. Results are generally indexed by reference

position and reference strand. In all cases the strand value refers to the

strand carrying the modification in the DNA sample. Remember that the

kinetic effect of the modification is observed in read sequences aligning to

the opposite strand. So reads aligning to the positive strand carry

information about modification on the negative strand and vice versa, but

the strand containing the putative modification is always reported.

• modifications.gff: Compliant with the GFF Version 3 specification

(http://www.sequenceontology.org/gff3.shtml). Each template position/

strand pair whose probability value exceeds the probability value