The Future of

Computer Trading

in Financial Markets

An International Perspective

FINAL PROJECT REPORT

This Report should be cited as:

Foresight: The Future of Computer Trading in Financial Markets (2012)

Final Project Report

The Government Office for Science, London


This Report is intended for:

Policy makers, legislators, regulators and a wide range of professionals and researchers whose interests relate to computer trading within financial markets. This Report focuses on computer trading from an international perspective, and is not limited to one particular market.

Foreword

Well-functioning financial markets are vital for everyone. They support businesses and growth across the world. They provide important services for investors, from large pension funds to the smallest investors. And they can even affect the long-term security of entire countries.

Financial markets are evolving ever faster through interacting forces such as globalisation, changes in geopolitics, competition, evolving regulation and demographic shifts. However, the development of new technology is arguably driving the fastest changes. Technological developments are undoubtedly fuelling many new products and services, and are contributing to the dynamism of financial markets. In particular, high frequency computer-based trading (HFT) has grown in recent years to represent about 30% of equity trading in the UK and possibly over 60% in the USA.

HFT has many proponents. Its roll-out is contributing to fundamental shifts in market structures being seen across the world and, in turn, these are significantly affecting the fortunes of many market participants. But the relentless rise of HFT and algorithmic trading (AT) has also attracted considerable controversy and opposition. Some question the added value it brings to markets and, indeed, whether it constitutes a drag on market efficiency. Crucially, some also believe that it may be playing an increasing role in driving instabilities in particular markets. This is of concern to all financial markets, irrespective of their use of HFT, since increasing globalisation means that such instabilities could potentially spread through contagion. It has also been suggested that HFT may have significant negative implications relating to market abuse. For these reasons, it is unsurprising that HFT is now attracting the urgent attention of policy makers and regulators across the world.

This international Foresight Project was commissioned to address two critical challenges. First, the pace of technological change, coupled with the ever-increasing complexity of financial trading and markets, makes it difficult to fully understand the present effect of HFT/AT on financial markets, let alone to develop policies and regulatory interventions that are robust to developments over the next decade. Second, there is a relative paucity of evidence and analysis to inform new regulations, not least because of the time lag between rapid technological developments and research into their effects. This latter point is of particular concern, since good regulation clearly needs to be founded on good evidence and sound analysis.

Therefore, the key aim of this Project has been to assemble and analyse the available evidence concerning the effect of HFT on financial markets. Looking back through recent years and out to 2022, it has taken an independent scientific view. The intention has been to provide advice to policy makers. Over 150 leading academics from more than 20 countries have been involved in the work, which has been informed by over 50 commissioned papers, which have been subject to independent peer review.

The key message is mixed. The Project has found that some of the commonly held negative perceptions surrounding HFT are not supported by the available evidence and, indeed, that HFT may have modestly improved the functioning of markets in some respects. However, it is believed that policy makers are justified in being concerned about the possible effects of HFT on instability in financial markets. Therefore, this Report provides clear advice on what regulatory measures might be most effective in addressing those concerns in the shorter term, while preserving any benefits that HFT/AT may bring. It also advises what further actions should be undertaken to inform policies in the longer term, particularly in view of outstanding uncertainties. In conclusion, it is my pleasure to make this Report and all of its supporting evidence and analysis freely available. It is my hope that it will provide valuable insights into this crucial issue.

Professor Sir John Beddington CMG, FRS

Chief Scientific Adviser to HM Government and Head of the Government Office for Science

Lead expert group overseeing the Project:

Dame Clara Furse (Chair) Non-executive Director, Legal & General plc, Amadeus IT Holding SA, Nomura Holdings Inc., Chairman, Nomura Bank International, Non-executive Director, Department for Work and Pensions and Senior Adviser, Chatham House.

Professor Philip Bond Visiting Professor of Engineering Mathematics and Computer Science at the University of Bristol and Visiting Fellow at the Oxford Centre for Industrial and Applied Mathematics.

Professor Dave Cliff Professor of Computer Science at the University of Bristol.

Professor Charles Goodhart CBE, FBA Professor (Emeritus) of Banking and Finance at the London School of Economics.

Kevin Houstoun Chairman of Rapid Addition and co-Chair of the Global Technical Committee, FIX Protocol Limited.

Professor Oliver Linton FBA Chair of Political Economy at the University of Cambridge.

Dr Jean-Pierre Zigrand Reader in Finance at the London School of Economics.

Foresight would like to thank Dr Sylvain Friederich, University of Bristol, Professor Maureen O’Hara,

Cornell University and Professor Richard Payne, Cass Business School, City University, London for their

involvement in drafting parts of this Report.

Foresight would also like to thank Andy Haldane, Executive Director for Financial Stability at the Bank

of England, for his contribution in the early stages of the Project.

Foresight Project team:

Professor Sandy Thomas Head of Foresight

Derek Flynn Deputy Head of Foresight

Lucas Pedace Project Leader

Alexander Burgerman Project Manager

Gary Cook Project Manager

Christopher Griffin Project Manager

Anne Hollowday Project Manager

Jorge Lazaro Project Manager

Luke Ryder Project Manager

Piers Davenport Project Co-ordinator

Martin Ford Project Co-ordinator

Yasmin Hossain Project Researcher

Zubin Siganporia Project Researcher

Isabel Hacche Intern

Arun Karnad Intern

Louise Pakseresht Intern

Jennifer Towers Intern

For further information about the Project please visit:

http://www.bis.gov.uk/foresight

Contents

Executive Summary

1: Introduction

2: The impact of technology developments

3: The impact of computer-based trading on liquidity, price efficiency/discovery and transaction costs

4: Financial stability and computer-based trading

5: Market abuse and computer-based trading

6: Economic impact assessments on policy measures

6.1 Notification of algorithms

6.2 Circuit breakers

6.3 Minimum tick sizes

6.4 Obligations for market makers

6.5 Minimum resting times

6.6 Order-to-execution ratios

6.7 Maker-taker pricing

6.8 Central limit order book

6.9 Internalisation

6.10 Order priority rules

6.11 Periodic call auctions

6.12 Key interactions

7: Computers and complexity

8: Conclusions and future options

Annex A: Acknowledgements

Annex B: References

Annex C: Glossary of terms and acronyms

Annex D: Project reports and papers

Annex E: Possible future scenarios for computer-based trading in financial markets

Executive summary

A key message: despite commonly held negative perceptions, the available evidence indicates that high frequency trading (HFT) and algorithmic trading (AT) may have several beneficial effects on markets. However, HFT/AT may cause instabilities in financial markets in specific circumstances. This Project has shown that carefully chosen regulatory measures can help to address concerns in the shorter term. However, further work is needed to inform policies in the longer term, particularly in view of likely uncertainties and lack of data. This will be vital to support evidence-based regulation in this controversial and rapidly evolving field.

1 The aims and ambitions of the Project

The Project’s two aims are:

• to determine how computer-based trading (CBT) in financial markets across the world could evolve over the next ten years, identifying potential risks and opportunities that this could present, notably in terms of financial stability¹ but also in terms of other market outcomes, such as volatility, liquidity, price efficiency and price discovery;

• to draw upon the available science and other evidence to provide advice to policy makers, regulators and legislators on the options for addressing present and future risks while realising potential benefits.

An independent analysis and an international academic perspective:

The analysis provides an independent view and is based upon the latest science and evidence. As such,

it does not constitute the views or policy of the UK or any other government.

Over 150 leading academics and experts from more than 20 countries have been involved in the work, which has been informed by over 50 commissioned scientific papers, which have been independently peer reviewed. A further 350 stakeholders from across the world also provided advice on the key issues to consider².

2 Why the Project was undertaken

Well-functioning financial markets are vital for the growth of economies, the prosperity and well-being of individuals, and can even affect the security of entire countries. Markets are evolving rapidly in a difficult environment, characterised by converging and interacting macro- and microeconomic forces, such as globalisation, changes in geopolitics, competition, evolving regulation and demographic shifts. However, the development and application of new technology is arguably causing the most rapid changes in financial markets. In particular, HFT and AT in financial markets have attracted considerable controversy relating to their possible benefits and risks.

While HFT and AT have many proponents, others question the added value they bring to markets, and indeed whether they constitute a drag on market efficiency. Crucially, some believe they may be playing an increasingly significant role in driving instabilities in particular markets. There have been suggestions that HFT and AT may have significant negative implications relating to market abuse. For these reasons, and in view of the vital importance of financial markets, both HFT and AT are now attracting the urgent attention of policy makers and regulators across the world.

1 A list of definitions used in this Executive Summary can be found in Annex C of the Project’s Final Report.

2 A list of individuals who have been involved can be found in Annex A of the Project’s Final Report.


Two challenges for regulators:

Effective regulation must be founded on robust evidence and sound analysis. However, this Project addresses

two particular challenges currently faced by regulators:

• Rapid developments and applications of new technology, coupled with ever-increasing complexity of financial trading and markets, make it difficult to fully understand the present effects of HFT and AT on financial markets and even more difficult to develop policies and regulatory interventions which will be robust to developments over the next decade.

• There is a relative lack of evidence and analysis to inform the development of new regulations, not least because of the time lag between rapid technological developments and research into their effects, and the lack of available, comprehensive and consistent data.

These two challenges raise important concerns about the level of resources available to regulators in

addressing present and future issues. Setting the right level of resources is a matter for politicians.

However, unlocking the skills and resources of the wider international academic community could also

help. Here, a drive towards making better data available for analysis should be a key objective and the

experience of this Project suggests that political impetus could be important in achieving that quickly.

It makes sense for the various parties involved in financial markets to be brought together in framing further analytical work, in order to promote wide agreement to the eventual results. Everyone will benefit from further research that addresses areas of controversy, as these can cloud effective and proportionate policy development, and can result in sub-optimal business decisions.

3 Technology as a key driver of innovation and change in financial markets³

The relentless development and deployment of new technologies will continue to have profound effects on

markets at many levels. They will directly affect developments in HFT/AT and continue to fuel innovation in

the development of new market services. And they will also help to drive changes in market structure.

New technologies are creating new capabilities that no human trader could ever offer, such as

assimilating and integrating vast quantities of data and making multiple accurate trading decisions

on split-second time-scales. Ever more sophisticated techniques for analysing news are also being

developed and modern automated trading systems can increasingly learn from monitoring sequences

of events in the market. HFT/AT is likely to become more deeply reliant on such technologies.

Future developments with important implications:

• There will be increasing availability of substantially cheaper computing power, particularly through cloud computing: those who embrace this technology will benefit from faster and more intelligent trading systems in particular.

• Special purpose silicon chips will gain ground from conventional computers: the increased speed will provide an important competitive edge through better and faster simulation and analysis, and within transaction systems.

• Computer-designed and computer-optimised robot traders could become more prevalent: in time, they could replace algorithms designed and refined by people, posing new challenges for understanding their effects on financial markets and for their regulation.

• Opportunities will continue to open up for small and medium-sized firms offering ‘middleware’ technology components, driving further changes in market structure: such components can be purchased and plugged together to form trading systems which were previously the preserve of much larger institutions.


3 For a more detailed review of the evidence reported in this section, see Chapter 2 in the Project’s Final Report.


Three key challenges arising from future technological developments:

• The extent to which different markets embrace new technology will critically affect their competitiveness and therefore their position globally: The new technologies mean that major trading systems can exist almost anywhere. Emerging economies may come to challenge the long-established historical dominance of major European and US cities as global hubs for financial markets if the former capitalise faster on the technologies and the opportunities presented.

• The new technologies will continue to have profound implications for the workforce required to service markets, both in terms of numbers employed in specific jobs, and the skills required: Machines can increasingly undertake a range of jobs for less cost, with fewer errors and at much greater speed. As a result, for example, the number of traders engaged in on-the-spot execution of orders has fallen sharply in recent years, and is likely to continue to fall further in the future. However, the mix of human and robot traders is likely to continue for some time, although this will be affected by other important factors, such as future regulation.

• Markets are already ‘socio-technical’ systems, combining human and robot participants. Understanding and managing these systems to prevent undesirable behaviour in both humans and robots will be key to ensuring effective regulation: While this Report demonstrates that there has been some progress in developing a better understanding of markets as socio-technical systems, greater effort is needed in the longer term. This would involve an integrated approach combining social sciences, economics, finance and computer science. As such, it has significant implications for future research priorities.

4 The impact of computer-based trading on market quality: liquidity, price efficiency/discovery and transaction costs⁴

While the effect of CBT on market quality is controversial, the evidence available to this Project suggests that CBT has several beneficial effects on markets, notably:

• liquidity, as measured by bid-ask spreads and other metrics, has improved;

• transaction costs have fallen for both retail and institutional traders, mostly due to changes in trading market structure, which are related closely to the development of HFT in particular;

• market prices have become more efficient, consistent with the hypothesis that CBT links markets and thereby facilitates price discovery.
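As an illustration of the spread-based liquidity measures referred to above, the following is a minimal sketch rather than the method used in the Project's commissioned studies. The function names are hypothetical, and the formulas are the conventional quoted and effective spreads expressed relative to the quote midpoint, in basis points.

```python
def quoted_spread_bps(bid: float, ask: float) -> float:
    """Quoted bid-ask spread relative to the midpoint, in basis points."""
    if bid <= 0 or ask < bid:
        raise ValueError("expected 0 < bid <= ask")
    mid = (bid + ask) / 2.0
    return (ask - bid) / mid * 10_000


def effective_spread_bps(trade_price: float, bid: float, ask: float) -> float:
    """Effective spread: twice the distance of the trade price from the midpoint.

    This captures trades that execute inside (or outside) the quoted prices.
    """
    if bid <= 0 or ask < bid:
        raise ValueError("expected 0 < bid <= ask")
    mid = (bid + ask) / 2.0
    return 2.0 * abs(trade_price - mid) / mid * 10_000


if __name__ == "__main__":
    # Illustrative quotes only; these are not data from the Report.
    print(quoted_spread_bps(99.95, 100.05))
    print(effective_spread_bps(100.02, 99.95, 100.05))
```

With the illustrative quotes shown, the quoted spread is about 10 basis points and the effective spread about 4. A narrower spread on either measure is usually read as cheaper immediate execution, which is the sense in which liquidity is said to have improved.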

While the above improvements in market quality should not be overstated, they are important, particularly since they counter the belief that HFT provides no useful function in financial markets. Nevertheless, there are concerns relating to market quality which are worthy of mention.

A particular concern:

While overall liquidity has improved, there appears to be greater potential for periodic illiquidity: The nature

of market making has changed, with high frequency traders now providing the bulk of such activity

in both futures and equities. However, unlike designated specialists, high frequency traders typically

operate with little capital, hold small inventory positions and have no obligations to provide liquidity

during periods of market stress. These factors, together with the ultra-fast speed of trading, create the

potential for periodic illiquidity. The US Flash Crash and other more recent smaller events illustrate this

increased potential for illiquidity.

A key message: regulatory changes in practices and policies will be needed to catch up to the new realities of

trading in asset markets. However, caution needs to be exercised to avoid undoing the advantages that HFT

has brought.

4 For a more detailed review of the evidence reported in this section, see Chapter 3 in the Project’s Final Report.


5 Financial stability and computer-based trading⁵

The evidence available to this Project provides no direct evidence that computer-based HFT has increased volatility in financial markets. However, in specific circumstances CBT can lead to significant instability. In particular, self-reinforcing feedback loops, as well as a variety of informational features inherent in computer-based markets, can amplify internal risks and lead to undesired interactions and outcomes. This can happen even in the presence of well-intentioned management and control processes. Regulatory measures for addressing potential instability are considered in Section 7 of this Executive Summary.

Three main mechanisms that may lead to instabilities and which involve CBT are:

• nonlinear sensitivities to change, where small changes can have very large effects, not least through

feedback loops;

• incomplete information in CBT environments where some agents in the market have more, or more

accurate, knowledge than others and where few events are common knowledge;

• internal ‘endogenous’ risks based on feedback loops within the system.

The feedback loops can be worsened by incomplete information and a lack of common knowledge.

A further cause of instability is social: a process known as ‘normalisation of deviance’, where

unexpected and risky events (such as extremely rapid crashes) come to be seen as increasingly normal,

until a disastrous failure occurs.

6 Market abuse⁶ and computer-based trading⁷

Economic research thus far, including the empirical studies commissioned by this Project, provides no direct evidence that HFT has increased market abuse⁸. However, the evidence in the area remains tentative: academic studies can only approximate market abuse as data of the quality and detail required to identify abuse are simply not available to researchers.

This Project has commissioned three empirical studies that find no direct evidence of a link between HFT and market abuse. The main focus of these studies is not on the measurement of market abuse during the continuous phase of trading, however. The Project has reviewed qualitative evidence on perceived levels of manipulation from various sources including interviews with traders and investors, the financial press, UK and international regulatory reports, submissions to regulatory consultations and large-scale surveys of market participants. A new survey of end users was also carried out by the Project⁹.

This qualitative evidence consistently indicates high levels of concern. Claims of market manipulation using HFT techniques are reported by institutional investors such as pension funds and mutual funds in different countries. These claims are, in turn, widely relayed by the financial press. Even if not backed by statistical evidence, these perceptions need to be taken seriously by policy makers because, given that the true extent of abuse is not precisely known, it is perception that is likely to determine the behaviour of liquidity suppliers. High perceived levels of abuse may harm market liquidity and efficiency for all classes of traders.

The qualitative evidence mentioned above is not easy to interpret unambiguously. It is consistent

with three different ‘scenarios’ that are not mutually exclusive:

• High frequency traders exploit their speed advantage to disadvantage other participants in financial terms.

• The growth of HFT has changed order flows in ways that facilitate market abuse by both slow and fast agents (for example, by making ‘predatory trading’ easier).

• Other market developments concomitant with the growth in HFT, but not necessarily brought about by HFT growth, may have contributed to an increase in the perception or actual prevalence of abuse. Fragmentation of liquidity across trading venues is an example.

5 For a more detailed review of the evidence reported in this section, see Chapter 4 in the Project’s Final Report.

6 Here the concern is with market abuse relating to manipulative behaviour, by which a market is temporarily distorted to one party’s advantage. Abuse relating to insider trading is not considered here.

7 For a more detailed review of the evidence reported in this section, see Chapter 5 in the Project’s Final Report.

8 A list of the studies commissioned by the Project may be found in Annex D of the Project’s Final Report.

9 SR1 (Annex D refers).

Regulators and policy makers can influence perceptions, even if definitive evidence on the extent of abuse will not be available to settle the debate.

• Regulators can address the lack of confidence that market participants have in regulators’ ability to detect and prosecute abuse in HFT and fragmented markets. While this may require significant investment in regulatory activity, if progress is made, both the perception and reality of abuse will be reduced; for abusers, even a perceived threat of being caught may be a powerful disincentive.

• More statistical evidence can be produced on the extent of the forms of HFT manipulation most often described by institutional investors¹⁰. This will help to correct or confirm perceptions. It will also be important in guiding regulatory action, as the three scenarios outlined above may have very different policy implications.

Detecting evidence of market abuse from vast amounts of data from increasingly diverse trading platforms

will present a growing challenge for regulators.

To identify abuse, each national regulator will need access to international market data. Otherwise the market abuser can hide by transacting simultaneously in several separately linked markets. In the USA, the Office of Financial Research (OFR) has been commissioned by the Dodd-Frank Act to fund a financial data centre to collect, standardise and analyse such data. There may be a case for a similar initiative to be introduced in Europe.

7 Economic impact assessments of policy measures¹¹

A number of policies related to CBT are being considered by policy makers with the goals of improving market efficiency and reducing the risks associated with financial instability. This Project has commissioned a variety of studies to evaluate these policies, with a particular focus on their economic costs and benefits¹². The key conclusions are set out below.

Policy measures that could be effective:

• Circuit breakers: There is general support for these, particularly for those designed to limit periodic illiquidity induced by temporary imbalances in limit order books. They are especially relevant to markets operating at high speed. Different markets may find different circuit breaker policies optimal, but in times of overall market stress there is a need for coordination of circuit breakers across markets, and this could be a mandate for regulatory involvement. New types of circuit breakers triggered by ex-ante rather than ex-post trading may be particularly effective in dealing with periodic illiquidity. However, further investigation is needed to establish how coordination could best be achieved in the prevailing market structure.

• Tick size policy: This can have a large influence on transaction costs, market depth and liquidity provision. The current approach of allowing each European trading venue to choose its own minimum tick size has merits, but this can result in a race to the bottom between venues. A uniform policy applied across all European trading venues is unlikely to be optimal, but a coherent overall minimum tick size policy applying to subsets of trading venues may be desirable. This coordinated policy could be industry-based, such as the one agreed to recently by the Federation of European Securities Exchanges (FESE) members.
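The idea of a circuit breaker triggered ex-ante can be sketched in a few lines. The example below is an illustrative assumption, not a description of any venue's actual rules: the class name, the static reference price and the percentage band are all hypothetical. It checks a proposed execution price against a band around the reference price before the trade occurs, and halts trading if the price falls outside it.

```python
from dataclasses import dataclass


@dataclass
class PriceBandBreaker:
    """Hypothetical ex-ante circuit breaker based on a static price band."""

    reference_price: float
    band_pct: float  # assumed threshold, e.g. 5.0 means +/-5% of the reference
    halted: bool = False

    def limits(self) -> tuple[float, float]:
        """Return the (lower, upper) prices at which trading may still occur."""
        width = self.reference_price * self.band_pct / 100.0
        return self.reference_price - width, self.reference_price + width

    def check(self, proposed_price: float) -> bool:
        """Return True if a trade at proposed_price may proceed.

        The check runs before execution; a price outside the band halts
        trading, and no further trades pass until the halt is lifted.
        """
        if self.halted:
            return False
        lower, upper = self.limits()
        if proposed_price < lower or proposed_price > upper:
            self.halted = True
            return False
        return True


if __name__ == "__main__":
    breaker = PriceBandBreaker(reference_price=100.0, band_pct=5.0)
    print(breaker.check(103.0))  # inside the band
    print(breaker.check(94.0))   # outside the band: trading halts
    print(breaker.check(100.0))  # rejected while halted
```

A real mechanism would also need rules for resuming trading and for updating the reference price, and, as noted above, for coordinating halts across venues. None of these is modelled here.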

10 ‘Quote stuffing’ or order book ‘layering’ are obvious examples.

11 For a more detailed review of the evidence reported in this section, see Chapter 6 in the Project’s Final Report.

12 A list of the economic impact assessments commissioned by the Project can be found in Annex D of the Project’s Final Report.


Policy measures that are likely to be problematic:

• Notification of algorithms: The implementation of this, even if feasible, would require excessive costs for both firms and regulators. It is also doubtful that it would substantially reduce the risk of market instability due to errant algorithmic behaviour.

• Imposing market maker obligations and minimum resting times on orders: The former issue runs

into complications arising from the nature of high frequency market making across markets, which

differs from traditional market making within markets. Requirements to post two-sided quotes may

restrict, rather than improve, liquidity provision. Similarly, minimum resting times, while conceptually

attractive, can impinge upon hedging strategies that operate by placing orders across markets and

expose liquidity providers to increased ‘pick-off risk’ due to the inability to cancel stale orders.

• Order-to-execution ratios: This would be a blunt instrument to reduce excessive message traffic and cancellation rates. While it could potentially reduce undesirable manipulative strategies, it may also curtail beneficial strategies. There is not sufficient evidence at this point to ascertain these effects, and so caution is warranted. Explicit fees charged by exchanges on excessive messaging, as well as greater regulatory surveillance geared to detect manipulative trading practices, may be more desirable approaches to deal with these problems.

• Maker-taker pricing: The issue is complex and is related to other issues like order routing, priority

rules and best execution. Regulatory focus on these related areas seems a more promising way of

constraining any negative effects of maker-taker pricing than direct involvement in what is generally

viewed as an exchange’s business decision.

• The virtual central limit order book (CLOB): The introduction of competition between trading

venues brought about by Markets in Financial Instruments Directive (MiFID) has resulted in more

choices for investors and, in many cases, improved market quality, but it has also led to greater

complexity and risk. The virtual CLOB it has created is still evolving and improving, but its current

structure falls short of a single integrated market. This raises a number of issues for both individual

exchange and market behaviour.

• Constraining internalisation or, more generally, dark trading: Off-exchange trading can be mutually beneficial for all parties involved, especially where large orders are involved. However, the trend away from pre-trade transparency cannot be continued indefinitely without detrimental effects on the public limit order book and price discovery. Constraining these activities within a range that does not adversely affect price discovery but does allow for beneficial trading is important but difficult. Evidence gathered from European markets is too limited to give satisfactory guidance.

• Call auctions: These are an alternative trading mechanism that would eliminate most of the

advantage for speed present in modern electronic markets. They are widely used already in equity

markets at open and close and following a trading halt, although no major market uses them

exclusively. To impose call auctions as the only trading mechanism seems unrealistic and draconian.

There are serious coordination issues related to hedging strategies that would make this policy

undesirable.
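To make the order-to-execution ratio concrete, the sketch below computes it for a single participant and tests it against a cap. It is illustrative only: the function names and the cap value are hypothetical, and the sketch assumes the ratio is defined as order messages submitted divided by orders executed. Actual definitions vary, for example in whether modifications and cancellations count as messages.

```python
import math


def order_to_execution_ratio(messages_submitted: int, orders_executed: int) -> float:
    """Ratio of order messages submitted to orders executed.

    Assumption: every new order, modification and cancellation counts as one
    message. A participant with messages but no executions has an infinite ratio.
    """
    if messages_submitted < 0 or orders_executed < 0:
        raise ValueError("counts must be non-negative")
    if orders_executed == 0:
        return math.inf if messages_submitted > 0 else 0.0
    return messages_submitted / orders_executed


def breaches_cap(messages_submitted: int, orders_executed: int, max_ratio: float) -> bool:
    """Return True if the participant's ratio exceeds the (hypothetical) cap."""
    return order_to_execution_ratio(messages_submitted, orders_executed) > max_ratio


if __name__ == "__main__":
    # Illustrative counts only; the cap of 100 is not a figure from the Report.
    print(order_to_execution_ratio(500, 10))
    print(breaches_cap(500, 10, max_ratio=100.0))
    print(breaches_cap(500, 2, max_ratio=100.0))
```

The bluntness described above is visible in the sketch: the cap sees only two counts, so it cannot distinguish a market maker who frequently updates quotes from a participant who floods the order book to mislead others.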

Two words of caution: Whilst the above conclusions are consistent with the currently available evidence, further empirical study is desirable for some of the policy measures in particular. It should also be recognised that some of the above individual policy options interact with each other in important ways. For example, the presence or absence of circuit breakers affects most other measures, as do minimum tick sizes. Decisions on individual policies should not therefore be taken in isolation, but should take account of such important interactions¹³.


13 See the Project’s Final Report (Chapter 6, Section 6.12) and also the supporting evidence papers which were commissioned

(Annex D of the Project’s Final Report refers).


8 Computers and complexity

Over coming decades, the increasing use of computers and information technology in financial systems is likely to make them more, rather than less, complex. Such complexity will reinforce information asymmetries and cause principal/agent problems, which in turn will damage trust and make the financial systems sub-optimal.

Constraining and reducing such complexity will be a key challenge for policy makers. Options include

requirements for trading platforms to publish information using an accurate, high resolution, synchronised

timestamp. Improved standardisation of connectivity to trading platforms could also be considered.

However, there is no ‘magic bullet’ to address this issue. Policy makers will need an integrated approach

based on improved understanding of financial systems. This will need to be achieved through:

• Improved post-trade transparency: The challenge of ensuring adequate dissemination and storage

of trading data to enable market abuse to be identified provides an important example of where

improvements need to be considered.

• Analysis: Making sense of disclosed information and developing a better understanding of the

financial system will be critical. This implies the need to harness the efforts of researchers¹⁴.

A further proposal that is sometimes made is that (various categories of) agents should only be allowed

to hold or issue instruments which have been approved by the authorities in advance. This contrasts

with the more common position that innovation should be allowed to flourish, but with the authorities

retaining the power to ban the uses of instruments where they consider evidence reveals undesirable

effects. The former stance, however, not only restricts innovation: such official approval

may well also have unintended consequences. Furthermore, the effectiveness of such official approval

is debatable. Officials have no more, and probably less, skill in foreseeing how financial instruments

will subsequently fare than credit rating agencies or market agents. Indeed, many, possibly all, of the

instruments now condemned in some quarters as having played a part in the recent global financial

crisis would, at an earlier time, have probably been given official approval.

A corrective step that could, and should, be taken is to simplify (electronic) financial systems by the

application of greater standardisation, particularly in the form of accurate, high resolution, synchronised

timestamps. CBT, operating on many trading platforms, has led to a vast expansion of data, which are

often not standardised, nor easily accessible to third parties (for example, regulators and academics) for

analysis and research. The relevant authorities should consider following the US example and establish

a European Financial Data Centre to collect, standardise and analyse such data.
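
To illustrate why synchronised timestamps are an enabling tool for analysis, the hypothetical Python sketch below merges event records from several trading platforms into a single timeline. It assumes that every platform stamps its events against one shared, synchronised clock; the `Event` record, its field names and the `merged_timeline` function are illustrative assumptions and do not describe any existing data standard.

```python
import heapq
from typing import Iterable, Iterator, NamedTuple


class Event(NamedTuple):
    timestamp_ns: int  # nanoseconds since a shared epoch (assumed synchronised)
    platform: str      # hypothetical platform identifier
    description: str


def merged_timeline(*feeds: Iterable[Event]) -> Iterator[Event]:
    """Merge per-platform feeds, each already sorted by time, into one timeline."""
    return heapq.merge(*feeds, key=lambda e: e.timestamp_ns)


feed_a = [Event(1_000, "A", "order submitted"), Event(3_000, "A", "trade")]
feed_b = [Event(2_000, "B", "order cancelled")]
timeline = list(merged_timeline(feed_a, feed_b))
```

The merged sequence is only meaningful if the clocks behind `timestamp_ns` agree. Without accurate synchronisation, the apparent ordering of events across platforms could differ from the true ordering, which is the difficulty that standardised timestamps are intended to remove.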

9 Conclusions – key priorities for action¹⁵

While the effects of CBT on financial markets have been the topic of some controversy in recent years, analysis

of the available evidence has shown that CBT has led to benefits to the operation of markets, notably relating

to liquidity, transaction costs and the efficiency of market prices¹⁶. Against the background of ever greater

competition between markets, it is highly desirable that any new policies or market regulation preserve

these benefits.

However, this Project has also highlighted legitimate concerns that merit the close attention of policy makers,

particularly relating to the possibility of instabilities occurring in certain circumstances, and also periodic

illiquidity¹⁷. In view of the critical importance of financial markets for global growth and prosperity, the

following priorities for action are suggested:

14 See Section 9 of this Executive Summary.

15 For a more detailed review of this section, see Chapter 8 in the Project’s Final Report.

16 See Section 4 of this Executive Summary.

17 See Section 5 of this Executive Summary.


A. Limiting possible future market disturbances:

A.1 European authorities¹⁸, working together, and with financial practitioners and academics, should assess

(using evidence-based analysis) and introduce mechanisms for managing and modifying the potential adverse

side-effects of CBT and HFT. Section 7 of this Executive Summary sets out analysis of ten individual

policy options, and provides advice on which are supported most by the available evidence. It is also

important that such regulatory measures are considered together, not individually, in view of important

interactions which may exist between some of them.

A.2 Coordination of regulatory measures between markets is important and needs to take place at two levels:

• Regulatory constraints involving CBT in particular need to be introduced in a coordinated manner across

all markets where there are strong linkages.

• Regulatory measures for market control must also be undertaken in a systematic global fashion

to achieve in full the objectives they are directed at. A joint initiative from a European Office of

Financial Research and the US Office of Financial Research (OFR), with the involvement of other

international markets, could be one option for delivering such global coordination.

A.3 Legislators and regulators need to encourage good practice and behaviour in the finance and software

engineering industries. This clearly involves the need to discourage behaviour in which increasingly risky

situations are regarded as acceptable, particularly when failure does not appear as an immediate result¹⁹.

These recognise that financial markets are essentially complex ‘socio-technical’ systems, in which both

humans and computers interact: the behaviour of computers should not be considered in isolation.

A.4 Standards should play a larger role. Legislators and regulators should consider implementing accurate,

high resolution, synchronised timestamps because this could act as a key enabling tool for analysis

of financial markets. Clearly it could be useful to determine the extent to which common gateway

technology standards could enable regulators and customers to connect to multiple markets more

easily, making more effective market surveillance a possibility.

A.5 In the longer term, there is a strong case to learn lessons from other safety-critical industries, and to use

these to inform the effective management of systemic risk in financial systems. For example, high-integrity

engineering practices developed in the aerospace industry could be adopted to help create safer

automated financial systems.

B. Making surveillance of financial markets easier:

B.1 The development of software for automated forensic analysis of adverse/extreme market events would

provide valuable assistance for regulators engaged in surveillance of markets. This would help to address

the increasing difficulty that people have in investigating events.

C. Improving understanding of the effects of CBT in both the shorter and longer term:

C.1 Unlocking the power of the research community has the potential to play a vital role in addressing the

considerable challenge of developing better evidence-based regulation relating to CBT risks and benefits and

also market abuse in such a complex and fast-moving field. It will also help to further address the present

controversy surrounding CBT. Suggested priorities include:

• Developing an ‘operational process map’: this would detail the processes, systems and interchanges

between market participants through the trade life cycle, and so help to identify areas of high

systemic risk and broken or failing processes.

• Making timely and detailed data across financial markets easily available to academics, but recognising

the possible confidentiality of such data.


18 While several of these potential actions for stakeholders are framed within the European context, they will also be relevant to

stakeholders in other parts of the world.

19 Behaviour which accepts increasingly risky situations in the absence of adverse effects is called ‘normalisation of

deviance’. See Section 5 of this Executive Summary.


C.2 The above measures need to be undertaken on an integrated and coordinated international basis in

order to realise the greatest added value and efficiency. One possible proposal would be to establish a

European Financial Data Centre.

In conclusion:

It is hoped that the analysis and arguments contained in the Foresight Final Project Report, together

with over 50 commissioned evidence papers which underpin it, will assist policy makers, regulators

and market practitioners in their current consideration of CBT. In this context, special thanks are

due to the 150 or so leading and independent experts from over 20 countries who have been involved

in this undertaking.

This Executive Summary and the underlying Project Report provide an independent view based on the best

available science and evidence. They do not constitute the views or policy of the UK or any other government.


1 Introduction

1.1 The aim of this Project

This Project has two principal aims. First, looking out to 2022, it seeks to determine how

computer-based trading in financial markets could evolve and, by developing a robust understanding of its

effects, to identify potential risks and opportunities that this could present, notably in terms of financial

stability¹ but also in terms of other market outcomes such as volatility², liquidity³, price efficiency and

price discovery⁴. Secondly, drawing upon the best available scientific and other evidence, the Project

aims to provide advice to policy makers, regulators and legislators on the options for addressing those

risks and opportunities.

1.2 Why the Project was commissioned

Computer-based trading (CBT)⁵ has grown substantially in recent years, due to fast-paced

technological developments and their rapid uptake, particularly in equity markets. For example, possibly

30% of the UK’s equity trading volume is now generated through high frequency trading (HFT), while

in the USA this figure is possibly over 60%⁶. CBT is therefore already transforming the ways in which

financial markets operate.

Inevitably, substantial changes to the functioning of financial markets, actual or perceived, attract

considerable attention, not least because of their potential impact on market confidence, the operation

of businesses and the health and growth of economies.

HFT attracts particular controversy. There has been a continuing debate about the extent to which

HFT improves or degrades the functioning of financial markets, and also its influence on market

volatility and the risk of instabilities. Indeed, such trading has been implicated by some as a contributory

factor in the Flash Crash of 6 May 2010 in which one trillion dollars temporarily evaporated from

US markets⁷. However, the wider debate on HFT and CBT has been hampered by the availability of

relatively little evidence, and a lack of analysis.

The controversy concerning the effect of CBT on financial systems has the potential to grow for

several reasons. Relentless technological developments in both hardware and in trading algorithms,

together with other drivers of change⁸, continue to influence the structure of markets, inevitably

creating winners and losers at all levels. They are also leading to increases in complexity as well as

new dynamics, making markets ever harder to understand and to regulate, particularly in view of the

rapid pace of change. These developments are fuelling an urgent need to gain a better understanding

of a range of issues, most notably concerning their effect on systemic risk of financial instability and

its management. However, other important concerns relate to the effect of CBT on the efficiency of

markets which has particularly divided opinion; the evolving potential for market abuse, especially its

1 Financial market stability refers to the lack of extreme movements in asset prices over short time periods. A glossary of terms

and a list of acronyms used in this Report can be found in Annex C.

2 Volatility is defined here as variability of an asset’s price over time, often measured in percentage terms.

3 Liquidity is defined here as the ability to buy or sell an asset without greatly affecting its price. The more liquid the market, the

smaller the price impact of sales or purchases. For a more detailed description see Section 3.3.1.

4 Price efficiency and price discovery – pricing is efficient when an asset’s price reflects the true underlying value of an asset;

price discovery refers to the market process whereby new information is impounded into asset prices.

5 See Box 1.1 for a definition of CBT.

6 Accurate estimates of the volume of high frequency trading are difficult to obtain, and in any case are contingent on the

precise definition used. For estimates, see Kaminska (2011), Kaminska (2009) and

http://www.tradersmagazine.com/news/high-frequency-trading-benefits-105365-1.html?zkPrintable=true Accessed: 3 September 2012.

7 Chapter 4 reviews the available evidence for the influence of HFT in the Flash Crash of 6 May 2010.

8 See Box 1.3 and Figure 1.1.


detection and regulation; and the relationship of CBT with dark pools and changing market institutions

more generally.

For these reasons, CBT is currently attracting the close attention of policy makers and regulators

worldwide. For example:

• In Europe, HFT has been placed near the top of the regulatory agenda, with a wide range of

measures on CBT being debated in the European Union (EU) Parliament and the EU Commission

within the Markets in Financial Instruments Directive (MiFID) II process. Indeed, some parties

have mooted measures that could have very far-reaching implications for markets, as they could

substantially constrain the future of CBT within Europe.

• In the USA, a number of measures are being proposed under the Dodd-Frank Act.

• A number of countries across the world, from Latin America to Asia, have adopted measures

related to CBT as exchanges turn electronic.

Against this background of rapid change and the urgent needs of regulators, the relative lack of

evidence and the prevalence of controversy over consensus is a major concern. Regulation that is not

informed by evidence and analysis risks making matters worse rather than better. It was therefore

decided to commission this Foresight Project to inform a broad audience of policy makers, regulators

and other stakeholders around the world.

Box 1.1: Definition of computer-based trading

Computer-based trading (CBT) refers to the trading system itself. Financial institutions use CBT

systems in a range of trading strategies, of which high frequency trading (HFT)⁹ and algorithmic

trading (AT) are two types. However, the use of a CBT system by a financial institution does not

necessarily mean that it is a user of one or other of these strategies.

A useful taxonomy of CBT systems identifies four characteristics that can be used to classify CBT

systems¹⁰:

1) CBT systems may trade on an agency basis (i.e. attempting to get the best possible execution

of trades on behalf of clients) or a proprietary basis (i.e. trading using one’s own capital).

2) CBT systems may adopt liquidity-consuming (aggressive) or liquidity-supplying (passive)

trading styles.

3) CBT systems may engage in either uninformed or informed trading.

4) A CBT algorithm may generate the trading strategy itself or only implement a decision taken by

another market participant¹¹.

A more detailed definition may be found in Annex C.
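
The four characteristics in the taxonomy above can be read as four independent two-way choices. The Python sketch below records them as a simple data structure; it is purely illustrative, and the class names, member names and the `all_profiles` helper are hypothetical labels introduced here rather than terminology from DR5 or Annex C.

```python
from dataclasses import dataclass
from enum import Enum
from itertools import product


class Basis(Enum):
    AGENCY = "agency"            # best execution on behalf of clients
    PROPRIETARY = "proprietary"  # trading with the firm's own capital


class Style(Enum):
    LIQUIDITY_CONSUMING = "aggressive"
    LIQUIDITY_SUPPLYING = "passive"


class Information(Enum):
    UNINFORMED = "uninformed"
    INFORMED = "informed"


class Role(Enum):
    GENERATES_STRATEGY = "generates the trading strategy"
    IMPLEMENTS_DECISION = "implements a decision taken elsewhere"


@dataclass(frozen=True)
class CBTSystemProfile:
    basis: Basis
    style: Style
    information: Information
    role: Role


def all_profiles():
    """Enumerate every combination of the four characteristics."""
    return [
        CBTSystemProfile(b, s, i, r)
        for b, s, i, r in product(Basis, Style, Information, Role)
    ]


# Hypothetical example: an agency execution algorithm working a client order passively.
example = CBTSystemProfile(
    Basis.AGENCY,
    Style.LIQUIDITY_SUPPLYING,
    Information.UNINFORMED,
    Role.IMPLEMENTS_DECISION,
)
```

Treating the characteristics as independent gives sixteen possible profiles, which underlines the point made in the box that using a CBT system does not by itself imply any one trading strategy.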


9 For a definition of HFT please see Chapter 3.

10 DR5 (Annex D refers).

11 Please refer to Annex C for a comprehensive glossary and list of acronyms.


1.3 A robust, international and independent approach

This Foresight Project has taken a broad approach, drawing upon a wide range of disciplines, including

economics, computer science, sociology and physics. In so doing, it has engaged with over 150 leading

independent academics from more than 20 countries¹².

The Project has drawn upon a wider body of academic literature, but has also commissioned over 50

papers and studies, particularly where it was considered important to address gaps in the evidence

base, or to collate and assess existing studies. A full list of commissioned work is presented in Annex D:

all of this information is freely available from www.bis.gov.uk/foresight. Such work includes reviews of

drivers of change, economic impact assessments of regulatory measures and the results of workshops

held in Singapore, New York and London to gather the views of leading industry practitioners,

economists and technologists on future scenarios. A sociological study and a survey of end users

were also undertaken to understand the impact of computer trading on institutional investors. The

commissioned work has been peer reviewed internationally by independent reviewers (except for

certain workshop reports and a survey).

Throughout, the Project has been guided by a group of leading academics and senior industry

practitioners. It has also benefited from the advice of an international group of high-level stakeholders,

which has provided advice at critical stages of the Project. A full list of the 500 or so experts,

academics and stakeholders who have been involved in the Project is provided in Annex A.

Box 1.2: An independent view

While the Project has been managed by the UK Foresight programme under the direction of

Sir John Beddington, the UK Chief Scientific Adviser, its findings are entirely independent of

the UK Government. As such, the findings do not represent the views of the UK or any other

government, or the views of any of the organisations that have been involved in the work.

1.4 Project scope

The Project looks ten years into the future to take a long-term and strategic view of how CBT in

financial markets might evolve, and how it might act within the context of other drivers of change, to

affect a range of market functions including: financial stability, liquidity, price efficiency and discovery,

transaction costs, technology and market abuse. While the future is inherently uncertain, major forces

driving change can be identified. A key driver of change is technology and this report explores how

technology and other drivers of change will interact to influence the development and impact of CBT.

The potential consequences of a range of possible regulatory measures are also assessed.

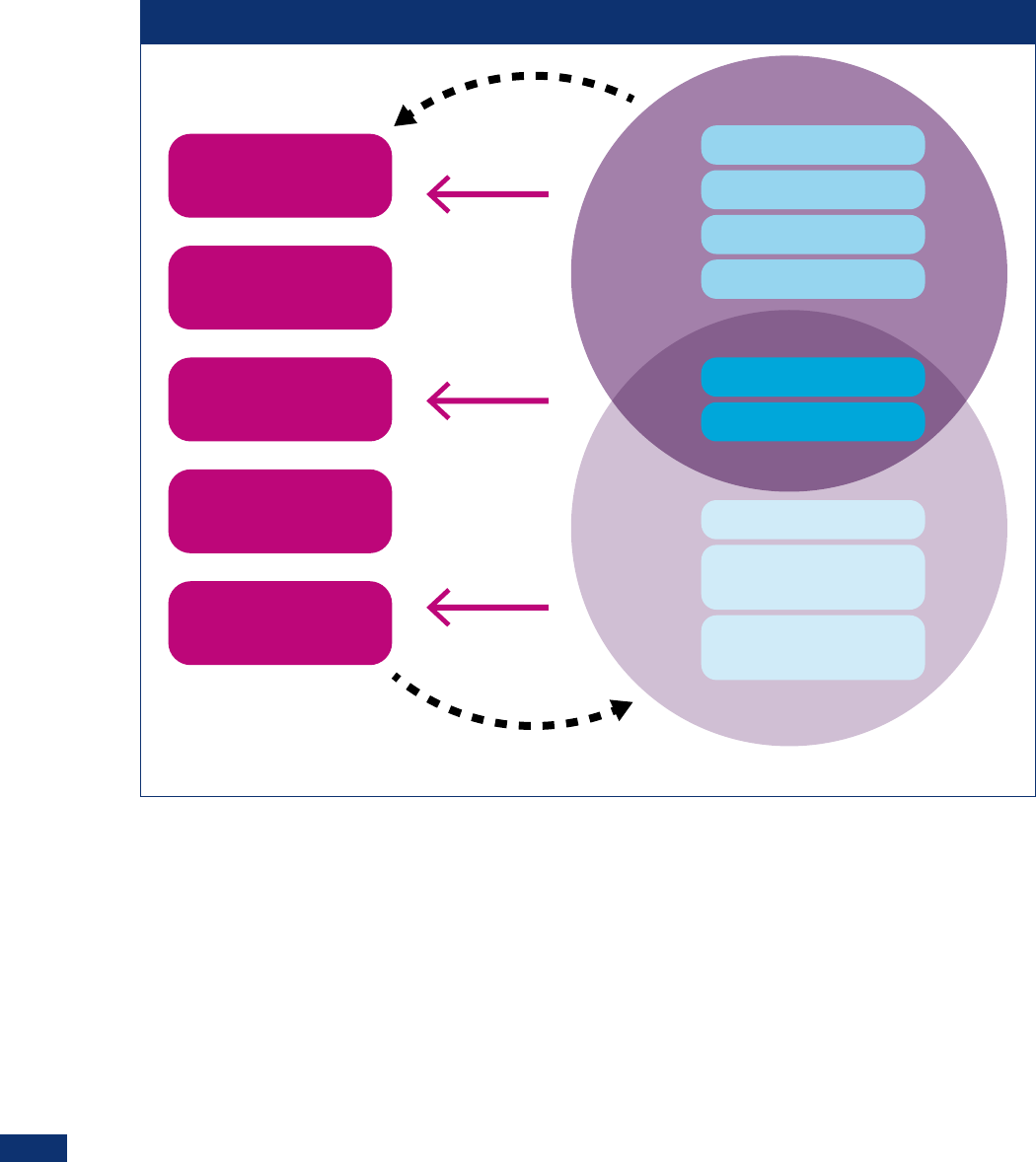

Nine broad classes of drivers affecting financial markets have been considered. These were identified

in three international industry workshops (held in London, Singapore and New York), and a workshop

of chief economists in London. These drivers are briefly explained in Box 1.3 and are presented

diagrammatically in Figure 1.1. In particular, this figure shows that all of the drivers affect the key aspects

of market function, which in turn feed back to affect particular drivers¹³.

While the work has taken a global view of drivers of change and markets, it has paid particular attention

to the evolving use of CBT in Europe. However, much of the analysis will nevertheless be of interest to

policy makers and markets in other parts of the world.

12 Please refer to Annex A for a list of all the individuals involved in this Project.

13 Please refer to Annex E for discussion on how drivers of change could play out in alternative future scenarios.


The analysis in this Report focuses particularly on high frequency and algorithmic trading. Its aim is to

provide advice to policy makers who are taking decisions today on the regulation of CBT in markets

– so that those decisions are well founded and are more likely to be robust to future uncertainties.

However, in taking a ten year view, the Project also assesses what actions need to be implemented to

address systemic issues, such as financial instability, in the longer term. Some of these actions may, by

their nature, take longer for policy makers to agree and implement.

The Project has taken an evidence-based approach wherever possible. However, in such a fast-moving

field, gaps in both understanding and evidence are inevitable and these are highlighted in the text

where appropriate. The most important gaps for informing regulation are identified in the concluding

chapter. The Project has also explored the current perceptions of leading institutions about the

impact of CBT in markets and importantly, it has evaluated the extent to which such perceptions are

supported by the available evidence.

Figure 1.1: Key drivers of change

[Figure: the key drivers of change (technology, asset classes, competition, geopolitics, regulation, demographics, global economic cycles, loss/change of riskless (reference) assets, and change in (dis)intermediation), identified through the industry workshops and the chief economists workshop, shown alongside the market outcomes they affect (financial stability, liquidity, price discovery/efficiency, transaction costs and market integrity).]


Box 1.3: Important future drivers of change

The following provides a brief summary of the nine broad classes of drivers identified during three

international industry workshops (London, Singapore and New York), and a workshop of chief

economists in London¹⁴.

Regulation: Regulation will have an important and uncertain influence on financial markets, as both

a driver and a consequence of financial market changes. As a driver, it may change the allocation

of investment across assets and exchanges, and across institutions and investment models or

strategies. Future regulation could be more or less coercive, informed by big data analytics,

sophisticated models, heavy- or light-touch. There could be fragmentation at the global level,

possibly linked to resurgent protectionism. Demand for regulation will tend to have an inverse

relationship with levels of trust in the market.

Demographics: In the West over the next decade, the large number of people retiring will drive

investment shifts, for example in the demand for retail rather than mutual funds, or for fixed

income rather than equities.

Global economic cycles: The economic cycle appears to have been perturbed by the nature of the

current recession. The dynamics of growth, employment, savings, trade, and leverage may return

to previous cyclical behaviour or may instead follow a new pattern (prolonged recession, chaotic

behaviour). Linked to this, global imbalances may persist or resolve. These factors will affect the

demands placed on financial markets in terms of volume, asset classes and acceptable levels of risk

and return. Global macroeconomic dynamics may also affect the process of globalisation and the

relative importance of financial and ‘real’ markets.

Geopolitics: Growth rates over the next decade will powerfully influence the structure of future

markets. A strong world economy will allow technological experimentation and new connections

among geopolitical regions and groupings. A faltering economy or, worse, one in a tailspin, would

be likely to lead to national retrenchment.

Technology¹⁵: This may lead to the creation of highly distributed trading platforms on which large

numbers of individuals carry out transactions. Individual mobile phone handsets, possibly receiving

live news and data feeds, may be used for trading; institutional trading strategies may also be

influenced by communications on social networks. A new topology of either highly dispersed

exchanges or of interlinked international exchanges could take shape.

Loss/change of riskless (reference) assets: The current global asset ‘ecosystem’ uses the return to

riskless assets as a reference point for pricing risky assets. With sovereign debt now perceived to

carry risk, this point of reference may be on the verge of disappearing. The behaviour of financial

markets without a commonly recognised riskless asset is uncertain, and it is not clear whether a

new common reference point will emerge. A connected issue is the link between sovereign debt

and national currencies, and the role of the dollar as the global reserve currency.

Asset classes: Products focusing on levels of risk exposure rather than dividends may become

more prominent; investment may shift from listed equity or derivatives towards synthetic

products and spread-betting. These may focus on the state-dependent pattern of returns rather

than ownership, and are likely to include more ‘exotic’ instruments. CBT may lead to the creation

of new financial instruments for asset classes that are not currently directly traded using HFT or

algorithmic tools.

14 See also Figure 1.1. More detailed discussion of these drivers can be found in the workshop reports (Annex D refers).

15 In view of the importance of technology as a key driver for computer-based trading, Chapter 2 provides a more detailed

discussion. See also DR3 (Annex D refers).


Competition: Over and above technological changes, innovation in business models will shape

competitive dynamics. Features analogous to Amazon’s ‘other products you may like’ button may

be introduced into institutional trading products. Market shares and returns will be driven by

content, financial products and costs. Firms may unbundle services to generate more commissions

or rebundle them to enhance investor lock-in. Exchanges are already increasing profits by

proposing value-added components; they could increasingly focus on content-driven models.

Change in (dis)intermediation: Technological and financial market changes are altering both the size

and role of intermediaries. The pace, direction and implications of these shifts will depend on

whether such entities can operate across borders, the depth of funding that they influence and

their impact on specific assets or investors. These developments are linked to CBT and HFT via

the arbitrage role of intermediaries. They may be implemented via CBT/HFT by the continuum

of CBT from algorithmic trading to HFT and by the degree to which the implications of CBT are

different for the price trading and asset management functions of intermediaries.

1.5 Structure of the Report

This Report comprises eight chapters. Chapter 1 provides the rationale for undertaking the

Project, sets out the aims and objectives, and its approach in terms of scope and content. In Chapter 2

technological developments are reviewed in detail, since this is a particularly important driver of change

affecting CBT. Their recent impact is reviewed and technology advances likely in the next ten years (for

example, cloud computing and custom silicon) are explored. In Chapter 3 evidence on the impact of

CBT on key indicators of market quality including liquidity, price discovery/efficiency and transaction

costs is assessed. The chapter begins by examining the evidence for past effects, and then considers

how impacts could change in the future, recognising that this will be contingent upon the mix of future

regulatory measures in place.

Chapter 4 examines the evidence for the impact of CBT on financial stability. Evidence for past effects

is reviewed and particular attention is given to the impact of self-reinforcing feedback loops in CBT

which can amplify internal risks and lead to undesirable interactions and outcomes in financial markets.

The concept of ‘normalisation of deviance’, where unexpected and risky events come to be seen as

ever more normal, and its implications for financial stability are also explored.

The issue of market abuse is examined in Chapter 5 from economic, regulatory and user perspectives.

It assesses the current impact of market abuse, and evidence on the perceptions of abuse using survey

data commissioned by the Project. Consideration is also given to how the relationship between market

abuse and CBT might evolve in the next ten years and possible courses of action to address the issue.

In Chapter 6 the potential economic impact of individual regulatory measures on stability, volatility

and also liquidity, price discovery/efficiency and transaction costs is reviewed using a variety of new

studies commissioned by the Project. Benefits as well as costs and risks are assessed. The measures

are diverse, ranging inter alia from notification of algorithms, circuit breakers, minimum tick size

requirements and market maker obligations, to minimum resting times and periodic auctions.

How long-term strategic factors, notably CBT and HFT, can affect trust and

confidence in markets is discussed in Chapter 7. Particular emphasis is given to the role of rising

complexity, enabled by information technology, in financial arrangements, transactions and processes

in recent decades, and also the supply of credit, in influencing trust. The chapter asks how complexity can be

constrained and reduced and highlights the potential for a greater role for standards in addressing

these issues.

Finally, Chapter 8 concludes the Report by drawing out the top level advice for policy makers, both

for the short term and long term. In the latter case, priorities for research and better data collection

are suggested.


2 The impact of technology

developments

Key findings

Advances in the sophistication of ‘robot’

automated trading technology, and reductions in its cost,

are set to continue for the foreseeable future.

Today’s markets involve human traders interacting with

large numbers of robot trading systems, yet there is

very little scientific understanding of how such markets

can behave.

For time-critical aspects of automated trading, readily

customisable, special purpose silicon chips offer major

increases in speed; where time is less of an issue, remotely

accessed cloud computing services offer even greater

reductions in cost.

Future trading robots will be able to adapt and learn with

little human involvement in their design. Far fewer human

traders will be needed in the major financial markets of

the future.



2.1 Introduction

The present-day move to ever higher degrees of automation on the trading floors of exchanges, banks

and fund management companies is similar to the major shift to automated production and assembly

that manufacturing engineering underwent in advanced economies during the 1980s and 1990s. This

trend is likely to have a corresponding effect on the distribution of employment in the financial sector.

Already, a very large proportion of transactions in the markets are computer generated and yet the

characteristics and dynamics of markets populated by mixtures of human traders and machine traders

are poorly understood. Moreover, the markets sometimes behave in unpredictable and undesirable

ways. Few details are known of the connectivity network of interactions and dependencies in

technology enabled financial markets. There is recognition that the current global financial network

needs to be mapped in order to gain an understanding of the current situation. Such a mapping

exercise would enable the development of new tools and techniques for managing the financial

network and exploring how it can be modified to reduce or prevent undesirable behaviour¹. New

technology, new science and engineering tools and techniques will be required to help map, manage

and modify the market systems of the future.

2.2 How has financial market technology evolved?

The technology changes of the past five years are best understood as a continuation of longer term

trends. Cliff et al. (DR3)² relate the history of technology in the financial markets, briefly covering the

18th, 19th and 20th centuries, and then explore in more detail the rapid and significant changes which

have occurred at the start of the 21st century³.

The high speed processing of data and high speed communication of data from one location to another

have always been significant priorities for the financial markets. Long before the invention of computers

or pocket calculators, traders with fast mental arithmetic skills could outsmart their slower witted

competitors. In the 19th century, communication of financially significant information by messengers

on horseback was replaced by the faster ‘technology’ of carrier pigeons; then pigeons were made

redundant by telegraph; and then telegraph by telephones. In the last quarter of the 20th century,

the shift to computer-based trading (CBT) systems meant that automated trading systems could start

to perform functions previously carried out only by humans: computers could monitor the price of a

financial instrument (for example, a share price) and issue orders to buy or sell the instrument if its

price rose above or below specified ‘trigger’ prices. Such very simple ‘program trading’ systems were

widely blamed for exacerbating the Black Monday crash of October 1987, the memory of which, for

several years afterwards, dampened enthusiasm for allowing computers to issue buy or sell orders in

the markets. Nevertheless, the real cost of computer power continued to halve roughly once every

two years (the so-called Moore’s Law effect), until by the late 1990s it was possible to buy, at no extra

cost in real terms, computers over 50 times more powerful than those used in 1987. All this extra

computer power could be put to use in implementing far more sophisticated processing for making

investment decisions and for issuing structured patterns of orders to the markets.
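
The trigger-price rule described above can be sketched in a few lines of Python. This is a minimal illustration only, not a reconstruction of any system in use in 1987. The names `TriggerRule`, `decide` and `monitor` are hypothetical, and the direction of the rule (buy above the upper trigger, sell below the lower trigger) is an assumption made for the example.

```python
from dataclasses import dataclass
from typing import Iterable, List, Optional, Tuple


@dataclass(frozen=True)
class TriggerRule:
    """Hypothetical trigger-price rule (illustrative names, not from the Report)."""
    buy_above: float   # assumed: issue a buy order when the price is above this level
    sell_below: float  # assumed: issue a sell order when the price is below this level


def decide(price: float, rule: TriggerRule) -> Optional[str]:
    """Return 'BUY', 'SELL' or None for a single observed price."""
    if price > rule.buy_above:
        return "BUY"
    if price < rule.sell_below:
        return "SELL"
    return None


def monitor(prices: Iterable[float], rule: TriggerRule) -> List[Tuple[int, str, float]]:
    """Scan a price series and record an order for every tick beyond a trigger."""
    orders = []
    for tick, price in enumerate(prices):
        action = decide(price, rule)
        if action is not None:
            orders.append((tick, action, price))
    return orders
```

For instance, `monitor([100.0, 104.0, 106.0, 99.0, 94.0, 96.0], TriggerRule(buy_above=105.0, sell_below=95.0))` would record a buy at the third price and a sell at the fifth. The rule looks only at the current price and takes no account of why the price moved.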

By the end of the 20th century, as the real cost of computing continued to fall at a dramatic pace,

the management of investment funds had become an increasingly technical field, heavily dependent

1 The need to map, manage, and modify the financial network is the central message of a speech Rethinking the Financial Network

by Haldane (2009).

2 DR3 (Annex D refers).

3 For other accounts of the recent history of technology developments in the financial markets, the following three texts are

particularly recommended: Angel et al. (2010), Gomber et al. (2011) and Leinweber (2009). For a recent discussion of high

frequency trading, including interviews with leading practitioners, see Perez (2011) and Arnuk & Saluzzi (2012).


on computationally intensive mathematical models to manage portfolio risk (i.e. to ‘hedge’ the risk in

the fund’s holdings). Manual methods for hedging risk were used for decades before the deployment

of electronic computers in fund management, but as the real cost of computing fell, and the speed

and capacity of computers increased, so computers were increasingly called upon to calculate results

that could guide the fund manager’s decisions to buy or sell, and to go ‘long’ or ‘short’. In this period,

growing numbers of funds based their investment decisions on so-called statistical arbitrage (commonly

abbreviated to ‘stat arb’). One popular class of stat arb strategies identifies long-term statistical

relationships between different financial instruments, and trades on the assumption that any deviations

from those long-term relationships are temporary aberrations; that the relationship will revert to its

mean in due course. One of the simplest of these ‘mean-reversion’ strategies is pairs trading, where

the statistical relationship which is used as a trading signal is the degree of correlation between just

two securities. Identifying productive pair-wise correlations in the sea of financial market data is a

computationally demanding task, but as the price of computer power fell, it became possible to

attempt increasingly sophisticated stat arb strategies.
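
The mean-reversion idea behind pairs trading can be illustrated with a short Python sketch. It makes several assumptions that are not in the text above. It uses the price spread between two securities, standardised as a z-score, as the trading signal rather than a correlation coefficient. It computes the long-run mean and standard deviation over the whole sample, where a rolling window would be more realistic. The function names, the `hedge_ratio` parameter and the entry threshold of 2.0 are all hypothetical.

```python
from statistics import mean, stdev
from typing import List, Sequence


def spread_zscores(prices_a: Sequence[float], prices_b: Sequence[float],
                   hedge_ratio: float = 1.0) -> List[float]:
    """Standardise the spread a - hedge_ratio * b against its own sample history."""
    if len(prices_a) != len(prices_b):
        raise ValueError("price series must have equal length")
    if len(prices_a) < 2:
        raise ValueError("need at least two observations")
    spread = [a - hedge_ratio * b for a, b in zip(prices_a, prices_b)]
    mu = mean(spread)        # assumed long-run level of the relationship
    sigma = stdev(spread)    # sample standard deviation of the spread
    if sigma == 0:
        raise ValueError("spread is constant; no deviation to trade on")
    return [(s - mu) / sigma for s in spread]


def pairs_signal(z: float, entry: float = 2.0) -> str:
    """Bet on reversion: sell the relatively expensive leg, buy the cheap one."""
    if z > entry:
        return "SHORT_A_LONG_B"   # A looks expensive relative to B
    if z < -entry:
        return "LONG_A_SHORT_B"   # A looks cheap relative to B
    return "HOLD"
```

The sketch treats any large deviation as temporary, which is exactly the assumption on which such strategies depend. It contains no protection against the relationship breaking down permanently.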

At much the same time, the availability of cheaper computation meant that it was possible to deploy

automated trading systems that were considered more ‘intelligent’ than those implicated in the 1987

crash. In most cases, this ‘intelligence’ was based on rigorous mathematical approaches that were

firmly grounded in statistical modelling and probability theory. The new wave of automated systems

concentrated on execution of a trade. The computer did not make the decision to buy or to sell

a particular block of shares or quantity of commodity, nor to convert a particular amount of one

currency into another: those decisions were still taken by humans (possibly on the basis of complex

statistical analysis). But, once the trading decision had been made, the execution of that trade was then

handed over to an automated execution system (AES). Initially, the motivation for passing trades to an

AES was that the human traders were then freed up for dealing with more complicated trades. As AES

became more commonplace, and more trusted, various trading institutions started to experiment with

more sophisticated approaches to automated execution: different methods and different algorithms

could be deployed to fit the constraints of different classes of transaction, under differing market

circumstances; and hence the notion of ‘algorithmic trading’ (AT) was born⁴.
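
One simple way an automated execution system might work a trade that a human has already decided upon is to split the parent order into smaller child orders released over time. The sketch below assumes equal-sized slices, an approach commonly associated with time-weighted execution. The text above does not name any particular algorithm, and the function name `slice_order` and its parameters are hypothetical.

```python
from typing import List


def slice_order(total_quantity: int, n_slices: int) -> List[int]:
    """Split a parent order into n_slices child orders of near-equal size.

    Any remainder is spread one unit at a time over the earliest slices,
    so the child quantities always sum to the parent quantity.
    """
    if total_quantity < 0:
        raise ValueError("total_quantity must be non-negative")
    if n_slices <= 0:
        raise ValueError("n_slices must be positive")
    base, remainder = divmod(total_quantity, n_slices)
    return [base + (1 if i < remainder else 0) for i in range(n_slices)]
```

For example, `slice_order(1000, 3)` gives child orders of 334, 333 and 333 shares. A production system would also decide when to release each slice and how to react to market conditions, which this sketch deliberately leaves out.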

At the same time as AES were being developed to reduce market impact, other trading teams

were perfecting advanced stat arb techniques for identifying trading opportunities based on complex

statistical regularities which lay deep in the data: the price and volume data for hundreds or thousands

of instruments might have to be considered simultaneously and cross-compared in the search for

opportunities similar to the pairs trading of the 1980s, but typically involving many more than two

instruments. These advanced stat arb approaches were made possible by powerful computers, used to

run the statistical analyses, and also by developments in CBT infrastructure (the machinery which traders

use to communicate with each other and with the exchanges). Two notable developments were

straight-through processing (STP), where the entire trading process from initiation of an order to final

payments and clearing is one seamless electronic flow of transaction processing steps with no

human-operated intermediate stages, and direct market access (DMA), where investors and investment funds

are given direct access to the electronic order books of an exchange, rather than having to interact

with the market via an intermediary, such as an investment bank or broker/dealer.

The convergence of cheap computer power, statistically sophisticated and computationally intensive

trading strategies and fast automated execution via STP and DMA means that, in recent years, it

has become entirely commonplace for market participants to seek counterparties to a transaction

electronically, identify a counterparty and then execute the transaction, all within a few seconds. For

trades involving large quantities of a tradable instrument (so large that the volume of shares traded

4 One key aspect of modern automated trading systems is that they are designed to be highly autonomous: once switched on,

they are intended by design to ‘do their own thing’, at high speed, with little or no human intervention at the level of individual

trades. Although the computer running an algorithmic trading system can be switched out of action in an instant (for example,

by severing its power cable), in many real systems it is much more desirable to order the algorithmic trader to go into its

shutdown sequence, where it will do its best to sell off its portfolio of holdings as quickly as possible and with minimal losses

before actually switching itself off. This means that while humans still bear ultimate responsibility for writing and managing

algorithmic trading systems, humans are less and less involved in their minute-by-minute operation or control. An example of

the owners of an automated trading system being unable to re-establish effective control occurred on 1st August 2012, when

the US brokerage Knight Capital suffered massive losses as their automated trading system failed. See

http://dealbook.nytimes.com/2012/08/03/trading-program-ran-amok-with-no-off-switch/?nl=business&emc=edit_dlbkam_20120806

Accessed: 4 September 2012.

is likely to significantly shift the price of the transaction – a phenomenon known as market impact),

the computerised search for a counterparty is not often conducted in the open, on a national stock

exchange, but instead takes place via the services of a private alternative electronic trading venue, a

‘dark pool’, which offers to match large volume counterparties in an anonymous or obfuscated fashion,

to try to reduce market impact. Details of the transaction are only reported to the national exchange

after it has been completed. Because many trading institutions see reducing market impact as a good

thing, the popularity of dark pools has increased significantly in recent years.

The old ‘vertically integrated’ business model of investment banking is becoming increasingly

fragmented. One effect of the European Union’s (EU) Markets in Financial Instruments Directive (MiFID)⁵

legislation was to create an ecosystem of small and medium-sized businesses offering ‘middleware’

technology components that could be purchased individually and then plugged together to achieve the

same functionality which had previously been the exclusive preserve of the trading systems developed

in-house by big institutions. This lowered the barriers to entry: with sufficient funding, one or two

entrepreneurs working in a rented office with a high speed internet connection can set up a trading

company and automate much, or perhaps all, of the workflow required to run a fund.

At the same time, a new style of trading has emerged, known as high frequency trading (HFT)⁶,

where automated systems buy and sell on electronic exchange venues, sometimes holding a particular

position for only a few seconds or less. An HFT system might ‘go long’ by buying a quantity of shares

(or some other financial instrument, such as a commodity or a currency), hold it for perhaps two or

three seconds, and then sell it on to a buyer. If the price of the instrument rises in those two or three

seconds, and so long as the transaction costs are small enough, then the HFT system has made a profit

on the sale. The profit from holding a long position for three seconds is unlikely to be great, and may

only amount to a few pennies, but if the HFT system is entirely automated, then it is a machine that

can create a steady stream of profit every hour that the market is open. A recent study⁷ indicated that

the total amount of money extractable from the markets via HFT may be more modest than might be

supposed: a few tens of billions of dollars in the US markets. Despite this, the low variation in positive

returns from a well-tuned HFT system is an attractive feature and one that makes HFT an area of

intense interest in the current markets. Of course, even if the total profits extractable via HFT really

are limited, the downside risks, the total potential worst case losses and costs that might be incurred if

HFT technology fails, could in principle be much larger. There is a concern that some CBT systems, like