
Image color transfer to evoke different emotions based on color combinations

Li He · Hairong Qi · Russell Zaretzki

Li He, Hairong Qi, Russell Zaretzki
University of Tennessee, Knoxville
E-mail: {lhe4,hqi,rzaretzk}@utk.edu

Abstract In this paper, a color transfer framework that evokes different emotions in images based on color combinations is proposed. The purpose of this color transfer is to change the “look and feel” of images, i.e., to evoke different emotions. Color has been shown to be the most attractive factor in images. In addition, various studies in both art and science have concluded that color combinations, rather than single colors, are necessary to evoke specific emotions. Therefore, we propose a novel framework that transfers the colors of images based on color combinations, using a predefined color emotion model. The contribution of this new framework is threefold. First, users do not need to provide the reference images used in traditional color transfer algorithms; in most situations, users may not have enough aesthetic knowledge or means to choose suitable reference images. Second, because color combinations are used instead of single colors for emotions, a new color transfer algorithm that does not require an image library is proposed. Third, again because of the use of color combinations, artifacts that are commonly seen in traditional frameworks using single colors are avoided. We present encouraging results generated by this new framework and its potential in several applications, including color transfer of photos and paintings.

Keywords Color Transfer · Color Emotion · Color Combination

1 Introduction

Among the many possible image-processing options, artists and scientists are increasingly interested in extracting the “emotion” that images can evoke, as well as in changing that emotion by altering their colors. The pioneering work by Reinhard et al. [1] made color transfer possible by providing a reference image. Since then, various color transfer algorithms have been proposed. However, it was only recently that the first emotion-related color transfer algorithm was proposed by Yang and Peng [2]. Their work followed the traditional color transfer framework but added a single-color scheme for emotions.

Color as an emotion messenger has attracted enormous interest from researchers in different disciplines [3,4,5,6,7,8,9]. One may delight in the beautiful red and golden-yellow leaves of autumn, and in the magnificent colors of a sunset. The relationship between color and emotion is referred to as color emotion [10].

In daily life, colors are never seen in isolation but always together with other colors. This is true whether we look at our surroundings from inside a building or at an entire cityscape [7]. Therefore, a single-color scheme is inadequate for identifying the emotion evoked by color images. For instance, in Kobayashi's book Color Image Scale [3], “red” may have multiple meanings, such as rich, powerful, luxurious, dynamic and mellow, depending on the colors it is combined with. Hence, color combinations are preferable to single colors for evoking specific emotions.

Since the groundbreaking work of Reinhard et al. [1], color transfer algorithms have been extensively studied [11,12,13,14,15,16,17,18,19,20]. Reinhard's method is simple and efficient but suffers from two problems. First, it can produce unnatural-looking results when the input and reference images have different color distributions. Second, because the algorithm is based on simple statistics (mean and standard deviation), it can produce results with low fidelity in both scene detail and color distribution [16].

Although several methods have been proposed to solve these two problems, they still require one or more reference images. In most situations, users may not have enough aesthetic knowledge to choose appropriate reference images, and finding a suitable reference image can be a time-consuming task.

arXiv:1307.3581v2 [cs.CV] 2 Nov 2014

2 Li He et al.

In this paper, we present a new emotion-changing color transfer framework based on color combinations that does not require the reference images used in traditional color transfer algorithms. Because we use color combinations instead of the single-color scheme used in [2], we also develop a new color transfer algorithm. The proposed emotion transfer framework allows users to select their desired emotion directly by providing keywords, e.g., “warm”, “romantic” and “cool”.

We adopt Eisemann's color emotion scheme [5], called the Pantone color scheme, which contains 27 emotions, each with 24 three-color combinations. This scheme is based on early word-association studies of color and emotion and on the color harmony theory developed by Itten [21].

Because it uses color combinations, the proposed color transfer framework faces two challenges: how to identify the three main colors in the image, and how to transfer these main colors to the destination color combinations. We resolve the first issue using an Expectation-Maximization (EM) clustering algorithm. For the second issue, we model the color transfer process as two optimization problems: one calculates the target color combinations, and the other, based on [16], preserves the gradient of the input image. Figure 1 shows an example result of the proposed color transfer method.

The proposed color transfer framework could lead to many potential applications. For example, it can be used in the field of art and design, such as photo and painting editing, transferring the emotion of an artist's painting to real photos, creative tone reproduction and color selection for industrial design. It can also be used to colorize grayscale images. Furthermore, for color transfer with a reference image, the proposed algorithm may produce an output image that better captures the emotion evoked by the reference image, because it incorporates a color emotion scheme.

The rest of the paper is organized as follows. Section 2 reviews previous work in related areas. Section 3 describes the proposed color combination-based emotion transfer framework in detail. Section 4 presents results and comparisons. Section 5 provides the discussion and future work.

2 Related Work

2.1 Color Emotion

Recent models of color emotion include single color

emotion and color combinations emotion. There have

been two main research topics in the study of single

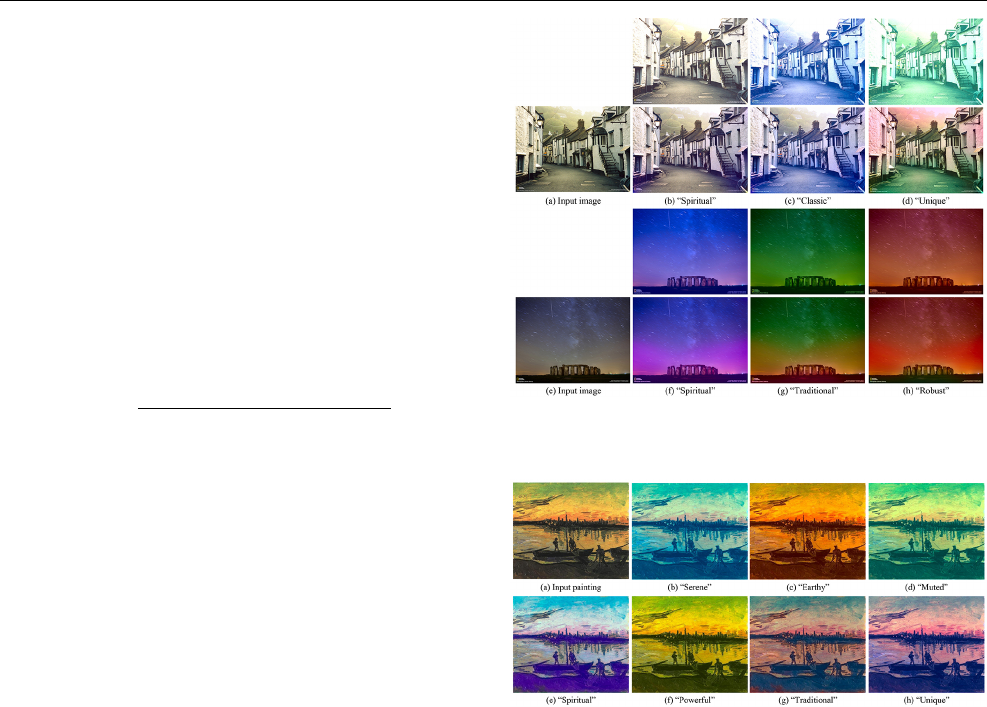

Fig. 1 Color transfer of an input image (a) to eight different

themes (b)-(i) that evoke different emotions. The input image

is an artwork called A lonely house made by Michael Otto.

color emotion: classification and quantification [10]. Clas-

sification of single color emotion uses principal com-

ponent analysis to reduce large number of colors to a

small number of categories [3,24,6]. The quantification

of color emotion is studied firstly by [24], later by [6]

who provided a color emotion model with quantities on

three color-emotion factors: activity, weight and heat.

[6]’s study was then confirmed by [8]. For color combi-

nations, Kobayashi [3] developed a color emotion model

based on psychology studies. Ou et al. [7] revealed a

simple relationship of color and emotion that relies on

single color emotion and color pair emotion. Lee et al.

[25] used sets theory to evaluate color combinations.

These early-stage studies on color emotion only con-

sider color pairs.

Compared to single-color emotion, color combinations provide a more appropriate and accurate way to describe color emotion in images.

2.2 Emotion Semantics of Images

Emotion semantics is the most abstract semantic structure in images because it is closely related to the cognitive models, cultural background and aesthetic standards of users. Tanaka et al. [26] concluded that the contributions of color, spatial frequency and size to attractiveness follow the order color > size > spatial frequency. Mao et al. [28] showed that the fractal dimensions (FDs) of images are related to their affective properties. The study in [27] found that color is strongly related to emotion word pairs. Therefore, we attempt to alter the emotion an image evokes by changing its colors.

2.3 Color Transfer

We may classify existing color transfer methods into global and local algorithms, where “global” means the algorithm transfers colors using global statistics, e.g., the global mean and variance, while “local” means the algorithm transfers colors using different values for different regions of the input image.

The first global color transfer method was proposed by Reinhard et al. [1]. It shifts and scales the pixel values of the input image to match the mean and standard deviation of the reference image. This is done in the lαβ opponent color space, which is approximately decorrelated for natural images and therefore allows color transfer to take place independently in each channel [29]. Later, many global approaches using high-level statistical properties were proposed [30,16,14,31].
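Reinhard et al.'s global transfer fits in a few lines. The sketch below is a minimal NumPy illustration, assuming both images have already been converted to a decorrelated space such as lαβ (the conversion itself is omitted); the function name `reinhard_transfer` and the `eps` guard against zero variance are our own choices, not taken from [1].

```python
import numpy as np


def reinhard_transfer(src, ref, eps=1e-8):
    """Match the per-channel mean and standard deviation of `src` to `ref`.

    Both arrays have shape (H, W, 3) and are assumed to be in a decorrelated
    colour space (e.g. l-alpha-beta) already; conversion is not shown here.
    """
    src = np.asarray(src, dtype=np.float64)
    ref = np.asarray(ref, dtype=np.float64)
    mu_s, sd_s = src.reshape(-1, 3).mean(axis=0), src.reshape(-1, 3).std(axis=0)
    mu_r, sd_r = ref.reshape(-1, 3).mean(axis=0), ref.reshape(-1, 3).std(axis=0)
    # Remove the source mean, rescale the spread, then add the reference mean.
    return (src - mu_s) * (sd_r / (sd_s + eps)) + mu_r
```

Because every channel is treated independently with only two statistics, the same shift and scale is applied to every region of the image, which is the root of the two problems listed in the introduction.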

A general problem with global color transfer approaches is that if the structures of the input and reference images are vastly different, the results can look unnatural. Reinhard et al. [1] proposed a local method based on inverse distance weighting to remedy this problem. Chang et al. [11] proposed a color transfer method in which colors are classified into categories derived from a psychophysical color naming study. Tai et al. [13] proposed a local transfer approach based on their soft color segmentation algorithm, which uses a modified EM algorithm. Chiou et al. [18] proposed a local color transfer algorithm based on intrinsic components. Dong et al. [19] proposed a fast local color transfer algorithm with dominant color mapping based on the Earth Mover's Distance (EMD). Huang and Chen [33] proposed a landmark-based sparse color representation for local color transfer.

The major difference between our approach and other color transfer methods resides not only in the color emotion model but also in the fact that we do not need reference images. Therefore, existing color transfer algorithms may not be suitable for the proposed emotion transfer task. The algorithm developed by Huang et al. [37] involved emotion elements; however, it only considered the warm and cool aspects of color emotion, whereas emotion in images requires a more complex color emotion model. A method relatively close to ours was proposed by Yang and Peng [2], who provided an automatic mood-transferring framework for color images. They used the single-color emotion scheme described in [4] to classify the input image into one of 24 emotions. The major difference between our approach and theirs is that we use color combinations rather than single colors for emotions. In addition, we do not need an image library for emotions. Because of these two differences, a new color transfer algorithm is developed. We also select the best output image differently, since we do not use reference images.

3 Color Transfer to Evoke Different Emotions

Figure 2 illustrates the components of the proposed color transfer framework. Users can select the target emotion either by providing a reference image or by selecting an emotion keyword directly. If the user provides a reference image, we extract its main colors (cluster centers in the color space) and use the closest scheme in the color emotion model as the target scheme. Either way, a specific scheme is selected, and the color combinations in this scheme are used for color transfer. Note that the main colors are the dominant, subordinate and accent colors in the image.

Fig. 2 Proposed color transfer process overview

Fig. 3 24 sets of “Playful” color combinations [5]

Meanwhile, the main colors of the input image are also extracted. Once we have the main colors of the input image and the target scheme, the color transfer algorithm transfers all colors in the input image to produce 24 output images (one for each of the 24 three-color combinations in the scheme). The final step selects the best output image out of the 24. Details of each step are described in the following sections.

3.1 Color Emotion Scheme

The Pantone color scheme [5] contains 27 schemes, each containing 24 three-color combinations, for a total of 648 three-color combinations. The feelings that the schemes can evoke include Serene, Earthy, Mellow, Muted, Capricious, Spiritual, Romantic, Sensual, Powerful, Elegant, Robust, Delicate, Playful, Energetic, Traditional, Classic, Festive, Fanciful, Cool, Warm, Luscious&Sweet, Spicy&Tangy and Unique. Every color combination in each scheme includes three colors: a dominant color, a subordinate color and an accent color. For example, the 24 color combinations of the emotion “Playful” are illustrated in Figure 3. In each three-color combination, the center color is the dominant color, the “@”-shaped block is the subordinate color, and the color shown in the right vertical bar is the accent color. All colors in the Pantone color scheme are specified in the CMYK space, and we converted them to the CIELAB space.
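The paper does not state how the Pantone CMYK values were converted to CIELAB. A faithful conversion needs the ICC profile of the printing condition; the sketch below instead assumes a naive, profile-free CMYK-to-RGB formula, interprets the result as sRGB, and converts it to CIELAB under the D65 white point using the standard sRGB and CIELAB definitions. The helper names `cmyk_to_srgb` and `srgb_to_lab` are hypothetical, and the resulting values should be treated as approximations.

```python
import numpy as np

# Linear sRGB (D65) to CIE XYZ, and the D65 reference white.
_M_SRGB_TO_XYZ = np.array([
    [0.4124564, 0.3575761, 0.1804375],
    [0.2126729, 0.7151522, 0.0721750],
    [0.0193339, 0.1191920, 0.9503041],
])
_WHITE_D65 = np.array([0.95047, 1.0, 1.08883])


def cmyk_to_srgb(c, m, y, k):
    """Naive CMYK (0..1) -> sRGB (0..1); ignores ink and paper profiles."""
    return np.array([(1 - c) * (1 - k), (1 - m) * (1 - k), (1 - y) * (1 - k)])


def srgb_to_lab(rgb):
    """Convert sRGB values in [0, 1] (last axis of length 3) to CIELAB (D65)."""
    rgb = np.asarray(rgb, dtype=np.float64)
    # Undo the sRGB transfer curve to obtain linear RGB.
    lin = np.where(rgb <= 0.04045, rgb / 12.92, ((rgb + 0.055) / 1.055) ** 2.4)
    xyz = lin @ _M_SRGB_TO_XYZ.T / _WHITE_D65
    d = 6 / 29
    # CIELAB companding: cube root above the threshold, linear segment below.
    f = np.where(xyz > d ** 3, np.cbrt(xyz), xyz / (3 * d ** 2) + 4 / 29)
    L = 116 * f[..., 1] - 16
    a = 500 * (f[..., 0] - f[..., 1])
    b = 200 * (f[..., 1] - f[..., 2])
    return np.stack([L, a, b], axis=-1)
```

For example, `srgb_to_lab(cmyk_to_srgb(0, 0, 0, 0))` maps paper white to roughly (100, 0, 0). Real Pantone values printed on a specific stock would differ, so this sketch is only useful when no profile is available.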


Fig. 4 Comparison of three different clustering algorithms. Black indicates the cluster of the dominant color, gray the cluster of the subordinate color and white the cluster of the accent color.

3.2 Main Colors Identification Using Clustering

To match the three-color combinations of the scheme, we need to extract the dominant, subordinate and accent colors of the input image. If the user chooses to use a reference image, the same process is applied to extract the main colors of the reference image. We adopt the Expectation-Maximization (EM) algorithm in the CIELAB space to cluster the pixel colors.
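A minimal sketch of this main-color extraction follows: a plain NumPy EM fit of a three-component, full-covariance Gaussian mixture to pixel colors, with components sorted by mixing weight so that indices 0, 1 and 2 play the roles of the dominant, subordinate and accent colors. The farthest-point initialization, the isotropic starting covariances, the fixed iteration count and the small regularizer `reg` are our assumptions; the paper does not say how its EM was initialized or stopped, and `gmm_em` is a hypothetical name.

```python
import numpy as np


def gmm_em(x, k=3, n_iter=100, seed=0, reg=1e-6):
    """Fit a k-component, full-covariance Gaussian mixture to x (N, D) with EM.

    Returns (means, covs, weights, labels) with components sorted by
    decreasing weight: 0 ~ dominant, 1 ~ subordinate, 2 ~ accent colour.
    """
    x = np.asarray(x, dtype=np.float64)
    n, d = x.shape
    rng = np.random.default_rng(seed)
    # Farthest-point initialisation: a random pixel, then repeatedly the
    # pixel farthest from all means chosen so far.
    means = [x[rng.integers(n)]]
    for _ in range(1, k):
        dist = np.min([np.sum((x - m) ** 2, axis=1) for m in means], axis=0)
        means.append(x[np.argmax(dist)])
    means = np.array(means)
    # Equal isotropic covariances, so the first E-step is close to
    # nearest-mean assignment.
    covs = np.array([np.eye(d) * x.var(axis=0).mean() for _ in range(k)])
    weights = np.full(k, 1.0 / k)
    for _ in range(n_iter):
        # E-step: log(pi_j * N(x | mu_j, Sigma_j)) for every pixel and component.
        log_p = np.empty((n, k))
        for j in range(k):
            diff = x - means[j]
            maha = np.sum(diff * np.linalg.solve(covs[j], diff.T).T, axis=1)
            _, logdet = np.linalg.slogdet(covs[j])
            log_p[:, j] = np.log(weights[j]) - 0.5 * (maha + logdet + d * np.log(2 * np.pi))
        resp = np.exp(log_p - np.logaddexp.reduce(log_p, axis=1, keepdims=True))
        # M-step: update mixing weights, means and covariances.
        nk = resp.sum(axis=0) + 1e-12
        weights = nk / n
        means = (resp.T @ x) / nk[:, None]
        for j in range(k):
            diff = x - means[j]
            covs[j] = (resp[:, j, None] * diff).T @ diff / nk[j] + reg * np.eye(d)
    order = np.argsort(-weights)
    labels = np.argmax(resp[:, order], axis=1)
    return means[order], covs[order], weights[order], labels
```

For an image `lab` of shape (H, W, 3), `gmm_em(lab.reshape(-1, 3))` returns the cluster centers, their weights (the $w_k$ used below) and one label per pixel, which can be reshaped back to (H, W).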

We chose EM after comparing k-means, EM and the improved EM of Tai et al. [13], as shown in Figure 4¹. Compared to EM, a key limitation of k-means is its cluster model: clusters are expected to be of similar size, so that assigning each point to the nearest cluster center is correct. EM is more flexible because it takes both the variances and the covariances of the clusters into account [38]. In addition, to transfer the colors of the input image, we want to segment the image by color rather than by object. As Figure 4 shows, k-means segments the input image better by object (it separates the sky and water precisely), while EM segments it better by color. Moreover, the weights of the Gaussian components estimated by EM can naturally be used as the weights of the dominant, subordinate and accent colors.

We also implemented the improved EM algorithm proposed by Tai et al. [13], which adds spatial information. As shown in Figure 4, Tai's algorithm produces smoother regions because of its spatial filter. However, this property is undesirable for many images. For instance, Tai's algorithm merged the rocks with the surrounding ground and water into one region, whereas EM separated them.

¹ All input images in this paper are from National Geographic.

3.3 Color Scheme Classification

If the user provides a reference image, we first classify the reference image into a specific scheme. After the three main colors of the reference image are identified, a weighted Euclidean distance is used to classify its emotion:

$$\min_{i} \left( \sum_{j=1}^{24} \sum_{k=1}^{3} w_k \left\lVert C_R^k - C_{P_{ij}}^k \right\rVert_2^2 \right) \tag{1}$$

where $i = 1, 2, \dots, 27$ indexes the 27 schemes, $j = 1, 2, \dots, 24$ indexes the 24 color combinations in each scheme, $w_k$ ($k = 1, 2, 3$) is the weight of the $k$-th cluster (Gaussian component) generated by the EM algorithm, $C_R^k$ ($k = 1, 2, 3$) are the three main colors of the reference image, and $C_{P_{ij}}^k$ is the $k$-th color of the $j$-th Pantone three-color combination of the $i$-th scheme.

The scheme with the minimum distance is identified

as the scheme of the reference image.
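Eq. 1 can be evaluated directly once the Pantone colors are stored as an array. The sketch below assumes a hypothetical layout of shape (27, 24, 3, 3) (scheme, combination, dominant/subordinate/accent, CIELAB channel), with the reference colors ordered the same way, and, as Eq. 1 is written, sums the weighted squared distances over all 24 combinations of a scheme before taking the minimum over schemes. The function name `classify_scheme` is ours.

```python
import numpy as np


def classify_scheme(ref_colors, ref_weights, schemes):
    """Return (i, cost) where i is the scheme index minimising Eq. (1).

    ref_colors:  (3, 3) main colours of the reference image (dominant,
                 subordinate, accent), one CIELAB triple per row.
    ref_weights: (3,) EM component weights w_k.
    schemes:     (S, J, 3, 3) Pantone colours C_{P_ij}^k, e.g. S=27, J=24.
    """
    ref_colors = np.asarray(ref_colors, dtype=np.float64)
    w = np.asarray(ref_weights, dtype=np.float64)
    P = np.asarray(schemes, dtype=np.float64)
    # Squared Euclidean distance for every scheme i, combination j, colour k.
    sq = np.sum((P - ref_colors) ** 2, axis=-1)   # shape (S, J, 3)
    # Weight colour k by w_k, then sum over combinations j and colours k.
    cost = np.sum(sq * w, axis=(1, 2))            # shape (S,)
    return int(np.argmin(cost)), cost
```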

Now we have three clusters in the input image and a

target emotion either specified by the user or identified

in a reference image. Then, the emotion of the input

image is transferred to the target emotion.

In this process, we use three guidelines to formulate the problem:

1. The transferred colors should still reside within the CIELAB space.
2. It is more important to keep the transferred dominant cluster center close to the dominant color of the color combination than to keep the subordinate or accent cluster centers close to their colors.
3. It is well known that the human visual system is more sensitive to local intensity differences than to intensity itself [39]. Thus, preserving the color gradient is necessary for scene fidelity [16].

3.3.1 Calculation of Target Color Combinations

The first step of the color transfer is to calculate the target color combinations. As Figure 5 shows, if we move the cluster centers of the input image exactly onto the Pantone color combination, the resulting image is darker than the input image. However, if we limit the movement of the cluster centers, the resulting image stays at almost the same brightness level as the input image.

We formulate the calculation of target color combinations as an optimization problem:

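$$
\begin{aligned}
\min_{\delta}\; f(\delta) &= \sum_{k=1}^{3} w_k \left\lVert C_I^k + \delta^k - C_{P_{ij}}^k \right\rVert_2^2 \\
\text{s.t.}\quad & l\alpha\beta_{\min} \le I_{\min}^k + \delta^k \le l\alpha\beta_{\max}, \\
& l\alpha\beta_{\min} \le I_{\max}^k + \delta^k \le l\alpha\beta_{\max}
\end{aligned}
\tag{2}
$$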

where $C_I^k$ ($k = 1, 2, 3$) are the three cluster centers of the input image computed by the EM algorithm, $\delta^k$ is the movement of the $k$-th cluster center, $l\alpha\beta_{\min}$ and $l\alpha\beta_{\max}$ are the bounds of each dimension of the CIELAB space, $I_{\min}^k$ and $I_{\max}^k$ are the minimum and maximum values of the $k$-th cluster in each dimension, respectively, and $C_{I'}^k$ ($k = 1, 2, 3$) are the colors of the target color combination.

Fig. 5 Steps in transferring to the “Serene” scheme. (b) Transfer without limiting the movement of the cluster centers; (c) transfer with limited cluster center movement; (d) transfer without the gradient preservation step.

This optimization problem is designed to satisfy guidelines (1) and (2). The constraints in Eq. 2 satisfy the first guideline by guaranteeing that all colors stay within range after color transfer. In addition, minimizing the 2-norm distance in Eq. 2 moves the target color combinations as close as possible to the desired Pantone color combinations. The weights $w_k$ place different emphasis on the dominant, subordinate and accent colors, which is intended to make the minimization give more consideration to the movement of the dominant color.

The interior point algorithm described in [40] is used

to solve this optimization problem.

Finally, target color combinations are calculated using Eq. 3.

$$C_{I'}^k = C_I^k + \delta^k \tag{3}$$
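As Eq. 2 is written, each shift $\delta^k$ appears only in its own term and its own bound constraints, and the squared norm separates over the three channels. Under that reading, the minimizer is simply the unconstrained shift $C_{P_{ij}}^k - C_I^k$ clipped to the feasible interval of each channel, which is the point an interior-point solver such as [40] should also converge to; positive weights $w_k$ only rescale independent terms, so they do not move this minimizer. The sketch below, with hypothetical argument names, computes Eq. 2 this way and then applies Eq. 3. If the intended formulation couples the clusters in a way not shown in Eq. 2, a general constrained solver would be needed instead.

```python
import numpy as np


def target_combination(centers, pantone, cluster_min, cluster_max, lo, hi):
    """Solve Eq. (2) by per-channel clipping, then apply Eq. (3).

    centers:     (3, 3) input cluster centres C_I^k (rows k, CIELAB columns).
    pantone:     (3, 3) Pantone colours C_{P_ij}^k.
    cluster_min: (3, 3) per-cluster, per-channel minimum I^k_min.
    cluster_max: (3, 3) per-cluster, per-channel maximum I^k_max.
    lo, hi:      (3,) bounds of each CIELAB channel.
    """
    centers = np.asarray(centers, dtype=np.float64)
    # Unconstrained optimum: move each centre exactly onto its Pantone colour.
    delta = np.asarray(pantone, dtype=np.float64) - centers
    # Feasible shifts keep every pixel of cluster k inside [lo, hi].
    delta_lo = np.asarray(lo, dtype=np.float64) - np.asarray(cluster_min, dtype=np.float64)
    delta_hi = np.asarray(hi, dtype=np.float64) - np.asarray(cluster_max, dtype=np.float64)
    delta = np.clip(delta, delta_lo, delta_hi)
    # Eq. (3): target colours C_{I'}^k = C_I^k + delta^k.
    return centers + delta, delta
```

The clipping is what produces the limited movement illustrated in Figure 5(c): a cluster whose brightest pixel is already near the top of the L range cannot be shifted all the way up to a light Pantone color.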

3.3.2 Pixel Update

After the target color combinations are calculated, the second step updates all pixels in the input image:

$${I'}_{xy}^{k} = I_{xy}^{k} - C_I^k + C_{I'}^k \tag{4}$$

where $I_{xy}^k$ and ${I'}_{xy}^k$ are the pixels of the $k$-th cluster in the input and updated (intermediate) images, respectively.
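Eq. 4 translates every pixel by the displacement of its own cluster center. The sketch below assumes each pixel carries a hard cluster label (for example, the arg-max responsibility from EM); the function name `update_pixels` is hypothetical.

```python
import numpy as np


def update_pixels(image, labels, centers, targets):
    """Apply Eq. (4): shift each pixel by the move of its cluster centre.

    image:   (H, W, 3) input image I in CIELAB.
    labels:  (H, W) integer cluster index k of every pixel.
    centers: (K, 3) input cluster centres C_I^k.
    targets: (K, 3) target colours C_{I'}^k from Eq. (3).
    """
    shift = np.asarray(targets, dtype=np.float64) - np.asarray(centers, dtype=np.float64)
    # Fancy indexing picks each pixel's cluster shift, giving shape (H, W, 3).
    return np.asarray(image, dtype=np.float64) + shift[labels]
```

Because every pixel in a cluster moves by the same vector, neighboring pixels that fall into different clusters can move apart, which is the source of the edge artifacts addressed by the gradient preservation step.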

3.3.3 Gradient Preservation

The final step of color transfer is gradient preservation. Let us first compare the transfer results without (Figure 5(d)) and with (Figure 5(c)) gradient preservation to the input image. Figure 5(d) shows artifacts, such as at the edge between cloud and sky and at the edge between water and sky. In contrast, the transfer result with gradient preservation has no edge artifacts and keeps brightness and contrast similar to those of the input image. As required by guideline (3), preserving the gradient is necessary for scene fidelity.

Fig. 6 Impact of λ values on color transfer. The input image is transferred to the “Muted” scheme.

Fig. 7 Impact of λ values on the color transfer scores and time. Each of 4 input images is transferred to 10 different schemes (color combinations). (a) Average color transfer score; (b) color transfer time of the 40 color transfers.

To preserve the gradient, we use the algorithm proposed by Xiao and Ma [16]:

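$$\min_{O_{xy}} \sum_{x} \sum_{y} \left( O_{xy} - I'_{xy} \right)^2 + \lambda \sum_{x} \sum_{y} \left[ \left( \frac{\partial O_{xy}}{\partial x} - \frac{\partial I_{xy}}{\partial x} \right)^2 + \left( \frac{\partial O_{xy}}{\partial y} - \frac{\partial I_{xy}}{\partial y} \right)^2 \right] \tag{5}$$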

where $I_{xy}$, $I'_{xy}$ and $O_{xy}$ are pixels of the input image, the intermediate image and the output image, respectively; $x$ and $y$ are the horizontal and vertical axes of the image; and $\lambda$ is a coefficient that weights the importance of gradient preservation against that of the new colors.

The first term of Eq. 5 keeps the output image as similar as possible to the intermediate image. The second term keeps the gradient of the output image as close as possible to the gradient of the input image. This optimization problem is solved by gradient descent.
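A minimal sketch of solving Eq. 5 for one channel by gradient descent follows. It assumes forward differences for $\partial/\partial x$ and $\partial/\partial y$ and a fixed step $1/(2 + 16\lambda)$, the inverse of an upper bound on the Lipschitz constant of the gradient, so the energy never increases; the iteration count and the function names are our own choices. The channels of a CIELAB image would be processed separately.

```python
import numpy as np


def _dx(a):
    return a[:, 1:] - a[:, :-1]   # forward difference along x (columns)


def _dy(a):
    return a[1:, :] - a[:-1, :]   # forward difference along y (rows)


def energy(out, inter, inp, lam):
    """Objective of Eq. (5) for one channel, with forward differences."""
    data = np.sum((out - inter) ** 2)
    grad = np.sum((_dx(out) - _dx(inp)) ** 2) + np.sum((_dy(out) - _dy(inp)) ** 2)
    return data + lam * grad


def preserve_gradient(inter, inp, lam=20.0, n_iter=500):
    """Minimise Eq. (5) for one channel by plain gradient descent."""
    inter = np.asarray(inter, dtype=np.float64)
    inp = np.asarray(inp, dtype=np.float64)
    out = inter.copy()
    # Each difference operator has squared norm <= 4, so the gradient is
    # Lipschitz with constant <= 2 + 16 * lam; step = 1 / that bound.
    step = 1.0 / (2.0 + 16.0 * lam)
    for _ in range(n_iter):
        rx = _dx(out) - _dx(inp)
        ry = _dy(out) - _dy(inp)
        g = 2.0 * (out - inter)
        # Adjoint of the forward differences applied to the residuals.
        g[:, :-1] -= 2.0 * lam * rx
        g[:, 1:] += 2.0 * lam * rx
        g[:-1, :] -= 2.0 * lam * ry
        g[1:, :] += 2.0 * lam * ry
        out -= step * g
    return out
```

Because Eq. 5 is quadratic, it is equivalent to a sparse linear system; for large images or large $\lambda$ (the paper uses $\lambda = 20$), conjugate gradients or a direct sparse solver will usually converge much faster than this plain descent.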

Xiao and Ma [16] set λ = 1 in their paper; however, the scene fidelity is not high enough in this application when λ = 1. The impact of different λ values on the color transfer result is shown in Figure 6. We also tested the impact of different λ values on the color transfer scores (E(j) in Eq. 8) and the color transfer time (Figure 7). To balance color transfer scores and time, we choose λ = 20 in this paper. In addition, throughout our experiments, we observed a consistent trend in the scores across different image content (for different λ values).

3.4 Output Image Selection

When a user selects a specific scheme, we transfer the input image to 24 output images based on the 24 color combinations of that scheme. The final output image is then selected by evaluating the content similarity of those images to the input image (in terms of luminance) and the distance of their main colors (cluster centers) to the Pantone color combinations.

To measure the difference in luminance between the input and output images, we use the following measure from [2]:

$$d_{\mathrm{lumin}}(I, O_j) = \frac{\sum_{x=1}^{\mathrm{width}} \sum_{y=1}^{\mathrm{height}} \left| l^{I}_{x,y} - l^{O_j}_{x,y} \right|}{\mathrm{width} \cdot \mathrm{height}} \tag{6}$$

where $l^{I}_{x,y}$ and $l^{O_j}_{x,y}$ are the $l$ values (in $l\alpha\beta$ space) of the input and output images at pixel $(x, y)$, respectively; $I$ is the input image and $O_j$ is the output image obtained with the $j$-th of the 24 color combinations; and width and height are the width and height of the input and output images.

To measure how close the cluster centers of the output image are to the target Pantone color combination, we use the following equation:

$$d_{\mathrm{color}}(C_O, C_{P_{ij}}) = \sum_{k=1}^{3} \left| C_O^k - C_{P_{ij}}^k \right| \tag{7}$$

where $C_O^k$ are the colors of the target color combination, i.e., the cluster centers of the output image.

Finally, the best output image is selected by:

$$\min_{j} E(j) = \gamma\, d_{\mathrm{lumin}}(I, O_j) + (1 - \gamma)\, d_{\mathrm{color}}(C_{O_j}, C_{P_{ij}}) \tag{8}$$

where $j = 1, 2, \dots, 24$ and $\gamma$ is a weighting factor that combines the two types of differences into a unified metric. To avoid an unnatural-looking final output image, we put more emphasis on content similarity (measured by the luminance difference). Therefore, we choose $\gamma = 0.7$ based on an empirical study. In addition, with our test images the value of $\gamma$ does not depend on the image content. The image with the minimum $E(j)$ is chosen as the final output image.
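The selection rule of Eqs. 6 to 8 can be sketched as follows. We read the absolute value in Eq. 7 as the Euclidean norm of each three-channel color difference, which is an assumption; $\gamma$ defaults to the 0.7 used in the paper, and all function names are hypothetical.

```python
import numpy as np


def d_lumin(l_in, l_out):
    """Eq. (6): mean absolute difference of the l channel over all pixels."""
    return float(np.mean(np.abs(np.asarray(l_in, float) - np.asarray(l_out, float))))


def d_color(centers_out, pantone):
    """Eq. (7): sum over k of |C_O^k - C_P^k|, read as Euclidean norms."""
    diff = np.asarray(centers_out, float) - np.asarray(pantone, float)
    return float(np.sum(np.linalg.norm(diff, axis=-1)))


def select_output(l_in, l_outs, centers_outs, pantones, gamma=0.7):
    """Eq. (8): index j of the candidate with the smallest E(j), plus all scores."""
    scores = [gamma * d_lumin(l_in, l_o) + (1 - gamma) * d_color(c_o, p)
              for l_o, c_o, p in zip(l_outs, centers_outs, pantones)]
    return int(np.argmin(scores)), scores
```

With $\gamma = 0.7$, a candidate whose luminance drifts far from the input is penalized more heavily than one whose cluster centers fall slightly short of the Pantone colors, matching the stated preference for content similarity.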

4 Results and Comparisons

Our approach transfers the colors of input images using color combinations. This section first shows three applications of the approach: color transfer of an artwork, of photos and of a painting. Next, we show the effectiveness of the proposed method with a user study. This is followed by a comparison between color transfer based on color combinations and color transfer based on single colors. Finally, we compare our method with traditional color transfer algorithms.

Fig. 8 Comparison of color transfer using color combinations and single colors. Each of the 4 input images is transferred to three schemes.

Fig. 9 Proposed color transfer of a painting. The painting (a) is Vincent van Gogh’s Coal Barges.

Color transfer to evoke different emotions. Figure 1 shows an example of the proposed color transfer applied to an artwork. The input image is transferred to eight target schemes: Serene, Earthy, Romantic, Cool, Traditional, Robust, Classic and Spiritual. Figure 8 shows an application of our approach to photos. Figure 9 shows another application, to paintings, where a painting is transferred to seven alternative color schemes.

User study. To evaluate the effectiveness of our color transfer approach, we designed a user study. Five photos are transferred to 24 color themes using the proposed approach. Each user is asked to choose, from a pair of images, the one that better matches a given emotion or feeling, where the pair contains two randomly selected color transfer results of the same input image. For example, for the given feeling “Serene”, the user has to choose one of the two images in Figure 1(b) and (c), with Figure 1 serving here only as an example pair. At the same time, a detailed explanation of each feeling is displayed in the evaluation interface to help the user understand the meaning of the given feeling. For instance, the explanation of “Serene” is “calm, peaceful, quiet, clean”. In total, 16 users participated in the study and each user evaluated 30 image pairs, for a total of 480 evaluated image pairs.

The evaluation results are shown in Figures 10 and 11. In Figure 10, we evaluate the accuracy rate for each emotion. Fifteen of the 24 emotions are shown in the figure; the remaining 9 are not shown because they were selected in only a few tests. The results show that for easy-to-understand feelings such as “Cool” and “Warm” the accuracy is high, while for ambiguous feelings such as “Energetic” and “Fanciful” the accuracy is low. Furthermore, we show the accuracy rate of each specific pair in Figure 11. Pairs with strong contrast, such as “Earthy-Playful” and “Serene-Festive”, have high accuracy, whereas ambiguous pairs, such as “Fanciful-Spicy” and “Sweet-Spicy”, have low accuracy. Overall, we achieve an average accuracy of about 70% (compared to 50% for random selection). The results may be improved in the future with better descriptions of each emotion.

Comparison with the single color method. Figure 8 compares color transfer using color combinations with color transfer using single colors. Color transfer using a single color is implemented by moving the mean of the l, α, β values of the input image to the dominant color of the Pantone color combination. Compared to the results using single colors, the results using color combinations have several advantages. First, using color combinations to represent the emotion an image can evoke allows color transfer to be carried out separately in different regions of the image, producing more colorful and emotionally rich images. As shown in Figure 8(d), the road, houses and trees are transferred to different colors separately using color combinations, while these objects are transferred to similar colors using a single color. Similarly, as shown in Figure 8(f), the sky, the lights near the ground and the stones are transferred to different colors separately using color combinations, producing more colorful images than the single-color results. Second, artifacts are avoided using color combinations. For example, as shown in Figure 8(d), the wall and trees take on an unnatural (green) look using a single color, while they still look natural using color combinations. Finally, using color combinations avoids the out-of-range problem. In addition, several transfer results using single colors are too bright, whereas the results using color combinations avoid this problem.
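The single-color baseline described above amounts to one global translation of every pixel. The sketch below (the function name `single_color_transfer` is hypothetical) makes this explicit: all regions move by the same vector, and nothing keeps the result inside the valid color range, which is consistent with the uniform recoloring and overly bright results noted above.

```python
import numpy as np


def single_color_transfer(image, dominant):
    """Baseline: shift the image so its mean (l, alpha, beta) equals one colour.

    image:    (H, W, 3) array in the working colour space.
    dominant: (3,) dominant colour of the chosen Pantone combination.
    """
    image = np.asarray(image, dtype=np.float64)
    mean = image.reshape(-1, 3).mean(axis=0)
    # Every pixel receives the same offset; no clipping to the valid range.
    return image + (np.asarray(dominant, dtype=np.float64) - mean)
```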

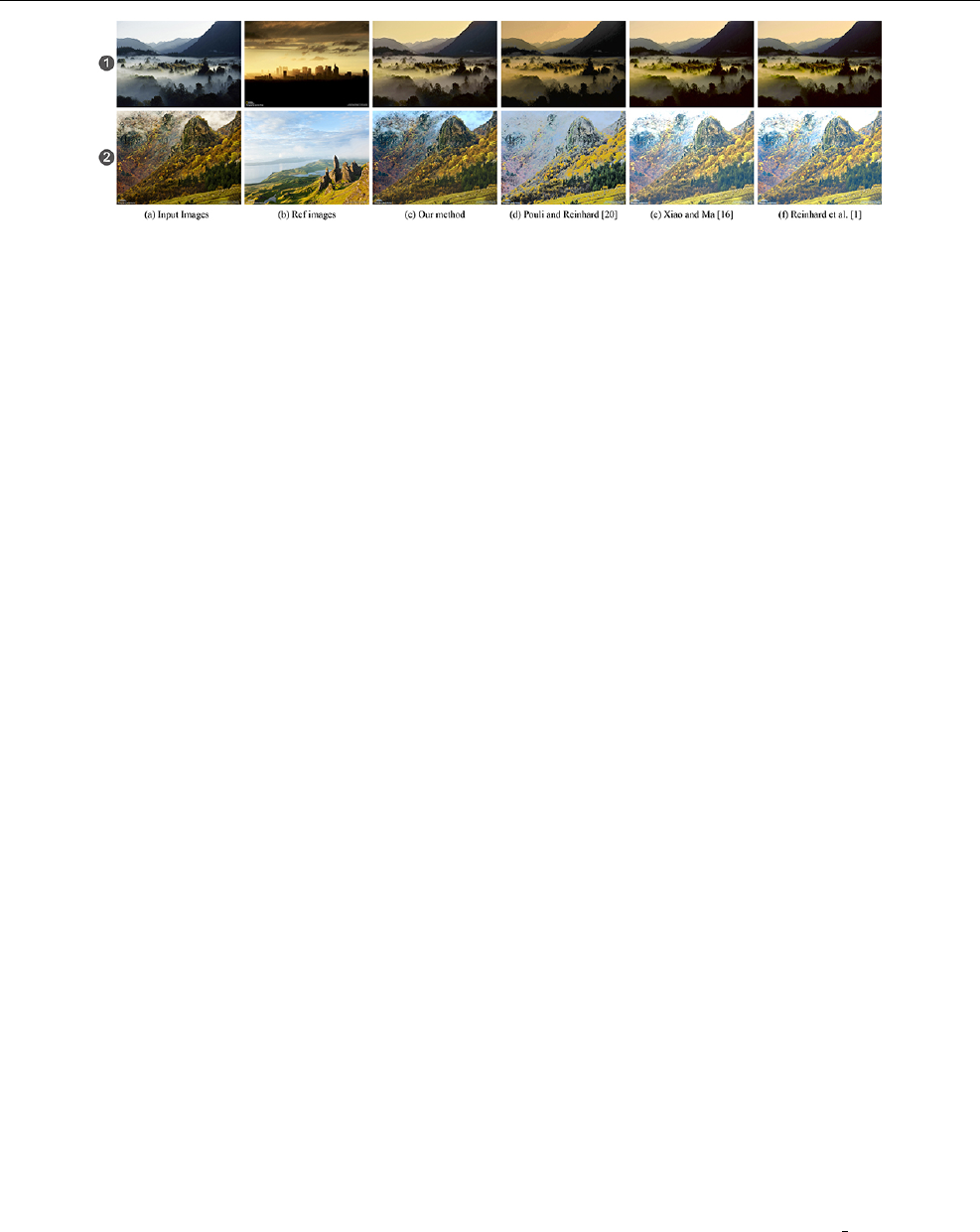

Comparison with color transfer. Although the purpose of our color transfer algorithm differs from that of traditional color transfer methods, we also compare our method (without the color emotion scheme) to three representative color transfer algorithms [1,16,20]. When the user provides a reference image, the purpose of our algorithm is to make the input image evoke an “emotion” similar to the one evoked by the reference image, while the purpose of traditional color transfer methods is to transfer the “colors” of the reference image to the input image. Figure 12 shows the color transfer results for two input images and two reference images. Compared to the other methods, our method is able to blend in the colors of the reference image while preserving the look and details of the input image. For the first input image, the results of Reinhard et al. [1] and Xiao and Ma [16] suffer from high color saturation in the tree region compared to the input image. The method of Pouli and Reinhard [20] produces artifacts in the sky and loses the gradient of the input image in the bottom area. Our method avoids these problems. For the second input image, the results of Reinhard et al. [1] and Xiao and Ma [16] are too bright compared to the input image, losing detail in the highlight regions. The method of Pouli and Reinhard [20] loses the gradient of the input image. Again, our method avoids artifacts and maintains a brightness level similar to that of the input image.

Fig. 10 User study: accuracy of each emotion.

Fig. 11 User study: accuracy of each emotion pair.

5 Discussion and Future Work

In this paper, we proposed a novel color transfer framework to evoke different emotions based on color combinations. Unlike traditional color transfer algorithms, it does not require users to provide reference images. Users can choose target emotion terms, and the desired emotion is transferred automatically. This is achieved by using a color emotion scheme in which each emotion is represented by three-color combinations.

The results and the user study show that our approach is able to alter the emotion evoked by photos and paintings, providing emotionally rich images for art and design purposes.

Fig. 12 Comparison between existing color transfer methods and the proposed color transfer method.

While our color transfer method can produce images that convey rich emotions, it still has several limitations. For example, the weights of the dominant, subordinate and accent colors are currently decided automatically by the weights of the Gaussian components. However, the color that appears dominant to the human visual system may not always have the largest weight, so spatial information may need to be considered as part of the color weights. In addition, the first step of the color transfer algorithm described in Section 3.3.1 may leave the cluster centers of the input image with insufficient movement, depending on how the colors are spread in the CIELAB space and where the target Pantone colors lie.

In the future, other color emotion models, such as quantitative color emotion models, could be used in this framework. In addition, we only used three-color combinations in this paper; an adaptive number of colors could be used instead.

References

1. E. Reinhard, M. Ashikhmin, B. Gooch, P. Shirley, IEEE Comput. Graph. Appl. 21, 34 (2001)
2. C.K. Yang, L.K. Peng, IEEE Computer Graphics and Applications 28, 52 (2008)
3. S. Kobayashi, Color Image Scale (Kodansha International, 1992)
4. B.M. Whelan, Color Harmony 2: A Guide to Creative Color Combinations (Rockport Publishers, 1994)
5. L. Eisemann, Pantone’s Guide to Communicating with Color (Grafix Press, 2000)
6. L.C. Ou, M.R. Luo, A. Woodcock, A. Wright, Color Research & Application 29(3), 232 (2004)
7. L.C. Ou, M.R. Luo, A. Woodcock, A. Wright, Color Research & Application 29(4), 292 (2004)
8. W. Wei-ning, Y. Ying-lin, J. Sheng-ming, in Systems, Man and Cybernetics, 2006. SMC ’06. IEEE International Conference on, vol. 4 (2006), pp. 3534–3539
9. G. Csurka, S. Skaff, L. Marchesotti, C. Saunders, in Proc. of ICVGIP (2010)
10. L.C. Ou, What’s color emotion. http://colour-emotion.co.uk/whats.html (accessed May 2011)
11. Y. Chang, K. Uchikawa, S. Saito, in Proc. of APGV (2004), pp. 91–98
12. G.R. Greenfield, D.H. House, Image recoloring induced by palette color associations (2003), vol. 11, pp. 189–196. URL http://visinfo.zib.de/EVlib/Show?EVL-2003-216
13. Y.W. Tai, J. Jia, C.K. Tang, Proc. of CVPR 1, 747 (2005)
14. F. Pitie, A. Kokaram, R. Dahyot, in Proc. of ICCV (2005)
15. X. Xiao, L. Ma, in Proc. of VRCIA (ACM, New York, NY, USA, 2006), pp. 305–309
16. X. Xiao, L. Ma, Comput. Graph. Forum, pp. 1879–1886 (2009)
17. Y. Xiang, B. Zou, H. Li, Pattern Recognition Letters 30(7), 682 (2009)
18. W.C. Chiou, Y.L. Chen, C.T. Hsu, in Proc. of MMSP (2010), pp. 156–161
19. W. Dong, G. Bao, X. Zhang, J.C. Paul, in ACM SIGGRAPH ASIA 2010 Sketches (2010)
20. T. Pouli, E. Reinhard, Computers & Graphics 35(1), 67 (2011). Extended Papers from Non-Photorealistic Animation and Rendering (NPAR) 2010
21. J. Itten, Art of Colour (Van Nostrand Reinhold, 1962)
22. H.J. Eysenck, The American Journal of Psychology 54(3), 385 (1941)
23. R.D. Norman, W.A. Scott, Journal of General Psychology 46, 185 (1952)
24. K.K.H.H. Sato, T., T. Nakamura, Advances in Colour Science and Technology 3, 53 (2000)
25. J. Lee, Y.M. Cheon, S.Y. Kim, E.J. Park, in Proc. of ICNC 2007, vol. 1 (2007), pp. 140–144
26. S. Tanaka, Y. Iwadate, S. Inokuchi, in Proc. of ICPR (2000)
27. W. Wei-ning, Y. Ying-lin, J. Sheng-ming, in Systems, Man and Cybernetics, 2006. SMC ’06. IEEE International Conference on, vol. 4 (2006), pp. 3534–3539
28. X. Mao, B. Chen, I. Muta, Chaos, Solitons & Fractals 15(5), 905 (2003)
29. D.L. Ruderman, T.W. Cronin, C.C. Chiao, J. Opt. Soc. Am. A 15(8), 2036 (1998)
30. L. Neumann, A. Neumann, in Computational Aesthetics in Graphics, Visualization and Imaging 2005 (2005)
31. M.T. Li, M.L. Huang, C.M. Wang, in Proc. of ICCET (2010)
32. M. Grundland, N.A. Dodgson, Pattern Recognition 40(11), 2891 (2007)
33. T.W. Huang, H.T. Chen, in Proc. of ICCV (2009)
34. C.L. Wen, C.H. Hsieh, B.Y. Chen, M. Ouhyoung, Computer Graphics Forum 27(7) (2008)
35. T. Welsh, M. Ashikhmin, K. Mueller, ACM Trans. Graph., pp. 277–280 (2002)
36. X. An, F. Pellacini, Computer Graphics Forum 29(2) (2010)
37. H. Huang, Y. Zang, C.F. Li, The Visual Computer 26, 933 (2010)
38. Wikipedia, k-means clustering. http://en.wikipedia.org/wiki/K-means_clustering (accessed May 2011)
39. E.H. Land, J.J. McCann, Journal of the Optical Society of America (1917-1983) 61, 1 (1971)
40. R.A. Waltz, J.L. Morales, J. Nocedal, D. Orban, Math. Program. 107 (2006)