Security (Un-)Usability

17

Security Usability Fundamentals

An important consideration when you’re building an application is the usability of the

security features that you’ll be employing. Security experts frequently lament that

security has been bolted onto applications as an afterthought, however the security

community has committed the exact same sin in reverse, placing usability

considerations in second place behind security, if they were considered at all. As a

result, we spent the 1990s building and deploying security that wasn’t really needed,

and now that we’re experiencing widespread phishing attacks with viruses and worms

running rampant and the security

is actually needed, we’re finding that no-one can

use it.

To understand the problem, it’s necessary to go back to the basic definition of

functionality and security. An application exhibits functionality if things that are

supposed to happen, do happen. Similarly, an application exhibits security if things

that aren’t supposed to happen, don’t happen. Security developers are interested in

the latter, marketers and management tend to be more interested in the former.

Ensuring that things that aren’t supposed to happen don’t happen can be approached

from both the application side and from the user side. From the application side, the

application should behave in a safe manner, defaulting to behaviour that protects the

user from harm. From the user side, the application should act in a manner in which

the user’s expectations of a safe user experience are met. The following sections look

at some of the issues that face developers trying to create a user interface for a

security application.

Security (Un-)Usability

Before you start thinking about potential features of your security user interface, you

first need to consider the environment into which it’ll be deployed. Now that we

have 10-15 years of experience in (trying to) deploy Internet security, we can see,

both from hindsight and because in the last few years people have actually started

testing the usability of security applications, that a number of mechanisms that were

expected to Solve The Problem don’t really work in practice [1]. The idea behind

security technology is to translate a hard problem (secure/safe communication and

storage) into a simpler problem, not just to shift the complexity from one layer to

another. This is an example of Fundamental Truth No.6 of the Twelve Networking

Truths, “It is easier to move a problem around than it is to solve it” [2]. Security user

interfaces are usually driven by the underlying technology, which means that they

often just shift the problem from the technical level to the human level. Some of the

most awkward technologies not only shift the complexity but add an extra level of

complexity of their own (IPsec and PKI spring to mind).

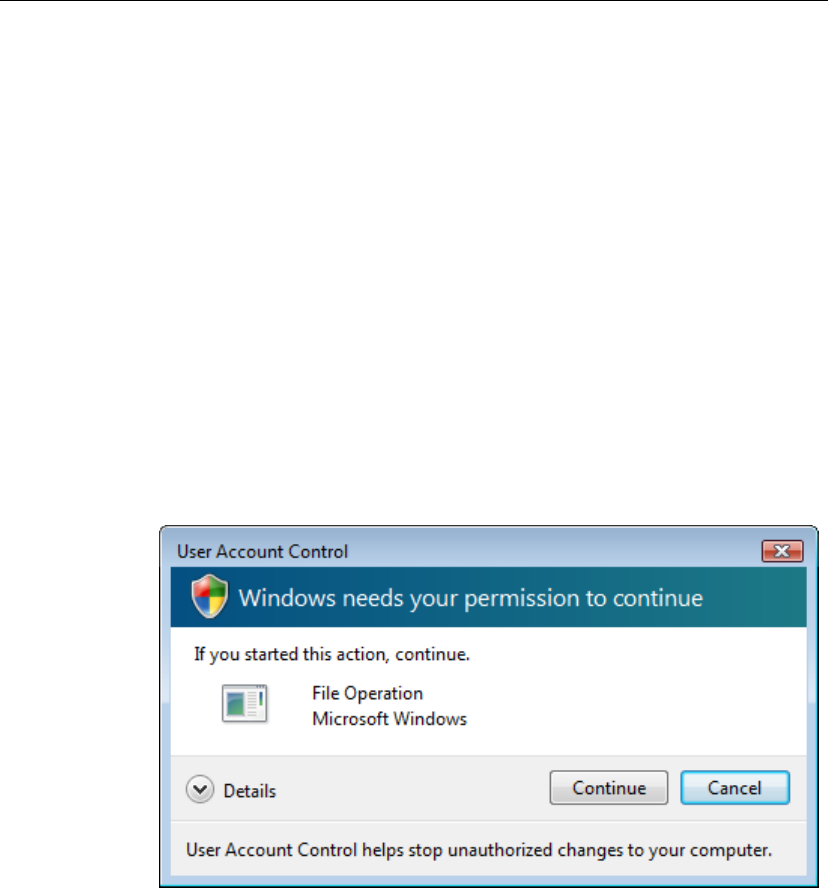

Figure 1: Blaming the user for security unusability

The major lesson that we’ve learned from the history of security (un-)usability is that

technical solutions like PKI and access control don’t align too well with usability

conceptual models. As a result, calling in the usability people after the framework of

the application’s user interface measures have been set in concrete by purely

Security Usability Fundamentals

18

technology-driven considerations is doomed to failure, since the user interface will be

forced to conform to the straightjacket constraints imposed by the security technology

rather than being able to exploit the full benefits of years of usability research and

experience. Blaming security problems on the user when they’re actually caused by

the user interface design (Figure 1) is equally ineffective.

This chapter covers some of the issues that affect security user interfaces, and looks at

various problems that you’ll have to deal with if you want to create an effective user

interface for your security application.

Theoretical vs. Effective Security

There can be a significant difference between theoretical and effective security. In

theory, we should all be using smart cards and PKI for authentication. However,

these measures are so painful to deploy and use that they’re almost never employed,

making them far less

effectively secure than basic usernames and passwords. Security

experts tend to focus exclusively on the measures that provide the best (theoretical)

security, but often these measures provide very little effective security because they

end up being misused, or turned off, or bypassed.

Worse yet, when they focus only on the theoretically perfect measures, they don’t

even try to get lesser security measures right. For example passwords are widely

decried as being insecure, but this is mostly because security protocol designers have

chosen to make them insecure. Both SSL and SSH, the two largest users of

passwords for authentication, will connect to anything claiming to be a server and

then hand over the password in plaintext after the handshake has completed. No

attempt is made to provide even the most trivial protection through some form of

challenge/response protocol, because everyone knows that passwords are insecure

and so it isn’t worth bothering to try and protect them.

This problem is exemplified by the IPsec protocol, which after years of discussion

still doesn’t have any standardised way to authenticate users based on simple

mechanisms like one-time passwords or password-token cards. The IETF even

chartered a special working group, IPSRA (IPsec Remote Access), for this purpose.

The group’s milestone list calls for an IPsec “user access control mechanism

submitted for standards track” by March 2001, but six years later its sole output

remains a requirements document [3] and an expired draft. As the author of one

paper on effective engineering of authentication mechanisms points out, the design

assumption behind IPsec was “all password-based authentication is insecure; IPsec is

designed to be secure; therefore, you have to deploy a PKI for it” [4]. The result has

been a system so unworkable that both developers and users have resorted to doing

almost anything to bypass it, from using homebrew (and often insecure)

“management tunnels” to communicate keys to hand-carrying static keying material

to IPsec endpoints to avoiding IPsec altogether and using mechanisms like SSL-based

VPNs, which were never designed to be used for tunnelling IP traffic but are being

pressed into service because users have found that almost anything is preferable to

having to use IPsec (this has become so pressing that there’s now a standard for

transporting TLS over UDP to allow it to fill the gap that IPsec couldn’t, datagram

TLS or DTLS [5]).

More than ten years after SSL was introduced, support for a basic password-based

mutual authentication protocol was finally (reluctantly) added, although even there it

was only under the guise of enabling use with low-powered devices that can’t handle

the preferred PKI-based authentication and lead to prolonged arguments on the SSL

developers list whenever the topic of allowing something other than certificates for

user authentication came up [6]. SSH, a protocol specifically created to protect

passwords sent over the network, still operates in a manner in which the recipient

ends up in possession of the plaintext password instead of having to perform a

challenge-response authentication in its standard mode of authentication. This

practice, under the technical label of a tunnelled authentication protocol, is known to

be insecure [7][8][9] and is explicitly warned against in developer documentation like

Apple’s security user interface guidelines, which instruct developers to avoid

“handing [passwords] off to another program unless you can verify that the other

Theoretical vs. Effective Security

19

program will protect the data” [10], and yet both SSL and SSH persist in using it.

What’s required for proper password-based security for these types of protocols is a

cryptographic binding between the outer tunnel and the inner authentication protocol,

which TLS’ recently-added mutual authentication finally performs, but to date very

few TLS implementations support it.

A nice example of the difference between theory and practice from the opposite point

of view is what its author describes as “the most ineffective CAPTCHA of all time”

[11]. Designed to protect his blog from comment spam, it requires submitters to type

the word “orange” into a text box when they provide a blog comment. This trivial

speed-bump, which would horrify any (non-pragmatist) security expert, has been

effective in stopping virtually all comment spam by changing the economic equation

for spammers, who can no longer auto-post blog spam as they can for unprotected or

monoculture-CAPTCHA protected blogs [12][13]. On paper it’s totally insecure, but

it works because spammers would have to expend manual effort to bypass it, and

keep expending effort when the author counters their move, which is exactly what

spam’s economic model doesn’t allow.

A lot of this problem arises from security’s origin in the government crypto

community. For cryptographers, the security must be perfect — anything less than

perfect security would be inconceivable. In the past this has lead to all-or-nothing

attempts at implementing security such as the US DoD’s “C2 in ‘92” initiative (a

more modern form of this might be “PKI or Bust”), which resulted in nothing in ’92

or at any other date — the whole multilevel-secure (MLS) operating system push

could almost be regarded as a denial-of-service attack on security, since it largely

drained security funding in the 1980s and was a significant R&D distraction. As

security god Butler Lampson observed when he quoted Voltaire, “The best is the

enemy of the good” (“Le mieux est l’ennemi du bien”) — a product that offers

generally effective (but less than perfect) security will be panned by security experts,

who would prefer to see a theoretically perfect but practically unattainable or

unusable product instead [14].

Psychologists refer to this phenomenon as zero-risk bias, the fact that people would

rather reduce a risk (no matter how small) to zero than create a proportionally much

larger decrease that doesn’t reduce it to zero [15]. Instead of reducing one risk from

90% to 10% they’ll concentrate on reducing another risk from 1% to 0%, yielding a

risk reduction of 1% instead of 80%. Zero-risk bias occurs because risk makes

people worry, and reducing it to zero means that they don’t have to worry about it any

more. Obviously this only works if you’re prepared to ignore other risks, which is

why the phenomenon counts as a psychological bias (philosophers, who see things in

more abstract terms, simply tag these things ‘fallacies’). An example of such a zero-

risk bias was the US’ total ban on carcinogenic food additives in the 1950s, which

increased the overall risk because (relatively) high-risk non-carcinogenic additives

were substituted for (relatively) low-risk carcinogenic ones. The bias ignored the fact

that many additives were potentially harmful and focused only on the single class of

carcinogenic additives.

The striving for impossibly perfect security comes about because usability has never

been a requirement put on those designing security protocols or setting security

policies. For example one analysis of a military cryptosystem design reports that “the

NSA designers focused almost exclusively on data confidentiality […] if that meant

that it was expensive, hard to use, and required extremely restrictive and awkward

policy, or if it might lock out legitimate users from time to time, then so be it” [16].

This type of approach to usability issues was summed up by an early paper on

security usability with the observation that “secure systems have a particularly rich

tradition of indifference to the user, whether the user is a security administrator, a

programmer, or an end user [...] Most research and development in secure systems

has strong roots in the military. People in the military are selected and trained to

follow rules and procedures precisely, no matter how onerous. This user training and

selection decreased the pressure on early systems to be user friendly” [17].

Systems such as this, designed and implemented in a vacuum, can fail

catastrophically when exposed to real-world considerations. As the report on the

Security Usability Fundamentals

20

military system discussed above goes on to say, “once the nascent system left the

NSA laboratories the emphasis on security above all changed dramatically. The

people who approved the final design were not security experts at all. They were the

Navy line officers who commanded the fleet. Their actions show that they were far

more concerned with data availability rather than data confidentiality [...] any ship or

station which became isolated by lack of key became an immediate, high-level issue

and prompted numerous and vigorous complaints. A key compromise, by contrast,

was a totally silent affair for the commander. Thus, commanders were prodded

toward approving very insecure systems”. A similar effect occurs with computer

security software that pushes critical security decisions into the user interface, where

users will find ways to work around the security because they don’t understand it and

it’s preventing them from doing their job.

The best security measures are ones that you can easily explain to users so that they

understand the risk and know how to respond appropriately. Don’t be afraid to use

simple but effective security measures, even if they’re not the theoretical best that’s

available. You should however be careful not to use effective (as opposed to

theoretically perfect) security as an excuse for weak security. Using weak or

homebrew encryption mechanisms when proven, industry-standard ones are available

isn’t effective security, it’s weak security. Using appropriately secured passwords

instead of PKI is justifiable, effective security (security researcher Simson Garfinkel

has termed this “The principle of good security now” [18]).

An example of the conflict between theoretical and effective security is illustrated by

what happens when we increase the usability of the security measures in an

application. Computer users are supported by a vast and mostly informal network of

friends, family, and neighbours (for home users) or office-mates and sysadmins (for

work users) who are frequently given passwords and access codes in order to help the

user with a problem. The theoretical security model says that once keys and similar

secrets are in the hands of the user they’ll take perfect care of them and protect them

in an appropriate manner. However in practice the application interface to the keys is

so hard to use that many users rely on help from others, who then need to be given

access to the keys to perform their intended task. Increasing the usability of the

security mechanisms helps close this gap between theory and practice by enabling

users to manage their own security without having to outsource it to others.

In some cases usability is a fundamental component of a system’s security. The Tor

anonymity service was specifically designed to maximise usability (and therefore to

maximise the number of users) because an unusable anonymity system that attracts

few users can’t provide much anonymity [19].

User Conditioning

It’s often claimed that the way to address security issues is through better user

education. As it turns out, we’re been educating users for years about security,

although unfortunately it’s entirely the wrong kind of education. “Conditioning”

might be a better term for what’s been happening. Whenever users go online, they’re

subjected to a constant barrage of error messages, warnings, and popups: DNS errors,

transient network outages, ASP errors, Javascript problems, missing plugins,

temporary server outages, incorrect or expired certificates, problems connecting to

the MySQL backend (common on any slashdotted web site), and a whole host of

other issues. In one attack, covered in more detail in the section on usability testing

below, researchers actually took advantage of this to replace security-related web site

images with a message saying that they were being upgraded and would return at a

later date.

To see just how tolerant browsers are of errors, enable script debugging (Internet

Explorer), look at the error console (Firefox), or install Safari Enhancer and look at

the error log (Safari). No matter which detection method you use, you can barely

navigate to any Javascript-using page without getting errors, sometimes a whole

cascade of them from a single web page. Javascript errors are so pervasive that

browsers hide them by default because the web would be unusable if they even

displayed them, let alone reacted to them. The result is a web ecosystem that bends

User Conditioning

21

over backwards to avoid exposing users to errors, and a user base that’s become

conditioned to ignoring anything that does leak through.

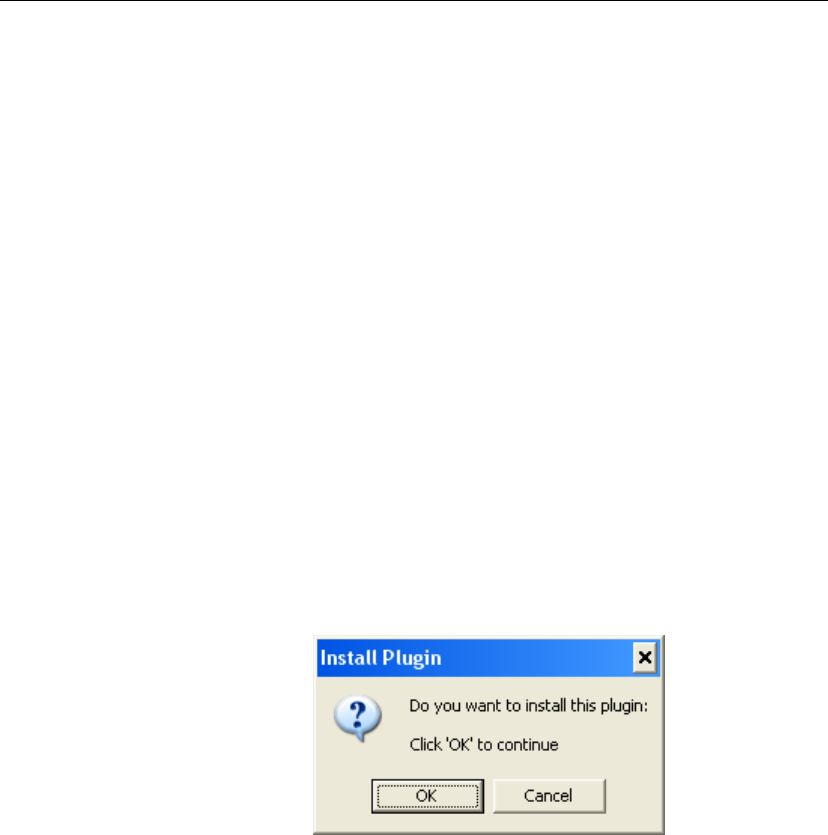

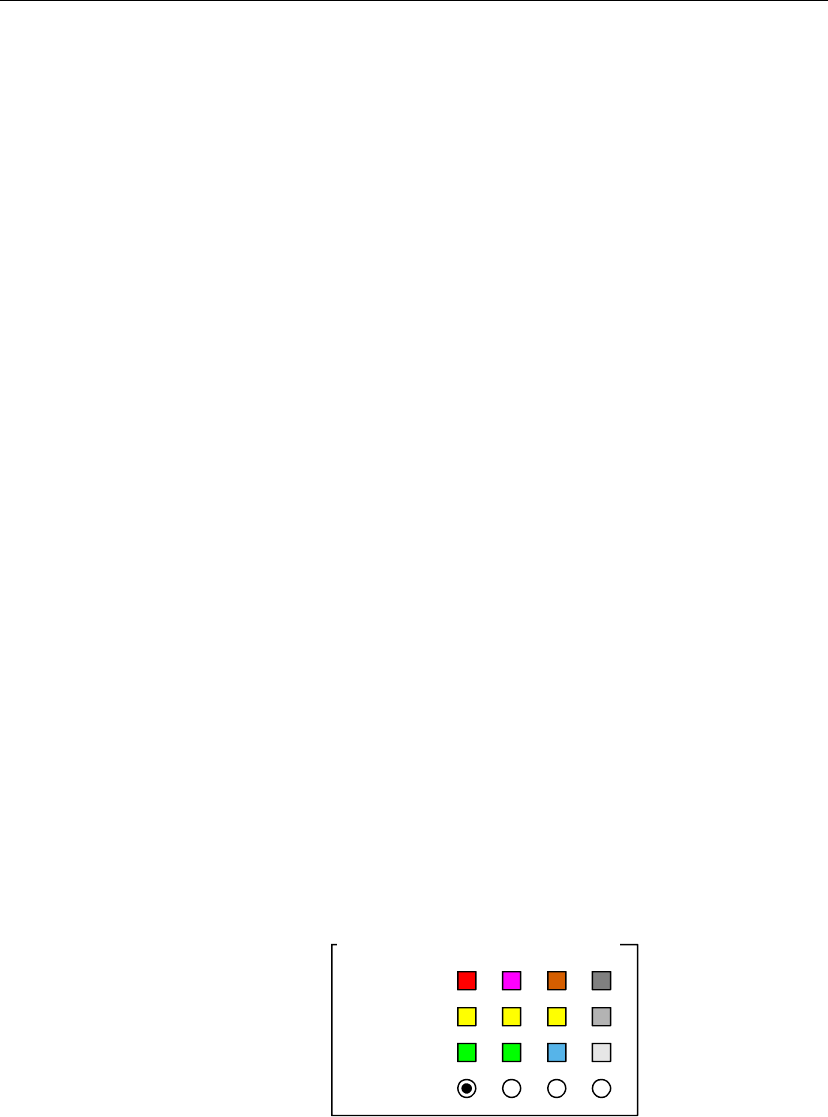

Figure 2: The user has clicked on a button, we’d better pop up a warning dialog

Sometimes the warnings don’t even correspond to real errors but seem to exist only

for their nuisance value. For example what is the warning in Figure 2 trying to

protect us from? Since we’re using a web browser, it’s quite obvious that we’re

about to send information over the Internet. Does a word-processor feel the need to

warn users that it’s about to perform a spell check, or a spreadsheet that it’s about to

recalculate a row? Since this warning is automatically displayed when anything at all

is sent, we have no idea what the significance of the message is. Are we sending an

online banking password, or just searching ebay for cheap dog food? (In this case the

browser was trying to protect us from sending a query for dog food to ebay).

This warning would actually be useful in the situation where a user is entering their

password on a US banks’ insecure login page (discussed later on), but by then the

dialog has long since been disabled due to all the false alarms.

This dialog is a good example of the conventional wisdom that security user

interfaces are often added to applications merely so that developers can show off the

presence of security [20]. Since they’ve put a lot of effort into implementing their

encryption algorithms and security protocols, they want to show off this fact to users.

Unfortunately most users couldn’t care less about the details, they just want to be

assured that they’re secure without needing to have the nitty-gritty details thrust in

their face all the time. This is an unfortunate clash between the goals of developers

and users: developers want to show off their work, but since it doesn’t provide any

direct benefit to users, users don’t want to see it. This type of user interface mostly

serves the needs of the developer rather than the user.

Figure 3: What the previous dialog is really saying

This (and many similarly pointless dialogs that web browsers and other applications

pop up) are prime examples of conditioning users to ignore such messages — note

the enabled-by-default “Do not show this message again” checkbox, in which the

message’s creators admit that users will simply want it to go away and not come back

again. The creation of such dialogs is very deeply ingrained in the programmer

psyche. When Jeff Bezos came up with Amazon’s one-click shopping system, he had

to go back and tell his developers that “one-click” really did mean that the customer

only had to make one click, not one click plus a warning dialog plus another click

Security Usability Fundamentals

22

(this works fine in the Amazon case since their order fulfilment system gives you

several hours grace to change your mind).

Apple’s user interface design guidelines actually equate the appearance of frequent

alerts with a design flaw in the underlying application. OpenBSD, a BSD distribution

that concentrates specifically on security, has a policy of “no useless buttons”

(unfortunately documented only in developer folklore), meaning that if a particular

setting is secure and works for 95% of users then that’s what gets used. Microsoft

has also finally acknowledged this problem in their Vista user interface guidelines

with the design principle that Vista shouldn’t display error messages when users

aren’t likely to change their behaviour as a result of the message, preferring that the

message be suppressed if it’s not going to have any effect anyway (it remains to be

seen how closely this guideline will be adhered to in practice). In fact a general

guideline for dialogs is to avoid ones that aren’t created as a result of a deliberate user

action [20], since users tend to react rather poorly to software events that aren’t a

direct consequence of an action that they’ve taken.

Popups are a user interface instance of the Tragedy of the Commons. If they were

less frequent they’d be more effective, but since they’re all over the place anyway

there’s nothing to stop

my application from popping up a few more than everyone

else’s application in order to get the user’s attention. An economist would describe

this situation by saying that popups have declining marginal utility.

Usability designer Alan Cooper describes these error boxes as “Kafkaesque

interrogations with each successive choice leading to a yet blacker pit of retribution

and regret” [21]. They’re a bit like the land mines that sometimes feature in old war

movies, you put your foot down and hear the click and know that although you’re

safe now, as soon as you take the next step you’re in for a world of hurt.

Unfortunately the war movie get-out-of-jail-free card of being the film’s leading

character and therefore indispensable to the plot doesn’t work in the real world —

you’re just another redshirt, and you’re not coming back from this mission.

The fix for all of these dialog-box problems is to click ‘Yes’, ‘OK’, or ‘Cancel’ as

appropriate if these options are available, or to try again later if they aren’t. Any user

who’s used the Internet for any amount of time has become deeply conditioned to

applying this solution to all Internet/network problems. These warning dialogs don’t

warn, they just hassle. This warning message overload has actually been exploited by

at least one piece of mobile malware, the Cabir virus, which reconnected to every

device within range again and again and again until users eventually clicked ‘OK’

just to get rid of the message [22] (the situation wasn’t helped by the fact that

Symbian OS pops up a warning for every application, even a signed one, that

originates from anywhere other than Symbian, training users to click ‘OK’

automatically).

Even when popups provide legitimate warnings of danger, user reactions to the

warning may not be what the developers of the application were expecting. The

developers of the TrustBar browser plugin, which warns users of phishing sites,

found in one evaluation of the system that almost all users disabled the popups or

even stopped using the plugin entirely because they found the popups disturbing and

felt less safe due to the warnings [23]. Although the whole point of security warnings

is to, well, warn of security issues, this makes users feel uneasy to the point where

they’ll disable the warnings in order to feel better

2

. As security researcher Amir

Herzberg puts it, “Defend, don’t ask”. Building something that relies on user

education to be effective is a recipe for disaster. No-one has the time to learn how to

use it, so they’ll only be adopted by a small number of users, typically hard-core

geeks and, in consumer electronics, gadget fanatics [24].

2

Applying the ostrich algorithm is a natural human reaction to things that make us uneasy. When a security

researcher demonstrated to his parents that the lock on the front door of their house could be picked in a matter of

seconds and offered relatively easy unauthorised entry to their home their reaction was to ask him not to inform

them of this again. This extends beyond security and carries over to general life, if you’d like to read more about

this look up a reference to “cognitive dissonance”.

User Conditioning

23

The best approach to the human-factors problem posed by warning dialogs is to

redesign the way that the application works so that they’re no longer needed. Since

users will invariably click ‘OK’ (or whatever’s needed to make the dialog disappear

so that they get on with their job), the best way to protect the user is to actually do the

right thing, rather than abrogating responsibility to the user. As Mr.Miyagi says in

Karate Kid II, “Best block, not be there”, or as rendered into a computing context by

Gordon Bell, “The cheapest, fastest, and most reliable components of a computer

system are those that aren’t there”. In a security user interface context, the best

warning dialog is one that isn’t there, with the application doing the right thing

without having to bother the user.

Certificates and Conditioned Users

When certificates are used to secure network communications, a genuine attack

displays symptoms that are identical to the dozens of other transient problems that

users have been conditioned to ignore. In other words we’re trying to detect attacks

using certificates when an astronomical false positive rate (endless dialogs and

warnings crying wolf) has conditioned users to ignore any warnings coming from the

certificate layer. In order to be effective, the false positive rate must be close to zero

to have any impact on the user.

An example of the effect of this user conditioning was revealed in a recent case where

a large bank accidentally used an invalid certificate for its online banking services.

An analysis of site access logs indicated that of the approximately 300 users who

accessed the site, just one single user turned back when faced with the invalid

certificate [25]. Although privacy concerns prevented a full-scale study of users’

reactions from being carried out, an informal survey indicated that users were treating

this as yet another transient problem to be sidestepped. Psychologists call this

approach judgemental heuristics (non-psychologists call it “guessing”), a shortcut to

having to think that works reasonably well most of the time at the cost of an

occasional mistake, and the result of the use of these heuristics is termed an automatic

or click, whirr response [26]. As an example of the use of judgemental heuristics,

one user commented that “Hotmail does this a lot, you just wait awhile and it works

again”. The Internet (and specifically the web and web browsers) have conditioned

users to act this way: Guessing is cheap, if you get it right it’s very quick, and if you

don’t get it right you just click the back button and try again. This technique was has

been christened “information foraging” by HCI researchers [27], but is more

commonly known as “maximum benefit for minimum effort”, or by somewhat more

negative label of “laziness” (in this case not in the usual negative sense, it’s merely

optimising the expenditure of effort).

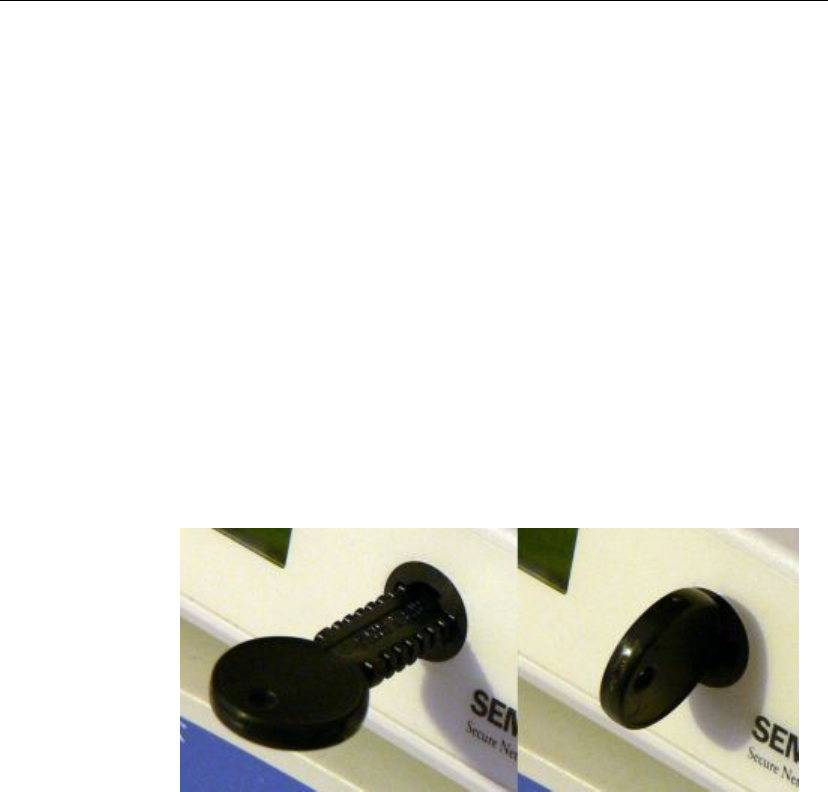

In a similar case, this time with a government site used to pay multi-thousand dollar

property taxes, users ignored the large red cross and warning text that the certificate

was invalid shown in Figure 4 for over two months before a security expert notified

the site administrators that they needed to fix the certificate. In yet another example,

a major US credit union’s certificate was invalid for over a year without anyone

noticing.

Security Usability Fundamentals

24

Figure 4: This certificate warning didn’t stop users from making multi-

thousand-dollar payments via the site

These real-life examples, taken from major banking sites and a large government site,

indicate that certificates, when deployed into a high-false-positive environment, are

completely ineffective in performing their intended task of preventing man-in-the-

middle attacks.

SSH fares little better than SSL, with the majority of users accepting SSH server keys

without checking them. This occurs because, although SSH users are in general more

security-aware than the typical web user, the SSH key verification mechanism

requires that the user stop whatever they’re trying to do and verify from memory a

long strong of hex digits (the key fingerprint) displayed by the client software. A

relatively straightforward attack, for the exceptional occasion where the user is

actually verifying the fingerprint, is to generate random keys until one of them has a

fingerprint whose first few hex digits are close enough to the real thing to pass muster

[28].

There are even automated attack tools around that enable this subversion of the

fingerprint mechanism. The simplest attack, provided by a MITM tool called

ssharpd 29, uses ARP redirection to grab an SSH connect attempt and then reports a

different protocol version to the one that’s actually in use (it can get the protocol

version from the information passed in the SSH handshake). Since SSHv1 and

SSHv2 keys have different fingerprints, the victim doesn’t get the more serious key-

changed warning but merely the relatively benign new-key warning. Since many

users never check key fingerprints but simply assume that everything should be OK

on the first connect, the attack succeeds and the

ssharp MITM has access to the

session contents [30]

3

.

> ssh test@testbox

The authenticity of host 'testbox (192.168.1.38)' can't be

established.

RSA key fingerprint is

86:9c:cc:c7:59:e3:4d:0d:6f:58:3e:af:f6:fa:db:d7.

Are you sure you want to continue connecting (yes/no)?

> ssh test@testbox

The authenticity of host 'testbox (192.168.1.38)' can't be

established.

RSA key fingerprint is

86:9c:cc:d7:39:53:e2:07:df:3a:c6:2f:fa:ba:dd:d7.

Are you sure you want to continue connecting (yes/no)?

Figure 5: Real (top) and spoofed (bottom) SSH servers

3

Since ssharp is based on a modified, rather old, version of OpenSSH, it’d be amusing to use one of the assorted

OpenSSH security holes to attack the MITM while the MITM is attacking you.

User Conditioning

25

A much more interesting attack can be performed using Konrad Rieck’s concept of

fuzzy fingerprints, which are fingerprints that are close enough to the real thing to

pass muster. As with the standard SSH MITM attack, there’s a tool available to

automate this attack for you [31]. This attack, illustrated in Figure 5, takes a target

SSH server key and generates a new key for which the fingerprint is close enough to

fool all but a detailed, byte-for-byte comparison. Since few users are likely to

remember and check the full 40-hex-digit fingerprint for each server that they connect

to, this attack, combined with

ssharpd, is capable of defeating virtually any SSH

setup [32]. This is another instance where a TLS-PSK style mechanism would

protect the user far more than public-key authentication does.

SSL Certificates: Indistinguishable from Placebo

The security model used with SSL server certificates might be called honesty-box

security: In some countries newspapers and similar low-value items are sold on the

street by having a box full of newspapers next to a coin box (the honesty box) into

which people are trusted to put the correct coins before taking out a paper. Of course

they can also put in a coin and take out all the papers, or put in a washer and take out

a paper, but most people are honest and so most of the time it works. SSL’s

certificate usage is similar. If you use a $495 certificate, people will come to your

site. If you use a $9.95 certificate, people will come to your site. If you use a $0 self-

signed certificate, people will come to your site. If you use an expired or invalid

certificate, people will come to your site. If you’re a US financial institution and use

no certificate at all but put up a message reassuring users that everything is OK (see

Figure 6), people will come to your site. In medical terms, the effects of this

“security” are indistinguishable from placebo.

Figure 6: Who needs SSL when you can just use a disclaimer?

In fact the real situation is even worse than this. There has in the past been plenty of

anecdotal evidence of the ineffectiveness of SSL certificates, an example being the

annual SecuritySpace survey, which reported that 58% of all SSL server certificates

in use today are invalid without having any apparent effect on users of the sites [33].

However, it wasn’t until mid-2005, ten years after their introduction, that a rigorous

study of their actual effectiveness was performed. This study, carried out with

computer-literate senior-year computer science students (who one would expect

would be more aware of the issues than the typical user) confirmed the anecdotal

evidence that invalid SSL certificates had no effect whatsoever on users visiting a

site. Security expert Perry Metzger has summed this up, tongue-in-cheek, as “PKI is

like real security, only without the security part”.

It gets worse though. In one part of the study, users were directed to a site that used

no SSL at all, at which point several of the users who had been quite happy to use the

site with an invalid certificate now refused to use it because of the lack of SSL. Users

assumed that the mere existence of a certificate (even if it was invalid) meant that it

was safe to use the site, while they were more reluctant to use a site that didn’t use

SSL or certificates. This is quite understandable — no-one worries about an expired

safety certificate in an elevator because all it signifies is that the owner forgot to get a

Security Usability Fundamentals

26

new one, not that the elevator will crash into the basement and kill its occupants the

next time it’s used. In fact for the vast majority of elevator users the most that they’ll

ever do is register that some form of framed paperwork is present. Whether it’s a

currently valid safety certificate or an old supermarket till printout doesn’t matter.

This real-world conditioning carries across to the virtual world. To quote the study,

“the actual security of existing browsers is appalling when the ‘human in the loop’ is

considered. Because most users dismiss certificate verification error messages, SSL

provides little real protection against man-in-the-middle attacks. Users actually

behaved less insecurely when interacting with the site that was

not SSL-secured”

[34]. The astonishing result of this research is that not only is the use of SSL

certificates in browsers indistinguishable from placebo, it’s actually

worse than

placebo because users are happy to hand over sensitive information to a site just

because it has a certificate. If a medicine were to act in this way, it would be

withdrawn from sale.

Another example of the clash of certificate theory with reality was reported by a

security appliance vendor. Their products ship with a pre-generated self-signed

certificate that ensures that they’re secure out of the box without the user having to

perform any additional certificate setup. Because it’s a self-signed certificate, the

user gets a certificate warning dialog from the browser each time they connect to the

appliance, which in effect lets them know that the security is active. However, if they

replace the self-signed certificate with an “official” CA-issued one, the browser

warning goes away. Having lost the comforting SSL browser warning dialog, users

were assuming that SSL was no longer in effect and complained to the vendor [35].

Again, users treated the (at least from a PKI theory point of view) less secure self-

signed certificate setup as being more secure than the official CA-issued one.

A similar problem occurred during an experiment into the use of S/MIME signed

email. When signed messaging was enabled, users experienced arcane PKI warning

dialogs, requests to insert crypto cards, X.509 certificate displays, and all manner of

other crypto complexity that they didn’t much understand. This caused much

apprehension among users, the exact opposite of the reassurance that signed email is

supposed to provide. The conclusion reached was to “sign your messages only to

people who understand the concept. Until more usable mechanisms are integrated

into popular email clients, signatures using S/MIME should remain in the domain of

‘power users’” [36]. Since a vanishingly small percentage of users really understand

signed email, the actual message of the study is “Don’t use signed email”.

This result is very disturbing to security people. I’ve experienced this shock effect a

number of times at conferences when I’ve mentioned the indistinguishable-from-

placebo nature of SSL’s PKI. Security people were stunned to hear that it basically

doesn’t work, and didn’t know seem to know what to do with the information. A

similar phenomenon has occurred with researchers in other fields as well.

Inattentional blindness, which is covered later on, was filed away by psychologists

for over a quarter of a century after its discovery in 1970 because it was disturbing

enough that no-one quite knew how to deal with it [37].

Social scientists call this a “fundamental surprise”, a profound discrepancy between

your perception of the real world and reality [38]. This differs from the more usual

situational surprise, a localised event that requires the solution of a specific problem,

in that it requires a complete reappraisal of the situation in order to address it (there

isn’t much sign of this happening with PKI yet). Another term for the phenomenon is

an Outside Context Problem, from author Iain Banks’ novel

Excession, in which he

describes it as something that you encounter “in the same way that a sentence

encounters a full stop” [39].

This situation isn’t helped by the fact that even if PKI worked, obtaining bogus

certificates from legitimate CA’s isn’t that hard. For example researcher David

Mazieres was able to obtain a $350 Verisign certificate for a nonexistent business by

providing a Doing Business As (DBA) license [40], which requires little more than

payment of the US$10-$50 filing fee. In case you’re wondering why a DBA (referred

to as a “trading as” license in the UK) has so little apparent security, it’s deliberately

User Conditioning

27

designed this way to allow small-scale businesses such as a single person to operate

without the overhead of creating a full business entity. DBAs were never intended to

be a security measure, they were designed to make operating a small independent

business easier (their effectiveness is indicated by the fact that the US alone had more

than 20 million sole proprietorships and general partnerships recorded for the 2004

tax year). $9.95 certificates are even less rigorous, simply verifying the ability to

obtain a reply from an email address. How much checking do users expect the CA to

do for all of $9.95?

The User is Trusting… What?

CAs are often presented as “trusted third parties”, but as security researcher Scott Rea

has pointed out they’re really just plain “third parties” because the user has no basis

for trusting them [36], and for the large number of unknown CAs hardcoded into

common applications they’re explicitly untrusted third parties because the user

doesn’t even know who they are. Consider the dialog shown in Figure 7, in which

the user is being told that they’ve chosen to trust a certain CA. Most users have no

idea what a CA is, and they most certainly never chose to trust any of them. It’s not

even possible to determine who or what it is that they’re so blindly trusting. The

certificate, when the ‘View’ button is clicked, is issued by something claiming to be

“Digi-SSL Xp” (whatever that is), and that in turn is issued by “UTN-USERFirst-

Hardware” (ditto). In other words the user is being informed that they’re trusting an

unknown entity which is in turn being vouched for by another unknown entity. To

paraphrase Douglas Adams, “This must be some strange new use of the word ‘trust’

with which I wasn’t previously familiar”.

Figure 7: Who are these people and why am I trusting them?

This dialog is reminiscent of a fable about the car that Ken Thompson, one of the

creators of Unix, helped design. Whenever there’s a problem, a giant ‘?’ lights up on

the dashboard. When asked about this, Ken responds that “the experienced user will

usually know what’s wrong”. This dialog presents the same user interface as Ken’s

car, just a giant ‘?’ flashing in the middle of the screen.

A contributing factor in the SSL certificate problem is the fact that the security

warnings presented to the user that are produced by certificates often come with no

supporting context. Danish science writer Tor Nørretranders calls this shared context

between communicating parties “exformation” [41]. In the case of certificates there’s

no certificate-related exformation shared between the programmer and the user. Even

at the best of times users have little chance of effectively evaluating security risk [42]

(even experts find this extraordinarily difficult, which is why it’s almost impossible to

obtain computer security insurance), and the complete lack of context provided for

the warning makes this even more difficult. Since web browsers implicitly and

Security Usability Fundamentals

28

invisibly trust a large number of CAs, and by extension a vast number of certificates,

users have no exformation that allows them to reason about certificates when an error

message mentioning one appears. One user survey found that many users assumed

that it represented some form of notice on the wall of the establishment, like a health

inspection notice in a restaurant or a Better Business Bureau certificate, a piece of

paper that indicates nothing more than that the owner has paid for it (which is indeed

the case for most SSL certificates).

Similarly, the introduction of so-called high-assurance or extended validation (EV)

certificates that allow CAs to charge more for them than standard ones is simply a

case of rounding up twice the usual number of suspects — presumably somebody’s

going to be impressed by it, but the effect on phishing will be minimal since it’s not

fixing any problem that the phishers are exploiting. Indeed, cynics would say that

this was exactly the problem that certificates and CAs were supposed to solve in the

first place, and that “high-assurance” certificates are just a way of charging a second

time for an existing service. A few years ago certificates still cost several hundred

dollars, but now that you can get them for $9.95 the big commercial CAs have had to

reinvent themselves by defining a new standard and convincing the market to go back

to the prices paid in the good old days. When you consider certificates using a purely

financial perspective then from a large-company mindset (“cost is no object”) this

may make some sort of sense but from an Internet mindset (“anything that costs is

bypassed”), it’s simply not going to work. Not everyone can issue or afford these

extra-cost certificates, and not everyone is allowed to apply for them — the 20

million sole proprietorships and general partnerships mentioned earlier are

automatically excluded, for example. High-assurance certificates are a revenue

model rather than a solution for users’ problems, with the end result being the

creation of barriers to entry rather than the solution of any particular security

problem.

Predictably, when the effectiveness of EV certificates was tested once Internet

Explorer with its EV support had been around for a few months, they were found to

have no effect on security [43]. One usability researcher’s rather pithy summary of

the situation is that “the EV approach is to do more of what we have already

discovered doesn’t work” [44]. As with the 2005 study on the effectiveness of

browser SSL indicators which found that users actually behaved less insecurely when

SSL was absent, this study also produced a surprising result: Users who had received

training in browser EV security behaved less securely than ones who hadn’t! The

reason for this was that the browser documentation talked about the use of

(ineffective, see other parts of this section) phishing warnings, and users then relied

on these rather than the certificate security indicators to assess a site. As a result they

were far more likely to classify a fraudulent site as valid than users who had received

no security training. This unexpected result emphasises the importance of post-

release testing when you introduce new security features, which is covered in more

detail later in the section on security testing.

In order for a certificate-differentiation mechanism to work the user would need to

have a very deep understanding of CA brands (recall that the vast majority of users

don’t even know what a CA is, let alone knowing CA names and brands), and know

which of the 100-150 CA certificates hard-coded into web browsers are trustworthy

and which aren’t. No-one, not even the most knowledgeable security expert, knows

who most of these CAs really are. The CA brands are competing against multi-

million dollar advertising campaigns from established brands like Nike and Coke —

it’s no contest [45].

Security companies aren’t helping with this confusion by their handling of things like

trust marks and site security seals. Although these are basically worthless — anyone

can copy the graphic to their site, and by the time it’s finally discovered (if it’s ever

discovered) it’s too late to do much about it — providers of some seals like

Verisign’s Secure Site Seal compound the problem by tying it to their issuing of SSL

server certificates. As a result Verisign’s brand is being attached to a completely

insecure site-marking mechanism, with the unfortunate effect that a significant

proportion of users are more likely to trust sites that display the mark [46]. Phishers

User Conditioning

29

can therefore increase the effectiveness of their phishing by copying the graphics of

any site seals they feel like using to their sites.

The problem with CA branding (and lack of brand recognition) was demonstrated in

the study of user recognition of CA brands discussed in the next section in which, of

the users who actually knew what a CA was (many didn’t), far more identified Visa

as a trusted CA than Verisign, despite the fact that Verisign is the world’s largest CA

and Visa isn’t a CA at all [18]. Combine this with the previously-described user

response to certificates and you have a situation where a bogus CA with a well-

known brand like Visa will be given more weight than a genuine CA like Verisign.

After all, what user would doubt

https://www.visa.com, certified by Visa’s

own CA?

In practice almost everything trumps certificate-based SSL security indicators. One

large-scale study found, for example, that if users were presented with two identical

pages of which one was SSL-protected and had a complex URL,

https://www.-

accountonline.com/View?docId=Index&siteId=AC&langId=EN

and

the other wasn’t secured and had a simple URL,

http://www.-

attuniversalcard.com

, people rated the unprotected version with the simple

URL as considerably more trustworthy than the protected one with the complex URL

[47] (the unsecured page — note the different domains that the two are hosted in,

even though they’re the same page — has since been updated to redirect to the

secured page). Other factors that usability researchers have found will trump SSL

indicators include:

The complexity of the web page. Using fancy graphics and Flash animation

exploits the watermark fallacy, in which users translate the use of complex

features in physical objects that’s used for anti-counterfeiting of items like

banknotes and cheques into an indication of authenticity in the virtual world.

Pseudo-personalisation such as displaying the first four digits of the user’s credit

card number, for example 4828-****-****-****, to “prove” that you know

them. The first four digits are identical across large numbers of users and

therefore relatively easy to anticipate. For attacks targeting the user bases of

individual banks, it’s even easier because prefixes for all cards from that bank

will be identical. For example when phishers spammed (possible) customers of

the Mountain America credit union in Salt Lake City, they were able to display

the first five digits of the card as “proof” of legitimacy because all cards issued

by the bank have the same prefix [48] (in addition they used a legitimate CA-

issued certificate to authenticate their phishing site).

Providing an independent verification channel for information such as a phone

number to call. This exploits the “not-my-problem” fallacy, no-one actually calls

the number since they assume that someone else will. In addition phishers have

already set up their own interactive voice response (IVR) systems using VoIP

technology that mimic those of the target bank, so having a phone number to call

is no guarantee of authenticity [49][50].

The “not-my-problem” fallacy is particularly noteworthy here because it first gained

widespread attention in 1964 when a woman named “Kitty” Genovese was brutally

murdered next to her New York apartment building. As with the phone verification

channel for web pages, people who heard her cries for help during separate attacks

spread over about thirty minutes assumed that someone else had called the police and

so didn’t call themselves (although some of the details, and in particular the number

and apparent apathy of some of the bystanders, was exaggerated by journalists). This

event was later investigated in depth by psychologists, who termed the phenomenon

the “bystander effect”. They found that the more bystanders there are, the less likely

that any one is to come to a victim’s aid because the assumption is that someone else

must have already done so [51]. This effect, which arises due to diffusion of

responsibility, is so noticeable that it’s been quantified by experimental

psychologists. In one experiment, having one bystander present resulted in 85% of

subjects stepping in to help. With two bystanders this dropped to 62%, and with five

Security Usability Fundamentals

30

bystanders it had fallen to 32%, with each one thinking that it was someone else’s job

to intervene [52].

The bystander effect exists in many variations. For example in one experiment

subjects were shown a sample line X and three other lines A, B, and C, of which A

was shorter than X, B was the same length, and C was longer. The subjects were

placed in a room with a varying number of other people who reported that either the

shorter A or the longer C matched X rather than the equal-length B. With one other

person present, 3% of subjects agreed with the (incorrect) assessment. With two

others present this rose to 14%, and with three others it went to 32% (these figures

aren’t exactly identical to the ones from the previous experiment; the point is that

there’s a demonstrable effect that increases with the number of bystanders, not that

some hard-and-fast figure applies across all cases [53].

When people were asked why they’d done this, they explained it away on the basis

that they wanted to fit in, or (more rationally, since the supposed reason for the

experiment was that it was a vision test) that they thought there might be something

wrong with their eyesight since the others couldn’t

all be wrong (although technically

this could be taken as a variation of wanting to fit in).

In the Internet the bystander effect is particularly pernicious. Recall that the effect

increases with the number of bystanders present. In the Genovese murder, the

presence of a relatively small group people was enough to trigger the bystander

effect. On the Internet, the

entire world is potentially a bystander. This is the worst

possible situation into which you can deploy a mechanism that can fall prey to the

bystander effect, and although phishers probably aren’t psychology graduates they do

know how to take advantage of this.

Alongside these tricks, there are myriad other ways that are being actively exploited

by phishers. Any of these factors, or factors in combination, can trump SSL security

in the eyes of the users.

Password Mismanagement

The start of this section touched on the poor implementation of password security by

applications, pointing out that both SSH and SSL/TLS, protocols designed to secure

(among other things) user passwords, will connect to anything claiming to be a server

and then hand over the user’s password in plaintext form without attempting to apply

even the most basic protection mechanisms. However, the problem goes much

further than this. Applications (particularly web browsers) have conditioned users

into constantly entering passwords with no clear indication of who they’re handing

them over to. These password mechanisms are one of the many computer processes

that are training users to become victims of phishing attacks.

Figure 8: Gimme your password!

Consider the dialog in Figure 8, in this case a legitimate one generated by the Firefox

browser for its own use. This dialog is an example of geek-speak at its finest. In

order to understand what it’s asking, you need to know that Netscape-derived

browsers use the PKCS #11 crypto token interface internally to meet their

cryptographic security requirements. PKCS #11 is an object-oriented interface for

devices like smart cards, USB tokens, and PCMCIA crypto cards, but can also be

used as a pure software API. When there’s no hardware crypto token available, the

User Conditioning

31

browser uses an internal software emulation, a so-called PKCS #11 soft-token. In

addition, the PKCS #11 device model works in terms of user sessions with the device.

The default session type is a public session, which only allows restricted (or even no)

access to objects on the device and to device functionality. In order to fully utilise the

device, it’s necessary to open a private session, which requires authenticating yourself

with a PIN or password. What the dialog is asking for is the password that’s required

to open a private session with the internal PKCS #11 soft-token in order to gain

access to the information needed to access a web site.

Figure 9: Password dialog as the user sees it

Possibly as much as one hundredth of one percent of users exposed to this dialog will

understand that. For everyone else, it may as well be written in Klingon (see Figure

9). All they know is that whenever they fire up the browser and go to a web site that

requires authentication, this garbled request for a password pops up. After an initial

training period, their proficiency increases to the point where they’re barely aware of

what they’re doing when they type in their password — it’s become an automatic

process of the kind described in the next chapter.

Other poorly-thought-out password management systems can be similarly

problematic. The OpenID standard, a single-sign-on mechanism for web sites, goes

to a great deal of trouble to remain authentication-provider neutral. The unfortunate

result is what security practitioner Ben Laurie has termed “a standard that has to be

the worst I’ve ever seen from a phishing point of view” [54] because it allows any

web site to steal the credentials you use at any other web site. To do this, an attacker

sets up a joke-of-the-day or animated-dancing-pigs or kitten-photos web page or

some other site of the kind that people find absolutely critical for their daily lives, and

uses OpenID to authenticate users. Instead of using your chosen OpenID provider to

handle the authentication, the attacker sends you to an attacker-controlled provider

that proxies the authentication to the real provider. In this way the attacker can use

your credentials to empty your PayPal account while you’re reading the joke of the

day or looking at kitten pictures.

This is far worse than any standard phishing attack because instead of having to

convince you to go to a fake PayPal site, the attacker can use any site at all to get at

your PayPal credentials. What OpenID is doing is training users to follow links from

random sites and then enter their passwords, exactly the behaviour that phishers want

[55]. By declaring this problem “out of scope” for the specification [56], the

developers of the OpenID standard get to pass it on to someone else. Other federated

single-sign-on mechanisms like Internet2’s Shibboleth exhibit similar flaws.

Future developments have the potential to make this situation even worse. If the

biometrics vendors get their way, we’ll be replacing login passwords with

thumbprints. Instead of at least allowing for the possibility of one password per

account, there’ll be a single password (biometric trait) for all accounts, and once it’s

compromised there’s no way to change it. Far more damaging though is the fact that

biometrics makes it even easier to mindlessly authenticate yourself at every

opportunity, handing out your biometric “password” to anything that asks for it.

Articles proposing the use of biometrics as anti-phishing measures never even

consider these issues, choosing to focus instead on the technical aspects of fingerprint

scanning and related issues [57].

Security Usability Fundamentals

32

Abuse of Authority

Once applications have obtained additional authorisation for a particular action, they

often retain their extra privileges far beyond any sensible amount of time for which

they need them. Like a miser hanging onto money, the clutch the extra privileges to

their chest and refuse to let go for any reason. Consider the Firefox plugin-install

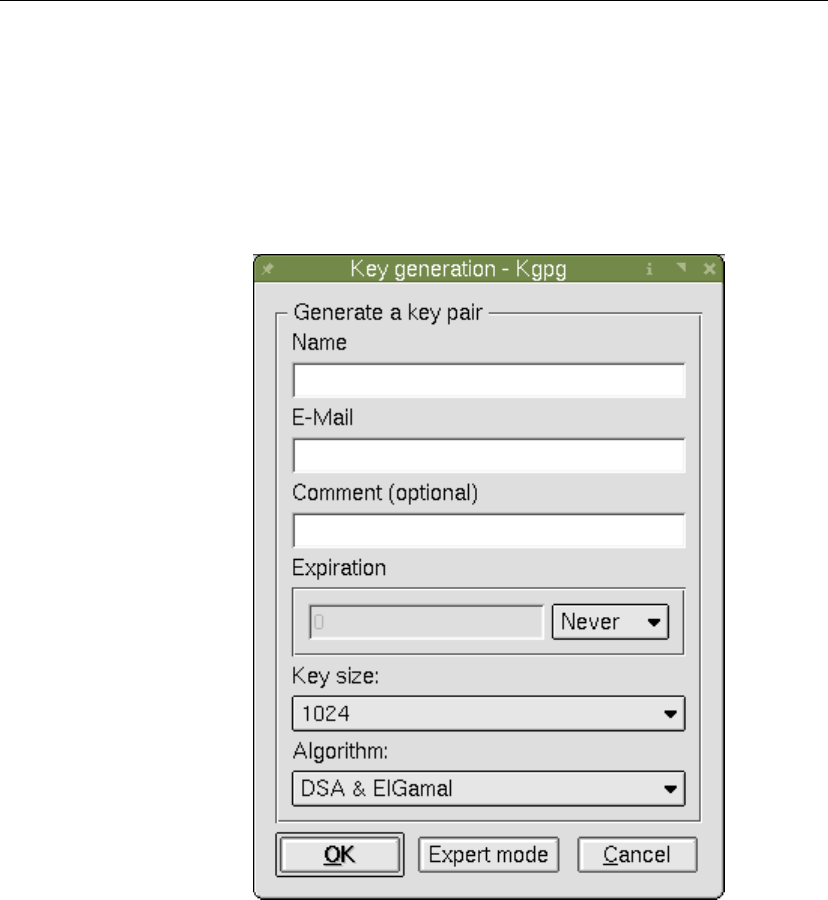

request shown in Figure 10. It only takes two mouse clicks in response to the install

request to give the browser the necessary permission to install the plugin, but

navigation to an obscure configuration dialog buried four levels down in a succession

of menus and other dialogs to remove them again. What’s more, the browser hasn’t

just authorised the installation of that one plugin but of all other plugins hosted at that

domain! Consider the amount of content hosted on domains like

yahoo.com to see

how dangerous such a blanket permission can be — the

groups.yahoo.com

community alone has gigabytes of arbitrary untrusted content hosted on it, all sitting

ready to infect your PC.

Figure 10: Plugin install request

The abuse of authority can be exploited in arbitrarily creative ways. Consider the

following cross-pollination attack, which allows an impostor to set up a genuine

Verisign-certified fake banking site. This takes advantage of the way that browsers

currently handle certificates that they can’t verify. Instead of treating the security

failure as an absolute, they allow users to ignore it and continue anyway, which

virtually all users do. However instead of allowing the certificate to be used once,

they allow it to be used either for the remainder of the browser session or forever (see

Figure 11: Permanent temporary certificate acceptance). Since users tend to leave

browsers (along with applications like email and IM clients) open for extended

periods of time, and PCs powered on (or at least effectively on, for example in

hibernation) for equally long amounts of time, the time periods “for this session” and

“permanently” are more or less synonymous. So what we need to do is get a user to

accept a certificate for a non-valuable site (for which they’re quite likely to click

‘OK’ since there are no real consequences to this action), and then reuse it later to

certify any site that we want.

Figure 11: Permanent temporary certificate acceptance

Other Languages, Other Cultures

33

To do this, we get them to come to the initial site via standard spam techniques

inviting them to read an e-postcard, view someone’s holiday photos, meet a long-lost

school friend, or some other standard (and innocuous) lure. The site is protected with

an SSL certificate that the browser can’t verify, so the user has to accept it either

permanently or for the current session. If the user accepts it permanently then there’s

nothing left to do. If they accept it for the current session then all that the phishing

site needs to do is determine that they’ve accepted the certificate in order to use it to

“authenticate” the phishing site.

Phishers have come up with several ways of doing this that don’t involve the obvious

(and easily-blocked) use of cookies. One is cache mining, which uses the load time

of potentially cached images from the target site to determine whether the browser

has recently visited it (and subsequently cached the images) or not [58]. A far more

effective means of doing this though involves the use of Cascading Style Sheets

(CSS). CSS has a

:visited pseudo-class that allows the server to dynamically

change the appearance of a link

<a href=…> based on whether the client has visited it

in the past or not (the browser default is to change the colour slightly, typically from

blue to purple). In programming terms, this CSS facility allows the server to execute

the command

if url_visited then do_A else do_B on the client.

How does the server find out what the client has done? By having the actions loop

back to the server using CSS’ url() feature, applying two different URLs based on

whether

do_A or do_B is triggered. So the pseudocode becomes if url_visited

then url('server_url_A') else url('server_url_B')

. All of this is hidden from

the user through the use of an empty-appearing link,

<a href="…"></a>. The server

can now tell whether the user has accepted the certificate from the innocuous site as

soon as the user visits the not-so-innocuous site [59].

So how does this allow us to create a genuine Verisign-certified fake banking site?

By making the SSL certificate that the user accepts to get to the innocuous site a CA

certificate, we can now use it to issue our own certificates in any name we want.

Because of the universal implicit cross-certification that’s present in browsers, we can

issue a certificate in the name of Verisign, and our fake Verisign can then certify any

phishing site that it wants. When the user visits the phishing site, they’ll get no

warning from the browser, all the SSL security indicators will be present, and on the

very remote chance that they bother to check the certificate, they’ll see that it’s been

authorised by Verisign, the world’s largest CA. Not bad for a few fragments of CSS

and an extra flag set in a certificate!

Other Languages, Other Cultures

Up until about fifteen years ago, it was assumed that there were universal maxims

such as modes of conversation and politeness that crossed all cultural boundaries.

This turned out to be largely an illusion, contributed to at least to some extent by the

fact that most of the researchers who published on the subject came from an Anglo-

Saxon, or at least European, cultural background.

Since then, the ensuing field of cross-cultural pragmatics, the study of how people

interact across different cultures, has helped dispel this illusion. For example, the

once-popular assumption that the “principles of politeness” are the same everywhere

have been shown to be incorrect in ways ranging from minor variations such as

English vs. eastern European hospitality rituals through to major differences such as

cultures in which you don’t thank someone who performs a service for you because if

they didn’t want you to accept the service they wouldn’t have offered it, a practice

that would seem extremely rude to anyone coming from a European cultural

background.

Let’s look at a simple example of how a security user interface can be affected by

cross-cultural pragmatics issues. Imagine a fairly standard dialog that warns that

something has gone slightly wrong somewhere and that if the user continues, their

privacy may be compromised. Even the simple phrase “your privacy may be

compromised” is a communications minefield. Firstly, the English term “privacy”

has no equivalent in any other European language. In fact the very concept of

Security Usability Fundamentals

34

“privacy” reflects a very Anglo-Saxon cultural value of being able to create a wall

around yourself when and as required. Even in English, privacy is a rather fuzzy

concept, something that philosopher Isaiah Berlin calls a “negative liberty” which is

defined by an intrusion of it rather than any innate property. Like the US Supreme

Court’s (non-)definition of obscenity, people can’t explicitly define it, but know when

they’ve lost it [60]. So in this case warning of a

loss of privacy (rather than stating

that taking a certain measure will increase privacy) is the appropriate way to

communicate this concept to users — assuming that they come from an Anglo-Saxon

cultural background, that is.

Next we have the phrase “may be”, a uniquely English way of avoiding the use of an

imperative [61]. In English culture if you wanted to threaten someone, you might tell

them that if they don’t take the requested action they might have a nasty accident. On

the continent, you’d be more likely to inform them that they

will have a nasty

accident. Moving across to eastern Europe and Italy, you’d not only inform them of

the impending accident but describe it in considerable and occasionally graphic

detail.

The use of so-called whimperatives, extremely common in English culture, is almost

unheard-of in other European languages [62]. A request like “Would you mind

opening the window” (perhaps watered down even further with a side-order of “it’s a

bit cold in here”) would, if you attempted to render it into a language like Polish,

“Czy

miałabyś ochotę …”, sound quite bizarre — at best it would come across as an

inquiry as to whether the addressee is capable of opening the window, but certainly

not as a request.

Finally, we come to the word “compromise”, which in everyday English is mostly

neutral or slightly positive, referring to mutual concessions made in order to reach

agreement (there’s an old joke about a manager who wonders why security people are

always worrying about compromise when everyone knows that compromise is a

necessary requirement for running a business). In other languages the connotations

are more negative, denoting weakness or a sell-out of values. Only in the specialised

language of security-speak, however, is compromise an obviously negative term.

The fact that it’s taken four paragraphs just to explain the ramifications of the phrase

“your privacy may be compromised” is a yardstick of how tricky the effective

communication of security-relevant information can be. Even something as simple as

the much-maligned “Are you sure?” dialog box can be problematic. In some cultures,

particularly when offering hospitality, you never try to second-guess someone else’s

wishes. A host will assume that the addressee should always have more, and any

resistance by them can be safely disregarded (the authors of endless “Are you sure?”

dialogs should probably take this attitude to heart). The common English question

“Are you sure?” can thus sound quite odd in some cultures.

Japan has a cultural value called enryo, whose closest English approximation would

be “restraint” or “reserve”. The typical way to express enryo is to avoid giving

opinions and to sidestep choices. Again using the example of hospitality, the norm is

for the host to serve the guest a succession of food and drink and for the guest to

consume at least a part of every item, on the basis that to not do so would imply that

the host had miscalculated the guest’s wishes. The host doesn’t ask, and the guest

doesn’t request. When responding to a security-related dialog in which the user is

required to respond to an uninvited and difficult-to-answer request, the best way to

express enryo is to click ‘OK’. In a Japanese cultural context, the ‘OK’ button on

such dialogs should really be replaced with one that states ‘Nan-demo kaimasen’,

“Anything will be all right with me”. (In practice it’s not quite that bad, since the fact

that the user is interacting with a machine rather than a human relaxes the enryo

etiquette requirements).

So going beyond the better-known problems of security applications being localised

for

xx-geek by their developers, even speaking in plain English can be quite difficult

when the message has to be accurately communicated across different languages and

cultures. Some time ago I was working on an internationalised security application

and the person doing the Polish translation told me that in situations like this in which

Other Languages, Other Cultures

35

the correct interpretation of the application developer’s intent is critical, he preferred

to use the English version of the application (even though it wasn’t his native

language) because then he knew that he was talking directly with the developer, and

not witnessing an attempt to render the meaning across a language and cultural

barrier.

References

[1] “Security Absurdity: The Complete, Unquestionable, And Total Failure of

Information Security”, Noam Eppel,

http://www.securityabsurdity.com/failure.php.

[2] “The Twelve Networking Truths”, RFC 1925, Ross Callon, 1 April 1996.

[3] “Requirements for IPsec Remote Access Scenarios”, RFC 3457, Scott Kelly and

Sankar Ramamoorthi, January 2003.

[4] “Authentication Components: Engineering Experiences and Guidelines”, Pasi

Eronen and Jari Arkko,

Proceedings of the 12

th

International Workshop on

Security Protocols (Protocols’04)

, Springer-Verlag Lecture Notes in Computer

Science No.3957, April 2004, p.68.

[5] “Datagram Transport Layer Security”, RFC 4347, Eric Rescorla and Nagendra

Modadugu, April 2006.

[6] “Straw poll on TLS SRP status”, thread on ietf-tls mailing list, May-June 2007,

http://www1.ietf.org/mail-archive/web/tls/current/-

msg01667.html

.

[7] “Man-in-the-Middle in Tunnelled Authentication Protocols”, N. Asokan,

Valtteri Niemi, and Kaisa Nyberg, Cryptology ePrint Archive, Report 2002/163,

November 2002,

http://eprint.iacr.org/2002/163.

[8] “Man-in-the-Middle in Tunnelled Authentication Protocols”, N.Asokan,

Valttieri Niemi, and Kaisa Nyberg,

Proceedings of the 11

th

Security Protocols

Workshop (Protocols’03)

, Springer-Verlag Lecture Notes in Computer Science

No.3364, April 2003, p.29.

[9] “The Compound Authentication Binding Problem”, IETF draft

draft-

puthenkulam-eap-binding-04

, Jose Puthenkulam, Victor Lortz, Ashwin

Palekar, and Dan Simon,27 October 2003.

[10] “Application Interfaces That Enhance Security”, Apple Computer, 23 May 2006,

http://developer.apple.com/documentation/Security/-

Conceptual/SecureCodingGuide/Articles/-

AppInterfaces.html

.

[11] “CAPTCHA effectiveness”, Jeff Atwood, 25 October 2006,

http://www.codinghorror.com/blog/archives/000712.html.

[12] “CAPTCHA CAPTCHA DECODER”,

http://www.lafdc.com/-

captcha/

.

[13] “OCR Research Team”,

http://ocr-research.org.ua/.

[14] “Computer Security in the Real World”, Butler Lampson, keynote address at the

14

th

Usenix Security Symposium (Security’05), August 2005.

[15] “Prospect Theory: An Analysis of Decision under Risk”, Daniel Kahneman and

Amos Tversky,

Econometrica, Vol.47, No.2 (March 1979), p.263.

[16] “An Analysis of the System Security Weaknesses of the US Navy Fleet

Broadcasting System, 1967-1974, as exploited by CWO John Walker”, Laura

Heath, Master of Military Art and Science thesis, US Army Command and

General Staff College, Ft.Leavenworth, Kansas, 2005.

[17] “User-Centered Security”, Mary Ellen Zurko,

Proceedings of the 1996 New

Security Paradigms Workshop (NSPW’96)

, September 1996, p.27.

[18] “Design Principles and Patterns for Computer Systems That Are Simultaneously

Secure and Usable”, Simson Garfinkel, PhD thesis, Massachusetts Institute of

Technology, May 2005.

[19] “Challenges in deploying low-latency anonymity (Draft)”, Roger Dingledine ,

Nick Mathewson and Paul Syverson, 2005,

http://tor.eff.org/-

svn/trunk/doc/design-paper/challenges.pdf

.

Security Usability Fundamentals

36

[20] “Firefox and the Worry-free Web”, Blake Ross, in “Security and Usability:

Designing Secure Systems That People Can Use”, O’Reilly, 2005, p.577.

[21] “About Face 2.0: The Essentials of Interaction Design”, Alan Cooper and Robert

Reimann, John Wiley and Sons, 2003.

[22] “Cabirn Fever”, Peter Ferrie and Peter Szor,

Virus Bulletin, August 2004, p.4.

[23] “Security and Identification Indicators for Browsers against Spoofing and

Phishing Attacks”, Amir Herzberg and Ahmad Jbara, Cryptology ePrint

Archive,

http://eprint.iacr.org/2004/, 2004.

[24] “Why Features Don’t Matter Any More: The New Laws of Digital Technology”,

Andreas Pfeiffer,

ACM Ubiquity, Vol.7, Issue 7 (February 2006),

http://www.acm.org/ubiquity/views/v7i07_pfeiffer.html.

[25] “Invalid banking cert spooks only one user in 300”, Stephen Bell,

ComputerWorld New Zealand, 16 May 2005,

http://www.computerworld.co.nz/news.nsf/NL/-

FCC8B6B48B24CDF2CC2570020018FF73

.

[26] “The heuristic-systematic model in its broader context”, Serena Chen and Shelly

Chaiken, Dual-Process Theories in Social Psychology, Guilford Press, 1999,

p.73.

[27] “Information Foraging in Information Access Environments”, Peter Pirolli and

Stuart Card,

Proceedings of the SIGCHI Conference on Human Factors in

Computing Systems (SIGCHI’95)

, May 1995, p.51.

[28] “pattern recognition”, Dan Kaminsky, invited talk at Black Ops 2006 at the 20

th

Large Installation System Administration Conference (LISA’06), December

2006.

[29] “SSH for fun and profit”, Sebastian Krahmer, 1 July 2002,

http://www.shellcode.com.ar/docz/asm/ssharp.pdf.

[30] “Hacking: The Art of Exploitation”, Jon Erickson, No Starch Press, 2003.

[31] “THC Fuzzy Fingerprint”, 25 October 2003,

http://www.thc.org/thc-ffp/.