Robotics: Science and Systems 2023

Daegu, Republic of Korea, July 10-July 14, 2023


Robotic Table Tennis: A Case Study into a High Speed Learning System

David B. D’Ambrosio∗, Jonathan Abelian†, Saminda Abeyruwan∗, Michael Ahn∗, Alex Bewley∗, Justin Boyd†, Krzysztof Choromanski∗, Omar Cortes†, Erwin Coumans∗, Tianli Ding∗, Wenbo Gao∗, Laura Graesser∗, Atil Iscen∗, Navdeep Jaitly∗, Deepali Jain∗, Juhana Kangaspunta∗, Satoshi Kataoka∗, Gus Kouretas‡, Yuheng Kuang∗, Nevena Lazic∗, Corey Lynch∗, Reza Mahjourian∗, Sherry Q. Moore∗, Thinh Nguyen†, Ken Oslund∗, Barney J Reed§, Krista Reymann∗, Pannag R. Sanketi∗, Anish Shankar∗, Pierre Sermanet∗, Vikas Sindhwani∗, Avi Singh∗, Vincent Vanhoucke∗, Grace Vesom∗, and Peng Xu∗

Authors beyond the first are listed alphabetically, with full author contributions in the Appendix.

∗Google DeepMind.

†Work done at Google DeepMind via FS Studio

‡Work done at Google DeepMind via Relentless Adrenaline

§Work done at Google DeepMind via Stickman Skills Center LLC

Fig. 1: The physical robotic table tennis system. Images from left to right show (I) ball thrower, (II) entire system (thrower, arm, gantry), (III) automatic ball refill, (inset) simulator, and (IV) robot mid-swing.

Abstract—We present a deep-dive into a real-world robotic learning system that, in previous work, was shown to be capable of hundreds of table tennis rallies with a human and has the ability to precisely return the ball to desired targets. This system combines a highly optimized perception subsystem, a high-speed low-latency robot controller, a simulation paradigm that can prevent damage in the real world and also train policies for zero-shot transfer, and automated real-world environment resets that enable autonomous training and evaluation on physical robots. We complement a complete system description, including numerous design decisions that are typically not widely disseminated, with a collection of studies that clarify the importance of mitigating various sources of latency and accounting for training and deployment distribution shifts, as well as the robustness of the perception system, the sensitivity to policy hyper-parameters, and the choice of action space. A video demonstrating the components of the system and details of experimental results can be found at https://youtu.be/HbortM1wpAA.

Corresponding emails: {bewley, ddambro, lauragraesser, psanketi}@google.com.

I. INTRODUCTION

There are some tasks that are infeasible for a robot to perform unless it moves and reacts quickly. Industrial robots can execute pre-programmed motions at blindingly fast speeds, but planning, adapting, and learning while executing a task at high speed can push a robotic system to its limits and introduce complex safety and coordination challenges that may not show up in less demanding environments. Yet many vital tasks, particularly those that involve interacting with humans in real time, necessitate such a high-speed robotic system.

The goal of this paper is to describe such a system and the process behind its creation. Building any robotic system is a complex and multifaceted challenge, but nuanced design decisions are not often widely disseminated. Our hope is that this paper can help researchers who are starting out in high-speed robotic learning and serve as a discussion point for those already active in the area.

We focus on a robotic table tennis system that has shown promise in playing with humans (340-hit cooperative rallies) [2] and in targeted ball returns (competitive with amateur humans) [20]. This platform provides an excellent case study in system design because it involves multiple trade-offs and desiderata (e.g. perception latency vs. accuracy, ease of use vs. performance, high speed, human interactivity, support for multiple learning methods) and is able to produce strong real-world performance. This paper discusses the design decisions that went into the creation of the system and empirically validates many of them through analyses of key components.

[Figure 2 diagram. Left: simulated vs. real environment components (robot control, ball modeling and vision, collision detection, state machine, rewards, observation, data interpolation, Referee, done/info), marked as real only, sim only, or shared, and split across processes P1 to P5. Right: real hardware components (ball thrower with IR sensor, ThrowerVision with TF detection and tracking on GPU, camera reader with two cameras, control server with PyBullet safety, egm_server and festo_driver drivers for the ABB arm and Festo linear axes, logger, video encoder) linked by a custom MPI, with rates of 248Hz, ~125Hz, and 100Hz.]

Fig. 2: Overview of the components for running simulated and real environments. The diagram on the left shows how the various software components fit together to form the environment: in simulation, everything runs in a single process, but the real environment splits the work among several processes. The diagram on the right shows the components of the real hardware system. A custom MPI manages communication between the parts and the logging of all data.

This work explores all aspects of the system and how they relate to and inform one another, and highlights several important contributions, including: (1) a highly optimized perception subsystem capable of running at 125Hz, (2) an example of high-speed, low-latency control with industrial robots, (3) a simulation paradigm that can prevent damage in the real world while performing agile tasks and can also train policies for zero-shot transfer using a variety of learning approaches, (4) a common interface for simulation and real-world deployment, (5) an automatic physical environment reset system for table tennis that enables training and evaluation for long periods without human intervention, and (6) a research-friendly modular design that allows customization and component swapping. A summary of widely applicable lessons can be found in Section V, and a video of the system in operation and experimental results can be found at https://youtu.be/HbortM1wpAA.

II. TABLE TENNIS SYSTEM

Table tennis is easy for humans to pick up but poses interesting challenges for a robotic system. Amateurs hit the ball at up to 9m/s, with professionals tripling that. Thus, the robot must be able to move, sense, and react quickly just to make contact, let alone replicate the precise hits needed for high-level play.

The components of this system are numerous, with many interactions (Figure 2). Therefore, a major design focus was modularity, to enable testing and swapping. At a high level, the hardware components (cameras + vision stack, robot, ball thrower) are controlled through C++ and communicate state to the environment through a custom message passing system called Fluxworks. The various components not only send policy-related information this way (e.g. where the ball is, the position of the robot) but also synchronize the state of the system (e.g. the robot has faulted or a new episode has started). Note that this process is simplified in simulation, where all state information is centralized. Information from the components determines the state of the game (in the Referee) and the input to the policy. The policy then produces actions which feed into the low-level controllers, while the game state drives the system as a whole (e.g. the episode is over). All logging (Appendix M), including videos, is handled with Fluxworks, which utilizes highly optimized Protocol Buffer communication.

The rest of this section describes the components in the system and their dependencies and interactions.

A. Physical Robots

The player in this system consists of two industrial robots that work together: an ABB 6DOF arm and a Festo 2DOF linear actuator, creating an 8DOF system (Figure 1). The two robots complement each other: the gantry is able to cover large distances quickly, maneuvering the arm into an appropriate position from which it can make fine adjustments and hit the ball in a controlled manner. The choice of industrial robots was deliberate, both to focus on the machine learning challenges of the problem and for high reliability. However, one major limitation of working with off-the-shelf industrial systems is that they may contain proprietary, “closed-box” software that must be contended with. For example, the ABB arm runs an additional safety layer that instantly stops the robot whenever it predicts a potentially unsafe motion. It took careful effort to work within these constraints because the robot was operating near its limits. See Appendix C for details.

For the ABB arms, either an ABB IRB 120T or an ABB IRB 1100-4/0.58 is used, the latter being a faster version with a different joint structure. Both are capable of fast (joints rotate at up to 420 or 600 degrees/s, respectively), repeatable (to within 0.01mm) motions and allow a high control frequency. The arm’s end effector is an 18.8cm 3D-printed extension attached to a standard table tennis paddle that has had its handle removed (Figure 1, right). While the ABB arms are not perfect analogs of human arms, they can impart significant force and spin on the ball.

Taking inspiration from professional table tennis, where play can extend well to the side of and away from the table, the Festo gantries range in size from 2 × 2m to 4 × 2m, even though the table tennis table is only 1.525m wide. This extra range gives the robot more options for returning the ball. The gantries can move at up to 2 m/s in both axes. Most other robotic table tennis systems (discussed in Section IV-B) opt for a fixed-position arm, but the inclusion of a gantry means the robot is able to reach more of the table space and has more freedom to adopt general policies. The downside is that the gantry complicates the system by adding two degrees of freedom, leading to a kinematically redundant system, while also imparting additional lateral forces on the robot arm that must be accounted for.

B. Communication, Safety, and Control

The ABB robot accepts position and velocity target commands and provides joint feedback at 248Hz via the Externally Guided Motion (EGM) [1] interface. The Festo gantry is controlled through a Modbus [90] interface at approximately 125Hz. See Appendix C for full communication details.

Safety is a critical component of controlling robots. While the robot should hit the ball, collisions with anything else in the environment must be avoided. To solve this problem, commands are filtered through a safety simulator (a simplified version of the simulator in Section II-C) before being sent to the robot. The simulator converts a velocity action generated by the control policy into the position and velocity command required by EGM at each timestep. Collisions in the simulator generate a repulsive force that pushes the robot away, resulting in a valid, safe command for the real robot. Objects in the safety simulator are dilated to provide an adequate safety margin, and additional obstacles are added to block off the “danger zones” the robot should avoid.
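The filtering idea can be illustrated with a minimal sketch. It is a simplified stand-in for the PyBullet-based safety simulator, built on assumptions: the robot is reduced to a single Cartesian point, obstacles are spheres dilated by a margin, and the repulsive force is modeled as a spring-like velocity term. The function name `safe_command` and the gain values are hypothetical.

```python
import numpy as np

DT = 1.0 / 248  # command period, matching the 248Hz EGM rate

def safe_command(pos, vel_action, obstacles, margin=0.05, k_rep=200.0):
    """Convert a policy velocity action into a (position, velocity) command.

    pos, vel_action: (3,) current point position and requested velocity.
    obstacles: list of (center, radius) spheres; radii are dilated by `margin`.
    If the integrated next position would enter a dilated obstacle, the
    approaching velocity component is removed and a repulsive term proportional
    to the penetration depth pushes the point back out.
    """
    pos = np.asarray(pos, dtype=float)
    vel = np.asarray(vel_action, dtype=float).copy()
    next_pos = pos + vel * DT
    for center, radius in obstacles:
        offset = next_pos - np.asarray(center, dtype=float)
        dist = np.linalg.norm(offset)
        penetration = (radius + margin) - dist
        if penetration > 0:  # would be inside the dilated obstacle
            normal = offset / max(dist, 1e-9)
            approach = vel @ normal
            if approach < 0:
                vel -= approach * normal  # cancel motion into the obstacle
            vel += k_rep * penetration * normal  # repulsive term pushes away
    return pos + vel * DT, vel
```

In free space the command is just the integrated policy velocity; only near a dilated obstacle is the velocity altered, which mirrors the "valid, safe command" behavior described above without claiming to reproduce it.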

Low-level robot control can be extremely time-sensitive and is typically implemented in a lower-level language like C++ for performance. Python, on the other hand, is very useful for high-level machine learning implementations and rapid iteration, but it is not well suited to high-speed robot control due to the Global Interpreter Lock (GIL), which prevents multiple threads from executing Python bytecode in parallel and so severely hampers concurrency. This limitation can be mitigated by using multiple Python processes, but this is still not optimal for speed. Therefore, this system adopts a hybrid approach where latency-sensitive processes like control and perception are implemented in C++, while others are partitioned into several Python binaries (Figure 2). Having these components in Python allows researchers to iterate rapidly and worry less about low-level details. This separation also allows components to be easily swapped or tested.

C. Simulator

The table tennis environment is simulated to facilitate sim-to-real training and prototyping for real robot training. PyBullet [19] is the physics engine, and the environment interface conforms to the Gym API [12].

Figure 2 (left) gives an overview of the environment structure in simulation and compares it with the real-world environment (see Section II-E). There are five conceptual components: (1) the physics simulation and ball dynamics model, which together model the dynamics of the robot and ball, (2) the StateMachine, which uses ball contact information from the physics simulation to track the semantic state of the game (e.g. the ball just bounced on the opponent’s side of the table, the player hit the ball), (3) the RewardManager, which loads a configurable set of rewards and outputs the reward per step, (4) the DoneManager, which loads a configurable set of done conditions (e.g. ball leaves play area, robot collision with a non-ball object) and outputs whether the episode is done at each step, and (5) the Observation class, which configurably formats the environment observation per step.

The main advantage of this design is that it isolates components so they are easy to build and iterate on. For example, the StateMachine makes it easy to extend the environment to more complex tasks: new tasks are defined by implementing a new state machine in a config file. The StateMachine also makes it easier to determine the episode termination condition and some rewards (e.g. for hitting the ball). Note that while related, it is not the same as the transition function of the MDP; the StateMachine is less granular and changes at a lower frequency. Another example is the RewardManager. When training with reinforcement learning, it is common practice in robot learning to experiment frequently with the reward function. To facilitate this, reward components and their weights are specified in a config file read by the RewardManager, which calculates and sums each component. This makes it straightforward to change rewards and easy to define new components.
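A config-driven reward of this kind can be sketched as a registry of named components combined by a weighted sum. The component names, the `info` keys they read, and the plain-dictionary config format below are hypothetical, chosen only to illustrate the pattern.

```python
from typing import Callable, Dict

# Hypothetical reward components; each maps a per-step "info" dict to a float.
REWARD_COMPONENTS: Dict[str, Callable[[dict], float]] = {
    "hit_ball": lambda info: 1.0 if info.get("paddle_hit") else 0.0,
    "land_on_table": lambda info: 1.0 if info.get("landed_opponent_side") else 0.0,
    "joint_velocity_penalty": lambda info: -sum(v * v for v in info.get("joint_vel", [])),
}

class RewardManager:
    """Weighted sum of named reward components, configured by a dict."""

    def __init__(self, config: Dict[str, float]):
        unknown = set(config) - set(REWARD_COMPONENTS)
        if unknown:
            raise KeyError(f"unknown reward components: {sorted(unknown)}")
        self.weights = dict(config)  # component name -> weight

    def __call__(self, info: dict) -> float:
        # Evaluate every configured component and scale it by its weight.
        return sum(w * REWARD_COMPONENTS[name](info) for name, w in self.weights.items())
```

For example, `RewardManager({"hit_ball": 1.0, "joint_velocity_penalty": 0.1})` rewards a paddle hit and penalizes fast joint motion; changing the reward then means editing the config rather than the environment code.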

1) Latency modeling: Latency is a major source of the sim-to-real gap in robotics [91]. To mitigate this issue, and inspired by Tan et al. [91], latency is modeled in the simulation as follows. During inference, the history of observations and corresponding timestamps is stored and linearly interpolated to produce an observation with a desired latency. In contrast to [91], which uses a single latency range sampled uniformly for the whole observation, the latency of each of five main components (ball observation, i.e. the latency of the ball perception system; ABB observation; Festo observation; ABB action; and Festo action) is modeled as a Gaussian distribution, with a distinct distribution for each component. The mean and standard deviation per component were measured empirically on the physical system through instrumentation that logs timestamps throughout the software stack (see Table I). In simulation, at the beginning of each episode a latency value is sampled per component, and the observation components are interpolated to those latency values at each step. Similarly, action latency is implemented by storing the raw actions produced by the policy in a buffer and linearly interpolating the action sent to the robot to the desired latency.

| Component | µ (ms) | σ (ms) |
|---|---|---|
| Ball observation | 40 | 8.2 |
| ABB observation | 29 | 8.2 |
| Festo observation | 33 | 9.0 |
| ABB action | 71 | 5.7 |
| Festo action | 64.5 | 11.5 |

TABLE I: Latency distribution values.
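A minimal sketch of this latency model follows. The means and standard deviations come from Table I; clipping negative samples to zero, the class name `DelayedSignal`, and the use of `np.interp` (which holds the oldest or newest value outside the buffered range) are assumptions made for illustration.

```python
import numpy as np

# Per-component latency distributions from Table I: (mean, std) in milliseconds.
LATENCY_MS = {
    "ball_obs": (40.0, 8.2),
    "abb_obs": (29.0, 8.2),
    "festo_obs": (33.0, 9.0),
    "abb_action": (71.0, 5.7),
    "festo_action": (64.5, 11.5),
}

def sample_latencies(rng):
    """Sample one latency per component (in seconds) at the start of an episode."""
    # A Gaussian can go negative, so samples are clipped at zero (assumption).
    return {name: max(rng.normal(mu, sd), 0.0) / 1000.0
            for name, (mu, sd) in LATENCY_MS.items()}

class DelayedSignal:
    """Buffer of timestamped samples, read back with a fixed delay."""

    def __init__(self):
        self.times, self.values = [], []

    def push(self, t, value):
        self.times.append(float(t))
        self.values.append(np.atleast_1d(np.asarray(value, dtype=float)))

    def query(self, t_now, latency):
        """Linearly interpolate each dimension at time t_now - latency."""
        times = np.array(self.times)
        values = np.stack(self.values)
        t_query = t_now - latency
        return np.array([np.interp(t_query, times, values[:, d])
                         for d in range(values.shape[1])])
```

Action latency can reuse the same buffer: push each raw policy action with its timestamp and read it back with the sampled `abb_action` or `festo_action` delay.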

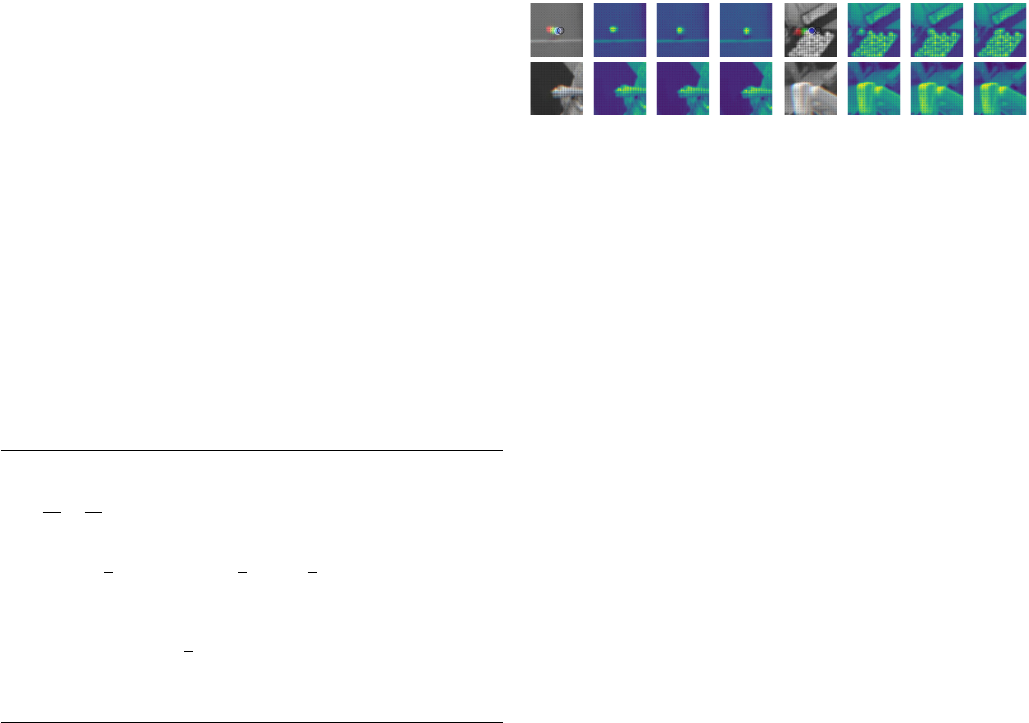

2) Ball distributions, observation noise, and domain randomization: A table tennis player must be able to return balls with many different incoming trajectories and angular velocities; that is, they experience different ball distributions. Ball dynamics and distributions are implemented following [2]. At the start of each episode, initial ball conditions are sampled from a parameterized distribution which is specified in a config. To account for real-world jitter, random noise is added to the ball observation. Domain randomization [77, 15, 41, 75] is also supported for many physical parameters; the paddle and table restitution coefficients are randomized by default.
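The per-episode sampling can be sketched as follows. All ranges, noise levels, and restitution distributions below are hypothetical placeholders rather than the values used in the system, and uniform sampling is only an assumed choice of parameterized distribution.

```python
import numpy as np

# Hypothetical config: uniform ranges for the initial ball state (table frame, SI units).
BALL_DIST = {
    "position": ([-0.3, 1.4, 0.2], [0.3, 1.6, 0.4]),
    "velocity": ([-0.5, -6.0, 0.5], [0.5, -4.0, 1.5]),
    "angular_velocity": ([-50.0, -50.0, -50.0], [50.0, 50.0, 50.0]),
}
RESTITUTION = {"paddle": (0.75, 0.05), "table": (0.9, 0.03)}  # hypothetical (mean, std)
OBS_NOISE_STD = 0.005  # metres, hypothetical

def reset_episode(rng):
    """Sample initial ball conditions and randomized physics for one episode."""
    ball = {k: rng.uniform(lo, hi) for k, (lo, hi) in BALL_DIST.items()}
    # Domain randomization: resample restitution coefficients, kept within [0, 1].
    physics = {k: float(np.clip(rng.normal(mu, sd), 0.0, 1.0))
               for k, (mu, sd) in RESTITUTION.items()}
    return ball, physics

def noisy_ball_observation(true_pos, rng):
    """Add Gaussian jitter to the simulated ball position before the policy sees it."""
    return np.asarray(true_pos, dtype=float) + rng.normal(0.0, OBS_NOISE_STD, size=3)
```

In a PyBullet-based simulator, the sampled restitution values could then be applied at reset with `pybullet.changeDynamics(body_id, link_index, restitution=...)`.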

For more details on the simulator see Appendix D.

D. Perception System

Table tennis is a highly dynamic sport (an amateur-speed ball crosses the table in 0.4 seconds), requiring extremely fast reaction times and precise motor control when hitting the ball. Therefore, a vision system with low latency and high precision is required. It is also not possible to instrument (e.g. with LEDs) or paint the ball for active tracking, as the ball’s behavior is very sensitive to variations in weight or texture, so a passive vision system must be employed.

A custom vision pipeline that is fast, accurate, and passive is designed to provide 3D ball positions. It consists of three main components: 1) 2D ball detection across two stereo cameras, 2) triangulation to recover the 3D ball position, and 3) a sequential decision-making process which manages trajectory creation, filtering, and termination. The remainder of this section provides details on the hardware and these components.

1) Camera Hardware, Synchronization and Setup: For image capture, the system employs a pair of Ximea MQ013CG-ON cameras that share a hardwired synchronization cable and are connected to the host computer via USB3 active optical cables. Camera lenses are firmly locked and focused. Synchronization timestamps are used to match images downstream. Many different cameras were tried, but these were chosen for their high frame rate (125FPS at a resolution of 1280x1024) and extremely low latency of 388µs. Other cameras were capable of higher FPS, but at the cost of more latency, which is not acceptable in this high-speed domain. To achieve the desired performance, the camera uses a global shutter with a short (4ms) exposure time and only returns the raw, unprocessed Bayer pattern.

Fig. 3: Quantification of triangulation bias over the length of the playing area (y-position) at a height of 250mm above the center line. The more orthogonal viewpoints offered by placing cameras on opposite sides of the table lead to an order of magnitude reduction in triangulation bias.

The ball is small and moves fast, so capturing it accurately is a challenge. Ideally the cameras would be as close to the action as possible, but in a dual-camera setup each needs to view the entire play area. Additionally, putting sensitively calibrated cameras in the path of fast-moving balls is not ideal. Instead, the cameras are mounted roughly 2m above the play area on each side of the table and are equipped with Fujinon FE185C086HA-1 “fisheye” lenses that expand the view to the full play area, including the gantries. While capturing more of the environment, the fisheye lens distortion introduces challenges in calibration and additional uncertainty in triangulation.

The direct linear transform (DLT) method [35] for binocular stereo vision estimates a 3D position in the table’s coordinate frame from these image locations. However, the problem of non-uniform and non-zero-mean bias, known as triangulation bias [23], must be considered when optimizing camera placement. Two stereo camera configurations are considered, with two overhead cameras viewing the scene from: 1) the same side of the table and 2) opposite sides. Simulation is used to quantify triangulation bias across these configurations and to decouple triangulation from potential errors in calibration. Quantifying this bias for common ball positions (see Figure 3) indicates that positioning the cameras on opposite sides of the table results in a significant reduction in the overall triangulation bias. Furthermore, this configuration also benefits from a larger baseline between the cameras, which reduces estimation variance [25].
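For reference, the linear DLT step for two views can be written in a few lines. This is the standard textbook formulation rather than the system's code; it assumes calibrated 3×4 projection matrices expressed in the table frame and pixel coordinates that have already been undistorted, which matters here because of the fisheye lenses.

```python
import numpy as np

def triangulate_dlt(P1, P2, uv1, uv2):
    """Linear (DLT) triangulation of one point seen by two calibrated cameras.

    P1, P2: (3, 4) projection matrices mapping homogeneous table-frame points to pixels.
    uv1, uv2: (2,) undistorted pixel coordinates of the ball in each image.
    Returns the (3,) point in the table frame.
    """
    u1, v1 = uv1
    u2, v2 = uv2
    # Each view contributes two linear constraints on the homogeneous point X.
    A = np.stack([
        u1 * P1[2] - P1[0],
        v1 * P1[2] - P1[1],
        u2 * P2[2] - P2[0],
        v2 * P2[2] - P2[1],
    ])
    # Least-squares solution of A X = 0: right singular vector of the smallest singular value.
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]
    return X[:3] / X[3]
```

Triangulating both the current and the predicted next-frame image positions and differencing the two results gives a per-frame 3D displacement, i.e. a velocity estimate.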

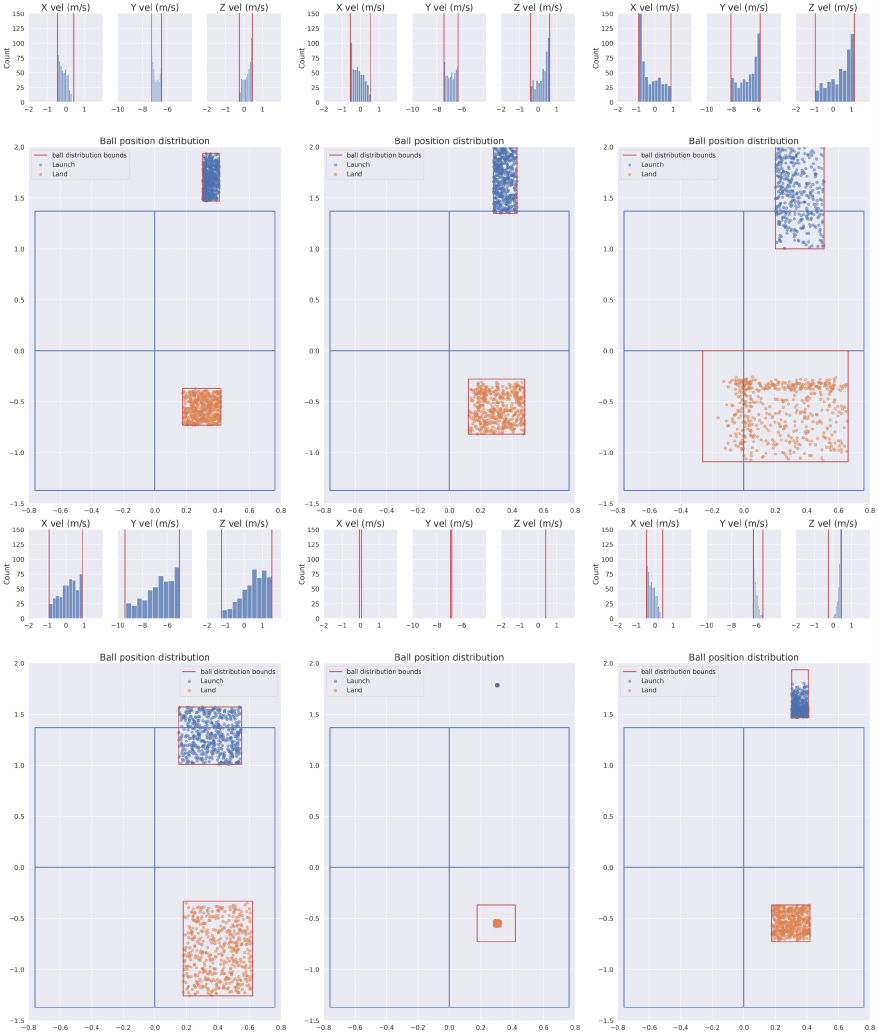

2) Ball Detection: The core of the perception system is ball detection. The system uses a temporal convolutional architecture to process each camera’s video stream independently and provides information about the ball location and velocity for the downstream triangulation and filtering (see Figure 4). The system uses raw Bayer images and temporal convolutions, which allow it to efficiently process each video stream independently and thus improve the latency and accuracy of ball detection. The output structure takes inspiration from CenterNet [99, 100] by producing per-location predictions that include: a ball score indicating the likelihood of the ball center being at that location, a 2D local offset to accommodate sub-pixel resolution, and a 2D estimate of the ball velocity in pixels.

Fig. 4: Ball Detection. These synchronized images (cropped to approximately 50% of normal size) show the temporal convolutional network detecting the ball (detected ball center in pixels) independently from cameras on both sides of the table. These detections are triangulated and used for 3D tracking.
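Turning these per-location outputs into image coordinates can be sketched as below, in the spirit of CenterNet decoding. The single-peak argmax (one ball in play), the units of each output, and the output stride are assumptions for illustration; `threshold` stands in for the learned score threshold used in 3D tracking.

```python
import numpy as np

def decode_detection(score, offset, velocity, threshold, stride=2):
    """Decode per-location outputs from one camera into image positions.

    score: (H, W) ball-center likelihood per output cell.
    offset: (H, W, 2) sub-cell (dx, dy) offset in output-cell units.
    velocity: (H, W, 2) ball motion (dx, dy) to the next frame in input pixels.
    stride: input pixels per output cell (assumed value).
    Returns (current_xy, next_xy) in input pixels, or None below threshold.
    """
    y, x = np.unravel_index(np.argmax(score), score.shape)
    if score[y, x] < threshold:
        return None  # no confident detection in this frame
    current = (np.array([x, y], dtype=float) + offset[y, x]) * stride  # sub-pixel center
    return current, current + velocity[y, x]  # predicted position in the next frame
```

The `(current, next)` pair from each camera is exactly what the triangulation step consumes to produce a 3D position and velocity.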

a) Direct Processing of Bayer Images: The detection network takes the raw Bayer pattern image [7] as input directly from the high-speed camera, after cropping to the play area at a resolution of 512 × 1024. By skipping Bayer-to-RGB conversion, 1ms (or 15% of the time between images) of conversion-induced latency per camera is avoided, and the data transferred from camera to host to accelerator is reduced by 2/3 (one value per pixel instead of three), further reducing latency. In contrast to other models utilizing Bayer images [14], no loss in performance was found using the raw format, largely due to special attention given to the structure of the 2 × 2 Bayer pattern and to ensuring that the first convolution layer has a stride of 2 × 2. This alignment means that each individual weight of the first layer is only responsible for a single color across all positions of the convolution operation. The immediate striding also benefits wall-clock time by down-sampling the input to a quarter of the original size. The alignment with the Bayer pattern is also extended to any crop operations during training, as discussed later in this section.
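The alignment argument can be checked numerically. The sketch below assumes an RGGB mosaic and, for simplicity, a single-channel 2 × 2 kernel with stride 2 (the actual first layer's kernel size and channel count are given in Appendix E and are not reproduced here). With an even-aligned crop, each kernel weight always lands on the same Bayer color; an odd crop offset silently swaps colors.

```python
import numpy as np

def bayer_rggb(rgb):
    """Mosaic an (H, W, 3) RGB image (H, W even) into a single-channel RGGB Bayer image."""
    h, w, _ = rgb.shape
    bayer = np.empty((h, w), dtype=rgb.dtype)
    bayer[0::2, 0::2] = rgb[0::2, 0::2, 0]  # R
    bayer[0::2, 1::2] = rgb[0::2, 1::2, 1]  # G
    bayer[1::2, 0::2] = rgb[1::2, 0::2, 1]  # G
    bayer[1::2, 1::2] = rgb[1::2, 1::2, 2]  # B
    return bayer

def strided_conv2x2(image, kernel):
    """2x2 convolution (cross-correlation) with stride 2 and no padding."""
    h, w = image.shape
    # Split into non-overlapping 2x2 blocks: (h/2, 2, w/2, 2) -> (h/2, w/2, 2, 2).
    blocks = image.reshape(h // 2, 2, w // 2, 2).transpose(0, 2, 1, 3)
    return np.einsum("ijkl,kl->ij", blocks, kernel)
```

With a kernel that is non-zero only at offset (0, 0), the output is exactly the red channel at a quarter of the input size; cropping one row off the top makes the same weight read green pixels instead, which is why crops are rounded to even coordinates.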

b) Detector Backbone with Buffered Temporal Convolutions: A custom deep-learning-based ball detector is used to learn the right combination of color, shape, and motion for identifying the ball in play. Its architecture is a convolutional neural network (CNN) with a compact size of only 27k parameters, spread over five spatial convolutional layers and two temporal convolutions that capture motion features. Compared to related architectures such as ConvLSTM [85], this fully convolutional approach restricts the temporal influence on the predictions to a finite temporal window, allowing for greater interpretability and easier fault diagnosis. Full details of the architecture are provided in Appendix E.

Temporal convolutional operations are employed to capture motion as a visual cue for detecting the ball in play and its direction of motion. In contrast to the typical implementation, which requires a window of frames to be presented at each timestep, the implementation in this system only requires a single frame to be presented to the CNN at each timestep during inference. This change minimises data transfer from the host device to the accelerator running the CNN operations, a critical throughput bottleneck. Each temporal layer maintains a buffer that stores its input features for use at subsequent timesteps, as in Khandelwal et al. [49].
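The buffering trick can be sketched in NumPy. This is an illustrative causal temporal convolution with a 1 × 1 spatial footprint (an assumption that keeps the example small): it keeps the last k − 1 input feature maps so each call only needs the newest frame. Padding the history with copies of the first frame at start-up is likewise an assumption.

```python
from collections import deque

import numpy as np

class BufferedTemporalConv:
    """Causal temporal convolution that consumes one frame per call.

    weights: (k, c_in, c_out), applied as a 1x1 spatial kernel across k frames;
    weights[-1] multiplies the newest frame. The previous k - 1 input feature
    maps are kept in a bounded deque, so only the current frame is passed in.
    """

    def __init__(self, weights):
        self.weights = np.asarray(weights, dtype=float)
        self.k = self.weights.shape[0]
        self.buffer = deque(maxlen=self.k - 1)

    def step(self, frame):
        """frame: (H, W, c_in) features for the current timestep -> (H, W, c_out)."""
        if self.k > 1 and not self.buffer:
            # Start-up: fill the history with copies of the first frame (assumption).
            self.buffer.extend([frame] * (self.k - 1))
        window = list(self.buffer) + [frame]  # oldest ... newest, length k
        out = sum(np.tensordot(f, w, axes=([2], [0]))
                  for f, w in zip(window, self.weights))
        self.buffer.append(frame)  # the deque drops the oldest frame
        return out
```

Once the buffer is warm, each `step` produces the same result as convolving over the full k-frame window, while only one frame crosses the host-to-accelerator boundary per timestep.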

c) Training the Detector Model: To train the detection model, a dataset of 2.3M small temporal patches was selected to match the receptive field of the architecture (64 × 64 pixels and n frames). The patches are selected from frames with a labeled ball position, where a single positive patch is defined as being centered on the ball position in the current frame, with the temporal dimension filled with the same spatial position but spanning frames [t − n + 1, t]. Similarly, a negative patch can be selected from the same frame at a random location which does not overlap with the positive patch. Examples of positive and negative patches are provided in the Appendix. Special care is taken to align the patch selection with the Bayer pattern by rounding the patch location to the nearest even number. This local patch-based training has several benefits: it 1) reduces the training time² by 50×, 2) helps generalization across different parts of the image, as the model is unable to rely on global statistics of ball positions, 3) offers a more fine-grained selection of training data for non-trivial cases, e.g. when another ball is still moving in the scene, and similarly 4) allows for hard negative mining [89] on sequences where it is known that no ball is in play.
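Patch selection can be sketched as follows. The sampler name, the clamping of patches to the image border, and the rejection loop for negatives are assumptions made for illustration; the even rounding keeps every crop aligned with the 2 × 2 Bayer tile.

```python
import numpy as np

PATCH = 64  # spatial patch size matching the detector's receptive field

def even_round(v):
    """Round to the nearest even integer so crops stay aligned with the Bayer tile."""
    return int(2 * np.round(v / 2))

def sample_patches(video, t, ball_xy, n, rng):
    """Sample one positive and one negative temporal patch of shape (n, PATCH, PATCH).

    video: (T, H, W) raw Bayer frames with even H and W; ball_xy: labeled (x, y)
    ball center in frame t. Returns (pos, neg, pos_corner, neg_corner).
    """
    _, h, w = video.shape
    half = PATCH // 2
    # Positive: centred on the ball, same spatial window over frames t-n+1 .. t.
    x0 = np.clip(even_round(ball_xy[0] - half), 0, w - PATCH)
    y0 = np.clip(even_round(ball_xy[1] - half), 0, h - PATCH)
    pos = video[t - n + 1 : t + 1, y0 : y0 + PATCH, x0 : x0 + PATCH]
    # Negative: random even-aligned corner whose square does not overlap the positive.
    while True:
        nx = 2 * rng.integers(0, (w - PATCH) // 2 + 1)
        ny = 2 * rng.integers(0, (h - PATCH) // 2 + 1)
        if abs(nx - x0) >= PATCH or abs(ny - y0) >= PATCH:
            break
    neg = video[t - n + 1 : t + 1, ny : ny + PATCH, nx : nx + PATCH]
    return pos, neg, (x0, y0), (nx, ny)
```

Hard negative mining would replace the uniform draw for `nx, ny` with locations where the current model fires on sequences known to contain no ball in play.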

For each patch, each of the separate outputs has a corresponding loss. First, the ball score is optimized using the standard binary cross-entropy loss for both positive and negative patches. For positive patches only, the local offset is optimized using the mean-squared error loss, with the relative position between the corresponding pixel coordinate and the ball center in the current frame as the target. The velocity prediction is optimized similarly, instead using the position of the ball in the next frame relative to the current frame as the regression target.
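The three loss terms can be sketched for a batch of patches as below. Evaluating each output at the patch center, averaging the MSE over positives and coordinates, and weighting the three terms equally are all assumptions; the paper does not state the relative weights.

```python
import numpy as np

def detection_loss(score_logit, offset_pred, vel_pred, is_positive,
                   offset_target, vel_target, eps=1e-7):
    """Combined loss for the three detector outputs over a batch of patches.

    score_logit: (B,) raw ball-score logits at each patch's center location.
    offset_*, vel_*: (B, 2) predictions and regression targets (in pixels).
    is_positive: (B,) bool; regression targets of negative patches are ignored.
    """
    # Binary cross-entropy on the ball score, for positives and negatives.
    p = np.clip(1.0 / (1.0 + np.exp(-score_logit)), eps, 1.0 - eps)
    y = is_positive.astype(float)
    bce = -np.mean(y * np.log(p) + (1.0 - y) * np.log(1.0 - p))
    # Mean-squared error on offset and velocity, positives only.
    mask = y[:, None]
    n_pos = max(y.sum(), 1.0)
    offset_mse = np.sum(mask * (offset_pred - offset_target) ** 2) / (2 * n_pos)
    vel_mse = np.sum(mask * (vel_pred - vel_target) ** 2) / (2 * n_pos)
    # Equal weighting of the three terms is an assumption.
    return bce + offset_mse + vel_mse
```

Masking by `is_positive` means a negative patch can predict anything for offset and velocity without penalty, since there is no ball center to regress to.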

3) 3D Tracking: To have a consistent representation that is invariant to camera viewpoint, the ball is represented in 3D in the table’s coordinate frame. If the maximum scores in both images are above a learned threshold, the ball’s current and next image positions, obtained from the local offset and velocity predictions, are triangulated using DLT [35]. The triangulated current position gives the 3D position of the ball in the table frame, and its difference from the triangulated next position gives the 3D velocity. Finally, these observations are provided to a recursive Kalman filter [46] to refine the estimated ball state before its 3D position is sent to the robot policy.
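A minimal linear Kalman filter for this step might look like the sketch below. The state layout, the ballistic motion model (gravity only; drag, spin, and bounces are ignored), the direct measurement of both position and velocity, and the noise values are all assumptions for illustration, not the system's tuned filter.

```python
import numpy as np

class BallKalmanFilter:
    """Linear Kalman filter over the state [x, y, z, vx, vy, vz] in the table frame."""

    GRAVITY = np.array([0.0, 0.0, -9.81])

    def __init__(self, dt, process_var=1e-2, meas_var=1e-4):
        self.dt = dt
        self.F = np.eye(6)
        self.F[:3, 3:] = dt * np.eye(3)  # position += velocity * dt
        self.Q = process_var * np.eye(6)  # process noise (assumed isotropic)
        self.R = meas_var * np.eye(6)  # measurement noise (assumed isotropic)
        self.x = None
        self.P = np.eye(6)

    def update(self, pos, vel):
        """Fuse one triangulated (position, velocity) measurement; return filtered position."""
        z = np.concatenate([pos, vel])
        if self.x is None:  # initialise from the first measurement
            self.x = z
            return self.x[:3]
        # Predict: constant velocity plus the effect of gravity over one step.
        u = np.concatenate([0.5 * self.dt**2 * self.GRAVITY, self.dt * self.GRAVITY])
        self.x = self.F @ self.x + u
        self.P = self.F @ self.P @ self.F.T + self.Q
        # Correct: the measurement observes the full state (H = identity).
        K = self.P @ np.linalg.inv(self.P + self.R)
        self.x = self.x + K @ (z - self.x)
        self.P = (np.eye(6) - K) @ self.P
        return self.x[:3]
```

At 125FPS, `dt` would be 0.008s; on a noise-free ballistic trajectory the prediction matches each measurement exactly, so the filter passes it through unchanged.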

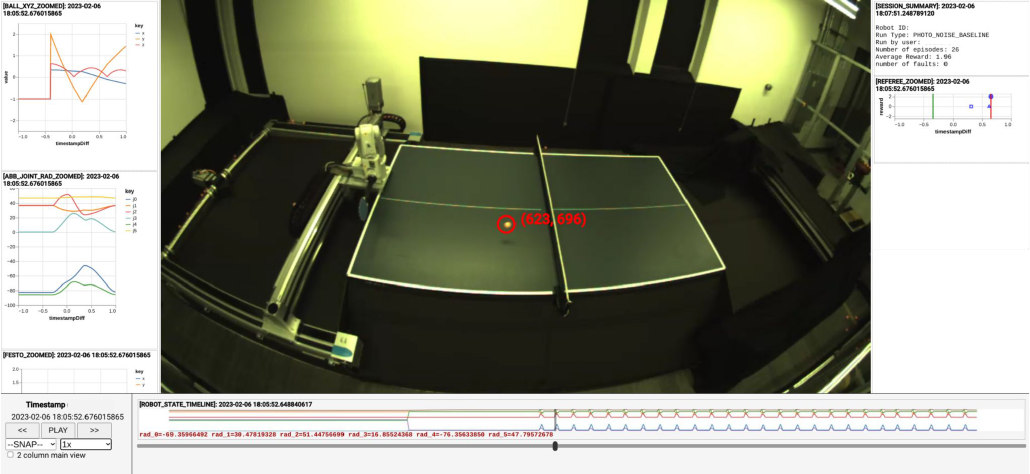

E. Running on the Real Robot

As an analog to the simulated environment (Section II-C), there is an equivalent Gym environment for the real hardware. This environment must contend with an additional set of challenges that are either nonexistent or trivial in simulation: 1) continuous raw sensor observations at different frequencies that are subject to jitter and real-world noise, 2) determining the start of an episode, 3) monitoring the environment state, and 4) environment resets.

²Two 64 × 64 × n patches are required per frame, as opposed to the full 512 × 1024 × n frames.

1) Observation generation: In the simulator, the state of every object is known and can be queried at fixed intervals. In contrast, the real environment receives sensor readings from different modalities (e.g. the ball, ABB, Festo) at different frequencies, and these readings may be inaccurate or arrive irregularly. To generate policy observations, the sensor observations, along with their timestamps, are buffered and interpolated or extrapolated to the environment step timestamp. To address noise and jitter, a bandpass filter is applied to the observation buffer before interpolation (see Appendix F). These observations are then converted according to the policy observation specification.

2) Episode Starts: Simulators provide a direct function to reset the environment to a start state instantly. In the real world, the robot must be physically moved to a start state, using controllers based on standard S-curve trajectory planning, either at the end of the episode or just after a paddle hit. The latter was shown to be beneficial in [2], as it lets a human and the robot interact as fast as possible. An episode starts when a valid ball is thrown towards the robot. The real system must rely on vision to detect this event, which can be affected by spurious false positives, balls rolling on the table, bad ball throws, etc., all of which need to be taken into consideration. Therefore, an episode is started only if a ball is detected incoming toward the robot from a predefined region of space.
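The start condition can be sketched as a simple gate over the 3D tracker's output. The box bounds, speed threshold, consecutive-detection count, and axis convention (robot at negative y) are all hypothetical values chosen for illustration.

```python
import numpy as np

# Hypothetical start region (table frame, metres): a box on the thrower's side.
START_BOX_MIN = np.array([-0.8, 1.0, 0.05])  # z floor rejects balls rolling on the table
START_BOX_MAX = np.array([0.8, 2.5, 1.0])
MIN_SPEED_TOWARD_ROBOT = 1.0  # m/s along -y; rejects stationary or drifting balls
MIN_CONSECUTIVE = 3  # several agreeing detections suppress one-off false positives

def is_valid_start(track):
    """track: list of (position, velocity) pairs from the 3D tracker, newest last."""
    if len(track) < MIN_CONSECUTIVE:
        return False
    for pos, vel in track[-MIN_CONSECUTIVE:]:
        in_box = np.all(pos >= START_BOX_MIN) and np.all(pos <= START_BOX_MAX)
        incoming = vel[1] <= -MIN_SPEED_TOWARD_ROBOT
        if not (in_box and incoming):
            return False
    return True
```

Each clause maps to one of the failure modes listed above: the region check handles rolling balls and stray detections, the speed check handles bad throws, and the consecutive count handles spurious false positives.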

3) Referee: To interface with the Gym API, a process called the Referee generates the reward, done, and info using the StateMachine, RewardManager, and DoneManager as defined in Section II-C. It receives raw sensor observations at different frequencies and updates a PyBullet instance: the observations are filtered (see Appendix F) and used to update the PyBullet state (positions only). It calculates different ball contact events (see Appendix D), compensates for false positives, and uses simple heuristics and closest-point thresholds to identify high-confidence ball contacts, which generate the events used by the previously mentioned components.

4) Automatic system reset (continuously introducing balls): An important aspect of a real-world robotic system is environment reset. If each episode requires a lengthy reset process or human intervention, then progress will be slow. Human table tennis players also face this problem, and so-called “table tennis robots” are commercially available to shoot balls continuously and even in a variety of programmed ways. Almost all of these machines accomplish this task with a hopper that feeds balls to two or more rotating wheels, which force each ball out at a desired speed and spin (see Figure 1, left). Unfortunately, while many of these devices are “programmable”, none provide true APIs; they instead rely on physical interfaces. Therefore, an off-the-shelf thrower was customized with a Pololu motor controller and an infrared sensor for detecting throws, allowing it to be controlled over USB. This setup allows balls to be introduced purely through software control.

However, the ball thrower is still limited by the hopper capacity. A system to automate the refill process was designed that exploits the light weight of table tennis balls by blowing air to return them to the hopper. A ceiling-mounted fan blows down to remove balls stuck on the table, which is surrounded by foamcore to direct the balls into carpeted pathways. At each corner of the path is a blower fan (typically meant for drying out carpet) that directs air across the floor. The balls circulate around the table until they reach a ramp that directs them into a tube, which also uses air to transport them back into the hopper. When the thrower detects that it has not shot a ball for a while, the fans turn on for 40 seconds, refilling the hopper so that training or evaluation can continue indefinitely. See Appendix F for a diagram and the video at https://youtu.be/HbortM1wpAA for a demonstration.

The experiments in this paper demonstrate the utility of this system. For example, the simulator parameter ablation studies (Section III-A) involved evaluating over 150 policies in 450+ independent evaluations on a physical robot, with 22.5k+ balls thrown. All evaluations were conducted remotely and required onsite intervention just once³.

F. Design of Robot Policies

Policies have been trained for this system using a variety of approaches. This section details the basic structure of these policies and any customization needed for specific methods.

1) Policies: The policy input consists of a history of the past eight robot joint and ball states, and the output is the desired robot state, typically a velocity for each of the eight joints (joint space policies). Control frequencies ranging from 20Hz to 100Hz have been explored, but 100Hz is used for most experiments. Most policies are compact, represented as a three-layer, 1D, fully convolutional gated dilated CNN with ≈1k parameters introduced in [26]. However, it is also possible to deploy larger policies. For example, a 13M-parameter policy consisting of two LSTM layers with a fully connected output layer has successfully controlled the robot at 60Hz [20].
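To make the compact policy concrete, the sketch below implements a gated dilated 1D CNN in pure Python. The exact layer sizes of [26] are not given here, so everything is an assumption: kernel size 2, dilations 1, 2 and 4 (whose receptive field of 1 + 1 + 2 + 4 = 8 steps exactly covers the eight-step history), a WaveNet-style tanh·sigmoid gate, 11 hypothetical input features (8 joint positions plus a 3D ball position), and 8 hidden channels. This configuration happens to have 912 parameters, the same order as the ≈1k quoted above.

```python
import math
import random
from typing import List

Seq = List[List[float]]  # indexed as [time][channel]


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


class GatedDilatedConv1D:
    """Causal 1D convolution (kernel size 2) with a tanh/sigmoid gated activation."""

    def __init__(self, c_in: int, c_out: int, dilation: int, rng: random.Random):
        self.c_in, self.c_out, self.dilation = c_in, c_out, dilation
        scale = 1.0 / math.sqrt(2 * c_in)
        # w[branch][tap][out][in]; branch 0 = filter, branch 1 = gate.
        self.w = [[[[rng.gauss(0.0, scale) for _ in range(c_in)]
                    for _ in range(c_out)] for _ in range(2)] for _ in range(2)]
        self.b = [[0.0] * c_out for _ in range(2)]

    def num_params(self) -> int:
        return 2 * (2 * self.c_in * self.c_out + self.c_out)

    def __call__(self, x: Seq) -> Seq:
        out = []
        for t in range(len(x)):
            # Causal taps at t - dilation and t; zero padding before the sequence start.
            past = x[t - self.dilation] if t >= self.dilation else [0.0] * self.c_in
            taps = (past, x[t])
            pre = [[self.b[br][o] + sum(self.w[br][k][o][i] * taps[k][i]
                                        for k in range(2) for i in range(self.c_in))
                    for o in range(self.c_out)]
                   for br in range(2)]
            out.append([math.tanh(f) * sigmoid(g) for f, g in zip(pre[0], pre[1])])
        return out


class TinyGatedPolicy:
    """Hypothetical three-layer policy: dilations 1, 2, 4 give an 8-step receptive field."""

    def __init__(self, obs_dim: int = 11, hidden: int = 8, n_joints: int = 8, seed: int = 0):
        rng = random.Random(seed)
        self.layers = [GatedDilatedConv1D(obs_dim, hidden, 1, rng),
                       GatedDilatedConv1D(hidden, hidden, 2, rng),
                       GatedDilatedConv1D(hidden, n_joints, 4, rng)]

    def num_params(self) -> int:
        return sum(layer.num_params() for layer in self.layers)

    def __call__(self, history: Seq) -> List[float]:
        h = history
        for layer in self.layers:
            h = layer(h)
        return h[-1]  # one normalized velocity per joint, from the newest timestep
```

Because the last layer is also gated, its outputs lie in (-1, 1) and would need rescaling to joint velocity limits; that scaling is left out here.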

2) Robot Policies in Task Space: Joint space policies do not directly capture the relation between joint movement and the task at hand. A more compact task space, the pose of the robot end effector, is especially beneficial in robotics, showing significant improvements in learning of locomotion and manipulation tasks [21, 60, 95, 57].

Standard task space control uses the Jacobian matrix to calculate joint torques or velocities given the target pose, target end effector velocities, joint angles, and joint velocities. This system employs a reduced (pitch-invariant) version with 5 dimensions. Instead of commanding the full pose of the end effector, it commands the position in 3 dimensions and the surface normal of the paddle in 2 dimensions (roll and yaw). In contrast to the default joint space policies, which use velocity control, task space policies are position controlled, which has the added benefit of making it easy to define a bounding cube within which the paddle should operate. The robot state component of the observation space is also represented in task space, making policies independent of a robot's form factor and enabling transfer of learned policies across different robots (see Section III-D).

³ Some tape became unstuck and the balls escaped.
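The sketch below illustrates position-controlled task space control with a bounding box on a deliberately simplified arm. It assumes a hypothetical planar two-link arm (not the system's arm and gantry, and ignoring paddle orientation); link lengths, box limits, and the proportional gain are invented. It clips the commanded paddle position to the box, converts the position error into a desired paddle velocity, and solves J(q) q̇ = v for joint velocities with the closed-form 2×2 inverse.

```python
import math
from typing import Tuple

Vec2 = Tuple[float, float]

LINK_1, LINK_2 = 0.5, 0.4                  # hypothetical link lengths (m)
BOX_MIN, BOX_MAX = (0.2, 0.0), (0.8, 0.6)  # hypothetical bounding box for the paddle (m)


def forward_kinematics(q: Vec2) -> Vec2:
    q1, q2 = q
    return (LINK_1 * math.cos(q1) + LINK_2 * math.cos(q1 + q2),
            LINK_1 * math.sin(q1) + LINK_2 * math.sin(q1 + q2))


def jacobian(q: Vec2) -> Tuple[Vec2, Vec2]:
    """Rows are the partial derivatives of x and y with respect to (q1, q2)."""
    q1, q2 = q
    s1, c1 = math.sin(q1), math.cos(q1)
    s12, c12 = math.sin(q1 + q2), math.cos(q1 + q2)
    return ((-LINK_1 * s1 - LINK_2 * s12, -LINK_2 * s12),
            (LINK_1 * c1 + LINK_2 * c12, LINK_2 * c12))


def clip_to_box(p: Vec2) -> Vec2:
    return tuple(min(max(v, lo), hi) for v, lo, hi in zip(p, BOX_MIN, BOX_MAX))


def joint_velocities_for_target(q: Vec2, target: Vec2, gain: float = 5.0) -> Tuple[Vec2, Vec2]:
    """Clip the target to the box, then solve J(q) qdot = v for the joint velocities."""
    target = clip_to_box(target)
    x = forward_kinematics(q)
    v = (gain * (target[0] - x[0]), gain * (target[1] - x[1]))  # desired paddle velocity
    (a, b), (c, d) = jacobian(q)
    det = a * d - b * c  # equals LINK_1 * LINK_2 * sin(q2) for this arm
    if abs(det) < 1e-6:
        raise ValueError("arm is near a singular configuration")
    qdot = ((d * v[0] - b * v[1]) / det, (-c * v[0] + a * v[1]) / det)
    return qdot, v
```

Because the clip happens in task space, no policy output can drive the paddle target outside the box, which is hard to express directly with joint velocity commands.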

G. Blackbox Gradient Sensing (BGS)

The design of the system allows for interaction with many

different learning approaches, as long as they conform to

the given APIs. The system supports training using a variety

of methods including BGS [2] (evolutionary strategies), PPO

[83] and SAC [33] (reinforcement learning), and GoalsEye

(behavior cloning). The rest of this section describes BGS, since it is the training algorithm used in all the system studies in this paper (see Section III).

BGS is an ES algorithm. This class of algorithms maximizes a smoothed version of the expected episode return, $R$, given by:

$$R_{\sigma}(\theta) = \mathbb{E}_{\delta \sim \mathcal{N}(0, I_d)}\left[R(\theta + \sigma\delta)\right] \quad (1)$$

where $\sigma > 0$ controls the precision of the smoothing, and $\delta$ is a random normal perturbation vector with the same dimension $d$ as the policy parameters $\theta$. $\theta$ is perturbed by adding or subtracting $N$ Gaussian perturbations $\delta^{R}_{i}$ and calculating the episode returns $R^{+}_{i}$ and $R^{-}_{i}$ for each direction. Assuming the perturbations $\delta^{R}_{i}$ are rank ordered with $\delta^{R}_{1}$ being the top-performing direction, the policy update can be expressed as:

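$$\theta' = \theta + \frac{\alpha}{\sigma_R} \sum_{i=1}^{k} \left[ \left( \frac{1}{m}\sum_{j=1}^{m} R^{+}_{i,j} - \frac{1}{m}\sum_{j=1}^{m} R^{-}_{i,j} \right) \delta^{R}_{i} \right] \quad (2)$$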

where $\alpha$ is the step size, $\sigma_R$ is the standard deviation of the rewards collected in the positive and negative directions, $N$ is the number of directions sampled per parameter update, and $k$ ($< N$) is the number of top directions (elites). $m$ is the number of repeats per direction, used to reduce the variance of reward estimation. $R^{+}_{i,j}$ is the reward for the $j$-th repeat of the $i$-th direction in the positive direction, and $R^{-}_{i,j}$ is the same but in the negative direction.
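As an illustration of Eq. (2), the sketch below performs BGS-style updates on a toy problem in pure Python. It is a sketch under stated assumptions, not the system's implementation: the episode is replaced by a hypothetical noisy quadratic (`toy_return`), $\sigma_R$ is computed over the elite rewards from both directions (the ARS convention), orthogonal sampling is omitted, and all hyperparameter values are invented.

```python
import random
import statistics
from typing import Callable, List

Vector = List[float]


def bgs_step(theta: Vector, episode_return: Callable[[Vector], float],
             rng: random.Random, n_dirs: int = 8, n_elite: int = 3, m: int = 2,
             sigma: float = 0.05, alpha: float = 0.01) -> Vector:
    """One update in the form of Eq. (2), with BGS elite choice (Eq. 4)."""
    d = len(theta)
    scored = []
    for _ in range(n_dirs):
        delta = [rng.gauss(0.0, 1.0) for _ in range(d)]
        plus = [t + sigma * x for t, x in zip(theta, delta)]
        minus = [t - sigma * x for t, x in zip(theta, delta)]
        # Average m repeats per direction to reduce reward-estimation variance.
        r_plus = statistics.fmean([episode_return(plus) for _ in range(m)])
        r_minus = statistics.fmean([episode_return(minus) for _ in range(m)])
        scored.append((r_plus, r_minus, delta))
    # Reward differential elite choice: keep the k directions with the largest |R+ - R-|.
    scored.sort(key=lambda s: abs(s[0] - s[1]), reverse=True)
    elites = scored[:n_elite]
    # sigma_R: std of the elite rewards from both directions (ARS-style; an assumption).
    sigma_r = statistics.pstdev([r for rp, rm, _ in elites for r in (rp, rm)]) or 1.0
    update = [0.0] * d
    for r_plus, r_minus, delta in elites:
        for i in range(d):
            update[i] += (r_plus - r_minus) * delta[i]
    return [t + alpha / sigma_r * u for t, u in zip(theta, update)]


# Hypothetical stand-in for an episode: a slightly noisy quadratic peaked at TARGET.
TARGET = [1.0, -1.0, 0.5]
noise_rng = random.Random(1)


def toy_return(theta: Vector) -> float:
    return -sum((t - g) ** 2 for t, g in zip(theta, TARGET)) + noise_rng.gauss(0.0, 0.001)


rng = random.Random(0)
theta = [0.0, 0.0, 0.0]
for _ in range(200):
    theta = bgs_step(theta, toy_return, rng)
print([round(t, 2) for t in theta])
```

Dividing by $\sigma_R$ makes the step size roughly invariant to the scale of the rewards, which is one reason the same $\alpha$ can work across tasks with different reward magnitudes.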

BGS improves upon ARS [59], a popular ES algorithm, with two major changes.

1) Reward differential elite-choice: In ARS, rewards are ranked, yielding an ordering of directions based on the absolute rewards of either the positive or negative direction. BGS instead takes the absolute difference in rewards between the positive and negative directions and ranks these differences to yield an ordering over directions. ARS can be interpreted as ranking directions in absolute reward space, whereas BGS ranks directions according to reward curvature:

$$\text{ARS: sort } \delta^{R}_{i} \text{ by } \max\{R^{+}_{i}, R^{-}_{i}\}. \quad (3)$$

$$\text{BGS: sort } \delta^{R}_{i} \text{ by } \left|R^{+}_{i} - R^{-}_{i}\right|. \quad (4)$$
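A small example shows how Eqs. (3) and (4) can disagree. The $(R^{+}_{i}, R^{-}_{i})$ values below are hypothetical. Direction `d1` has the highest reward but is nearly flat, so in Eq. (2) it would contribute only $(3.0 - 2.9)\,\delta$; ARS ranks it first, while BGS prefers `d2`, along which the return actually changes.

```python
from typing import Dict, List, Tuple

# Hypothetical (R+, R-) returns for three sampled directions.
returns: Dict[str, Tuple[float, float]] = {
    "d1": (3.0, 2.9),  # high reward, but nearly flat along this direction
    "d2": (2.0, 0.5),  # moderate reward, large change between +delta and -delta
    "d3": (1.0, 2.2),
}


def ars_order(r: Dict[str, Tuple[float, float]]) -> List[str]:
    # Eq. (3): rank by the better of the two rewards.
    return sorted(r, key=lambda k: max(r[k]), reverse=True)


def bgs_order(r: Dict[str, Tuple[float, float]]) -> List[str]:
    # Eq. (4): rank by the absolute reward differential.
    return sorted(r, key=lambda k: abs(r[k][0] - r[k][1]), reverse=True)


print("ARS:", ars_order(returns))  # ARS: ['d1', 'd3', 'd2']
print("BGS:", bgs_order(returns))  # BGS: ['d2', 'd3', 'd1']
```

With a single elite ($k = 1$), ARS would spend the whole update on the almost flat direction `d1`.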

2) Orthogonal sampling: Orthogonal ensembles of perturbations $\delta^{R}_{i}$ [18] rely on constructing the perturbations in blocks, where each block consists of pairwise orthogonal samples. These samples still have Gaussian marginal distributions, matching those of the regular non-orthogonal variant. The feasibility of such a construction comes from the isotropic property of the Gaussian distribution (see [18] for details).
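One standard way to build such a block, sketched below, is to orthonormalize independent Gaussian vectors with Gram-Schmidt and then give each unit direction the length of an independent $\mathcal{N}(0, I_d)$ vector. Because the resulting unit directions are uniformly distributed on the sphere, each rescaled sample is marginally $\mathcal{N}(0, I_d)$, while samples within a block are exactly orthogonal. This is an illustrative construction; [18] describes the method and its variants in detail. For $N > d$ perturbations, independent blocks would be concatenated.

```python
import math
import random
from typing import List


def orthogonal_gaussian_block(d: int, n: int, rng: random.Random) -> List[List[float]]:
    """Return n <= d pairwise-orthogonal perturbations, each marginally N(0, I_d)."""
    if n > d:
        raise ValueError("a block can hold at most d mutually orthogonal vectors")
    directions: List[List[float]] = []
    for _ in range(n):
        v = [rng.gauss(0.0, 1.0) for _ in range(d)]
        # Gram-Schmidt: remove components along the directions accepted so far.
        for u in directions:
            proj = sum(a * b for a, b in zip(v, u))
            v = [a - proj * b for a, b in zip(v, u)]
        norm = math.sqrt(sum(a * a for a in v))
        directions.append([a / norm for a in v])
    # Give each unit direction the length of an independent N(0, I_d) vector
    # (chi-distributed), so every sample keeps a Gaussian marginal.
    block = []
    for u in directions:
        length = math.sqrt(sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(d)))
        block.append([length * a for a in u])
    return block
```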

BGS policies are trained in simulation and transferred zero-shot to the physical hardware. Importantly, the BGS framework can also fine-tune policies on hardware through the real Gym API (Section II-E). In this case, hyperparameters must be adjusted to account for there being only one “worker” to gather samples.

III. SYSTEM STUDIES

This section describes several experiments that explore and

evaluate the importance of the various components of the

system.

Except where noted, the experiments use a ball return task

for training and testing. A ball is launched towards the robot

such that it bounces on the robot’s side of the table (a standard

rule in table tennis). The robot must then hit the ball back over

the net so it lands on the opposite side of the table. Although

other work has applied this system to more complex tasks

(e.g. cooperative human rallies [2]), a simpler task isolates

the variables we are interested in from complications such as the variability and limited repeatability of humans.

For real robot evaluations, making contact with the ball is worth one point and landing on the opposing side is worth another point, for a maximum episode return of 2.0. A single evaluation is the average return over 50 episodes. Simulated training runs typically apply additional reward shaping that changes the maximum episode return to 4.0 (see Appendix D).
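The real-robot scoring rule can be written down directly. The sketch below encodes it; the episode outcomes in the example are hypothetical, and the simulated reward shaping (Appendix D) is not modelled.

```python
from statistics import fmean
from typing import List, Tuple


def episode_return(hit_ball: bool, landed_on_opponent_side: bool) -> float:
    """1 point for contact, 1 more for landing on the opposing side (max 2.0)."""
    return float(hit_ball) + float(hit_ball and landed_on_opponent_side)


def evaluation_score(episodes: List[Tuple[bool, bool]]) -> float:
    """Average return over the evaluated episodes (50 per evaluation in the text)."""
    return fmean([episode_return(hit, landed) for hit, landed in episodes])


# Hypothetical evaluation: 40 clean returns, 5 hits into the net, 5 misses.
episodes = [(True, True)] * 40 + [(True, False)] * 5 + [(False, False)] * 5
print(evaluation_score(episodes))  # 1.7
```

Under this rule, a score of 1.83 could correspond, for example, to most balls returned and a few only touched, so the score mixes hit rate and return rate.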

A. Effect of Simulation Parameters on Zero-Shot Transfer

Our goal in this section is to assess the sensitivity of policy

performance to environment parameters. We focus on the zero-

shot sim-to-real performance of trained policies and hope that

this analysis (presented in Figure 5) sheds some light on which

aspects of similar systems need to be faithfully aligned with

the real world and where error can be tolerated. For the effects

on training quality see Appendix H.

1) Evaluation methodology: For each test in this section, 10 models were trained in simulation using BGS (Section II-G) for 10,000 training iterations (equivalent to 60M environment episodes, or roughly 6B environment steps). To assess how different simulated training settings affect transfer independently of how they affect training quality, we only evaluate models that trained well in simulation (i.e., achieved more than 97.5% of the maximum possible return). The resulting set of policies was evaluated on the real setup for 3 × 50 episodes.
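The training budget above can be unpacked with some arithmetic. The per-iteration count happens to match the BGS settings reported in Section III-C (200 perturbations, 15 rollouts each) if both the positive and negative directions are rolled out; the text does not state that the same settings were used here, so treat that match as an assumption.

```python
iterations = 10_000
total_episodes = 60_000_000
total_steps = 6_000_000_000  # "roughly" in the text

episodes_per_iteration = total_episodes // iterations  # 6,000
steps_per_episode = total_steps / total_episodes       # ~100 steps, ~1 s at 100 Hz

# Settings reported in Section III-C: 200 perturbations, evaluated in both the
# +delta and -delta directions (assumed), with 15 rollouts each.
perturbations, directions, rollouts = 200, 2, 15
per_iteration_from_settings = perturbations * directions * rollouts

max_sim_return = 4.0
keep_threshold = 0.975 * max_sim_return  # policies must exceed ~3.9 in simulation

print(episodes_per_iteration, per_iteration_from_settings, steps_per_episode, keep_threshold)
```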

Fig. 5: Effect of simulator parameters on zero-shot sim-to-real transfer. Policies are sensitive to latency and physical parameter values, yet surprisingly robust to ball observation noise and changes in the ball distribution. Charts show the mean (with 95% CIs) zero-shot sim-to-real transfer. 2.0 is a perfect score with a policy returning all balls. R = restitution coefficient.

2) Modeling latency is crucial for good performance: The latency study presented in Figure 5 (top left) shows that policies are sensitive to latency. The baseline model (i.e., the model that uses latency values as measured on hardware) had a significantly higher zero-shot transfer score than any of the other latency values tested. The next best model used 50% of the baseline latency, achieving an average zero-shot transfer score of 1.33 compared with 1.83 for the baseline. Zero-shot transfer scores for the other latency levels tested (0%, 20%, and 150%) were very poor. Interestingly, some policies are lucky and transfer relatively well; for example, one policy trained with 0% latency had an average score of 1.54. However, performance is highly inconsistent when the simulated latency differs from the measured values.
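A common way to model observation latency in simulation is a delay buffer, sketched below. This is a simplification: it models a single observation delay rounded to whole control steps, whereas the system models multiple latencies. `BASELINE_MS` is a hypothetical value, since the measured latencies are not given here.

```python
from collections import deque
from typing import Deque, Generic, TypeVar

T = TypeVar("T")


def latency_to_steps(latency_ms: float, control_hz: float = 100.0) -> int:
    """Convert a latency into whole control steps (rounding is a simplification)."""
    return round(latency_ms * control_hz / 1000.0)


class DelayedObservations(Generic[T]):
    """Hypothetical sim wrapper: the policy sees observations delay_steps steps late."""

    def __init__(self, delay_steps: int) -> None:
        if delay_steps < 0:
            raise ValueError("delay_steps must be non-negative")
        self._buffer: Deque[T] = deque(maxlen=delay_steps + 1)

    def push(self, obs: T) -> T:
        # Store the newest observation and return the oldest one still buffered;
        # until the buffer fills, the first observation is repeated.
        self._buffer.append(obs)
        return self._buffer[0]


BASELINE_MS = 40.0  # hypothetical measured latency; the real values are not given here
for scale in (0.0, 0.2, 0.5, 1.0, 1.5):
    print(scale, latency_to_steps(scale * BASELINE_MS))
```

Scaling the latency, as in the study above, then amounts to constructing the buffer with a different `delay_steps`.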

3) Anchoring ball distributions to the real world matters, but precision is not essential: The ball distribution study shown in Figure 5 (top right) indicates that policies are robust to variations in ball distributions, provided the real world distribution (thrower) is contained within the training distribution. The medium and wide distributions were derived from the baseline distribution but are 25% and 100% larger, respectively (see Appendix H). The distribution derived from a different ball thrower (thrower 2) is also larger than the baseline thrower distribution but effectively contains it. In contrast, very small training distributions (tiny) or distributions that are disjoint from the baseline distribution in one or more components (velocity offset, disjoint in y velocity) degrade performance.
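The containment condition can be made precise if each ball distribution is treated as a box of independent per-component ranges. The sketch below assumes uniform ranges, interprets "25% larger" as 25% wider about the center, and uses hypothetical launch velocities; Appendix H holds the real definitions.

```python
from typing import Dict, Tuple

Ranges = Dict[str, Tuple[float, float]]  # per-component uniform range (low, high)


def widen(r: Ranges, fraction: float) -> Ranges:
    """Grow every range about its center; fraction=0.25 means 25% wider (an assumption)."""
    out = {}
    for key, (lo, hi) in r.items():
        center, half = (lo + hi) / 2.0, (hi - lo) * (1.0 + fraction) / 2.0
        out[key] = (center - half, center + half)
    return out


def shift(r: Ranges, key: str, offset: float) -> Ranges:
    out = dict(r)
    lo, hi = r[key]
    out[key] = (lo + offset, hi + offset)
    return out


def contains(outer: Ranges, inner: Ranges) -> bool:
    """True if every component of `inner` lies inside the matching component of `outer`."""
    return all(outer[k][0] <= lo and hi <= outer[k][1] for k, (lo, hi) in inner.items())


# Hypothetical ball launch velocity ranges (m/s); not the system's real values.
baseline = {"vx": (1.0, 2.0), "vy": (-6.0, -5.0), "vz": (0.5, 1.5)}
medium, wide = widen(baseline, 0.25), widen(baseline, 1.0)
velocity_offset = shift(baseline, "vy", 1.5)  # disjoint from the baseline in vy
```

In these terms, the results above suggest training on any `outer` with `contains(outer, baseline)` transfers well, while `velocity_offset` fails the check.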

Fig. 6: Perception resilience studies. Reducing FPS and increasing latency have threshold points: performance of the system is stable until they reach a point where the robot can no longer react to the ball in time. Additional noise causes graceful degradation in performance, which is exacerbated by non-zero mean distributions (common in vision triangulation).

4) Policies are robust to observation noise provided it has zero mean: The observation noise study in Figure 5 (bottom left) revealed that policies have a high tolerance for zero-mean observation noise. Doubling the noise to +/- 8cm (4 ball diameters in total) or removing it altogether had a minor impact on performance. However, if noise is biased, performance suffers substantially. Adding a 4cm (one ball diameter) bias to the default noise results in a 36% drop in reward (approximately an 80% drop in return rate).
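The difference between the two noise types is visible in their means. The sketch below assumes the default noise is uniform in [-4, 4] cm per coordinate (consistent with "doubling the noise to +/- 8cm") and that the biased variant shifts it by 4cm to [0, 8] cm; the exact noise model used in simulation is not specified here.

```python
import random
from statistics import fmean
from typing import List


def add_uniform_noise(pos_m: List[float], low_cm: float, high_cm: float,
                      rng: random.Random) -> List[float]:
    """Add independent U[low_cm, high_cm] noise (in cm) to each coordinate (in m)."""
    return [p + rng.uniform(low_cm, high_cm) / 100.0 for p in pos_m]


rng = random.Random(0)
true_pos = [0.0, 0.0, 0.0]
zero_mean = [add_uniform_noise(true_pos, -4.0, 4.0, rng)[0] for _ in range(20_000)]
biased = [add_uniform_noise(true_pos, 0.0, 8.0, rng)[0] for _ in range(20_000)]
print(f"mean error, zero-mean noise: {fmean(zero_mean):+.4f} m")
print(f"mean error, biased noise:    {fmean(biased):+.4f} m")
```

Any averaging over samples, whether explicit smoothing or a policy implicitly integrating its observation history, shrinks the first error toward zero but leaves the roughly 4cm offset of the second untouched.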

5) Policies are sensitive to physical parameters, which can

have complex interactions with each other: The physical

parameter ablations in Figure 5 (bottom right) reveal how

sensitive policies are to all parameter values tested. Removing

randomization from the table restitution coefficient (table: no

R randomize) degrades performance by 14%. Increasing the

ball restitution coefficient by just 2% reduces performance by

25%, whilst increasing the table restitution coefficient by 8%

reduces performance by 36%.
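A simple bounce model helps explain why a few percent matters. The sketch below assumes a spin-free, friction-free vertical bounce and a contact restitution equal to the product of the two bodies' coefficients (Bullet's default combination rule; other simulators may differ, and the system's simulator configuration is not described here). Since apex height scales with the square of the rebound speed, a 2% or 8% change in restitution changes the bounce height by roughly 4% or 17%. The nominal value and randomization spread are hypothetical.

```python
import random


def combined_restitution(e_ball: float, e_table: float) -> float:
    # Assumption: contact restitution is the product of both bodies' coefficients,
    # which is Bullet's default combination rule; other simulators differ.
    return e_ball * e_table


def rebound_vz(vz_in: float, e_ball: float, e_table: float) -> float:
    """Vertical velocity just after a table bounce (no spin, no friction)."""
    return -combined_restitution(e_ball, e_table) * vz_in


def sample_restitution(nominal: float, rel_spread: float, rng: random.Random) -> float:
    """Domain randomization: draw a coefficient per episode in nominal * [1 - s, 1 + s]."""
    return nominal * rng.uniform(1.0 - rel_spread, 1.0 + rel_spread)


# Apex height scales with the square of the rebound speed.
apex_ratio_ball_plus_2pct = 1.02 ** 2   # about 4% higher bounce
apex_ratio_table_plus_8pct = 1.08 ** 2  # about 17% higher bounce
```

Under the product rule, the ball and table coefficients are also confounded for table bounces, while the ball coefficient additionally affects every paddle contact, which is one way such parameters can interact.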

This study also highlights a current limitation of the system.

Setting key parameters in the simulator such as the table and

paddle restitution coefficients, or the paddle mass to values

estimated following the process described in Appendix D led

to worse performance than tuned values (see measured vs. tuned, and Appendix H for all parameter values). We

hypothesize this is because ball spin is not correctly modelled

in the simulator and that the tuned values compensate for this

for the particular ball distributions used in the real world.

One challenge of a complex system with many interacting

components is that multiple errors can compensate for each

other, making them difficult to notice if performance does

not suffer dramatically. It was only through conducting these

studies that we became aware of the drop in performance from

using measured values. In future work we plan to model spin

and investigate if this resolves the performance degradation

from using measured values. For further discussion on this

topic, see Appendix I.

B. Perception Resilience Studies

In this section we explore important factors in the perception

system and how they affect end-to-end performance of the

entire system. Latency and accuracy are two major factors and

typically there is a tradeoff between them. A more accurate

model may take longer to process but for fast moving objects

(like a table tennis ball) it may be better to have a less accurate

result more quickly. Framerate also plays a role. If processing

takes longer than the interval between frames, latency will increase

over time and eventually require dropping frames to catch up.

For these experiments we select three high performing

models from the baseline simulator parameter studies and test

them on the real robot while modulating vision performance in

the following ways: (1) reduce the framerate of the cameras, (2) increase latency by queuing observations and sending

them to the policy at fixed intervals, and (3) reduce accuracy

by injecting zero mean and non-zero mean noise to the ball

position (over and above inherent noise in the system).

The results from these experiments can be seen in Figure 6.

For both framerate and latency, the performance stays con-

sistent with the baseline until there is a heavy dropoff at 50

FPS and 150ms respectively, at which point the robot likely

no longer has sufficient time to react to the ball and swings

too late, almost universally resulting in balls that hit the net

instead of going over. There is a gentle decline in performance

as noise increases, but the impact is much greater for non-zero

mean noise: going from zero mean ([-4, 4] cm) noise to non-

zero mean ([-1, 7] cm) is equivalent to doubling the zero mean

noise ([-8, 8] cm). The interpolation of observations described

in Section II-E likely serves as a buffer against low levels of

zero mean noise. Qualitatively, the robot’s behavior was jittery

and unstable when moderate noise was introduced. Overall,

the stable performance over moderate framerate and latency

declines implies that designing around accuracy would be ideal

for this task, although as trajectories become more varied and nuanced, higher framerates may be necessary to capture their

detailed behavior.
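The first two perturbations can be emulated on a recorded frame stream. The sketch below is a simplification under stated assumptions: it decimates a 125Hz stream (the camera rate given in Section IV-A) to a lower framerate with an integer accumulator, and models added latency as a fixed shift in delivery time rather than the system's queue released at fixed intervals. Frame payloads are placeholder strings.

```python
from typing import List, Tuple

Frame = Tuple[float, str]  # (capture or delivery time in s, observation payload)


def decimate(frames: List[Frame], source_fps: int, target_fps: int) -> List[Frame]:
    """Emulate a lower framerate by keeping target_fps of every source_fps frames."""
    if target_fps >= source_fps:
        return list(frames)
    kept, acc = [], 0
    for frame in frames:
        acc += target_fps  # integer accumulator avoids floating-point drift
        if acc >= source_fps:
            acc -= source_fps
            kept.append(frame)
    return kept


def add_latency(frames: List[Frame], extra_s: float) -> List[Frame]:
    """Emulate extra latency: each frame reaches the policy extra_s later."""
    return [(t + extra_s, obs) for t, obs in frames]


one_second = [(i / 125.0, f"frame{i}") for i in range(125)]  # 125 Hz camera stream
at_50fps = decimate(one_second, 125, 50)
delayed = add_latency(at_50fps, 0.150)
print(len(at_50fps), delayed[0])
```

At 50 FPS the gap between observations grows from 8ms to 20ms, which, combined with existing latency, is consistent with the reaction-time explanation given above.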

C. ES Training Studies

BGS has been a consistent and reliable method for learning

table tennis tasks on this system in simulation and fine-

tuning in the real world. In this section we ablate the main

components of BGS and compare it with a closely related

method, ARS.

[Fig. 7 plots: reward vs. ES iterations. Top: ARS vs. BGS. Bottom left: ARS with step sizes 1e-4, 1e-3, 4e-3, 1e-2, and 1e-1. Bottom right: ARS, ARS-ortho, ARS-diff, and BGS.]

Fig. 7: BGS ablation studies. (top) BGS and ARS perform comparably on the ball return task with a narrow ball distribution. (bottom) A harder environment, ball targeting with a larger ball distribution. (left) Step-size α has a very significant effect on training success. (right) Improvements with the reward differential elite-choice technique, orthogonal perturbation sampling, and their combination (BGS).

Figure 7 (top) presents a comparison of BGS and ARS on the default ball return task against a narrow ball distribution. For both methods we set the number of perturbations to 200, σ to 0.025, and the proportion of perturbations selected as elites to 30%. We roll out each perturbation for 15 episodes and average the reward to reduce reward variance due to stochasticity in the environment. We also apply the common approach of state normalization [82, 71]. Under these settings, the methods are comparable.
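State normalization in ARS-style training typically whitens each observation dimension with a running mean and standard deviation collected from visited states. The sketch below uses Welford's online algorithm; it is a generic illustration, and the exact variant used in [82, 71] or in this system (for example, when statistics are frozen or updated) may differ.

```python
import math
from typing import List, Sequence


class RunningNormalizer:
    """Per-dimension running mean and variance (Welford) for observation whitening."""

    def __init__(self, dim: int, eps: float = 1e-8) -> None:
        self.n = 0
        self.mean = [0.0] * dim
        self.m2 = [0.0] * dim  # running sum of squared deviations
        self.eps = eps

    def update(self, x: Sequence[float]) -> None:
        self.n += 1
        for i, xi in enumerate(x):
            delta = xi - self.mean[i]
            self.mean[i] += delta / self.n
            self.m2[i] += delta * (xi - self.mean[i])

    def std(self) -> List[float]:
        if self.n < 2:
            return [1.0] * len(self.mean)
        return [math.sqrt(m / (self.n - 1)) for m in self.m2]  # sample std

    def normalize(self, x: Sequence[float]) -> List[float]:
        return [(xi - mu) / (sd + self.eps) for xi, mu, sd in zip(x, self.mean, self.std())]
```

Normalization matters for ES because a single σ perturbs all weights equally; without it, input dimensions with large scales (e.g. positions in different units) would dominate the effect of each perturbation.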

Next we consider a harder ball targeting task where the

objective for the policy is to return the ball to a precise

(randomized per episode) location on the opponent’s side of

the table [20]. We further increase the difficulty by increasing

the range of incoming balls, i.e. using a wider ball distribution,

and by decreasing the number of perturbations to 50. Tuning

the step size α was crucial for successful policy training with

ARS (Figure 7 bottom left). An un-tuned step-size may lead

to extremely slow training or fast training with sub-optimal

asymptotic performance.

Figure 7 (bottom right) shows the enhancements in training

made by the BGS techniques independently and collectively

compared to baseline ARS. Reward differential elite-choice

and orthogonal sampling lead to faster convergence. As a

result, BGS is the default ES algorithm for policy training.

D. Acting and Observing in Task Space

The previous results use joint space for observations and actions. In this section we explore policies that operate in “task space” (see Section II-F2). Task space has several benefits: it is compact and interpretable, provides a bounding cube for the end effector as a safety mechanism, and aligns the robot action and observation spaces with ball observations. In our experiments we show that task space policies train faster and, more importantly, can be transferred to different robot morphologies.

Fig. 8: Training policies in task space in the baseline environment (top-left) and a harder damped environment (top-right). Training converges faster in task space for both scenarios. (bottom) A task space policy trained in the damped environment is successfully transferred to different morphologies and a new robot.

Figure 8 (top left) compares training speed between joint space (JS), task space for actions (TS(Act)), and full task space (actions and observations, TS(Act&Obs)) policies. Both task space policies train faster than JS policies. We also assess task space policies on a harder (damped) environment.⁴ Now the robot needs to learn to swing and hit the ball harder. Figure 8 (top right) shows that task space policies learn to solve the task (albeit not perfectly), while joint space policies get stuck in a local maximum. For transfer performance of these policies, see Appendix K.

One crucial benefit of operating in task space is the robust-

ness to different robots or morphologies. To demonstrate this,

we first take the TS(Act&Obs) model trained in the damped

environment and transfer it to the real robot (Figure 8 bottom).

Performance is almost perfect with a score of 1.9. Next we

change the initial pose of the robot and freeze two of the arm

joints. Policy performance is maintained under a pose change

(ABB 120T & Modified Default Pose (MDP)) and only drops

slightly when some joints are also frozen (ABB 120T & MDP

+ 2 Frozen Joints). We then evaluate the policy on a robot

with a different morphology and ball distribution and see that

performance drops substantially. However, a task space policy

is easily adaptable to new settings without retraining by adding

a residual to actions to shift the paddle position. This is not

possible when operating in joint space. Observing the robot showed that it was swinging too low and slightly off-angle, so adding a residual of 7cm above the table and 0.2 radians of roll nearly recovers the original policy performance (ABB 1100-4 & New Ball Dist & Manual Offset).
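The manual correction amounts to adding a constant residual to every task space action. The sketch below assumes a hypothetical action layout `[x, y, z, roll, yaw]` and hypothetical vertical limits for the bounding cube; the 7cm and 0.2 radian offsets come from the text, but the sign convention for roll is assumed. In joint space the same paddle shift would require a different joint change in every arm configuration, which is why no such constant correction exists there.

```python
from typing import List

# Hypothetical action layout: [x, y, z, roll, yaw] (m, m, m, rad, rad).
Z_OFFSET_M = 0.07        # "7cm above the table" residual from the text
ROLL_OFFSET_RAD = 0.2    # "0.2 radians of roll" residual from the text (sign assumed)
Z_MIN, Z_MAX = 0.0, 0.8  # hypothetical vertical limits of the bounding cube (m)


def apply_residual(action: List[float]) -> List[float]:
    """Shift a task space action by a fixed residual, keeping z inside the cube."""
    x, y, z, roll, yaw = action
    z = min(max(z + Z_OFFSET_M, Z_MIN), Z_MAX)
    return [x, y, z, roll + ROLL_OFFSET_RAD, yaw]
```

Re-applying the bounding cube after the offset keeps the safety property intact even when the residual pushes a command toward the edge of the allowed region.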

E. Applying to a New Task: Catching

While the system described above was designed for table

tennis, it is general enough to be applied to other agile tasks.

In this section, we apply it to a new task of catching a thrown

ball and assess the effect of latency modelling, similar to the

latency experiment from Section III-A.

⁴ Created by lowering the restitution coefficient of the paddle and ball, and increasing the linear damping of the ball.

We used a similar system setup with minor modifications:

a single horizontal linear rail (instead of two) and a lacrosse

head as the end effector. The software stack and agents are

similar with small differences: simplified RewardManager

and DoneManager, soft body modelling of the net in sim-

ulation, trajectory prediction inputs for agents, and handling

occlusions when the ball is close to the net. The BGS agents

are similarly trained in a simulator before being transferred to

the real hardware, where they are fine-tuned. Agents achieve

a final catching success rate of 85–90%. For full details on the task, see related work [84].

This task has a much larger variance in sim-to-real transfer due to the difficulty of accurately modelling net and ball capture dynamics. As in the table tennis study, agents were trained in simulation with latencies of 100%, 0%, 20%, 50%, and 150% of the baseline latency. Experiments with lower latency (0%, 20%, and 50%) all transferred poorly, with catch rates between 0% and 10%. Curiously, baseline latency and 150% latency performed similarly, with one 150% run achieving the best zero-shot transfer yet observed on this task: a score equaling policies fine-tuned on the real robot. This finding contradicts the results in the table tennis task, which prompted further investigation and revealed that the latency for this task was set incorrectly in the configuration file; the real value was much closer to the 150% value.

This revelation dovetails with the 50% latency table tennis

results: a close latency can still give decent performance,

but accurate values are better. As such, it may be useful

to generally run ablation studies such as these to challenge

assumptions about the system and potentially find bugs.

IV. RELATED WORK

A. Agile Robotic Learning

The space of agile robotic learning systems is varied. It

includes autonomous vehicles such as cars [76, 79, 70, 9, 10],

legged locomotion [73, 91, 32, 78, 86, 87, 4], as well as

dynamic throwing [3, 52, 29, 98], catching [84], and hitting

— which is where table tennis fits.

Many of these systems face similar challenges — envi-

ronment resets, latency, safety, sim-to-real, perception, and

system running speed as exemplified in strict inference and

environment step time requirements.

The benefits of automatic resets have been demonstrated

in quadrupedal systems [86, 87] and throwing [98]. To our

knowledge, this system is the first table tennis learning system

with automatic resets, enabling autonomous training and eval-

uation in the real world for hours without human intervention.

Latency is a well-known problem in physical learning

systems [91]. The system contributes to this area by extend-

ing [91], modeling multiple latencies in simulation, and by

validating its importance through extensive experiments. Or-

thogonally, the system also includes observation interpolation

on the physical system as a useful technique for increasing the

robustness of deployed policies to latency variation (e.g. from

jitter). We demonstrated empirically the robustness of policies

to substantial injections of latency and hypothesize that the

observation interpolation plays a crucial role in this.

Safety is another crucial element that becomes very im-

portant with fast moving robots. Trajectory planners [54] can

avoid static obstacles, neural networks can check for collisions

[48], safe RL can be used to restrict state spaces [97], or

a system can learn from safe demonstrations [67, 68, 40].

In contrast, this system runs a parallel simulation during deployment as a safety layer. This is beneficial because the robot policy runs at a high frequency and there are several physical environments and robots, and because it enables (1) defining undesirable states and (2) preventing a physical robot from reaching them. To the best of our knowledge, this is also a novel component of the system.

Learning controllers from scratch in the real world can be

challenging for an agile robot due to sample inefficiency and

dangers in policy exploration. Training first in a simulator and

then deploying to the real robot [56, 75, 91] (i.e. sim-to-

real) is an effective way to mitigate both issues, but persistent

differences between simulated and real world environments

can be difficult to overcome [42, 72].

Perception is crucial in helping robots adapt to changes

in the environment [4, 96] and interact with relevant objects

[98, 52]. When objects need to be tracked at high speed, such as in catching or hitting, it is typical to use methods such as motion-capture systems [65]; however, for table tennis, the ball needs to adhere to strict standards that prevent instrumentation or altering of the ball properties. Passive vision

approaches for detecting the location within a video frame

of a bright colored ball from a stationary camera may seem

trivial, however, applying image processing techniques [92]

such as color thresholding, shape fitting [37], and background

subtraction are problematic. When considering the typical

video captured from the cameras several factors in the scene

render such approaches brittle. For example, the color of

the natural light changes throughout the day. Even under

fixed lighting, the video stream is captured at 125Hz which

is above the Nyquist frequency of the electricity powering

fluorescent lights, resulting in images that flicker between

frames. Additionally, there are typically several leftover balls

from previous episodes around the scene which share the same

color and shape as the ball in play. These distractors make

data association more of a challenge for downstream tracking.

Finally, extracting things that move is also a challenge when

other basic visual cues are unreliable because there is always

a robot and/or a human moving in the scene. The perception

component of the system in this paper uniquely combined all

these visual cues by learning to detect the ball in an end-to-

end fashion that is robust to visual ambiguities and provides

both precise ball locations and velocity estimates.

Finally, prior work in robot learning varies by how much

it focuses on the system compared with the problem being

tackled. [22, 45, 47, 87, 66, 92, 56] are examples of works

which dedicate substantial attention to the system. They pro-

vide valuable details and know-how about what mattered for

a system to work in practice. This work is spiritually similar.

B. Robotic Table Tennis

Robotic table tennis is a challenging, dynamic task [13]

that has been a test bed for robotics research since the

1980s [8, 51, 34, 36, 66]. The current exemplar is the Omron

robot [55]. Until recently, most methods tackled the problem

by identifying a virtual hitting point for the racket [63, 64,

6, 69, 101, 39, 88, 58]. These methods depend on being

able to predict the ball state at time t either from a ball

dynamics model which may be parameterized [63, 64, 61, 62]

or by learning to predict it [66, 69, 101]. Various methods

can then generate robot joint trajectories given these target

states [66, 63, 64, 61, 62, 67, 68, 40, 53, 92, 27]. More

recently, Tebbe et al. [93] learned to predict the paddle target

using reinforcement learning (RL).

Such approaches can be limited by their ability to predict

and generate trajectories. An alternative line of research seeks

to do away with hitting points and ball prediction models,

instead focusing on high frequency control of a robot’s joints

using either RL [13, 101, 26] or learning from demonstrations [68, 17, 16]. Of these, Büchler et al. [13] is the most similar to the system in this paper. Like Büchler et al. [13], this system trains RL policies to control robot joints at high frequencies given ball and robot states as policy inputs. However, Büchler et al. [13] use hybrid sim and real training as well as a robot arm driven by pneumatic artificial muscles (PAMs), whilst this system uses a motor-driven arm. Motor-driven arms are a common choice and are used by [17, 92, 93, 67].

V. TAKEAWAYS AND LESSONS LEARNED

Here we summarize lessons learned from the system that

we hope are widely applicable to high-speed learning robotic

systems beyond table tennis.

Choosing the right robots is important. The system started

with a scaled down version of the current setup as a proof of

concept and then graduated to full-scale, industrial robots (Ap-

pendix B). Industrial robots have many benefits such as low

latency and high repeatability, but they can come with “closed-

box” issues that must be worked through (Section II-B).

A safety simulator is a dynamic and customizable solution

to constraining operations with high frequency control com-

pared to high-level trajectory planners (Section II-B).

A configurable, modular, and multi-language (e.g. C++ and

Python) system improves research and development velocity

by making experimentation and testing easy for the researcher

(Section II-B).

Latency modeling is critical for real world transfer per-

formance as indicated by our experimental results. Other

environmental factors may have varying effects that change

based on the task (Section III-A). For example, ball spin is not

accurately modeled in the ball return task, but can be critical

when more nuanced actions are required.

Accurate environmental perception is also a key factor in

transfer performance. In this system’s case many factors were

non-obvious to non-vision experts: camera placement, special

calibration techniques, lens locks, etc. all resulted in better

detection (Section II-D).

GPU data buffering, raw Bayer pattern detection, and patch

based training substantially increase the performance of high

frequency perception (Section II-D). Rather than using an off-

the-shelf perception module, a purpose-built version allows

levels of customization that may be required for high-speed

tasks.

Interpolating and smoothing inputs (Section II-E) solves the

problem of different devices running at different frequencies.

It also guards against zero-mean noise and system latency

variability, but is less effective against other types of noise.
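The core of input interpolation is resampling timestamped measurements at the policy's query times. The sketch below shows only linear interpolation with clamping at the ends; the smoothing step and the system's actual implementation (Section II-E) are not reproduced. It also shows why zero-mean noise is attenuated but bias is not: for independent noise of variance s², the interpolated value w·a + (1 − w)·b has variance (w² + (1 − w)²)s² ≤ s², while a constant offset passes through unchanged.

```python
from bisect import bisect_left
from typing import List, Sequence, Tuple

Sample = Tuple[float, Sequence[float]]  # (timestamp in s, measurement vector)


def interpolate(samples: List[Sample], t: float) -> List[float]:
    """Linearly interpolate time-sorted samples at time t; clamp outside the range."""
    times = [s[0] for s in samples]
    if t <= times[0]:
        return list(samples[0][1])
    if t >= times[-1]:
        return list(samples[-1][1])
    j = bisect_left(times, t)  # first index with times[j] >= t, so times[j - 1] < t
    (t0, v0), (t1, v1) = samples[j - 1], samples[j]
    w = (t - t0) / (t1 - t0)
    return [a + w * (b - a) for a, b in zip(v0, v1)]


# Ball samples at 125 Hz queried at a 100 Hz control tick (values are placeholders).
ball = [(0.000, [0.0, 1.0]), (0.008, [8.0, 1.0]), (0.016, [16.0, 3.0])]
print(interpolate(ball, 0.010))
```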

Automatic resets and remote control increase system uti-

lization and research velocity (Section II-E). The system orig-

inally required a human to manually collect balls and control

the thrower. Now that the system can be run remotely and

“indefinitely”, significantly more data collection and training

can occur.

ES algorithms like BGS (Section II-G) are a good starting

point to explore the capabilities of a system, but they may

also be a good option in general. BGS is still the most

successful and reliable method applied in this system. Despite

poor sample efficiency, ES methods are simple to implement,

scalable, and robust optimizers that can even fine-tune real

world performance.

Humans are highly variable and do not always follow instructions (on purpose or not). Working with them requires significant accommodations to address these issues, to alleviate frustrations (e.g. time to reset), and to ensure valuable human time is not wasted.

A. Limitations and Future Work

A guiding principle of the system has been not to solve everything at once. Starting with a simple task (e.g. hitting the ball) and then scaling up to more complex tasks (e.g. playing with a human) provides a path to progress that naturally prioritizes inefficiencies to be addressed. For example, a long but clean

environment reset was sufficient for learning ball return tasks,

but needed optimization to be sufficiently responsive to a

human.

The current system struggles with a few key features. More

complex play requires understanding the spin of the ball and

the system currently has no way to directly read spin and it is

not even included in simulation training. While it is possible

to determine spin optically (i.e. by tracking the motion of the

logo on the ball), it would require significantly higher frame

rates and resolutions than what is currently employed. Other

approaches more suited to our setup include analyzing the

trajectory of the ball (which the robot may be doing implicitly) or including the paddle/thrower pose in the observation, analogous to how many humans detect spin. Additionally, learning a model of the opponent may be necessary if the opponent deliberately attempts to be deceptive, concealing or adding confusion to their hits.

The robot’s range of motion is significant thanks to the inclusion of the gantry, but it is still limited in a few key ways. Firstly, the safety simulator does not allow the paddle to go below the height of the table, preventing the robot from “scooping” low balls. This restriction keeps the arm from getting caught between the table and the gantry, a failure the safety simulator was otherwise unable to prevent in testing. The robot is also limited in side-to-side motion and in how far forward over the table it can reach, so there may be balls that it physically cannot return. Finally, the robot has so far not made significant use of motion away from the table. We hope that training on more complex ball distributions will require the robot to make full use of the play space, as professional human players do.
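The paddle-height restriction described above can be expressed as a minimal filter. This sketch assumes the safety check has access to a predicted paddle path, for example from simulating the commanded motion, in meters with z measured up from the floor. The helper names and the fallback behavior (reusing the last safe command) are hypothetical. A full safety simulator would presumably also check collisions with the table, the gantry, and the robot itself.

```python
from typing import Sequence, Tuple

# Regulation playing-surface height above the floor, in meters.
TABLE_HEIGHT_M = 0.76

Point = Tuple[float, float, float]  # (x, y, z) in meters, z up from the floor


def violates_height_limit(paddle_path: Sequence[Point],
                          table_height: float = TABLE_HEIGHT_M,
                          margin: float = 0.0) -> bool:
    """True if any predicted paddle point dips below the table surface plus margin."""
    return any(z < table_height + margin for _, _, z in paddle_path)


def filter_command(command, predicted_path: Sequence[Point], last_safe_command):
    """Pass the command through only if its predicted paddle path stays above the table."""
    if violates_height_limit(predicted_path):
        return last_safe_command
    return command
```

Checking the whole predicted path, rather than only the final paddle pose, matters here because a swing can pass below the table between waypoints even when both endpoints are above it.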

The sensitivity of policies also increases as the task becomes more complex. For example, slight jitter or latency in inference may be imperceptible for simple ball return tasks, but more complex tasks that require higher precision quickly revealed these gaps, requiring performance optimizations. Sim-to-real gaps are also an issue: a ball can be hit without taking spin into account, but controlling spin is essential for high-level rallying. Environmental parameters and ball spin both become more important, and incorporating domain randomization is a promising path toward integrating them in a robust manner. Additionally, when human opponents come into play, modeling them directly or indirectly could make it possible for the robot to move beyond purely reactive play and to start incorporating strategic planning into the game.
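Domain randomization usually means resampling uncertain simulator parameters at every episode reset, so that the policy cannot overfit to a single setting. The minimal sketch below assumes three hypothetical parameters: ball-table restitution, initial ball spin, and inference latency. Their uniform ranges are made up for illustration. None of these names or values come from the current system.

```python
import dataclasses
import random


@dataclasses.dataclass(frozen=True)
class EpisodeParams:
    restitution: float  # ball-table coefficient of restitution
    spin_rps: float     # initial ball spin about the lateral axis, revolutions/s
    latency_s: float    # observation-to-action delay, seconds


# Illustrative ranges only; real ranges would need to be measured or tuned.
PARAM_RANGES = {
    "restitution": (0.85, 0.95),
    "spin_rps": (-50.0, 50.0),
    "latency_s": (0.01, 0.05),
}


def sample_episode_params(rng: random.Random) -> EpisodeParams:
    """Draw one set of environment parameters uniformly from PARAM_RANGES."""
    return EpisodeParams(
        **{name: rng.uniform(lo, hi) for name, (lo, hi) in PARAM_RANGES.items()}
    )


def latency_in_steps(params: EpisodeParams, control_dt_s: float) -> int:
    """Convert the sampled latency into a whole number of control steps."""
    return max(0, round(params.latency_s / control_dt_s))
```

A new `EpisodeParams` would be drawn at each simulated reset. Randomizing latency alongside physical parameters is one way to make a policy tolerant of the inference jitter mentioned above.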

VI. CONCLUSION

In this paper we have explored the components of a successful, real-world robotic table tennis system. We discussed the building blocks, trade-offs, and other design decisions that went into the system and justified them with several case studies. While we do not believe the system in this paper is the perfect solution to building a learning, high-speed robotic system, we hope that this deep-dive can serve as a reference to those who face similar problems and as a discussion point for those who have found alternative approaches.

ACKNOWLEDGMENTS

We would like to thank Arnab Bose, Laura Downs, and Morgan Worthington for their work on improving the vision calibration system and Barry Benight for their help with video storage and encoding. We would also like to thank Yi-Hua Edward Yang and Khem Holden for improvements to the ball thrower control stack. We are also very grateful to Chris Harris and Razvan Surdulescu for their overall guidance and supervision of supporting teams such as logging and visualization. Additional thanks go to Tomas Jackson for video and photography and Andy Zeng for a thorough review of the initial draft of this paper. And finally, we want to thank Huong Phan, who was the lab manager for the early stages of the project and got the project headed in the right direction.

REFERENCES

[1] ABB. Application manual Externally Guided Motion. Thorlabs, 2022.

[2] Saminda Abeyruwan, Laura Graesser, David B D’Ambrosio, Avi Singh, Anish Shankar, Alex Bewley, Deepali Jain, Krzysztof Choromanski, and Pannag R Sanketi. i-Sim2Real: Reinforcement learning of robotic policies in tight human-robot interaction loops. Conference on Robot Learning (CoRL), 2022.

[3] E.W. Aboaf, C.G. Atkeson, and D.J. Reinkensmeyer. Task-level robot learning. In Proceedings. 1988 IEEE International Conference on Robotics and Automation, pages 1309–1310 vol.2, 1988. doi: 10.1109/ROBOT.1988.12245.

[4] Ananye Agarwal, Ashish Kumar, Jitendra Malik, and Deepak Pathak. Legged locomotion in challenging terrains using egocentric vision. arXiv preprint arXiv:2211.07638, 2022.

[5] A. C. Aitken. Numerical Methods of Curve Fitting. Proceedings of the Edinburgh Mathematical Society, 12(4):218–218, 1961. doi: 10.1017/S0013091500025487.

[6] Russell Anderson. A Robot Ping-Pong Player: Experiments in Real-Time Intelligent Control. MIT Press, 1988.

[7] Bryce E Bayer. Color imaging array, July 20 1976. US Patent 3,971,065.

[8] John Billingsley. Robot ping pong. Practical Computing, 1983.

[9] Mariusz Bojarski, Davide Del Testa, Daniel Dworakowski, Bernhard Firner, Beat Flepp, Prasoon Goyal, Lawrence D Jackel, Mathew Monfort, Urs Muller, Jiakai Zhang, et al. End to end learning for self-driving cars. arXiv preprint arXiv:1604.07316, 2016.

[10] Mariusz Bojarski, Philip Yeres, Anna Choromanska, Krzysztof Choromanski, Bernhard Firner, Lawrence Jackel, and Urs Muller. Explaining how a deep neural network trained with end-to-end learning steers a car. arXiv preprint arXiv:1704.07911, 2017.

[11] G. Bradski. The OpenCV Library. Dr. Dobb’s Journal of Software Tools, 2000.

[12] Greg Brockman, Vicki Cheung, Ludwig Pettersson, Jonas Schneider, John Schulman, Jie Tang, and Wojciech Zaremba. OpenAI Gym. arXiv preprint arXiv:1606.01540, 2016.

[13] Dieter Büchler, Simon Guist, Roberto Calandra, Vincent Berenz, Bernhard Schölkopf, and Jan Peters. Learning to Play Table Tennis From Scratch using Muscular Robots. CoRR, abs/2006.05935, 2020.

[14] Mahesh Chandra and Brejesh Lall. A Novel Method for CNN Training Using Existing Color Datasets for Classifying Hand Postures in Bayer Images. SN Computer Science, 2, 04 2021. doi: 10.1007/s42979-021-00450-w.

[15] Yevgen Chebotar, Ankur Handa, Viktor Makoviychuk, Miles Macklin, Jan Issac, Nathan D. Ratliff, and Dieter Fox. Closing the Sim-to-Real Loop: Adapting Simulation Randomization with Real World Experience. In International Conference on Robotics and Automation, ICRA 2019, Montreal, QC, Canada, May 20-24, 2019, pages 8973–8979. IEEE, 2019.

[16] Letian Chen, Rohan R. Paleja, Muyleng Ghuy, and Matthew C. Gombolay. Joint Goal and Strategy Inference across Heterogeneous Demonstrators via Reward Network Distillation. CoRR, abs/2001.00503, 2020.

[17] Letian Chen, Rohan R. Paleja, and Matthew C. Gombolay. Learning from Suboptimal Demonstration via Self-Supervised Reward Regression. CoRL, 2020.

[18] Krzysztof Choromanski, Mark Rowland, Vikas Sindhwani, Richard E. Turner, and Adrian Weller. Structured Evolution with Compact Architectures for Scalable Policy Optimization. In Proceedings of the 35th International Conference on Machine Learning, pages 969–977. PMLR, 2018.

[19] Erwin Coumans and Yunfei Bai. PyBullet, a Python module for physics simulation for games, robotics and machine learning. http://pybullet.org, 2016–2021.

[20] Tianli Ding, Laura Graesser, Saminda Abeyruwan, David B D’Ambrosio, Anish Shankar, Pierre Sermanet, Pannag R Sanketi, and Corey Lynch. GoalsEye: Learning High Speed Precision Table Tennis on a Physical Robot. In 2022 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), pages 10780–10787. IEEE, 2022.

[21] Helei Duan, Jeremy Dao, Kevin Green, Taylor Apgar, Alan Fern, and Jonathan Hurst. Learning Task Space Actions for Bipedal Locomotion. In 2021 IEEE International Conference on Robotics and Automation (ICRA), pages 1276–1282, 2021. doi: 10.1109/ICRA48506.2021.9561705.

[22] Clemens Eppner, Sebastian Höfer, Rico Jonschkowski, Roberto Martín-Martín, Arne Sieverling, Vincent Wall, and Oliver Brock. Lessons from the Amazon Picking Challenge: Four Aspects of Building Robotic Systems. In Proceedings of Robotics: Science and Systems, Ann Arbor, Michigan, June 2016. doi: 10.15607/RSS.2016.XII.036.

[23] Charles Freundlich, Michael Zavlanos, and Philippos Mordohai. Exact bias correction and covariance estimation for stereo vision. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pages 3296–3304, 2015.

[24] Kunihiko Fukushima. Visual Feature Extraction by a Multilayered Network of Analog Threshold Elements. IEEE Transactions on Systems Science and Cybernetics, 5(4):322–333, 1969. doi: 10.1109/TSSC.1969.300225.

[25] David Gallup, Jan-Michael Frahm, Philippos Mordohai, and Marc Pollefeys. Variable baseline/resolution stereo. In 2008 IEEE conference on computer vision and pattern recognition, pages 1–8. IEEE, 2008.

[26] Wenbo Gao, Laura Graesser, Krzysztof Choromanski,