An agency of the

European Union

Authors

EASA

Jean Marc Cluzeau

Xavier Henriquel

Georges Rebender

Guillaume Soudain

Daedalean AG

Dr. Luuk van Dijk

Dr. Alexey Gronskiy

David Haber

Dr. Corentin Perret-Gentil

Ruben Polak

Disclaimer

This document and all information contained or referred to herein are provided for information purposes only, in the context of, and subject to all terms, conditions and limitations expressed in the IPC contract P-EASA.IPC.004 of June 4th, 2019, under which the work and/or discussions to which they relate was/were conducted. Information or opinions expressed or referred to herein shall not constitute any binding advice, nor shall they create or be understood as creating any expectations with respect to any future certification or approval whatsoever.

All intellectual property rights in this document shall remain at all times strictly and exclusively

vested with Daedalean. Any communication or reproduction in full or in part of this document

or any information contained herein shall require Daedalean’s prior approval and bear the full

text of this disclaimer.


Contents

1 Executive summary
A note about this document
2 Introduction
2.1 Background
2.2 Learning Assurance process elements
2.3 Other key takeaways from the IPC
2.4 Aim of the report
2.5 Outline of the report
2.6 Terminology
3 Existing guidelines, standards and regulations, and their applicability to machine learning-based systems
3.1 EASA AI Roadmap
3.2 EU guidelines for trustworthy AI
3.3 Existing guidelines and standards
3.4 Other documents and working groups
3.5 Comparison of traditional software and machine learning-based systems
4 Use case definition and Concepts of Operations (ConOps)
4.1 Use case and ConOps
4.2 System description
4.3 Notes on model training
4.4 Selection criteria
5 Learning process
5.1 What is a learning algorithm?
5.2 Training, validation, testing, and out-of-sample errors
5.3 Generalizability
6 Learning Assurance
6.1 Learning Assurance process overview
6.2 Dataset management and verification
6.3 Training phase verification


Daedalean – EASA CoDANN IPC Extract 4

6.4 Machine learning model verification
6.5 Inference stage verification
6.6 Runtime monitoring
6.7 Learning Assurance artifacts
7 Advanced concepts for Learning Assurance
7.1 Transfer learning
7.2 Synthesized data
8 Performance assessment
8.1 Metrics
8.2 Model evaluation
9 Safety Assessment
9.1 Safety Assessment process
9.2 Functional Hazard Assessment
9.3 DAL Assignment
9.4 Common Mode Analysis
9.5 Neural network Failure Mode and Effect Analysis (FMEA)
10 Use case: Learning Assurance
11 Conclusion & future work
References
Notations
Index
Acronyms


Chapter 1

Executive summary

This is a public extract of the report that resulted from the collaboration between EASA and Daedalean under an Innovation Partnership Contract (IPC) signed by the Agency. The project ran from June 2019 to February 2020.

The project titled “Concepts of Design Assurance for Neural Networks” (CoDANN) aimed at examining the challenges posed by the use of neural networks in aviation, in the broader context of allowing machine learning, and more generally artificial intelligence, on board aircraft for safety-critical applications.

Focus was put on the “Learning Assurance” building block of the EASA AI Roadmap 1.0 [EAS20], which resulted in significant progress on three essential aspects of the “Learning Assurance” concept:

1. The definition of the W-shaped Learning Assurance life-cycle as a foundation for future

guidance from EASA for machine learning / deep learning (ML/DL) applications. It

provides an outline of the essential steps for Learning Assurance and their connection

with traditional Development Assurance processes.

2. The investigation of the notion of “generalization” of neural networks, a crucial characteristic when establishing the level of confidence that an ML model will perform as intended. The reviewed theoretical and practical “generalization bounds” should contribute to the definition of more generic guidance on how to account for NNs in Safety Assessment processes.

3. The approach to accounting for neural networks in safety assessments, on the basis of a realistic use case. More generic guidance will have to be developed, but this report paves the way for a practical approach to achieving certification safety objectives when ML/DL are used in safety-critical applications. The Safety Assessment in this report includes an outline of a failure mode and effect analysis (FMEA) for an ML component to derive quantitative guarantees.

Many concepts discussed in this report apply to machine learning algorithms in general, but an

emphasis is put on the specific challenges of deep neural networks or deep learning for computer

vision systems.

One of the primary goals was to keep the guidelines for Learning Assurance at a generic level and to motivate them from a theoretical perspective. Where applicable, reference is made to concrete methods.


A note about this document

This extract is a public version of the original IPC report. Several parts have been shortened

for conciseness and some details from the original report have been removed for confidentiality

reasons. The main outcomes from the work between EASA and Daedalean have been retained

for the benefit of the public.


Chapter 2

Introduction

2.1 Background

In recent years, the scientific discipline known as machine learning (ML) has demonstrated impressive performance on visual tasks relevant to the operation of General Aviation aircraft, autonomous drones, or electric air-taxis. For this reason, the application of machine learning to complex problems such as object detection and image segmentation is very promising for current and future airborne systems. Recent progress has been made possible partly by a simultaneous increase in the amount of available data and in computational power (see for example the survey [LBH15]). However, this increase in performance comes at the cost of more complex machine learning models, and this complexity may pose challenges in safety-critical domains, as it is often difficult to verify the design of such models and to explain or interpret their behavior during operation.

Machine learning therefore provides major opportunities for the aviation industry, yet the trustworthiness of such systems needs to be guaranteed. The EASA AI Roadmap [EAS20, p. 14] lists the following challenges with respect to trustworthiness:

• “Traditional Development Assurance frameworks are not adapted to machine learning”;

• “Difficulties in keeping a comprehensive description of the intended function”;

• “Lack of predictability and explainability of the ML application behavior”;

• “Lack of guarantee of robustness and of no ’unintended function’”;

• “Lack of standardized methods for evaluating the operational performance of the ML/DL

applications”;

• “Issue of bias and variance in ML applications”;

• “Complexity of architectures and algorithms”;

• “Adaptive learning processes”.

This report investigates these challenges in more detail. The current aviation regulatory framework, and in particular Development Assurance, does not provide a means of compliance for these new systems. As an extension to traditional Development Assurance, the elements of the Learning Assurance concept defined in the EASA AI Roadmap are investigated to address these challenges.


2.2 Learning Assurance process elements

This report has identified crucial elements for Learning Assurance in a W-shaped development cycle, as an extension to traditional Development Assurance frameworks (see Figure 6.1 and the details in Section 6.1). These elements summarize the key activities required for the safe use of neural networks (and more generally machine learning models) during operation.

Figure 6.1: W-shaped development cycle for Learning Assurance.

The reader is encouraged to use these as a guide while reading the report. Each of these items will be motivated from a theoretical perspective and exemplified in more detail in the context of the identified use case in Chapter 4.

2.3 Other key takeaways from the IPC

To cope with the difficulties in keeping a comprehensive description of the intended function, this report introduces data management activities to ensure the quality and completeness of the datasets used for training or verification processes. In particular, the concepts outlined in this document advocate the creation of a distribution discriminator to evaluate the completeness of the datasets.
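This extract does not specify how the distribution discriminator is built. Purely to illustrate the idea, the sketch below swaps in a simple nearest-neighbour novelty check, which is not the report's method: an input counts as covered by the dataset if it lies no farther from some training sample than training samples lie from their own nearest neighbours. The name `make_discriminator` and the threshold rule are assumptions of this sketch.

```python
import math

def make_discriminator(training_set):
    """Hypothetical nearest-neighbour novelty check (illustrative stand-in only).

    The threshold is the largest distance between any training sample and its
    nearest *other* training sample; inputs farther than this from every
    training sample are treated as not covered by the dataset.
    """
    threshold = max(
        min(math.dist(a, b) for j, b in enumerate(training_set) if j != i)
        for i, a in enumerate(training_set)
    )

    def in_distribution(x):
        return min(math.dist(x, t) for t in training_set) <= threshold

    return in_distribution

# Toy 2D "dataset" at the corners of the unit square.
covered = make_discriminator([(0.0, 0.0), (0.0, 1.0), (1.0, 0.0), (1.0, 1.0)])
print(covered((0.5, 0.5)))  # True: inside the covered region
print(covered((5.0, 5.0)))  # False: far from all training samples
```

For image data, such distances would normally be computed on learned features rather than raw pixels. The toy 2D points only show the mechanics.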

The lack of predictability of the ML application behavior could be addressed through the concept of generalizability, introduced in Section 5.3 as a means of obtaining theoretical guarantees on the expected behavior of machine learning-based systems during operation. Together with data management, introduced in Section 6.2, this makes it possible to derive such guarantees from the performance of a model during the design phase.
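A standard textbook bound shows how design-phase performance can become a statistical guarantee. It is used here only as an illustration and is not one of the generalization bounds reviewed in Section 5.3. Assume a fixed model, a loss bounded in [0, 1], and n test samples drawn independently from the operational distribution and never used for training. Hoeffding's inequality then gives, with probability at least 1 − δ, out-of-sample error ≤ test error + √(ln(1/δ) / (2n)). The numbers in the example are hypothetical.

```python
import math

def hoeffding_upper_bound(test_error: float, n: int, delta: float) -> float:
    """One-sided Hoeffding bound for a loss bounded in [0, 1].

    Assumes the model was fixed before testing and the n test samples are
    i.i.d. draws from the operational distribution. Then, with probability
    at least 1 - delta, the out-of-sample error is at most the returned value.
    """
    return test_error + math.sqrt(math.log(1.0 / delta) / (2.0 * n))

# Hypothetical numbers: 1% observed test error on 100,000 samples, delta = 1e-6.
bound = hoeffding_upper_bound(0.01, 100_000, 1e-6)
print(f"{bound:.4f}")  # 0.0183
```

The guarantee holds only under the independence and representativeness assumptions, which is where data management comes in. Reusing the same test set to select among several models also invalidates the bound as stated.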

The report identified risks associated with two types of robustness: algorithm robustness and

model robustness. The former measures how robust the learning algorithm is to changes in

the underlying training dataset. The latter quantifies a trained model’s robustness to input

perturbations.
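One common way to probe algorithm robustness is leave-one-out stability: retrain with each training sample removed in turn and record the largest change in the learned model. This is offered as an assumption, not as the report's metric. The toy learning algorithm below, which predicts the mean label, is hypothetical and was chosen only because its behavior is easy to check by hand.

```python
def train_mean(labels):
    """Toy learning algorithm: the learned 'model' is a constant, the mean label."""
    return sum(labels) / len(labels)

def leave_one_out_change(train, dataset):
    """Largest change in the trained model when any single sample is removed."""
    full_model = train(dataset)
    return max(
        abs(train(dataset[:i] + dataset[i + 1:]) - full_model)
        for i in range(len(dataset))
    )

print(leave_one_out_change(train_mean, [1.0, 2.0, 3.0, 4.0]))    # 0.5
print(leave_one_out_change(train_mean, [1.0, 2.0, 3.0, 100.0]))  # 24.5
```

A single outlier makes the same algorithm far less stable. This is one reason why dataset verification and algorithm robustness are closely linked.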

The evaluation and mitigation of bias and variance is a key issue in ML applications. It was

identified that bias and variance must be addressed on two levels. First, bias and variance

inherent to the datasets need to be captured and minimized. Second, model bias and variance

need to be analyzed and the associated risks taken into account.
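Model bias and variance can be estimated empirically. The approach is to retrain on many independently drawn training sets and inspect the predictions at a fixed point x0. The squared gap between the average prediction and f(x0) estimates the squared bias, and the spread of the predictions estimates the variance. The sketch below assumes a hypothetical ground truth f(x) = x with Gaussian label noise and compares two deliberately extreme model families. It does not address dataset bias, which has to be handled at the data level.

```python
import random
import statistics

def f(x):
    """Hypothetical ground truth."""
    return x

def fit_constant(data):
    """Rigid model family: predict the mean label everywhere (tends to high bias)."""
    c = statistics.fmean(y for _, y in data)
    return lambda x: c

def fit_nearest(data):
    """Flexible model family: copy the label of the nearest training point (tends to high variance)."""
    return lambda x: min(data, key=lambda p: abs(p[0] - x))[1]

def bias_variance_at(x0, fit, n_train=20, n_repeats=2000, noise=0.1, seed=0):
    """Estimate squared bias and variance of a learning algorithm's prediction at x0."""
    rng = random.Random(seed)
    preds = []
    for _ in range(n_repeats):
        # Fresh training set: x uniform in [0, 1], labels f(x) plus Gaussian noise.
        data = [(x, f(x) + rng.gauss(0.0, noise))
                for x in (rng.uniform(0.0, 1.0) for _ in range(n_train))]
        preds.append(fit(data)(x0))
    bias_sq = (statistics.fmean(preds) - f(x0)) ** 2
    return bias_sq, statistics.pvariance(preds)

for name, fit in [("constant", fit_constant), ("nearest", fit_nearest)]:
    b2, var = bias_variance_at(1.0, fit)
    print(f"{name}: bias^2={b2:.4f} variance={var:.4f}")
```

With these settings, the constant predictor is expected to show a large squared bias and a small variance at x0 = 1, and the nearest-neighbour predictor the opposite. This illustrates the trade-off the text refers to.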

This report assumes a system architecture which is non-adaptive (i.e. does not learn) during


operation. This does not preclude retraining or reusing portions of NNs (transfer learning), but it sets boundaries that are readily compatible with the current aviation regulatory frameworks.

2.4 Aim of the report

The aim of this report is to present the outcome of the collaboration between EASA and Daedalean AG under an Innovation Partnership Contract (IPC) on the Concepts of Design Assurance for Neural Networks (CoDANN).

The purpose of this IPC was to investigate ways to gain confidence in the use of products

embedding machine learning-based systems (and more specifically neural networks), with the

objective of identifying the enablers needed to support their future introduction in aviation.

More precisely, the collaboration aimed at:

1. Proposing a first set of guidelines for machine learning-based systems that facilitate future compatibility with the Agency's regulatory framework (e.g. [CS-25]/[CS-27]/[CS-29].1309 or [CS-23]/[SC-VTOL-01].2510), using one of the specific examples (landing guidance) proposed by Daedalean;

2. Proposing possible reference(s) for evaluating the performance/accuracy of machine learning-based systems in the context of real-scale safety analyses.

The scope of this assessment includes, but is not necessarily limited to, airworthiness and operations. Note, however, that only software questions are addressed in detail: specific hardware might have to be used and certified for neural networks, but discussion of this subject is left for future work.

This report has been prepared under the conditions set within the IPC. The IPC ran for ten months, from May 2019 to February 2020.

The European Union Aviation Safety Agency (EASA) is the centerpiece of the European

Union’s strategy for aviation safety. Its mission is to promote the highest common standards of

safety and environmental protection in civil aviation. The Agency develops common safety and

environmental rules at the European level. It monitors the implementation of standards through

inspections in the Member States and provides the necessary technical expertise, training and

research. The Agency works hand in hand with the national authorities which continue to carry

out many operational tasks, such as certification of individual aircraft or licensing of pilots.

Daedalean AG was founded in 2016 by a team of engineers who worked at companies such as Google and SpaceX. As of February 2020, the team includes 30+ software engineers, as well as avionics specialists and pilots. Daedalean works with eVTOL companies and aerospace manufacturers to specify, build, test and certify a fully autonomous autopilot system. It has developed systems demonstrating crucial early capabilities on a path to certification for airworthiness. Daedalean has offices in Zürich, Switzerland and Minsk, Belarus.

2.5 Outline of the report

Chapter 3 investigates the existing regulations, standards, and major reports on the use of machine learning-based systems in safety-critical systems.

Afterwards, Chapter 4 presents the identified use cases and Concepts of Operations of neural

networks in aviation applications. These will be used to illustrate the findings on a real-world

example for the remainder of the report.


Chapter 5 provides the reader with the background knowledge required for the concepts of Learning Assurance.

In Chapters 6 and 7, guidelines for Learning Assurance are introduced. Furthermore, a set of activities that appear necessary to guarantee the safe use of neural networks is described. Each activity is examined in detail and motivated from a theoretical perspective. The framework is kept flexible so that the concrete implementation of each activity can be tailored to the specific use case of future applicants.

Chapter 8 explains how the overall system described in Chapter 4 is evaluated. Chapter 9

discusses the Safety Assessment aspects of the system.

A use case summary is given in Chapter 10, with a few more concrete ideas for implementation.

Finally, the conclusion (Chapter 11) revisits assumptions made throughout and discusses subjects for future work.

2.6 Terminology

In this section, the definition of machine learning is recalled, and the common terminology used throughout the report is introduced.

Automation is the use of control systems and information technologies reducing the need for human input and supervision, while autonomy is the ultimate level of automation, the ability to perform tasks without input or supervision by a human during operations.

Artificial intelligence (AI) is the theory and development of computer systems which are able to perform tasks that “normally” require human intelligence. Such tasks include visual perception, speech recognition, decision-making, and translation between languages. As “normally” is a shifting term, the definition of what constitutes AI changes over the years. This report will therefore not discuss this specific term in more detail.

Machine learning (ML) is the scientific field rooted in statistics and mathematical optimization that studies algorithms and mathematical models that aim at achieving artificial intelligence through learning from data. This data may consist of samples with labels (supervised learning) or without labels (unsupervised learning).

More formally, machine learning aims at approximating a mathematical function f : X → Y

from a (very large and possibly infinite) input space X to an output space Y , given a finite

amount of data.

In supervised learning, the data consists of sample pairs (x, f(x)) with x ∈ X. This report will mostly consider parametric machine learning algorithms, which work by finding the optimal parameters within a parametrized set of models, given the data. An approximation f̂ : X → Y to f is usually called a model. The computational steps required to find these parameters are collectively referred to as training (of the model).

Once a model f̂ for f has been obtained, it can be used to make approximations/predictions f̂(x) of the values f(x) at points x which were not seen during training. This phase is called inference. The sample pairs (x, f(x)) (or simply the values f(x)) are often called ground truth, as opposed to the approximation f̂(x).
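As a minimal illustration of this vocabulary (ground truth, parametric model, training, inference), the sketch below assumes a one-dimensional X = Y = ℝ, a hypothetical linear ground truth f(x) = 2x + 1, and the model family y = a·x + b fitted by ordinary least squares. Real perception tasks have vastly larger input spaces and model families.

```python
import random

def f(x: float) -> float:
    """Hypothetical ground truth f: X -> Y (unknown in practice)."""
    return 2.0 * x + 1.0

def train(samples):
    """Training: find the parameters (a, b) of the model family y = a*x + b
    minimizing the squared error on the pairs (x, f(x)) (ordinary least squares)."""
    n = len(samples)
    mean_x = sum(x for x, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in samples)
    var = sum((x - mean_x) ** 2 for x, _ in samples)
    a = cov / var
    return a, mean_y - a * mean_x

def infer(params, x):
    """Inference: evaluate the trained model f_hat at a (possibly unseen) point x."""
    a, b = params
    return a * x + b

rng = random.Random(0)
training_data = [(x, f(x)) for x in (rng.uniform(-1.0, 1.0) for _ in range(20))]
params = train(training_data)
print(infer(params, 3.0))  # approximately f(3.0) = 7.0
```

The query point 3.0 lies outside the training range [-1, 1]. The prediction is accurate there only because the assumed model family contains f exactly, which is rarely the case in practice.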

Artificial neural networks (or simply neural networks) are a class of machine learning algorithms, loosely inspired by the human brain. They consist of connected nodes (“neurons”) that define the order in which operations are performed on the input. Neurons are connected by edges which are parametrized by weights and biases. Neurons are organized in layers, specifically an input layer, several intermediate layers, and an output layer. Given a fixed topology (neurons and connections), a model is found by searching for the optimal weights and other parameters. Deep learning is the name given to the study and use of “deep” neural networks,


that is, neural networks with more than a few intermediate layers.
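The layered structure can be sketched as a forward pass through a tiny fully connected network. The weights, biases, topology and the ReLU activation below are assumptions chosen so the arithmetic can be checked by hand. Training would search for these values instead of fixing them.

```python
def dense(weights, biases, inputs):
    """One fully connected layer: each neuron outputs a weighted sum of its inputs plus its bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def relu(values):
    """Assumed activation function (rectified linear unit)."""
    return [max(0.0, v) for v in values]

def forward(layers, x):
    """Propagate x through the layers in order; ReLU after every layer except the output."""
    for i, (weights, biases) in enumerate(layers):
        x = dense(weights, biases, x)
        if i < len(layers) - 1:
            x = relu(x)
    return x

# Hypothetical 2-3-1 topology: 2 inputs, one intermediate layer of 3 neurons, 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5], [-1.0, 2.0]], [0.0, 0.0, -1.0]),
    ([[1.0, 1.0, 1.0]], [0.5]),
]
print(forward(layers, [1.0, 2.0]))  # [4.0]
```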

Convolutional neural networks (CNN) are a specific type of deep neural network, based on convolution operators, that is particularly suited to processing image data. A feature is a derived property/attribute of data, usually of lower dimension than the input. While features used to be handcrafted, deep learning is expected to learn features automatically. In a CNN, they are encoded by the convolutional filters in the intermediate layers.
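A convolutional filter slides a small kernel over the image. The sketch below assumes a single-channel image, "valid" output size, and no stride or padding, and applies a hand-made vertical-edge filter. In a CNN, the filter values would be learned rather than handcrafted. As in most deep learning frameworks, the operation computed is technically a cross-correlation, because the kernel is not flipped.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution as used in CNN layers (cross-correlation: kernel not flipped)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [sum(kernel[i][j] * image[r + i][c + j]
             for i in range(kh) for j in range(kw))
         for c in range(out_w)]
        for r in range(out_h)
    ]

# Hypothetical 4x4 image containing a vertical edge, and a handcrafted edge filter.
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
vertical_edge = [[-1, 1], [-1, 1]]
print(conv2d(image, vertical_edge))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output (a feature map) responds only where the edge is, and it is smaller than the input, which matches the notion of a lower-dimensional feature.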

A system is adaptive when it continues to change (e.g. learn) during real-time operation. A

system is predictable/deterministic if identical inputs produce identical outputs. In the scope

of this report, only non-adaptive and deterministic systems will be considered.
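Both properties can be probed, though not proven, by re-evaluating a model on the same inputs. In this sketch, `AdaptiveModel` is a hypothetical model that updates an internal parameter on every call (that is, it keeps learning during operation), so it fails the check. A fixed function passes.

```python
def is_deterministic(model, inputs, runs=3):
    """Return True if repeated evaluation on each input gives identical outputs.

    This is evidence on the tested inputs only, not a proof for all inputs.
    """
    for x in inputs:
        reference = model(x)
        if any(model(x) != reference for _ in range(runs - 1)):
            return False
    return True

class AdaptiveModel:
    """Hypothetical model that changes an internal parameter on every call."""

    def __init__(self):
        self.offset = 0.0

    def __call__(self, x):
        self.offset += 0.1  # 'learning' during operation
        return x + self.offset

print(is_deterministic(lambda x: 2.0 * x, [1.0, 2.0]))  # True
print(is_deterministic(AdaptiveModel(), [1.0]))        # False
```

Non-determinism can also have causes outside the model itself, such as non-deterministic parallel floating-point reductions on some hardware. A check like this one only gives evidence for the configuration under test.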

A machine learning model is robust if small variations in the input yield small variations in the output (see also Section 6.4 for a precise definition).
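Section 6.4 gives the precise definition. As a rough, sampling-based proxy only (an assumption of this sketch, not that definition), one can perturb an input within a small box of radius eps and record the largest observed output change. The maximum over samples is only a lower bound on the true worst case, which is one reason formal methods are of interest for robustness verification. The models `smooth` and `threshold` are hypothetical.

```python
import random

def max_observed_change(model, x, eps, trials=1000, seed=0):
    """Largest |model(x') - model(x)| over random x' with max_i |x'_i - x_i| <= eps.

    Sampling-based: the result is a lower bound on the worst-case change, not a guarantee.
    """
    rng = random.Random(seed)
    y = model(x)
    worst = 0.0
    for _ in range(trials):
        x_pert = [xi + rng.uniform(-eps, eps) for xi in x]
        worst = max(worst, abs(model(x_pert) - y))
    return worst

def smooth(v):
    """Hypothetical smooth model: its output changes by at most eps here."""
    return 0.5 * v[0] + 0.5 * v[1]

def threshold(v):
    """Hypothetical non-robust model: a hard decision boundary at v[0] = 1.0."""
    return 1.0 if v[0] > 1.0 else 0.0

print(max_observed_change(smooth, [1.0, 2.0], eps=0.01))
print(max_observed_change(threshold, [1.0, 2.0], eps=0.01))
```

Near its decision boundary, the threshold model flips its output under an arbitrarily small perturbation, while the smooth model's change stays proportional to eps.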

Refer to the index at the end of the document for an exhaustive list of the other technical

terms used throughout the report.


Chapter 3

Existing guidelines, standards and regulations, and their applicability to machine learning-based systems

3.1 EASA AI Roadmap

As far as EASA is concerned, AI will have an impact on most of the domains under its mandate. AI not only affects the products and services provided by the industry, but also triggers the rise of new business models and affects the Agency’s core processes (certification, rule-making, organization approvals, and standardization). This may in turn affect the competency framework of EASA staff.

EASA developed an AI Roadmap [EAS20] that aims at creating a consistent and risk-based “AI trustworthiness” framework to enable the processing of AI/ML applications in any of the core domains of EASA, from 2025 onward. The EASA approach is driven by the seven key requirements for trustworthy AI that were published in the report of the European Commission’s High-Level Expert Group on AI (see also Section 3.2). Version 1.0 of the EASA AI Roadmap focuses on machine learning techniques such as learning decision trees or neural network architectures. Further developments in AI technology will require future adaptations of this Roadmap.

3.1.1 Building blocks of the AI Trustworthiness framework

The EASA AI Roadmap is based on four building blocks that structure the AI Trustworthiness framework. All four building blocks are expected to play a role in gaining confidence in the trustworthiness of an AI/ML application.

• The AI trustworthiness analysis should provide guidance to applicants on how to address

each of the seven key guidelines in the specific context of civil aviation;

• The objective of Learning Assurance is to gain confidence at an appropriate level that

an ML application supports the intended functionality, thus opening the “AI black box”

as much as practically possible and required;

• Explainability of AI is a human-centric concept that deals with the capability to explain

how an AI application is coming to its results and outputs;


• AI safety risk mitigation is based on the anticipation that the “AI black box” may not always be opened to a sufficient extent and that supervision of the function of the AI application may be necessary.

Figure 3.1: Relationship between AI Roadmap building blocks and AI trustworthiness.

3.1.2 Key objectives

The main action streams identified in the EASA AI Roadmap are to:

1. “Develop a human-centric Trustworthiness framework”;

2. “Make EASA a leading certification authority for AI”;

3. “Support European Aviation leadership in AI”;

4. “Contribute to an efficient European AI research agenda”;

5. “Contribute actively to EU AI strategy and initiatives”.

3.1.3 Timeline

The EASA AI Roadmap foresees a phased approach, the timing of which is aligned with the industry AI implementation timeline. Phase I will consist of developing a first set of guidelines necessary to approve the first use of safety-critical AI. This will be achieved in partnership with the industry, mainly through IPCs, support to research, certification projects, and working groups. Phase II will build on the outcome of Phase I to develop regulations, Acceptable Means of Compliance (AMC) and Guidance Material (GM) for the certification/approval of AI. A Phase III is foreseen to further adapt the Agency’s processes and expand the regulatory framework to future developments in the dynamic field of AI.

3.2 EU guidelines for trustworthy AI

On April 8th, 2019, the European Commission’s High-Level Expert Group on Artificial Intelligence (AI HLEG) issued a report titled “Ethics Guidelines for Trustworthy AI” [EGTA],


which lays out four ethical principles and seven requirements that AI systems should meet in order to be trustworthy, each requirement being further broken down into several constituting principles to be adhered to.

3.2.1 EGTA, EASA, and this report

The seven EGTA requirements, their constituting principles, the four building blocks of the

EASA AI Roadmap and this report form a narrowing sequence of scopes. The EGTA report

intends to address any kind of AI the European citizens might encounter, many of which will

deal with end-user data, affecting them directly.

This report does not focus on all possible applications of AI in all of EASA’s domains of competence, but specifically on machine-learned systems applied to make safety-critical avionics better and safer. This report is not intended or expected to be the be-all and end-all of this topic, but addresses a subset of the concerns raised by the guiding documents. As an aid to the reader, Table 3.1 presents an overview of how parts of this report may be traced to the EASA building blocks, and to the EGTA principles and requirements.

| EGTA key requirement | Constituting principles | (Example of) applicability to AI in safety-critical avionics | EASA building block | This report |
|---|---|---|---|---|
| Human agency and oversight | Fundamental rights | Must improve safety of life and goods | TA | – |
| | Human agency | Public must be allowed choice of use | | |
| | Human oversight | Human-in-command | | |
| Technical robustness and safety | Resilience to attack and security | Potential for sabotage | TA | – |
| | Fallback plan | Runtime monitoring, fault mitigation | TA | Chapter 9 |
| | General safety | Hazard analysis, proportionality in DAL | TA/SRM | Chapter 9 |
| | Accuracy | Correctness and accuracy of system output | LA | Ch. 5, 6, 7 |
| | Reliability and reproducibility | Correctness and accuracy of system design | | |
| Privacy and data governance | Privacy and data protection | Passenger/pilot privacy when collecting training/testing data | [GDPR] | – |
| | Quality and integrity of data | Core to quality of ML systems | LA | Ch. 5 and 6 |
| | Access to individual’s data | (n/a, individual passenger/pilot data not required) | TA | – |
| Transparency | Traceability | Datasets and ML process documentation | LA/EX | Chapter 6 |
| | Explainability | Justification and failure case analysis of ML system outputs | EX | Chapter 11 |
| | Communication | Mixing human and AI on ATC/comms | TA | – |
| Diversity, non-discrimination and fairness | Avoidance of unfair bias | Must not unfairly trade off safety of passenger vs public | TA | – |
| | Accessibility and universal design | May enable more people to fly | | |
| | Stakeholder participation | EASA, pilots and operators, passengers, public at large | | |
| Societal and environmental well-being | Sustainable and environmentally friendly AI | Increase ubiquity of flying, with environmental and societal consequences | TA | – |
| | Social impact | | | |
| | Society and democracy | | | |
| Accountability | Auditability | Core competency of the regulator (EASA) | TA | – |
| | Minimization and reporting of negative impacts | | | |
| | Trade-offs | | | |
| | Redress | | | |

Table 3.1: EGTA requirements and principles, EASA building blocks and this report. The EASA building blocks are referred to as TA (Trustworthiness Analysis), LA (Learning Assurance), SRM (Safety Risk Mitigation) and EX (Explainability).


3.3 Existing guidelines and standards

3.3.1 ARP4754A / ED-79A

The Guidelines for Development of Civil Aircraft and Systems [ED-79A/ARP4754A] were released in 2010. The purpose of this guidance is to define a structured development process to minimize the risk of development errors during aircraft and system design. It is recognized by EASA as a recommended practice for system Development Assurance. In conjunction with [ARP4761], it also provides Safety Assessment guidance used for the development of large aircraft and their highly integrated systems.

It is mainly applied to complex and highly integrated systems, as stated in AMC 25.1309:

“A concern arose regarding the efficiency and coverage of the techniques used for assessing safety aspects of highly integrated systems that perform complex and interrelated functions, particularly through the use of electronic technology and software based techniques. The concern is that design and analysis techniques traditionally applied to deterministic risks or to conventional, non-complex systems may not provide adequate safety coverage for more complex systems. Thus, other assurance techniques, such as Development Assurance utilizing a combination of process assurance and verification coverage criteria, or structured analysis or assessment techniques applied at the aeroplane level, if necessary, or at least across integrated or interacting systems, have been applied to these more complex systems. Their systematic use increases confidence that errors in requirements or design, and integration or interaction effects have been adequately identified and corrected.”

One key aspect is highlighted by [ED-79A/ARP4754A, Table 3]: when relying on Functional Development Assurance Level A (FDAL A) alone, the applicant may be required to substantiate that the development process of a function has sufficient independent validation/verification activities, techniques, and completion criteria to ensure that all potential development errors capable of having a catastrophic effect on the operations of the function have been removed or mitigated. It is EASA’s experience that development errors may occur even with the highest level of Development Assurance.

3.3.2 EUROCAE ED-12C / RTCA DO-178C

The Software Considerations in Airborne Systems and Equipment Certification [ED-12C/DO-178C], released in 2011, provides the main guidance used by certification authorities for the approval of aviation software. According to [ED-12C/DO-178C, Section 1.1], the purpose of this standard is to

“provide guidance for the production of software for airborne systems and equipment that performs its intended function with a level of confidence in safety that complies with airworthiness requirements.”

Key concepts are the flow-down of requirements and bidirectional traceability between the different layers of requirements. Traditional “Development Assurance” frameworks such as [ED-12C/DO-178C] are, however, not adapted to machine learning processes, owing to specific challenges, including:

• Machine learning shifts the emphasis to other parts of the process, namely data preparation, architecture and algorithm selection, hyperparameter tuning, etc. There is a


need for a change in paradigm to develop specific assurance methodologies to deal with

learning processes;

• Difficulties in keeping a comprehensive description of the intended function and in the

flow-down of traceability within datasets (e.g. definition of low level requirements);

• Lack of predictability and explainability of the ML application behavior.
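The bidirectional traceability idea can be illustrated with a small check over hypothetical requirement identifiers. A high-level requirement with no low-level child is not yet implemented, and a low-level requirement with no parent may indicate unintended functionality. The identifiers and link structure are assumptions. For an ML component, the difficulty noted in the list above is that individual dataset samples or learned weights do not trace to requirements in this way.

```python
def trace_gaps(high_level_ids, low_level_ids, links):
    """Return (high-level requirements with no low-level child,
    low-level requirements with no high-level parent)."""
    traced_high = {high for high, _ in links}
    traced_low = {low for _, low in links}
    return (sorted(set(high_level_ids) - traced_high),
            sorted(set(low_level_ids) - traced_low))

# Hypothetical requirement identifiers and trace links.
hlrs = ["HLR-1", "HLR-2", "HLR-3"]
llrs = ["LLR-1", "LLR-2", "LLR-3", "LLR-4"]
links = {("HLR-1", "LLR-1"), ("HLR-1", "LLR-2"), ("HLR-2", "LLR-3")}
print(trace_gaps(hlrs, llrs, links))  # (['HLR-3'], ['LLR-4'])
```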

The document also has the following three supplements:

• [ED-218/DO-331]: Model-Based Development and Verification;

• [ED-217/DO-332]: Object-Oriented Technology and Related Techniques;

• [ED-216/DO-333]: Formal Methods.

The two first supplements are addressing specific Development Assurance techniques and will

not be applicable to address machine learning processes. The Formal Methods supplement

could on the contrary provide a good basis to deal with novel verification approaches (e.g. for

the verification of the robustness of a neural network).

Finally, for tool qualification aspects, it is also worth mentioning:

• [ED-215/DO-330]: Tool Qualification Document, which could also be used in a Learning Assurance framework to develop tools that would reduce, automate or eliminate those process objective(s) whose output cannot be verified.

3.3.3 EUROCAE ED-76A / RTCA DO-200B

The Standards for Processing Aeronautical Data [ED-76A/DO-200B] provides the minimum requirements and guidance for the processing of aeronautical data that are used for navigation, flight planning, terrain/obstacle awareness, flight deck displays, flight simulators, and for other applications. This standard aims at providing assurance that a certain level of data quality is established and maintained over time.

Data Quality is defined in the standard as the degree or level of confidence that the provided data meets the requirements of the user. These requirements include levels of accuracy, resolution, assurance level, traceability, timeliness, completeness, and format.

The notion of data quality can be used in the context of the preparation of machine learning datasets and could be instrumental in establishing adequate data completeness and correctness processes, as described in Section 6.2.

3.3.4 ASTM F3269-17

The Standard Practice for Methods to Safely Bound Flight Behavior of Unmanned Aircraft Systems Containing Complex Functions [F3269-17] outlines guidance to constrain complex function(s) in unmanned aircraft systems through a runtime assurance (RTA) system. Aspects of safety monitoring are encompassed in the building block “Safety Risk Mitigation” from the EASA AI Roadmap (see Section 3.1).


3.4 Other documents and working groups

3.4.1 EUROCAE WG-114 / SAE G-34

A EUROCAE working group on the “certification of aeronautical systems implementing artificial intelligence technologies”, WG-114, was announced in June 2019 (see https://eurocae.net/about-us/working-groups/). It recently merged with a similar SAE working group, G-34 (“Artificial Intelligence in Aviation”). Their objectives are to:

• “Develop and publish a first technical report to establish a comprehensive statement of concerns versus the current industrial standards. [...]”
• “Develop and publish EUROCAE Technical Reports for selecting, implementing, and certifying AI technology embedded into and/or for use with aeronautical systems in both aerial vehicles and ground systems.”
• “Act as a key forum for enabling global adoption and implementation of AI technologies that embed or interact with aeronautical systems.”
• “Enable aerospace manufactures and regulatory agencies to consider and implement common sense approaches to the certification of AI systems, which unlike other avionics software, has fundamentally non-deterministic qualities. (sic)”

At the time of writing, draft documents list possible safety concerns and potential next steps. Most of these are addressed in this report, and a detailed comparison can be released when the WG-114 documents are finalized.

The working group includes representatives from both EASA and Daedalean, in addition to experts from other stakeholders.

3.4.2 UL-4600: Standard for Safety for the Evaluation of Autonomous Products

A working group, led by software safety expert Prof. Phil Koopman, is currently working with UL LLC on a standard proposal for autonomous automotive vehicles (with the goal of being adaptable to other types of vehicles). The idea is to complement existing standards such as ISO 26262 and ISO/PAS 21448, which were conceived with human drivers in mind. This is very similar to what this IPC aimed to achieve for airborne systems. A preliminary draft [UL-4600] was released in October 2019, and the standard is planned to be released in the course of 2020.

Section 8.5 of the current draft of UL-4600 is dedicated to machine learning, but the treatment of the topic remains fairly high-level. In this report, the aim is to provide a more in-depth understanding of the risks of modern machine learning methods and of ways to mitigate them.

3.4.3 “Safety First for Automated Driving” whitepaper

In June 2019, 11 major stakeholders in the automotive and automated driving industry, including Audi, Baidu, BMW, Intel, Daimler, and VW, published a 157-page report [SaFAD19] on safety for automated driving. Their work focuses on safety by design and on verification & validation methods for SAE levels 3-4 autonomous driving (conditional/high automation).

In particular, the report contains an 18-page appendix on the use of deep neural networks in these safety-critical scenarios, with 3D object detection as the running example. The conclusions that emerge from it are compatible with those of this IPC report.


3.4.4 FAA TC-16/4

The U.S. Federal Aviation Administration (FAA) report on Verification of Adaptive Systems [TC-16/4], released in April 2016, is the result of a two-phase research study in collaboration with the NASA Langley Research Center and Honeywell Inc., with the aim of analyzing the certifiability of adaptive systems in view of [ED-12C/DO-178C].

Adaptive systems are defined therein as “software having the ability to change behavior at runtime in response to changes in the operational environment, system configuration, resource availability, or other factors”.

At this stage, adaptive machine learning algorithms are anticipated to be harder to certify than models that are frozen after training. Restricting ourselves to non-adaptive models creates a set of realistic assumptions under which the development of Learning Assurance concepts is possible. Accordingly, aspects of recertification of existing but changed models (i.e. retrained models) are only briefly discussed in Section 7.1.3.

3.4.5 FDA April 2019 report

The Proposed Regulatory Framework for Modifications to AI/ML-based Software as a Medical Device [FDA19], released by the U.S. Food and Drug Administration in April 2019, focuses on risks in machine learning-based systems resulting from software modifications, but also contains more general information on the regulation/certification of AI software. Note that such software modifications include the adaptive algorithms studied at length in the FAA report [TC-16/4] discussed above.

3.4.6 AVSI’s AFE 87 project on certification aspects of machine learning

This is a one-year project launched in May 2018 that includes Airbus, Boeing, Embraer, FAA, GE Aviation, Honeywell, NASA, Rockwell Collins, Saab, Thales, and UTC. According to a presentation given at the April 2019 EUROCAE symposium, the goal is to address the following questions (quoting from [Gat19]):

1. “Which performance-based objectives should an application to certify a system incorporating machine learning contain, so that it demonstrates that the system performs its intended function correctly in the operating conditions?”
2. “What are the methods for determining that a training set is correct and complete?”
3. “What is retraining, when is it needed, and to which extent?”
4. “What kind of architecture monitoring would be adapted to complex machine learning applications?”

Note that these questions are all addressed in this report, respectively in Chapter 10, Section 6.2, Section 7.1.3/Section 7.1, and Section 6.6.

3.4.7 Data Safety Guidance

The Data Safety Guidance [SCSC-127C], from the Data Safety Initiative Working Group of the Safety Critical Systems Club, aims at providing up-to-date recommendations for the use of data (under a broad definition) in safety-critical systems. Along with definitions, principles, processes, objectives, and guidance, it contains a worked example in addition to several appendices (including examples of accidents due to faulty data). Section 6.2 will explain the importance of data in systems based on machine learning.

3.5 Comparison of traditional software and machine learning-based systems

3.5.1 General considerations

A shift in paradigm. In aviation, system and software engineering are traditionally guided by industrial standards on Development Assurance such as [ED-79A/ARP4754A] or [ED-12C/DO-178C]. The use of learning algorithms and processes constitutes a shift in paradigm compared to traditional system and software development processes.

Development processes foresee the design and coding of software from a set of functional requirements to obtain an intended behavior of the system, whereas in the case of learning processes, the intended behavior is captured in data from which a model is derived through the training phase, as an approximation of the expected function of the system. This conceptual difference comes with a set of challenges that require an extension of the traditional Development Assurance framework used so far for complex and highly integrated system development. Machine learning shifts the emphasis of assurance methods to other parts of the process, namely data management, learning model design, etc.

Still some similarities... It is anticipated that Development Assurance processes could still apply to higher layers of the system design, namely to capture, validate and verify the functional system requirements.

Also, the core software and the hardware used for the inference phase are anticipated to be developed with traditional means of compliance such as [ED-12C/DO-178C] or [ED-80/DO-254].

In addition, some of the processes integral to Development Assurance are anticipated to remain compatible with, and required by, Learning Assurance methods. This mainly concerns processes such as planning, configuration management, quality assurance and certification liaison.

Planning, quality assurance, and certification liaison processes. These elements of traditional Development Assurance require adaptations for the Learning Assurance process, in particular for the definition of transition criteria, but their principles are anticipated to remain unchanged.

3.5.2 Configuration management principles

The principles from existing standards are anticipated to apply without restriction. For applications involving modification of parameters through learning, a strong focus should be put on the capability to maintain configuration management of those parameters (e.g. the weights of a neural network) for any relevant configuration of the resulting application. Specific consideration may be required for capturing hyperparameter configurations.
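To make the configuration-management point concrete, the following minimal Python sketch derives a single identifier from a set of learned parameters together with the hyperparameters that produced them. Any change to either then yields a new configuration identifier. This is an illustration under assumptions, not a method taken from the source: the helper name `config_fingerprint`, the representation of weights as named lists of floats, and the use of SHA-256 over a canonical byte encoding are all hypothetical choices.

```python
import hashlib
import json
import struct
from typing import Dict, List


def config_fingerprint(weights: Dict[str, List[float]],
                       hyperparameters: Dict[str, object]) -> str:
    """Return a SHA-256 hex digest identifying one trained-model configuration.

    Hypothetical helper: weights are named flat lists of floats, and the
    hyperparameters form a JSON-serializable dictionary.
    """
    digest = hashlib.sha256()
    # Visit tensors in sorted name order so the digest ignores dict ordering.
    for name in sorted(weights):
        values = weights[name]
        encoded_name = name.encode("utf-8")
        # Length prefixes keep the boundaries between names and values unambiguous.
        digest.update(struct.pack("<Q", len(encoded_name)) + encoded_name)
        digest.update(struct.pack("<Q", len(values)))
        # Little-endian IEEE 754 doubles: a bit-exact, platform-independent encoding.
        digest.update(struct.pack(f"<{len(values)}d", *values))
    # Canonical JSON (sorted keys, no whitespace) for the hyperparameter set.
    canonical = json.dumps(hyperparameters, sort_keys=True, separators=(",", ":"))
    digest.update(canonical.encode("utf-8"))
    return digest.hexdigest()


# Hypothetical example values.
weights = {"conv1.weight": [0.12, -0.5, 0.33], "conv1.bias": [0.0]}
hyperparameters = {"learning_rate": 0.001, "batch_size": 32, "epochs": 50}
baseline_id = config_fingerprint(weights, hyperparameters)
```

Recording `baseline_id` in the configuration index would, for example, allow a later check that the deployed parameters and the recorded hyperparameters are exactly the baselined ones.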

Considerations on the use of PDIs. Given the nature of neural networks, which are parametrized by weights and biases that define the behavior of the model, it may be convenient to capture these parameters in a separate configuration file.

It is, however, important to note that the Parameter Data Item (PDI) guidance as introduced in [ED-12C/DO-178C] cannot be used as such, because the learned parametrized model inherently drives the functionality of the software and cannot be separated from the neural network architecture or the executable object code.

As indicated in [ED-12C/DO-178C, Section 6.6], PDIs can be verified separately under four conditions:

1. The Executable Object Code has been developed and verified by normal range testing to correctly handle all Parameter Data Item Files that comply with their defined structure and attributes;
2. The Executable Object Code is robust with respect to Parameter Data Item Files structures and attributes;
3. All behavior of the Executable Object Code resulting from the contents of the Parameter Data Item File can be verified;
4. The structure of the life-cycle data allows the parameter data item to be managed separately.

At least the third condition is not realistic in the case of a learning model parameter data item.

In conclusion, even if PDIs can be conveniently used to store and manage the parameters of a machine learning model resulting from a learning process, the PDI guidance from [ED-12C/DO-178C] (or equivalent), which foresees a separate verification of the PDI from the executable object code, is not a practicable approach.


Chapter 4

Use case definition and Concepts of Operations (ConOps)

The use of neural networks in aviation applications should be regulated in proportion to the risk of the specific operation. As a running example throughout the report, a specific use case (visual landing guidance) will be considered, which is described in detail in this chapter. Chapter 9 will use this example to outline a safety analysis, and Chapter 10 presents a summary of the Learning Assurance activities in the context of the use case.

The contents of Chapters 5 to 8 are generic and apply to general (supervised) machine learning algorithms.

4.1 Use case and ConOps

Visual landing guidance (VLG) facilitates the task of landing an aircraft on a runway or vertiport. Table 4.1 proposes two operational concepts for our VLG system, one for General Aviation [CS-23] Class IV and another for Rotorcraft [CS-27] or eVTOL [SC-VTOL-01] (cat. enhanced), with corresponding operating parameters.

To assess the risk of the operation, two levels of automation are proposed for each operational concept: pilot advisory (1a and 2a) and full autonomy (1b and 2b).

| | Operational Concept 1 | Operational Concept 2 |
|---|---|---|
| Application | Visual landing guidance (Runway) | Visual landing guidance (Vertiport) |
| Aircraft type | General Aviation [CS-23] Class IV | Rotorcraft [CS-27] or eVTOL [SC-VTOL-01] (cat. enhanced) |
| Flight rules | Visual Flight Rules (VFR) in daytime Visual Meteorological Conditions (VMC) | Visual Flight Rules (VFR) in daytime Visual Meteorological Conditions (VMC) |
| Special considerations | Marked concrete runways, no ILS equipment assumed | Vertiports in urban built-up areas, no ILS equipment assumed |
| Level of automation | (1a) Pilot advisory; (1b) Full autonomy | (2a) Pilot advisory; (2b) Full autonomy |
| System interface | (1a) Glass cockpit flight director display; (1b) Flight computer guidance vector and clear/abort signal | (2a) Glass cockpit flight director display; (2b) Flight computer guidance vector and clear/abort signal |
| Cruise/Pattern | Identify runway | Identify vertiport |
| Descent | Disambiguate alternatives, eliminate taxiways, find centerline, maintain tracking over 3° descents (even if runway out of sight) | Find centerpoint and bounds, maintain tracking over 15° descents (assume platform stays in line of sight) |
| Final approach | Maintain tracking, identify obstruction/clear at 150 m AGL | Maintain tracking, identify obstruction/clear at 30–15 m AGL |
| Decision point | Decide to land/Abort at any point including after touchdown | Decide to land/Abort down to flare |
| Go Around | Maintain tracking until back in pattern | Maintain tracking until back in pattern |
| Relevant Operating Parameters | | |
| Number of airfields | 50’000 | 100’000 |
| Distance | 100–8000 m | 10–1000 m |
| Altitude | 800 m AGL | 150 m AGL |
| Angle of view | 160° | 160° |
| Time of day (sun position) | 3° below horizon and 3° after sunset (VFR definition) | 3° below horizon and 3° after sunset (VFR definition) |
| Time of year | Every month sampled | Every month sampled |
| Visibility | > 5 km | > 1 km |
| Runways visible | At most 1 | At most 1 |
| Temporary runway changes | Landing lights out, temporary signs obstructing aircraft | Obstructing aircraft, person or large object (box, plastic bag) |

Table 4.1: Concepts of Operations (ConOps). Note that these are only meant as an illustration within the scope of the report and, for example, do not address all possible sources of uncertainty (e.g. other traffic, runway incursions, etc.).


Figure 4.1: System architecture overview (perception). Camera (2448 × 2448 px), pre-processing (512 × 512 px), CNN detection (corners + uncertainty), tracking/filtering; outputs: runway presence likelihood and corner coordinates.

4.2 System description

This section describes and analyzes the system that performs visual landing guidance in the above ConOps. It consists of traditional (non machine learning-based) software and a neural network. The focus is on the machine learning components and their interactions with the traditional software parts, since the existing certification guidelines can be followed for the rest of the system.

End-to-end learning. The proposed system includes several smaller, more dedicated components to simplify the design and to isolate the machine learning components. This split makes the performance and safety assessments easier and more transparent (see Chapters 8 and 9).

This is in contrast to recently proposed systems that attempt to learn complex behavior such as visual landing guidance end-to-end, i.e. learning functions that directly map sensor data to control outputs. While end-to-end learning is certainly an exciting area of research, it will not be considered in this report for simplicity.

4.2.1 System architecture: perception

The system used in operation is shown in Figure 4.1 and consists of a camera unit, a pre-processing component, a neural network and a tracking/filtering component.

Sensor. The camera unit is assumed to have a global shutter and to output 5-megapixel RGB images at a fixed frequency.

Pre-processing. The pre-processing unit reduces the resolution of the camera output to 512 × 512 pixels and normalizes the image (e.g. so that it fits a given distribution). This is done with “classical software” (i.e. no machine learning).
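The following minimal Python sketch illustrates the two pre-processing steps. The source does not specify the resampling or normalization method, so the sketch assumes nearest-neighbour resampling and per-image, per-channel standardization (zero mean, unit variance). Images are represented as nested lists of RGB tuples only to keep the example free of third-party dependencies; the function names are hypothetical.

```python
from statistics import fmean, pstdev
from typing import List, Tuple

Pixel = Tuple[float, float, float]  # (R, G, B)
Image = List[List[Pixel]]           # rows of pixels, height x width


def resize_nearest(image: Image, out_h: int, out_w: int) -> Image:
    """Nearest-neighbour resampling of an H x W RGB image to out_h x out_w."""
    in_h, in_w = len(image), len(image[0])
    return [
        [image[(r * in_h) // out_h][(c * in_w) // out_w] for c in range(out_w)]
        for r in range(out_h)
    ]


def standardize(image: Image, eps: float = 1e-8) -> Image:
    """Shift and scale each colour channel to zero mean and unit variance."""
    stats = []
    for ch in range(3):
        values = [px[ch] for row in image for px in row]
        stats.append((fmean(values), pstdev(values)))
    return [
        [tuple((px[ch] - stats[ch][0]) / (stats[ch][1] + eps) for ch in range(3))
         for px in row]
        for row in image
    ]


def preprocess(image: Image, size: int = 512) -> Image:
    """Hypothetical pre-processing: downscale to size x size, then standardize."""
    return standardize(resize_nearest(image, size, size))
```

With the camera resolution of Figure 4.1, `preprocess(frame)` would map a 2448 × 2448 frame to 512 × 512. Nearest-neighbour sampling is used here only for brevity. It performs no low-pass filtering and can therefore alias fine structures such as runway markings, so an area-averaging resampler may be preferable in practice.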

Neural network. A convolutional neural network (CNN) as shown in Figure 4.2 is chosen as the reference architecture for this document. The model’s input space $X$ consists of 512 × 512 RGB images from a camera fixed on the nose of the aircraft. The model’s output space $Y$ consists of:

• A likelihood value (in $[0, 1]$) that the input image contains a runway;
• The normalized coordinates (e.g. in $[0, 1]^2$) of each of the four runway corners (in a given ordering with respect to image coordinates).

The model approximates the “ground truth” function $f : X \to \{0, 1\} \times [0, 1]^{4 \times 2}$ defined similarly.
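The output space described above can be captured in a small data structure. The Python sketch below is illustrative only. The class name `RunwayPrediction` and its methods are hypothetical, and it assumes corner coordinates normalized to $[0, 1]$ relative to the 512 × 512 network input. Note that the model outputs a likelihood in $[0, 1]$, whereas the ground truth $f$ takes the value 0 or 1 for runway presence.

```python
from dataclasses import dataclass
from typing import Tuple

Corner = Tuple[float, float]  # normalized (u, v) image coordinates


@dataclass(frozen=True)
class RunwayPrediction:
    likelihood: float                               # runway presence likelihood
    corners: Tuple[Corner, Corner, Corner, Corner]  # four corners, fixed ordering

    def is_in_output_space(self) -> bool:
        """Check that the prediction lies in [0, 1] x [0, 1]^(4x2)."""
        if len(self.corners) != 4 or not 0.0 <= self.likelihood <= 1.0:
            return False
        return all(0.0 <= u <= 1.0 and 0.0 <= v <= 1.0 for u, v in self.corners)

    def corners_in_pixels(self, width: int = 512,
                          height: int = 512) -> Tuple[Tuple[float, float], ...]:
        """Map normalized corner coordinates back to pixel coordinates."""
        return tuple((u * width, v * height) for u, v in self.corners)
```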

Such networks are usually called object detection networks. They are generally based on a feature extraction network such as ResNet [ResNet], followed by fully connected or convolutional layers. Examples of such models (for multiple-object detection) are the Single Shot MultiBox Detector (SSD) [Liu+16], Faster R-CNN [Ren+15] and Mask R-CNN [He+17]. The first two output only bounding boxes, while the third one provides object masks; our proposed model outputs quadrilaterals corresponding to the detected object and therefore lies in between.

Figure 4.2: A generic convolutional neural network as considered in this report. Input RGB image (512 × 512 × 3), followed by a stack of convolution + pooling + activation blocks, producing the predictions: likelihood and normalized corner coordinates (corner predictions + uncertainty). This corresponds to the red box in Figure 4.1.

The neural network satisfies all hypotheses that will be made in Chapter 5. In particular, all operations in the CNN are fully defined and differentiable, the network’s topology is a directed acyclic graph (so that there are no recurrent connections), it is trained in a supervised manner, and the whole system is non-adaptive, as defined in Section 2.6.

Post-processing (tracking/filtering). The tracking/filtering unit post-processes the neural network output to:

• Threshold the runway likelihood output to make a binary runway/no runway decision;
• Reduce the error rate of the network, using information on previous frames and possibly on the movement/controls of the aircraft.

Similarly to pre-processing, this post-processing is also implemented with “classical software”.
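The two post-processing steps above can be illustrated with the following minimal Python sketch. The source does not specify the filter. Here, exponential smoothing over consecutive frames stands in for the tracking/filtering stage; a real implementation might instead use, for example, a Kalman filter that also exploits aircraft motion. The class name, threshold and smoothing factor are hypothetical.

```python
from typing import List, Optional, Sequence, Tuple

Corners = List[Tuple[float, float]]


class RunwayFilter:
    """Hypothetical tracking/filtering stage: exponential smoothing, then a threshold."""

    def __init__(self, threshold: float = 0.5, alpha: float = 0.5) -> None:
        if not 0.0 < alpha <= 1.0:
            raise ValueError("alpha must be in (0, 1]")
        self.threshold = threshold
        self.alpha = alpha  # weight given to the newest frame
        self.likelihood: Optional[float] = None
        self.corners: Optional[Corners] = None

    def update(self, likelihood: float,
               corners: Sequence[Tuple[float, float]]) -> Tuple[bool, Optional[Corners]]:
        """Fuse one network output with previous frames; return (runway present, corners)."""
        a = self.alpha
        if self.likelihood is None or self.corners is None:
            # First frame: nothing to smooth against yet.
            self.likelihood, self.corners = likelihood, list(corners)
        else:
            self.likelihood = a * likelihood + (1 - a) * self.likelihood
            self.corners = [
                (a * u + (1 - a) * pu, a * v + (1 - a) * pv)
                for (u, v), (pu, pv) in zip(corners, self.corners)
            ]
        runway_present = self.likelihood >= self.threshold
        # Corner coordinates are only meaningful when a runway is declared present.
        return runway_present, (self.corners if runway_present else None)
```

With `threshold=0.5` and `alpha=0.5`, a single low-likelihood frame following a confident detection does not immediately flip the decision. This is one simple way in which previous frames can reduce the error rate; the price is a delay of a few frames when a runway genuinely disappears.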

4.2.2 System architecture: actuation

The second part of the system takes as input the output of the perception component, namely an indication of whether a runway is present or not, and the corner coordinates (which are relevant only if the runway likelihood is high enough). It then uses them to perform the actual visual landing guidance described in Section 4.1, in full autonomy or for pilot advisory only.

As the report focuses on certification concerns related to the use of machine learning, the actuation subsystem is not described further and is assumed to be developed with conventional technologies. Similarly, the Safety Assessment outlined in Chapter 9 will only consider the perception system.

4.2.3 Hardware

Pre-/post-processing. The pre- and post-processing software components described above run on classical computing hardware (CPUs), for which existing guidance and standards apply.

Neural network. While it is possible to execute neural networks on CPUs as well, circuits specialized in the most resource-heavy operations (e.g. matrix multiplications, convolutions) can yield significantly higher performance. This can be especially important for applications requiring high-resolution input or high throughput.

Nowadays, this is mostly done using graphics processing units (GPUs), originally developed for 3D graphics, even though application-specific integrated circuits¹ (ASICs) and field-programmable gate arrays (FPGAs) are increasingly used. For example, a recent study [WWB20] shows inference speed improvements of 1 to 100 times for GPUs over CPUs, and of 1/5 to 10 times for TPUs over GPUs on different convolutional neural networks, depending on various parameters (see also Table 4.2).

An important part of proving the airworthiness of the whole system described in this chapter would be to demonstrate compliance of such specialized compute hardware with [ED-80/DO-254] and applicable EASA airborne electronic hardware guidance. This will not be addressed in this report, which focuses on novel software aspects relevant to the certification of machine learning systems.

| Platform | Peak TFLOPS | Memory (GB) | Memory bandwidth (GB/s) |
|---|---|---|---|
| CPU (Skylake, 32 threads) | 2 (single precision) | 120 | 16.6 |
| GPU (NVIDIA V100) | 125 | 16 | 900 |
| ASIC (Google TPUv3) | 420 | 16 | 3600 |

Table 4.2: Neural network inference hardware compared in [WWB20].

4.3 Notes on model training

The computational operations required to train a neural network are similar to those performed during inference. Therefore, the observations made on hardware in Section 4.2.3 still apply, with the difference that the requirements are somewhat less stringent: one needs to ensure the correctness of the computations, but the training hardware does not itself need to be airworthy.

A typical environment for training neural networks consists of a desktop computer equipped with a GPU (e.g. NVIDIA K80 or P100), running a Linux-based operating system, with device-specific acceleration libraries (such as CUDA and cuDNN) and a neural network training framework. Popular neural network frameworks are TensorFlow², Keras³, PyTorch⁴, and CNTK⁵. These are all open-source, i.e. their source code is publicly available.

System errors could be introduced through malfunctioning hardware or data corruption. This is relevant to both the learned model and the datasets used for training, validation, and testing.
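One common mitigation for silent data corruption is to record a cryptographic digest of every dataset file when the dataset is baselined and to re-check it before each training, validation, or test run. The Python sketch below shows this under stated assumptions: the dataset is a directory tree of files, and the helper names (`build_manifest`, `find_corrupted`) are hypothetical. It detects accidental corruption and undeclared modifications. It does not by itself protect against an attacker who can also rewrite the stored manifest.

```python
import hashlib
import tempfile
from pathlib import Path
from typing import Dict, List


def sha256_file(path: Path, chunk_size: int = 1 << 20) -> str:
    """Stream a file through SHA-256 without loading it fully into memory."""
    h = hashlib.sha256()
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> Dict[str, str]:
    """Map each file under root (as a relative POSIX path) to its SHA-256 digest."""
    return {
        p.relative_to(root).as_posix(): sha256_file(p)
        for p in sorted(root.rglob("*")) if p.is_file()
    }


def find_corrupted(root: Path, manifest: Dict[str, str]) -> List[str]:
    """Return files that are missing, modified, or not listed in the manifest."""
    current = build_manifest(root)
    changed = [name for name, digest in manifest.items() if current.get(name) != digest]
    unexpected = [name for name in current if name not in manifest]
    return sorted(changed + unexpected)


# Usage on a throwaway directory standing in for a dataset.
with tempfile.TemporaryDirectory() as tmp:
    root = Path(tmp)
    (root / "train").mkdir()
    (root / "train" / "img_0001.png").write_bytes(b"placeholder image bytes")
    (root / "labels.csv").write_text("img_0001.png,1\n")
    manifest = build_manifest(root)
    clean = find_corrupted(root, manifest)       # nothing has changed yet
    (root / "labels.csv").write_text("img_0001.png,0\n")
    after_edit = find_corrupted(root, manifest)  # one label file modified
```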

Another risk could come from deliberate third-party attacks on the learning algorithm and/or on the model in training. Adversarial attacks are popular examples of ways to fool neural networks during operation. During the design phase, one could imagine malicious attackers obtaining access to the training machine and inserting modifications (e.g. backdoors) into the resulting model.

Again, this report will not address these risks further and leaves them for future work. Note that they could, for example, be partly mitigated by performing evaluations on the certified operational hardware once the training has finished.

Use of cloud computing. It is very common nowadays for complex consumer-grade machine learning models to be trained in the cloud, given the advantages provided by the hardware abstractions of remote compute and storage (such as the large amount of resources available, the lack of maintenance needs, etc.). Popular cloud providers include Google Cloud Platform⁶, Amazon Web Services (AWS)⁷, and Microsoft Azure⁸. Each of them allows the creation of virtual instances that provide access to a full operating system and to hardware including GPUs or ASICs for machine learning.

¹ See for example Google’s Tensor processing units (TPUs): https://cloud.google.com/tpu/
² https://tensorflow.org
³ https://keras.io
⁴ https://pytorch.org
⁵ https://github.com/microsoft/CNTK

This would introduce additional challenges in a safety-critical setting. For example, the user has no control over which specific hardware unit the training algorithms are executed on. The hardware is (at least for the time being) not provisioned with any certifications or qualifications relevant to safety-critical applications. In this setting, third-party attacks and data corruption errors are particularly important to keep in mind.

Hence, training safety-critical machine learning models in the cloud is not excluded per se, but it should be the subject of extended discussion and analysis (e.g. with respect to hardware certification, cybersecurity, etc.), which is also left to future work.

4.4 Selection criteria

The use case and system presented in this chapter were chosen because they are a representative and, at the same time, fairly simple example of a machine learning system in aviation. In this setting, a human pilot has the following cognitive functions:

• Perception (processing visual input). The quality of the image input is equivalent to perfect human vision in specified VFR conditions.
• Representation of knowledge (information organization in memory and learning/construction of new knowledge from information stored in memory).
• Reasoning (computation based on knowledge represented in memory).
• Capability of communication and expression. This requires identification of the human/machine interface and communication protocol.
• Decision making: modeling of executive decisions (e.g. landing: yes/no; if no, go around or diversion).

This report focuses on the emulation of the perception function.

⁶ https://cloud.google.com
⁷ https://aws.amazon.com
⁸ https://azure.microsoft.com


Chapter 5

Learning process

This chapter provides a background tour of the learning algorithms considered in this document. It gives the reader the more precise technical understanding needed to comprehend the theoretical guarantees and challenges of the framework for Learning Assurance described in Chapter 6. This chapter also provides formal definitions for supervised and parametric learning and explains strategies to quantify model errors. While most of this chapter applies to general machine learning algorithms, it concludes with a discussion of generalization behavior specific to neural networks.

For more detailed accounts of learning algorithms, the reader is referred to textbooks including [LFD; ESL].

5.1 What is a learning algorithm?

The goal of a (supervised) learning algorithm $\mathcal{F}$ is to learn a function $f : X \to Y$ from an input space $X$ to an output space $Y$, using a finite number of example pairs $(x, f(x))$, with $x \in X$.

More precisely, given a finite training dataset

$$D_{\mathrm{train}} = \{(x_i, f(x_i)) : 1 \le i \le n_{\mathrm{train}}\},$$

the goal of the training algorithm $\mathcal{F}$ is to generate a function (also called model, or hypothesis)

$$\hat{f}^{(D_{\mathrm{train}})} : X \to Y$$

that approximates $f$ “well”, as measured by error metrics that are defined below. In the following, we will use the notation

$$\mathcal{F}(D_{\mathrm{train}}) = \hat{f}^{(D_{\mathrm{train}})}, \tag{5.1}$$

with the meaning “the model $\hat{f}^{(D_{\mathrm{train}})}$ is the result of learning algorithm $\mathcal{F}$ trained on dataset $D_{\mathrm{train}}$”.

With a slight abuse of notation, we will also refer to $\mathcal{F}$ as the set of possible models produced by the learning algorithm $\mathcal{F}$, sometimes called the hypothesis space: $\hat{f} \in \mathcal{F}$.

Error metrics. The pointwise quality of the approximation of $f$ by $\hat{f}$ is measured with respect to a predefined choice of error metric(s) $m : Y \times Y \to \mathbb{R}_{\ge 0}$, demanding that

$$m\big(\mathcal{F}(D_{\mathrm{train}})(x), f(x)\big)$$

be low on all $x \in X$. These are called “error metrics” rather than simply “metrics” to emphasize that a lower value means better performance, and not “losses” to distinguish them from the loss functions introduced below.

For example, if $Y$ is a subset of the real numbers, one could simply use the absolute value (resp. the square) of the difference, $m(y_1, y_2) = |y_1 - y_2|$ (resp. $m(y_1, y_2) = (y_1 - y_2)^2$). For further illustrations in the context of the ConOps, see Sections 5.2.5 and 8.1. In particular, these will explain that several error metrics can and should be used.
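The two example error metrics translate directly into code. The text asks for the error to be low on all $x \in X$, but only a finite sample can ever be evaluated. For that reason, the Python sketch below reports both the mean and the worst case over a sample of (prediction, target) pairs. This pairing of summaries, the function names and the sample values are illustrative assumptions, not something the source prescribes.

```python
from statistics import fmean
from typing import Callable, Iterable, Tuple

ErrorMetric = Callable[[float, float], float]


def absolute_error(y1: float, y2: float) -> float:
    """m(y1, y2) = |y1 - y2|."""
    return abs(y1 - y2)


def squared_error(y1: float, y2: float) -> float:
    """m(y1, y2) = (y1 - y2)^2."""
    return (y1 - y2) ** 2


def summarize(metric: ErrorMetric,
              pairs: Iterable[Tuple[float, float]]) -> Tuple[float, float]:
    """Return (mean, worst case) of a pointwise error metric over (prediction, target) pairs."""
    errors = [metric(y_hat, y) for y_hat, y in pairs]
    return fmean(errors), max(errors)


# Hypothetical (prediction, target) pairs.
pairs = [(1.0, 1.5), (2.0, 2.0), (0.0, -1.0)]
abs_summary = summarize(absolute_error, pairs)  # mean 0.5, worst case 1.0
sq_summary = summarize(squared_error, pairs)
```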

Generalizability. Most importantly, the goal is to perform well on unseen data from $X$ during operation (and not simply to memorize the subset $D_{\mathrm{train}}$). This is called generalizability.

Design phase. The process of using $D_{\mathrm{train}}$ to obtain $\hat{f}^{(D_{\mathrm{train}})}$ from $\mathcal{F}$ is called the training phase. The design phase of a final model $\hat{f}^{(D_{\mathrm{train}})}$ comprises multiple rounds of choosing learning algorithms, training them, and comparing them using validation datasets (see below).

The models that will be considered are parametric, in the sense that the algorithm $\mathcal{F}$ chooses the model $\hat{f}$ from a family $\{\hat{f}_\theta : X \to Y : \theta \in \Theta\}$ parametrized by a set of parameters $\theta$. For neural networks, $\theta$ would include the weights and biases. The choice of $\theta$ is usually made by trying to minimize a function of the form

$$J(\theta) = \frac{1}{|D_{\mathrm{train}}|} \sum_{(x, f(x)) \in D_{\mathrm{train}}} L\big(\hat{f}_{\theta}(x), f(x)\big),$$

where $L$ is a differentiable loss function that is related or equal to one or several of the error metrics $m$. Usually, the loss function acts as a differentiable proxy to optimize the different error metrics.

For binary classification tasks (i.e. when the number of output classes is two), a popular loss function is binary cross-entropy:

$$L(\hat{y}, y) = \mathrm{CE}(\hat{y}, y) = -y \log(\hat{y}) - (1 - y) \log(1 - \hat{y}), \qquad y, \hat{y} \in [0, 1]. \tag{5.2}$$
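Equation (5.2) can be transcribed directly into Python. Before taking logarithms, the sketch below clips $\hat{y}$ to $[\varepsilon, 1 - \varepsilon]$. This is a common numerical safeguard added here for robustness and is not part of the definition. The function name and the default $\varepsilon = 10^{-12}$ are assumptions.

```python
import math


def binary_cross_entropy(y_hat: float, y: float, eps: float = 1e-12) -> float:
    """Equation (5.2); y_hat is clipped away from 0 and 1 so that log() stays finite."""
    if not (0.0 <= y <= 1.0 and 0.0 <= y_hat <= 1.0):
        raise ValueError("y and y_hat must lie in [0, 1]")
    p = min(max(y_hat, eps), 1.0 - eps)
    return -y * math.log(p) - (1.0 - y) * math.log(1.0 - p)
```

A confident correct prediction gives a loss near 0. A maximally uncertain prediction ($\hat{y} = 0.5$) gives $\log 2 \approx 0.693$, and confident mistakes are penalized increasingly heavily.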

Hyperparameters. In addition to the learned parameters $\theta$, the algorithm $\mathcal{F}$ might also come with its own parameters, called hyperparameters. For the widely used gradient descent minimization algorithm, the learning rate is an important hyperparameter.
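The following minimal Python sketch shows what minimizing $J(\theta)$ by gradient descent can look like, with the learning rate and the number of steps as hyperparameters. To stay short and self-contained, it uses logistic regression on a hypothetical one-dimensional toy dataset rather than a CNN. This is a single sigmoid unit with $\theta = (w, b)$ and the binary cross-entropy of equation (5.2) as the loss $L$. Function names, data and hyperparameter values are illustrative assumptions.

```python
import math
from typing import List, Tuple

Sample = Tuple[float, int]  # (scalar feature x, binary label f(x))


def sigmoid(z: float) -> float:
    """Numerically stable logistic function."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)
    return e / (1.0 + e)


def objective(theta: Tuple[float, float], data: List[Sample], eps: float = 1e-12) -> float:
    """J(theta): mean binary cross-entropy of f_theta(x) = sigmoid(w * x + b) over data."""
    w, b = theta
    total = 0.0
    for x, y in data:
        p = min(max(sigmoid(w * x + b), eps), 1.0 - eps)
        total += -y * math.log(p) - (1 - y) * math.log(1.0 - p)
    return total / len(data)


def gradient_descent(data: List[Sample], learning_rate: float = 0.5,
                     steps: int = 500) -> Tuple[float, float]:
    """Full-batch gradient descent on J; learning_rate and steps are hyperparameters."""
    w, b = 0.0, 0.0
    n = len(data)
    for _ in range(steps):
        grad_w = grad_b = 0.0
        for x, y in data:
            # For a sigmoid output with cross-entropy loss, dL/dz = sigmoid(z) - y.
            residual = sigmoid(w * x + b) - y
            grad_w += residual * x
            grad_b += residual
        w -= learning_rate * grad_w / n
        b -= learning_rate * grad_b / n
    return w, b


toy_data: List[Sample] = [(-2.0, 0), (-1.0, 0), (-0.5, 0), (0.5, 1), (1.0, 1), (2.0, 1)]
theta = gradient_descent(toy_data)
```

A learning rate that is too small makes convergence slow, while one that is too large can make $J$ oscillate or diverge. This is why the learning rate is typically tuned using the validation dataset described below.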

Validation dataset. A second dataset, the validation dataset

$$D_{\mathrm{val}} = \{(x_i, f(x_i)) : 1 \le i \le n_{\mathrm{val}}\},$$

disjoint from $D_{\mathrm{train}}$, is used during the design phase to

• monitor the performance of models on unseen data, and
• compare different models (i.e. different choices of $\theta$).

For example, an algorithm simply memorizing $D_{\text{train}}$ would certainly perform badly on $D_{\text{val}}$.

Note that $D_{\text{val}}$ is not used explicitly by the algorithm, but the information it contains might influence the design of the model, leading to an overestimation of the performance on unseen data. As an extreme example, tweaking $F$ at each round of the design phase depending on the validation scores would essentially amount to using $D_{\text{val}}$ as a training set.
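The overestimation effect can be reproduced with a small simulation. In this hypothetical setup the labels are drawn independently of the inputs, so every model has a true accuracy of 0.5; "design rounds" are mimicked by trying 500 random linear classifiers and keeping the one with the best score on $D_{\text{val}}$. The dimensions, sizes, and seed are arbitrary assumptions.

```python
import random

rng = random.Random(0)
DIM = 50


def accuracy(w, data):
    """Fraction of points where the linear classifier 1[w . x > 0] matches the label."""
    correct = 0
    for x, y in data:
        pred = 1 if sum(wi * xi for wi, xi in zip(w, x)) > 0 else 0
        correct += int(pred == y)
    return correct / len(data)


def sample(n):
    # Labels are independent of the inputs: no model can truly beat 50%.
    return [([rng.gauss(0.0, 1.0) for _ in range(DIM)], rng.randint(0, 1)) for _ in range(n)]


d_val, d_test = sample(100), sample(5000)

# Try many candidate models and keep the one that scores best on D_val.
candidates = [[rng.gauss(0.0, 1.0) for _ in range(DIM)] for _ in range(500)]
best = max(candidates, key=lambda w: accuracy(w, d_val))
best_val = accuracy(best, d_val)
test_acc = accuracy(best, d_test)
print(f"best validation accuracy: {best_val:.2f}")      # noticeably above 0.5
print(f"test accuracy of that model: {test_acc:.2f}")   # close to 0.5
```

The selected model looks better than chance on $D_{\text{val}}$ purely because it was selected using $D_{\text{val}}$; only a dataset that played no role in the selection reveals its actual performance.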

Test dataset To obtain a more precise estimate of generalizability (and hence of performance during the operational phase), and to remedy the issue just mentioned, the final model is evaluated on a third disjoint dataset, the test dataset

$$D_{\text{test}} = \{(x_i, f(x_i)) : 1 \le i \le n_{\text{test}}\}.$$


Figure 5.1: The training, design, and operational phases of a learning algorithm. See also the development life-cycle presented in Section 6.1.

As Section 6.2.9 will explain, $D_{\text{test}}$ should also be disjoint from $D_{\text{val}}$ and $D_{\text{train}}$. Importantly, $D_{\text{test}}$ should be kept hidden from the training phase, i.e., it should not influence the training of the model in any manner.

Operational phase During the inference or operational phase, the model can be fed data and

make predictions. It is important to note that once a model has been obtained during the

design phase, it will be frozen and baselined. In other words, its behavior will not be changed

in operation.

5.2 Training, validation, testing, and out-of-sample errors

In this section, different types of errors are considered. They make it possible to understand a model’s behavior both on known data and, to the extent possible, on unknown data (i.e., data it will encounter during operation).

5.2.1 Probability spaces

To be able to quantify performance on unseen data accurately, $X$ is equipped with a probability distribution $P$, yielding a probability space¹ $\mathcal{X} = (X, P)$, so that more likely elements are assigned a higher probability. When evaluating and comparing learning algorithms, worse performance on more likely elements will be penalized more strongly. As described later, identifying the probability space corresponding to the target operational scenario is essential.
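The effect of weighting errors by $P$ can be illustrated on a finite input space, where the expected error is simply $\sum_x P(x)\, e(x)$. The three "operating conditions", their probabilities, and the per-condition errors below are hypothetical values chosen only to illustrate the weighting.

```python
from typing import Dict


def expected_error(p: Dict[str, float], err: Dict[str, float]) -> float:
    """Expected error sum_x P(x) * err(x) over a finite input space."""
    if abs(sum(p.values()) - 1.0) > 1e-9:
        raise ValueError("P must sum to 1")
    return sum(p[x] * err[x] for x in p)


# Hypothetical finite input space (operating conditions) with distribution P.
p = {"clear_day": 0.7, "dusk": 0.2, "fog": 0.1}

# Two hypothetical models, each failing (error 1.0) on exactly one condition.
err_a = {"clear_day": 0.0, "dusk": 0.0, "fog": 1.0}
err_b = {"clear_day": 1.0, "dusk": 0.0, "fog": 0.0}

print(expected_error(p, err_a))  # 0.1: the failure is on a rare condition
print(expected_error(p, err_b))  # 0.7: the failure is on the most likely condition
```

Both models fail on exactly one condition, yet their expected errors differ by a factor of seven, which is why the distribution must match the target operational scenario.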

5.2.2 Errors in data

In a real-world scenario, the pairs $(x_i, y_i)$ in the training, validation, and test datasets might contain small errors coming from sources such as annotation mistakes or measurement imprecision arising from sensor noise. That is, one actually has²

$$y_i = f(x_i) + \delta_i$$

¹ The σ-algebra of events will be left implicit for simplicity.

² For the sake of simplicity, the focus is put here on additive errors. Advanced texts would consider the joint distribution of $(x, y)$.


for some small but nonzero $\delta_i \in \mathbb{R}^t$, assuming that $Y \subset \mathbb{R}^t$ for some $t \ge 1$ (as will be assumed from now on). These $\delta_i$ are typically modeled as independent normal random variables with mean zero and common variance $\sigma^2$.

This aspect will be addressed in detail in Section 6.2.3.
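The additive noise model can be simulated directly. This sketch assumes the scalar case $t = 1$, a hypothetical ground truth $f(x) = 3x$, and $\sigma = 0.1$; the recovered errors $\delta_i = y_i - f(x_i)$ then have an empirical mean close to zero and an empirical standard deviation close to $\sigma$.

```python
import random
import statistics


def f(x: float) -> float:
    """Hypothetical ground-truth function (scalar case, t = 1)."""
    return 3.0 * x


def noisy_labels(xs, sigma, rng):
    """Return y_i = f(x_i) + delta_i with i.i.d. delta_i ~ N(0, sigma^2)."""
    return [f(x) + rng.gauss(0.0, sigma) for x in xs]


rng = random.Random(42)
xs = [i / 1000 for i in range(10_000)]
ys = noisy_labels(xs, sigma=0.1, rng=rng)
deltas = [y - f(x) for x, y in zip(xs, ys)]
print(round(statistics.fmean(deltas), 3))   # close to 0
print(round(statistics.pstdev(deltas), 3))  # close to sigma = 0.1
```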

5.2.3 In-sample errors

The training error of a model $\hat f \colon X \to Y$ with respect to an error metric $m$ is defined as the mean

$$E_{\text{in}}(\hat f, D_{\text{train}}, m) = \frac{1}{|D_{\text{train}}|} \sum_{(x, f(x)) \in D_{\text{train}}} m\big(\hat f(x), f(x)\big),$$

and the validation and testing errors $E_{\text{in}}(\hat f, D_{\text{val}}, m)$ and $E_{\text{in}}(\hat f, D_{\text{test}}, m)$ are defined similarly. These are called in-sample errors.
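Since the three in-sample errors differ only in the dataset passed in, a single function suffices. The model, the absolute-error metric, and the tiny datasets below are hypothetical and serve only to show the computation.

```python
from typing import Callable, Iterable, Tuple


def in_sample_error(
    model: Callable[[float], float],
    dataset: Iterable[Tuple[float, float]],
    metric: Callable[[float, float], float],
) -> float:
    """E_in(model, D, m): mean of m(model(x), f(x)) over the pairs in D."""
    pairs = list(dataset)
    return sum(metric(model(x), fx) for x, fx in pairs) / len(pairs)


def abs_error(y_hat: float, y: float) -> float:
    return abs(y_hat - y)


def model(x: float) -> float:
    return 2.0 * x  # hypothetical trained model


d_train = [(0.0, 0.0), (1.0, 2.0)]
d_val = [(2.0, 4.5), (3.0, 6.0)]
print(in_sample_error(model, d_train, abs_error))  # 0.0
print(in_sample_error(model, d_val, abs_error))    # 0.25
```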

5.2.4 Out-of-sample errors

The out-of-sample error (or expected loss) of a model $\hat f$ with respect to an error metric $m$ is formally defined as the expected value³

$$E_{\text{out}}(\hat f, m) = \mathbb{E}_{\delta,\, x \sim \mathcal{X}}\Big[m\big(f(x) + \delta, \hat f(x)\big)\Big],$$

with the expectation taken over the distribution of the input space $\mathcal{X}$ and the errors $\delta$.
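In practice the expectation cannot be computed exactly, but it can be approximated by sampling. The sketch below is a Monte Carlo estimate under hypothetical assumptions: $x$ uniform on $[0, 1)$, $f(x) = 2x$, a model with a constant bias of 0.1, $\delta \sim \mathcal{N}(0, \sigma^2)$, and the squared error as $m$. In this setup the exact value is $\mathbb{E}[(\delta - 0.1)^2] = \sigma^2 + 0.01$, i.e., 0.02 for $\sigma = 0.1$.

```python
import random


def f(x: float) -> float:
    return 2.0 * x            # hypothetical ground truth


def model(x: float) -> float:
    return 2.0 * x + 0.1      # hypothetical trained model with a constant bias


def squared_error(a: float, b: float) -> float:
    return (a - b) ** 2


def estimate_e_out(n_samples: int, sigma: float, rng: random.Random) -> float:
    """Monte Carlo estimate of E_out(model, m) = E_{delta, x}[m(f(x) + delta, model(x))]."""
    total = 0.0
    for _ in range(n_samples):
        x = rng.random()               # x ~ Uniform[0, 1), an assumed input distribution
        delta = rng.gauss(0.0, sigma)  # delta ~ N(0, sigma^2)
        total += squared_error(f(x) + delta, model(x))
    return total / n_samples


print(estimate_e_out(100_000, sigma=0.1, rng=random.Random(0)))  # close to 0.02
```

Note that even a perfect model ($\hat f = f$) would have $E_{\text{out}} = \sigma^2 > 0$ here, since the noise $\delta$ cannot be predicted.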

Given a dataset size $n$, one can also consider the average

$$E_{\text{out}}(F, m, n) = \mathbb{E}_{D \sim \mathcal{X}^n}\big[E_{\text{out}}(F(D), m)\big] \tag{5.3}$$

over all datasets $D$ obtained by sampling $n$ points independently from $\mathcal{X}$, where $F(D) = \hat f^{(D)}$ is the model resulting from training $F$ on $D$.
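Equation (5.3) can be estimated by repeatedly sampling a dataset, training on it, and averaging the resulting out-of-sample errors. The sketch assumes a deliberately simple hypothetical learning algorithm $F$ that predicts the mean label of $D$ everywhere, with $x$ uniform on $[0, 1)$, $f(x) = x$, $\delta \sim \mathcal{N}(0, \sigma^2)$, and squared error. Under these assumptions $E_{\text{out}}(F(D), m) = 1/12 + \sigma^2 + (0.5 - c)^2$ for the learned constant $c$, and averaging over datasets gives $(1/12 + \sigma^2)(1 + 1/n)$.

```python
import random
import statistics

SIGMA = 0.1  # hypothetical noise level


def train_constant_model(dataset):
    """Hypothetical learning algorithm F: predict the mean label of D everywhere."""
    return statistics.fmean(y for _, y in dataset)


def e_out_constant(c):
    """Exact E_out of the constant model c under squared error, assuming
    x ~ Uniform[0, 1), f(x) = x and delta ~ N(0, SIGMA^2):
    E[(x + delta - c)^2] = 1/12 + SIGMA^2 + (0.5 - c)^2."""
    return 1.0 / 12.0 + SIGMA ** 2 + (0.5 - c) ** 2


def average_e_out(n, n_datasets, rng):
    """Monte Carlo estimate of E_out(F, m, n) = E_{D ~ X^n}[E_out(F(D), m)]."""
    total = 0.0
    for _ in range(n_datasets):
        d = []
        for _ in range(n):
            x = rng.random()
            d.append((x, x + rng.gauss(0.0, SIGMA)))
        total += e_out_constant(train_constant_model(d))
    return total / n_datasets


rng = random.Random(1)
for n in (2, 10, 100):
    theory = (1.0 / 12.0 + SIGMA ** 2) * (1.0 + 1.0 / n)
    print(n, round(average_e_out(n, 5000, rng), 4), round(theory, 4))
```

The average error decreases with $n$ toward the irreducible part $1/12 + \sigma^2$, which for this model family cannot be improved by more data.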

One would generally have

$$E_{\text{in}}(F(D_{\text{train}}), D_{\text{train}}, m) < E_{\text{in}}(F(D_{\text{train}}), D_{\text{val}}, m) < E_{\text{in}}(F(D_{\text{train}}), D_{\text{test}}, m) < E_{\text{out}}(F(D_{\text{train}}), m),$$

and a desirable property is to have all inequalities as tight as possible, because this would imply that the performance on unseen data (during the operational phase) can be precisely estimated during the design phase (see Section 5.3 on generalizability below).
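The gap between the training error and the error on unseen data is easiest to see with an algorithm that memorizes its training set. This sketch uses a 1-nearest-neighbour regressor as that hypothetical algorithm, with $x$ uniform on $[0, 1)$, $y = \sin(6x) + \delta$, and $\delta \sim \mathcal{N}(0, 0.3^2)$; the mean squared error on a fresh test set serves as a proxy for $E_{\text{out}}$.

```python
import math
import random

SIGMA = 0.3  # hypothetical label-noise standard deviation


def sample(n, rng):
    """Draw n pairs (x, y) with x ~ Uniform[0, 1) and y = sin(6x) + N(0, SIGMA^2)."""
    pairs = []
    for _ in range(n):
        x = rng.random()
        pairs.append((x, math.sin(6.0 * x) + rng.gauss(0.0, SIGMA)))
    return pairs


def one_nn(train):
    """1-nearest-neighbour regressor: return the label of the closest training x."""
    def predict(x):
        return min(train, key=lambda pair: abs(pair[0] - x))[1]
    return predict


def mse(model, data):
    return sum((model(x) - y) ** 2 for x, y in data) / len(data)


rng = random.Random(3)
d_train, d_test = sample(200, rng), sample(2000, rng)
model = one_nn(d_train)
print(mse(model, d_train))  # 0.0: the training set is memorized
print(mse(model, d_test))   # clearly positive, roughly 2 * SIGMA^2 here
```

A training error of zero says nothing about operational performance here; the test error, in contrast, reflects the noise in both the new label and the memorized neighbour.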

5.2.5 Example

This section gives an example of the above in the context of the ConOps from Chapter 4.

As described in Section 4.2.1, the input space $X$ consists of 512 × 512 RGB images from a camera fixed on the nose of the aircraft (with the image distribution depending on the conditions and locations where the aircraft is expected to fly), while the output space is $Y = [0, 1] \times [0, 1]^{4 \times 2}$. The goal is to obtain a model $\hat f \colon X \to Y$ approximating the function $f \colon X \to Y$ that indicates whether a runway is present in the input image and, if so, its corner coordinates.

³ Here, $\mathbb{E}$ denotes the expected value of a random variable, and $x \sim \mathcal{X}$ denotes a random variable sampled from the probability space $\mathcal{X}$.


Datasets A large representative dataset of images $x \in X$ in the operating conditions is collected, and the “ground truth” $f(x)$ is manually annotated. Care is taken to cover all relevant parameters (see Sections 6.2.7 and 6.2.8), particularly those that correlate with the presence of a runway. Splitting the resulting set of pairs $(x, f(x))$ randomly with the ratios 70%–15%–15% yields the training, validation, and test datasets $D_{\text{train}}$, $D_{\text{val}}$, and $D_{\text{test}}$, respectively.
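A random 70%–15%–15% split can be sketched as follows. The function name, the fixed seed, and the placeholder pairs (hypothetical image file names with a 0/1 runway label) are illustrative assumptions; shuffling once and slicing guarantees that the three datasets are pairwise disjoint and together cover all pairs.

```python
import random
from typing import List, Sequence, Tuple, TypeVar

T = TypeVar("T")


def split_dataset(
    items: Sequence[T],
    ratios: Tuple[float, float, float] = (0.70, 0.15, 0.15),
    seed: int = 0,
) -> Tuple[List[T], List[T], List[T]]:
    """Randomly split items into disjoint train / validation / test lists."""
    if abs(sum(ratios) - 1.0) > 1e-9:
        raise ValueError("ratios must sum to 1")
    shuffled = list(items)
    random.Random(seed).shuffle(shuffled)
    n_train = round(ratios[0] * len(shuffled))
    n_val = round(ratios[1] * len(shuffled))
    train = shuffled[:n_train]
    val = shuffled[n_train:n_train + n_val]
    test = shuffled[n_train + n_val:]  # the remainder, so nothing is lost
    return train, val, test


# Hypothetical (x, f(x)) pairs: placeholder image names with a runway-present flag.
pairs = [(f"img_{i:04d}.png", i % 2) for i in range(1000)]
train, val, test = split_dataset(pairs)
print(len(train), len(val), len(test))  # 700 150 150
```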

Error metric The error metric $m$ has to take into account both the runway presence likelihood and the corner coordinates prediction. A linear combination of the cross-entropy (5.2) and the $L^2$ (Euclidean) norm could be used:

$$m\big(\hat f(x), f(x)\big) := f_0(x) \sum_{1 \le i \le 4} \big\|\hat f_i(x) - f_i(x)\big\| + \lambda \cdot \mathrm{CE}\big(\hat f_0(x), f_0(x)\big),$$

where $\lambda > 0$ is a parameter to determine during the design/training phase, and

$$f = (f_0, \ldots, f_4) \in \{0, 1\} \times [0, 1]^2 \times \cdots \times [0, 1]^2, \qquad \hat f = (\hat f_0, \ldots, \hat f_4) \in [0, 1] \times [0, 1]^2 \times \cdots \times [0, 1]^2,$$

with $\hat f_0$ the runway presence likelihood and $\hat f_1, \ldots, \hat f_4$ the corner coordinates (similarly for the ground truth $f$).

Note that one could also compare the two runway masks using the Jaccard distance; see

Section 8.1 for a discussion of different metrics.
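The combined metric can be transcribed as follows. The argument layout (a presence value plus a list of four corner points), the clipping inside the cross-entropy, and the value $\lambda = 1$ are assumptions made for this sketch; `math.dist` computes the Euclidean ($L^2$) distance between two points.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def cross_entropy(y_hat: float, y: float, eps: float = 1e-12) -> float:
    """CE from (5.2); y_hat is clipped away from 0 and 1 (an implementation choice)."""
    y_hat = min(max(y_hat, eps), 1.0 - eps)
    return -y * math.log(y_hat) - (1.0 - y) * math.log(1.0 - y_hat)


def runway_metric(p_hat: float, corners_hat: Sequence[Point],
                  p_true: float, corners_true: Sequence[Point], lam: float) -> float:
    """m = f_0 * sum_i ||f_hat_i - f_i|| + lam * CE(f_hat_0, f_0).

    p_hat: predicted runway presence likelihood; p_true: ground truth in {0, 1};
    corners_*: the four corner coordinates, each a point in [0, 1]^2.
    """
    corner_term = sum(math.dist(c_hat, c) for c_hat, c in zip(corners_hat, corners_true))
    # The corner term only counts when a runway is actually present (p_true = 1).
    return p_true * corner_term + lam * cross_entropy(p_hat, p_true)


truth = [(0.1, 0.1), (0.9, 0.1), (0.9, 0.9), (0.1, 0.9)]
pred = [(x + 0.03, y + 0.04) for x, y in truth]  # every corner off by 0.05
print(runway_metric(0.8, pred, 1.0, truth, lam=1.0))  # 4 * 0.05 - log(0.8), about 0.423
print(runway_metric(0.1, pred, 0.0, truth, lam=1.0))  # only -log(0.9), about 0.105
```

Multiplying by the ground-truth flag $f_0(x)$ means that corner predictions made on images without a runway are not penalized, since there are no true corners to compare against.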

Design phase An algorithm is selected, as described in Section 4.2.1. For example, a first round gives a model $\hat f$ with a validation error $E_{\text{in}}(\hat f, D_{\text{val}}, m)$ of 0.20. A subsequent modification of

the algorithm yields another model

ˆ

f with a lower validation error E

in

(

ˆ

f , D

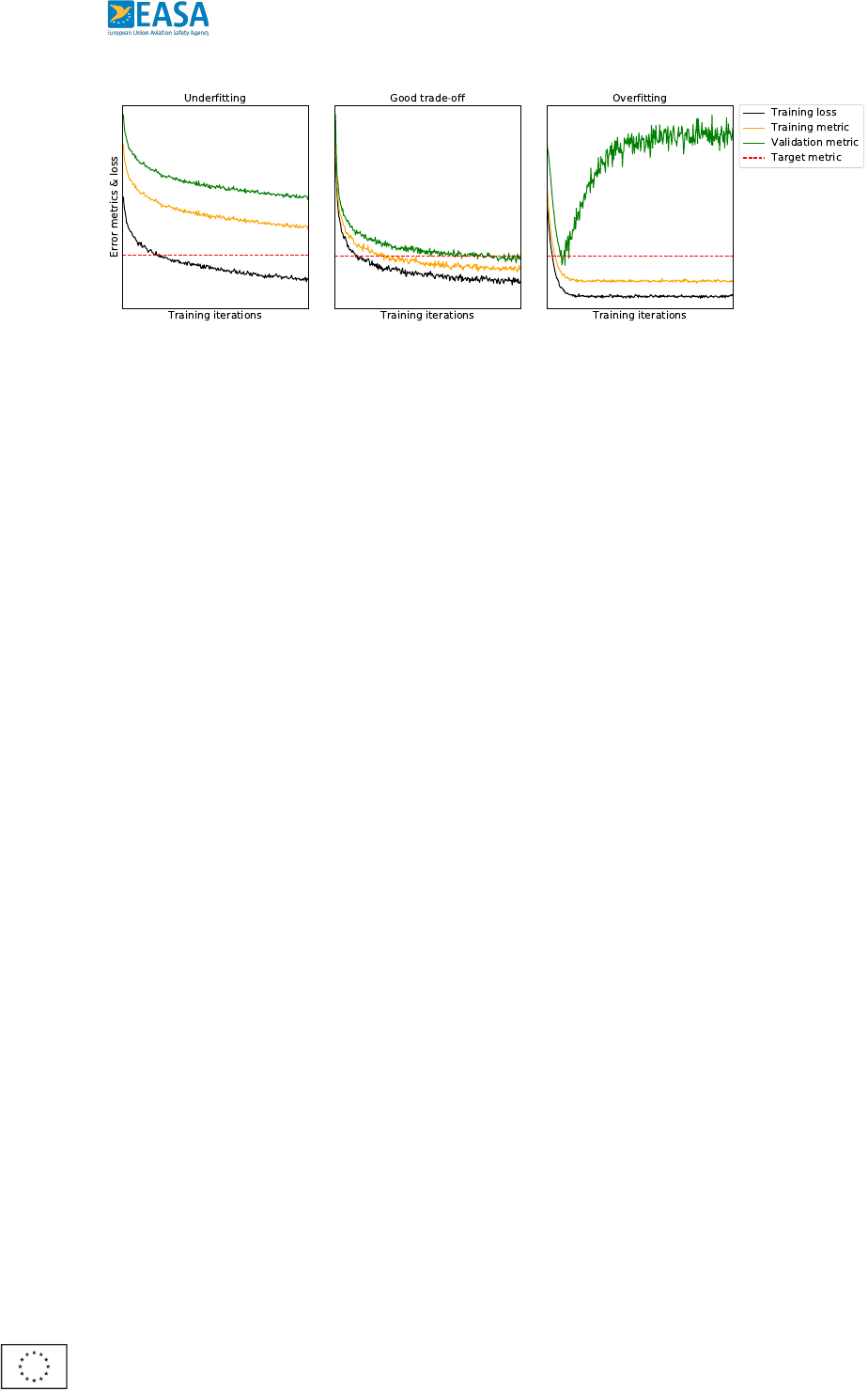

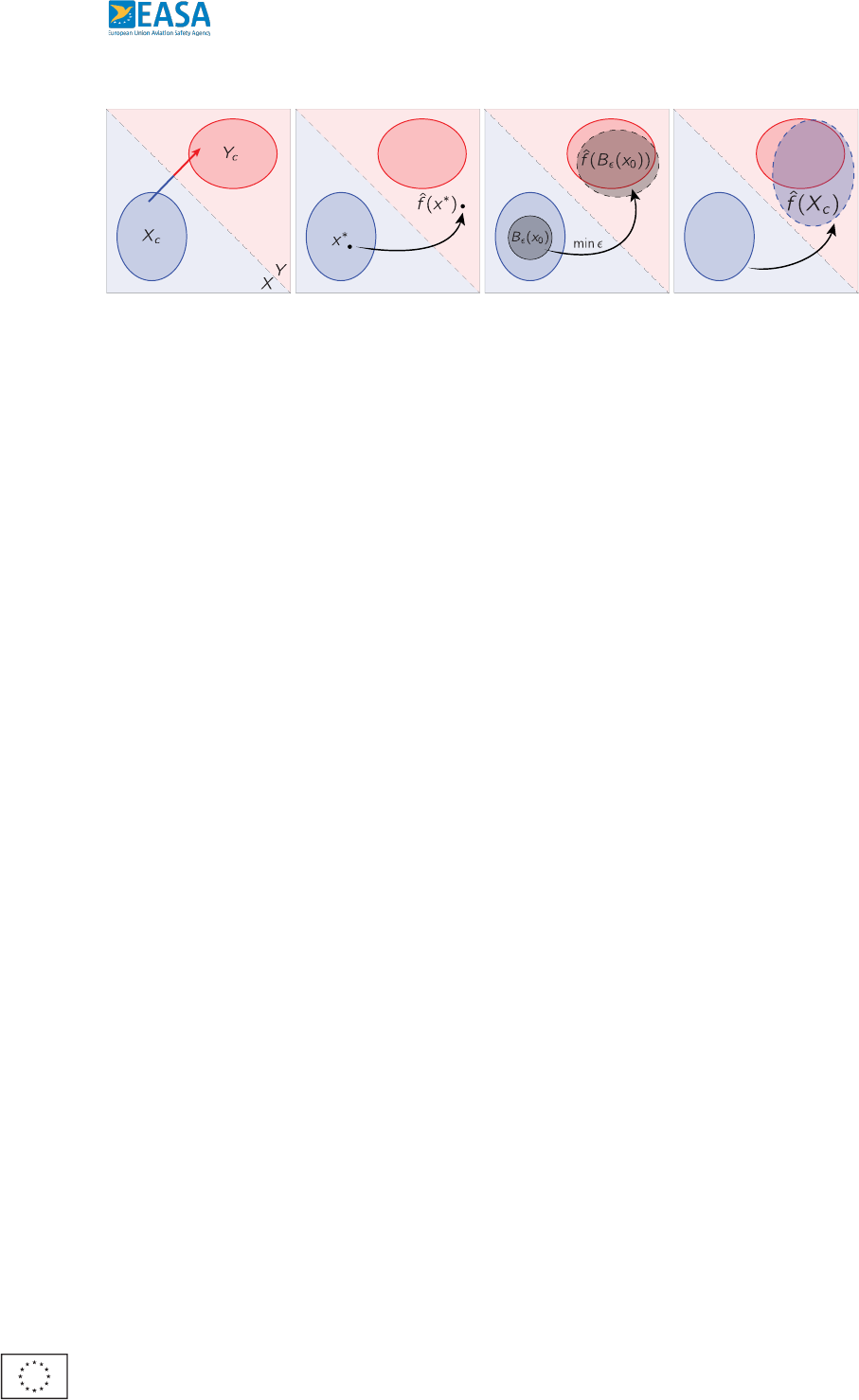

val