OPERATING SYSTEM CONCEPTS

NINTH EDITION

ABRAHAM SILBERSCHATZ

Yale University

PETER BAER GALVIN

Pluribus Networks

GREG GAGNE

Westminster College

Vice President and Executive Publisher    Don Fowley
Executive Editor    Beth Lang Golub
Editorial Assistant    Katherine Willis
Executive Marketing Manager    Christopher Ruel
Senior Production Editor    Ken Santor
Cover and title page illustrations    Susan Cyr
Cover Designer    Madelyn Lesure
Text Designer    Judy Allan

This book was set in Palatino by the author using LaTeX and printed and bound by
Courier-Kendallville. The cover was printed by Courier.

Copyright © 2013, 2012, 2008 John Wiley & Sons, Inc. All rights reserved.

No part of this publication may be reproduced, stored in a retrieval system or
transmitted in any form or by any means, electronic, mechanical, photocopying,
recording, scanning or otherwise, except as permitted under Sections 107 or 108
of the 1976 United States Copyright Act, without either the prior written
permission of the Publisher, or authorization through payment of the
appropriate per-copy fee to the Copyright Clearance Center, Inc. 222 Rosewood
Drive, Danvers, MA 01923, (978)750-8400, fax (978)750-4470. Requests to the
Publisher for permission should be addressed to the Permissions Department,
John Wiley & Sons, Inc., 111 River Street, Hoboken, NJ

Evaluation copies are provided to qualified academics and professionals for
review purposes only, for use in their courses during the next academic year.
These copies are licensed and may not be sold or transferred to a third party.
Upon completion of the review period, please return the evaluation copy to
Wiley. Return instructions and a free-of-charge return shipping label are
available at www.wiley.com/go/evalreturn. Outside of the United States, please
contact your local representative.

Founded in 1807, John Wiley & Sons, Inc. has been a valued source of knowledge
and understanding for more than 200 years, helping people around the world meet
their needs and fulfill their aspirations. Our company is built on a foundation
of principles that include responsibility to the communities we serve and where
we live and work. In 2008, we launched a Corporate Citizenship Initiative, a
global effort to address the environmental, social, economic, and ethical
challenges we face in our business. Among the issues we are addressing are
carbon impact, paper specifications and procurement, ethical conduct within our
business and among our vendors, and community and charitable support. For more
information, please visit our website: www.wiley.com/go/citizenship.

ISBN: 978-1-118-06333-0
ISBN BRV: 978-1-118-12938-8

Printed in the United States of America

10 9 8 7 6 5 4 3 2 1

To my children, Lemor, Sivan, and Aaron

and my Nicolette

Avi Silberschatz

To Brendan and Ellen,

and Barbara, Anne and Harold, and Walter and Rebecca

Peter Baer Galvin

To my Mom and Dad,

Greg Gagne

Preface

Operating systems are an essential part of any computer system. Similarly,

a course on operating systems is an essential part of any computer science

education. This field is undergoing rapid change, as computers are now

prevalent in virtually every arena of day-to-day life—from embedded devices

in automobiles through the most sophisticated planning tools for governments

and multinational firms. Yet the fundamental concepts remain fairly clear, and

it is on these that we base this book.

We wrote this book as a text for an introductory course in operating systems

at the junior or senior undergraduate level or at the first-year graduate level. We

hope that practitioners will also find it useful. It provides a clear description of

the concepts that underlie operating systems. As prerequisites, we assume that

the reader is familiar with basic data structures, computer organization, and

a high-level language, such as C or Java. The hardware topics required for an

understanding of operating systems are covered in Chapter 1. In that chapter,

we also include an overview of the fundamental data structures that are

prevalent in most operating systems. For code examples, we use predominantly

C, with some Java, but the reader can still understand the algorithms without

a thorough knowledge of these languages.

Concepts are presented using intuitive descriptions. Important theoretical

results are covered, but formal proofs are largely omitted. The bibliographical

notes at the end of each chapter contain pointers to research papers in which

results were first presented and proved, as well as references to recent material

for further reading. In place of proofs, figures and examples are used to suggest

why we should expect the result in question to be true.

The fundamental concepts and algorithms covered in the book are often

based on those used in both commercial and open-source operating systems.

Our aim is to present these concepts and algorithms in a general setting that

is not tied to one particular operating system. However, we present a large

number of examples that pertain to the most popular and the most innovative

operating systems, including Linux, Microsoft Windows, Apple Mac OS X, and
Solaris. We also include examples of both Android and iOS, currently the two
dominant mobile operating systems.

The organization of the text reflects our many years of teaching courses on

operating systems, as well as curriculum guidelines published by the IEEE
Computing Society and the Association for Computing Machinery (ACM).

Consideration was also given to the feedback provided by the reviewers of

the text, along with the many comments and suggestions we received from

readers of our previous editions and from our current and former students.

Content of This Book

The text is organized in eight major parts:

•

Overview. Chapters 1 and 2 explain what operating systems are, what

they do, and how they are designed and constructed. These chapters

discuss what the common features of an operating system are and what an

operating system does for the user. We include coverage of both traditional

PC and server operating systems, as well as operating systems for mobile

devices. The presentation is motivational and explanatory in nature. We

have avoided a discussion of how things are done internally in these

chapters. Therefore, they are suitable for individual readers or for students

in lower-level classes who want to learn what an operating system is

without getting into the details of the internal algorithms.

•

Process management. Chapters 3 through 7 describe the process concept

and concurrency as the heart of modern operating systems. A process

is the unit of work in a system. Such a system consists of a collection

of concurrently executing processes, some of which are operating-system

processes (those that execute system code) and the rest of which are user

processes (those that execute user code). These chapters cover methods for

process scheduling, interprocess communication, process synchronization,

and deadlock handling. Also included is a discussion of threads, as well

as an examination of issues related to multicore systems and parallel

programming.

•

Memory management. Chapters 8 and 9 deal with the management of

main memory during the execution of a process. To improve both the

utilization of the CPU and the speed of its response to its users, the

computer must keep several processes in memory. There are many different

memory-management schemes, reflecting various approaches to memory

management, and the effectiveness of a particular algorithm depends on

the situation.

•

Storage management. Chapters 10 through 13 describe how mass storage,
the file system, and I/O are handled in a modern computer system. The
file system provides the mechanism for on-line storage of and access
to both data and programs. We describe the classic internal algorithms
and structures of storage management and provide a firm practical
understanding of the algorithms used: their properties, advantages, and
disadvantages. Since the I/O devices that attach to a computer vary widely,
the operating system needs to provide a wide range of functionality to
applications to allow them to control all aspects of these devices. We
discuss system I/O in depth, including I/O system design, interfaces, and
internal system structures and functions. In many ways, I/O devices are
the slowest major components of the computer. Because they represent a
performance bottleneck, we also examine performance issues associated
with I/O devices.

•

Protection and security. Chapters 14 and 15 discuss the mechanisms

necessary for the protection and security of computer systems. The

processes in an operating system must be protected from one another’s

activities, and to provide such protection, we must ensure that only

processes that have gained proper authorization from the operating system

can operate on the files, memory, CPU, and other resources of the system.

Protection is a mechanism for controlling the access of programs, processes,

or users to computer-system resources. This mechanism must provide a

means of specifying the controls to be imposed, as well as a means of

enforcement. Security protects the integrity of the information stored in

the system (both data and code), as well as the physical resources of the

system, from unauthorized access, malicious destruction or alteration, and

accidental introduction of inconsistency.

•

Advanced topics. Chapters 16 and 17 discuss virtual machines and

distributed systems. Chapter 16 is a new chapter that provides an overview

of virtual machines and their relationship to contemporary operating

systems. Included is an overview of the hardware and software techniques

that make virtualization possible. Chapter 17 condenses and updates the

three chapters on distributed computing from the previous edition. This

change is meant to make it easier for instructors to cover the material in

the limited time available during a semester and for students to gain an

understanding of the core ideas of distributed computing more quickly.

•

Case studies. Chapters 18 and 19 in the text, along with Appendices A and
B (which are available on http://www.os-book.com), present detailed
case studies of real operating systems, including Linux, Windows 7,
FreeBSD, and Mach. Coverage of both Linux and Windows 7 is presented
throughout this text; however, the case studies provide much more detail.

It is especially interesting to compare and contrast the design of these two

very different systems. Chapter 20 briefly describes a few other influential

operating systems.

The Ninth Edition

As we wrote this Ninth Edition of Operating System Concepts, we were guided

by the recent growth in three fundamental areas that affect operating systems:

1. Multicore systems

2. Mobile computing

3. Virtualization

To emphasize these topics, we have integrated relevant coverage throughout

this new edition—and, in the case of virtualization, have written an entirely

new chapter. Additionally, we have rewritten material in almost every chapter

by bringing older material up to date and removing material that is no longer

interesting or relevant.

We have also made substantial organizational changes. For example, we

have eliminated the chapter on real-time systems and instead have integrated

appropriate coverage of these systems throughout the text. We have reordered

the chapters on storage management and have moved up the presentation

of process synchronization so that it appears before process scheduling. Most

of these organizational changes are based on our experiences while teaching

courses on operating systems.

Below, we provide a brief outline of the major changes to the various

chapters:

•

Chapter 1, Introduction, includes updated coverage of multiprocessor

and multicore systems, as well as a new section on kernel data structures.

Additionally, the coverage of computing environments now includes

mobile systems and cloud computing. We also have incorporated an

overview of real-time systems.

•

Chapter 2, Operating-System Structures, provides new coverage of user

interfaces for mobile devices, including discussions of iOS and Android,
and expanded coverage of Mac OS X as a type of hybrid system.

•

Chapter 3, Processes, now includes coverage of multitasking in mobile

operating systems, support for the multiprocess model in Google’s Chrome

web browser, and zombie and orphan processes in UNIX.

•

Chapter 4, Threads, supplies expanded coverage of parallelism and

Amdahl’s law. It also provides a new section on implicit threading,

including OpenMP and Apple’s Grand Central Dispatch.

•

Chapter 5, Process Synchronization (previously Chapter 6), adds a new

section on mutex locks, as well as coverage of synchronization using
OpenMP and functional languages.

•

Chapter 6, CPU Scheduling (previously Chapter 5), contains new coverage

of the Linux CFS scheduler and Windows user-mode scheduling. Coverage

of real-time scheduling algorithms has also been integrated into this

chapter.

•

Chapter 7, Deadlocks, has no major changes.

•

Chapter 8, Main Memory, includes new coverage of swapping on mobile

systems and Intel 32- and 64-bit architectures. A new section discusses

ARM architecture.

•

Chapter 9, Virtual Memory, updates kernel memory management to

include the Linux SLUB and SLOB memory allocators.

•

Chapter 10, Mass-Storage Structure (previously Chapter 12), adds coverage
of solid-state disks.

•

Chapter 11, File-System Interface (previously Chapter 10), is updated

with information about current technologies.

•

Chapter 12, File-System Implementation (previously Chapter 11), is

updated with coverage of current technologies.

•

Chapter 13, I/O, updates technologies and performance numbers, expands

coverage of synchronous/asynchronous and blocking/nonblocking I/O,
and adds a section on vectored I/O.

•

Chapter 14, Protection, has no major changes.

•

Chapter 15, Security, has a revised cryptography section with modern

notation and an improved explanation of various encryption methods and

their uses. The chapter also includes new coverage of Windows 7 security.

•

Chapter 16, Virtual Machines, is a new chapter that provides an overview

of virtualization and how it relates to contemporary operating systems.

•

Chapter 17, Distributed Systems, is a new chapter that combines and

updates a selection of materials from previous Chapters 16, 17, and 18.

•

Chapter 18, The Linux System (previously Chapter 21), has been updated

to cover the Linux 3.2 kernel.

•

Chapter 19, Windows 7, is a new chapter presenting a case study of

Windows 7.

•

Chapter 20, Influential Operating Systems (previously Chapter 23), has

no major changes.

Programming Environments

This book uses examples of many real-world operating systems to illustrate

fundamental operating-system concepts. Particular attention is paid to Linux

and Microsoft Windows, but we also refer to various versions of UNIX
(including Solaris, BSD, and Mac OS X).

The text also provides several example programs written in C and

Java. These programs are intended to run in the following programming

environments:

•

POSIX. POSIX (which stands for Portable Operating System Interface)
represents a set of standards implemented primarily for UNIX-based operating
systems. Although Windows systems can also run certain POSIX programs,
our coverage of POSIX focuses on UNIX and Linux systems. POSIX-compliant
systems must implement the POSIX core standard (POSIX.1); Linux, Solaris,
and Mac OS X are examples of POSIX-compliant systems. POSIX also
defines several extensions to the standards, including real-time extensions
(POSIX.1b) and an extension for a threads library (POSIX.1c, better known
as Pthreads). We provide several programming examples written in C
illustrating the POSIX base API, as well as Pthreads and the extensions for
real-time programming. These example programs were tested on Linux 2.6
and 3.2 systems, Mac OS X 10.7, and Solaris 10 using the gcc 4.0 compiler.

•

Java. Java is a widely used programming language with a rich API and
built-in language support for thread creation and management. Java
programs run on any operating system supporting a Java virtual machine
(or JVM). We illustrate various operating-system and networking concepts
with Java programs tested using the Java 1.6 JVM.

•

Windows systems. The primary programming environment for Windows
systems is the Windows API, which provides a comprehensive set of
functions for managing processes, threads, memory, and peripheral devices.
We supply several C programs illustrating the use of this API. Programs
were tested on systems running Windows XP and Windows 7.

We have chosen these three programming environments because we
believe that they best represent the two most popular operating-system models
(Windows and UNIX/Linux), along with the widely used Java environment.

Most programming examples are written in C, and we expect readers to be

comfortable with this language. Readers familiar with both the C and Java

languages should easily understand most programs provided in this text.

In some instances—such as thread creation—we illustrate a specific

concept using all three programming environments, allowing the reader

to contrast the three different libraries as they address the same task. In

other situations, we may use just one of the APIs to demonstrate a concept.
For example, we illustrate shared memory using just the POSIX API; socket
programming in TCP/IP is highlighted using the Java API.
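
To give a flavor of the thread-creation examples mentioned above, here is a
minimal sketch in C using the Pthreads API. It is not taken from the book's
own programs: the names `sum_task`, `runner`, and `sum_in_thread`, and the
summation task itself, are assumptions chosen only for illustration. The
sketch creates one worker thread, waits for it to finish, and returns the
value the worker computed.

```c
#include <assert.h>
#include <pthread.h>

/* Hypothetical argument/result record shared by creator and worker. */
struct sum_task {
    int upper;   /* input: sum the integers 1..upper */
    long sum;    /* output: written by the worker thread */
};

/* Thread entry point; Pthreads requires the signature void *(*)(void *). */
static void *runner(void *param)
{
    struct sum_task *task = param;

    task->sum = 0;
    for (int i = 1; i <= task->upper; i++)
        task->sum += i;
    return NULL;
}

/*
 * Creates one worker thread, waits for it to finish, and returns its result.
 * Returns -1 if the thread could not be created or joined.
 */
long sum_in_thread(int upper)
{
    assert(upper >= 0);   /* precondition assumed by this sketch */

    struct sum_task task = { .upper = upper, .sum = 0 };
    pthread_t tid;

    /* NULL selects the default thread attributes. */
    if (pthread_create(&tid, NULL, runner, &task) != 0)
        return -1;

    /* Joining guarantees the worker has finished writing task.sum. */
    if (pthread_join(tid, NULL) != 0)
        return -1;

    return task.sum;
}
```

A caller would invoke `sum_in_thread` from its own `main` function; with gcc,
such a program is typically built with the `-pthread` option. Passing a
pointer to a stack-allocated record is safe here only because the creating
thread joins the worker before the record goes out of scope.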

Linux Virtual Machine

To help students gain a better understanding of the Linux system, we

provide a Linux virtual machine, including the Linux source code,

that is available for download from the website supporting this
text (http://www.os-book.com). This virtual machine also includes a

gcc development environment with compilers and editors. Most of the

programming assignments in the book can be completed on this virtual

machine, with the exception of assignments that require Java or the Windows

API.

We also provide three programming assignments that modify the Linux

kernel through kernel modules:

1. Adding a basic kernel module to the Linux kernel.

2. Adding a kernel module that uses various kernel data structures.

3. Adding a kernel module that iterates over tasks in a running Linux

system.

Over time, we intend to add more kernel module assignments to
the supporting website.

Supporting Website

When you visit the website supporting this text at http://www.os-book.com,

you can download the following resources:

•

Linux virtual machine

•

C and Java source code

•

Sample syllabi

•

Set of PowerPoint slides

•

Set of figures and illustrations

•

FreeBSD and Mach case studies

•

Solutions to practice exercises

•

Study guide for students

•

Errata

Notes to Instructors

On the website for this text, we provide several sample syllabi that suggest

various approaches for using the text in both introductory and advanced

courses. As a general rule, we encourage instructors to progress sequentially

through the chapters, as this strategy provides the most thorough study of

operating systems. However, by using the sample syllabi, an instructor can

select a different ordering of chapters (or subsections of chapters).

In this edition, we have added over sixty new written exercises and over

twenty new programming problems and projects. Most of the new programming
assignments involve processes, threads, process synchronization, and

memory management. Some involve adding kernel modules to the Linux

system, which requires using either the Linux virtual machine that accompanies

this text or another suitable Linux distribution.

Solutions to written exercises and programming assignments are available

to instructors who have adopted this text for their operating-system class. To

obtain these restricted supplements, contact your local John Wiley & Sons

sales representative. You can find your Wiley representative by going to

http://www.wiley.com/college and clicking “Who’s my rep?”

Notes to Students

We encourage you to take advantage of the practice exercises that appear at

the end of each chapter. Solutions to the practice exercises are available for

download from the supporting website, http://www.os-book.com. We also

encourage you to read through the study guide, which was prepared by one of

our students. Finally, for students who are unfamiliar with UNIX and Linux

systems, we recommend that you download and install the Linux virtual

machine that we include on the supporting website. Not only will this provide

you with a new computing experience, but the open-source nature of Linux

will allow you to easily examine the inner details of this popular operating

system.

We wish you the very best of luck in your study of operating systems.

Contacting Us

We have endeavored to eliminate typos, bugs, and the like from the text. But,

as in new releases of software, bugs almost surely remain. An up-to-date errata

list is accessible from the book’s website. We would be grateful if you would

notify us of any errors or omissions in the book that are not on the current list

of errata.

We would be glad to receive suggestions on improvements to the book.

We also welcome any contributions to the book website that could be of

use to other readers, such as programming exercises, project suggestions,

on-line labs and tutorials, and teaching tips. E-mail should be addressed to

Acknowledgments

This book is derived from the previous editions, the first three of which

were coauthored by James Peterson. Others who helped us with previous

editions include Hamid Arabnia, Rida Bazzi, Randy Bentson, David Black,

Joseph Boykin, Jeff Brumfield, Gael Buckley, Roy Campbell, P. C. Capon, John

Carpenter, Gil Carrick, Thomas Casavant, Bart Childs, Ajoy Kumar Datta,

Joe Deck, Sudarshan K. Dhall, Thomas Doeppner, Caleb Drake, M. Raşit
Eskicioğlu, Hans Flack, Robert Fowler, G. Scott Graham, Richard Guy, Max

Hailperin, Rebecca Hartman, Wayne Hathaway, Christopher Haynes, Don

Heller, Bruce Hillyer, Mark Holliday, Dean Hougen, Michael Huang, Ahmed

Kamel, Morty Kewstel, Richard Kieburtz, Carol Kroll, Morty Kwestel, Thomas

LeBlanc, John Leggett, Jerrold Leichter, Ted Leung, Gary Lippman, Carolyn

Miller, Michael Molloy, Euripides Montagne, Yoichi Muraoka, Jim M. Ng,
Banu Özden, Ed Posnak, Boris Putanec, Charles Qualline, John Quarterman,

Mike Reiter, Gustavo Rodriguez-Rivera, Carolyn J. C. Schauble, Thomas P.

Skinner, Yannis Smaragdakis, Jesse St. Laurent, John Stankovic, Adam Stauffer,

Steven Stepanek, John Sterling, Hal Stern, Louis Stevens, Pete Thomas, David

Umbaugh, Steve Vinoski, Tommy Wagner, Larry L. Wear, John Werth, James

M. Westall, J. S. Weston, and Yang Xiang.

Robert Love updated both Chapter 18 and the Linux coverage throughout

the text, as well as answering many of our Android-related questions. Chapter

19 was written by Dave Probert and was derived from Chapter 22 of the Eighth

Edition of Operating System Concepts. Jonathan Katz contributed to Chapter

15. Richard West provided input into Chapter 16. Salahuddin Khan updated

Section 15.9 to provide new coverage of Windows 7 security.

Parts of Chapter 17 were derived from a paper by Levy and Silberschatz

[1990]. Chapter 18 was derived from an unpublished manuscript by Stephen

Tweedie. Cliff Martin helped with updating the UNIX appendix to cover
FreeBSD. Some of the exercises and accompanying solutions were supplied by
Arvind Krishnamurthy. Andrew DeNicola prepared the student study guide
that is available on our website. Some of the slides were prepared by
Marilyn Turnamian.

Mike Shapiro, Bryan Cantrill, and Jim Mauro answered several Solaris-

related questions, and Bryan Cantrill from Sun Microsystems helped with the

ZFS coverage. Josh Dees and Rob Reynolds contributed coverage of Microsoft’s
.NET. The project for POSIX message queues was contributed by John Trono of

Saint Michael’s College in Colchester, Vermont.

Judi Paige helped with generating figures and presentation of slides.

Thomas Gagne prepared new artwork for this edition. Owen Galvin helped

copy-edit Chapter 16. Mark Wogahn has made sure that the software to produce

this book (LaTeX and fonts) works properly. Ranjan Kumar Meher rewrote some
of the LaTeX software used in the production of this new text.

Our Executive Editor, Beth Lang Golub, provided expert guidance as we

prepared this edition. She was assisted by Katherine Willis, who managed

many details of the project smoothly. The Senior Production Editor, Ken Santor,

was instrumental in handling all the production details.

The cover illustrator was Susan Cyr, and the cover designer was Madelyn

Lesure. Beverly Peavler copy-edited the manuscript. The freelance proofreader

was Katrina Avery; the freelance indexer was WordCo, Inc.

Abraham Silberschatz, New Haven, CT, 2012

Peter Baer Galvin, Boston, MA, 2012

Greg Gagne, Salt Lake City, UT, 2012

Contents

PART ONE OVERVIEW

Chapter 1 Introduction

1.1 What Operating Systems Do
1.2 Computer-System Organization
1.3 Computer-System Architecture
1.4 Operating-System Structure
1.5 Operating-System Operations
1.6 Process Management
1.7 Memory Management
1.8 Storage Management
1.9 Protection and Security
1.10 Kernel Data Structures
1.11 Computing Environments
1.12 Open-Source Operating Systems
1.13 Summary
Exercises
Bibliographical Notes

Chapter 2 Operating-System Structures

2.1 Operating-System Services
2.2 User and Operating-System Interface
2.3 System Calls
2.4 Types of System Calls
2.5 System Programs
2.6 Operating-System Design and Implementation
2.7 Operating-System Structure
2.8 Operating-System Debugging
2.9 Operating-System Generation
2.10 System Boot
2.11 Summary
Exercises
Bibliographical Notes

PART TWO PROCESS MANAGEMENT

Chapter 3 Processes

3.1 Process Concept
3.2 Process Scheduling
3.3 Operations on Processes
3.4 Interprocess Communication
3.5 Examples of IPC Systems
3.6 Communication in Client–Server Systems
3.7 Summary
Exercises
Bibliographical Notes

Chapter 4 Threads

4.1 Overview
4.2 Multicore Programming
4.3 Multithreading Models
4.4 Thread Libraries
4.5 Implicit Threading
4.6 Threading Issues
4.7 Operating-System Examples
4.8 Summary
Exercises
Bibliographical Notes

Chapter 5 Process Synchronization

5.1 Background
5.2 The Critical-Section Problem
5.3 Peterson’s Solution
5.4 Synchronization Hardware
5.5 Mutex Locks
5.6 Semaphores
5.7 Classic Problems of Synchronization
5.8 Monitors
5.9 Synchronization Examples
5.10 Alternative Approaches
5.11 Summary
Exercises
Bibliographical Notes

Chapter 6 CPU Scheduling

6.1 Basic Concepts
6.2 Scheduling Criteria
6.3 Scheduling Algorithms
6.4 Thread Scheduling
6.5 Multiple-Processor Scheduling
6.6 Real-Time CPU Scheduling
6.7 Operating-System Examples
6.8 Algorithm Evaluation
6.9 Summary
Exercises
Bibliographical Notes

Chapter 7 Deadlocks

7.1 System Model
7.2 Deadlock Characterization
7.3 Methods for Handling Deadlocks
7.4 Deadlock Prevention
7.5 Deadlock Avoidance
7.6 Deadlock Detection
7.7 Recovery from Deadlock
7.8 Summary
Exercises
Bibliographical Notes

PART THREE MEMORY MANAGEMENT

Chapter 8 Main Memory

8.1 Background
8.2 Swapping
8.3 Contiguous Memory Allocation
8.4 Segmentation
8.5 Paging
8.6 Structure of the Page Table
8.7 Example: Intel 32 and 64-bit Architectures
8.8 Example: ARM Architecture
8.9 Summary
Exercises
Bibliographical Notes

Chapter 9 Virtual Memory

9.1 Background
9.2 Demand Paging
9.3 Copy-on-Write
9.4 Page Replacement
9.5 Allocation of Frames
9.6 Thrashing
9.7 Memory-Mapped Files
9.8 Allocating Kernel Memory
9.9 Other Considerations
9.10 Operating-System Examples
9.11 Summary
Exercises
Bibliographical Notes

PART FOUR STORAGE MANAGEMENT

Chapter 10 Mass-Storage Structure

10.1 Overview of Mass-Storage Structure
10.2 Disk Structure
10.3 Disk Attachment
10.4 Disk Scheduling
10.5 Disk Management
10.6 Swap-Space Management
10.7 RAID Structure
10.8 Stable-Storage Implementation
10.9 Summary
Exercises
Bibliographical Notes

Chapter 11 File-System Interface

11.1 File Concept
11.2 Access Methods
11.3 Directory and Disk Structure
11.4 File-System Mounting
11.5 File Sharing
11.6 Protection
11.7 Summary
Exercises
Bibliographical Notes

Chapter 12 File-System Implementation

12.1 File-System Structure
12.2 File-System Implementation
12.3 Directory Implementation
12.4 Allocation Methods
12.5 Free-Space Management
12.6 Efficiency and Performance
12.7 Recovery
12.8 NFS
12.9 Example: The WAFL File System
12.10 Summary
Exercises
Bibliographical Notes

Chapter 13 I/O Systems

13.1 Overview
13.2 I/O Hardware
13.3 Application I/O Interface
13.4 Kernel I/O Subsystem
13.5 Transforming I/O Requests to Hardware Operations
13.6 STREAMS
13.7 Performance
13.8 Summary
Exercises
Bibliographical Notes

PART FIVE PROTECTION AND SECURITY

Chapter 14 Protection

14.1 Goals of Protection
14.2 Principles of Protection
14.3 Domain of Protection
14.4 Access Matrix
14.5 Implementation of the Access Matrix
14.6 Access Control
14.7 Revocation of Access Rights
14.8 Capability-Based Systems
14.9 Language-Based Protection
14.10 Summary
Exercises
Bibliographical Notes

Chapter 15 Security

15.1 The Security Problem
15.2 Program Threats
15.3 System and Network Threats
15.4 Cryptography as a Security Tool
15.5 User Authentication
15.6 Implementing Security Defenses
15.7 Firewalling to Protect Systems and Networks
15.8 Computer-Security Classifications
15.9 An Example: Windows 7
15.10 Summary
Exercises
Bibliographical Notes

PART SIX ADVANCED TOPICS

Chapter 16 Virtual Machines

16.1 Overview
16.2 History
16.3 Benefits and Features
16.4 Building Blocks
16.5 Types of Virtual Machines and Their Implementations
16.6 Virtualization and Operating-System Components
16.7 Examples
16.8 Summary
Exercises
Bibliographical Notes

Chapter 17 Distributed Systems

17.1 Advantages of Distributed Systems
17.2 Types of Network-based Operating Systems
17.3 Network Structure
17.4 Communication Structure
17.5 Communication Protocols
17.6 An Example: TCP/IP
17.7 Robustness
17.8 Design Issues
17.9 Distributed File Systems
17.10 Summary
Exercises
Bibliographical Notes

PART SEVEN CASE STUDIES

Chapter 18 The Linux System

18.1 Linux History
18.2 Design Principles
18.3 Kernel Modules
18.4 Process Management
18.5 Scheduling
18.6 Memory Management
18.7 File Systems
18.8 Input and Output
18.9 Interprocess Communication
18.10 Network Structure
18.11 Security
18.12 Summary
Exercises
Bibliographical Notes

Chapter 19 Windows 7

19.1 History 829

19.2 Design Principles 831

19.3 System Components 838

19.4 Terminal Services and Fast User Switching 862

19.5 File System 863

19.6 Networking 869

19.7 Programmer Interface 874

19.8 Summary 883

Exercises 883

Bibliographical Notes 885

Chapter 20 Influential Operating Systems

20.1 Feature Migration 887

20.2 Early Systems 888

20.3 Atlas 895

20.4 XDS-940 896

20.5 THE 897

20.6 RC 4000 897

20.7 CTSS 898

20.8 MULTICS 899

20.9 IBM OS/360 899

20.10 TOPS-20 901

20.11 CP/M and MS/DOS 901

20.12 Macintosh Operating System and Windows 902

20.13 Mach 902

20.14 Other Systems 904

Exercises 904

Bibliographical Notes 904

PART EIGHT APPENDICES

Appendix A BSD UNIX

A.1 UNIX History A1

A.2 Design Principles A6

A.3 Programmer Interface A8

A.4 User Interface A15

A.5 Process Management A18

A.6 Memory Management A22

A.7 File System A24

A.8 I/O System A32

A.9 Interprocess Communication A36

A.10 Summary A40

Exercises A41

Bibliographical Notes A42


Appendix B The Mach System

B.1 History of the Mach System B1

B.2 Design Principles B3

B.3 System Components B4

B.4 Process Management B7

B.5 Interprocess Communication B13

B.6 Memory Management B18

B.7 Programmer Interface B23

B.8 Summary B24

Exercises B25

Bibliographical Notes B26

Part One

Overview

An operating system acts as an intermediary between the user of a

computer and the computer hardware. The purpose of an operating

system is to provide an environment in which a user can execute

programs in a convenient and efficient manner.

An operating system is software that manages the computer hardware.

The hardware must provide appropriate mechanisms to ensure the

correct operation of the computer system and to prevent user programs

from interfering with the proper operation of the system.

Internally, operating systems vary greatly in their makeup, since they

are organized along many different lines. The design of a new operating

system is a major task. It is important that the goals of the system be well

defined before the design begins. These goals form the basis for choices

among various algorithms and strategies.

Because an operating system is large and complex, it must be created

piece by piece. Each of these pieces should be a well-delineated portion

of the system, with carefully defined inputs, outputs, and functions.

Chapter 1

Introduction

An operating system is a program that manages a computer’s hardware. It

also provides a basis for application programs and acts as an intermediary

between the computer user and the computer hardware. An amazing aspect of

operating systems is how they vary in accomplishing these tasks. Mainframe

operating systems are designed primarily to optimize utilization of hardware.

Personal computer (PC) operating systems support complex games, business

applications, and everything in between. Operating systems for mobile

computers provide an environment in which a user can easily interface with the

computer to execute programs. Thus, some operating systems are designed to

be convenient, others to be efficient, and others to be some combination of the

two.

Before we can explore the details of computer system operation, we need to

know something about system structure. We thus discuss the basic functions

of system startup, I/O, and storage early in this chapter. We also describe

the basic computer architecture that makes it possible to write a functional

operating system.

Because an operating system is large and complex, it must be created

piece by piece. Each of these pieces should be a well-delineated portion of the

system, with carefully defined inputs, outputs, and functions. In this chapter,

we provide a general overview of the major components of a contemporary

computer system as well as the functions provided by the operating system.

Additionally, we cover several other topics to help set the stage for the

remainder of this text: data structures used in operating systems, computing

environments, and open-source operating systems.

CHAPTER OBJECTIVES

• To describe the basic organization of computer systems.

• To provide a grand tour of the major components of operating systems.

• To give an overview of the many types of computing environments.

• To explore several open-source operating systems.

3

4 Chapter 1 Introduction

user

1

user

2

user

3

computer hardware

operating system

system and application programs

compiler assembler text editor database

system

user

n

…

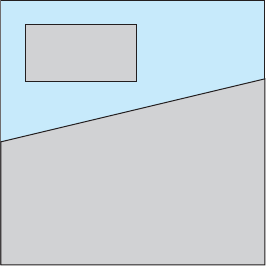

…

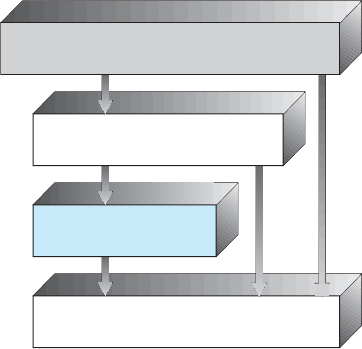

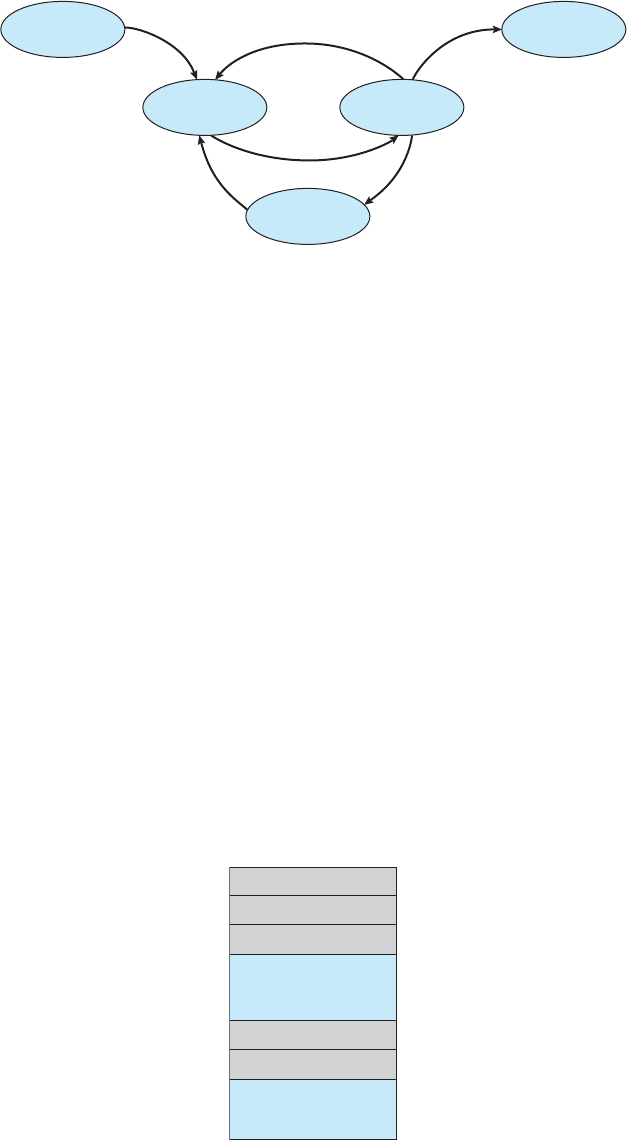

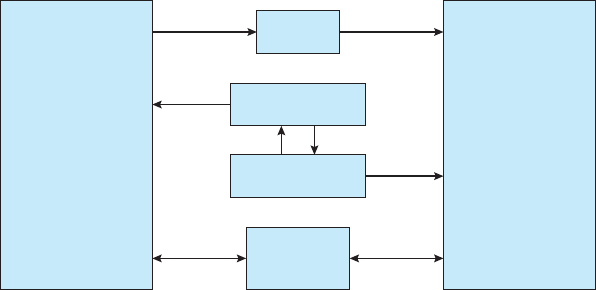

Figure 1.1 Abstract view of the components of a computer system.

1.1 What Operating Systems Do

We begin our discussion by looking at the operating system’s role in the

overall computer system. A computer system can be divided roughly into four

components: the hardware, the operating system, the application programs,

and the users (Figure 1.1).

The hardware, consisting of the central processing unit (CPU), the memory, and the

input/output (I/O) devices, provides the basic computing resources for the

system. The application programs—such as word processors, spreadsheets,

compilers, and Web browsers—define the ways in which these resources are

used to solve users’ computing problems. The operating system controls the

hardware and coordinates its use among the various application programs for

the various users.

We can also view a computer system as consisting of hardware, software,

and data. The operating system provides the means for proper use of these

resources in the operation of the computer system. An operating system is

similar to a government. Like a government, it performs no useful function by

itself. It simply provides an environment within which other programs can do

useful work.

To understand more fully the operating system’s role, we next explore

operating systems from two viewpoints: that of the user and that of the system.

1.1.1 User View

The user’s view of the computer varies according to the interface being

used. Most computer users sit in front of a PC, consisting of a monitor,

keyboard, mouse, and system unit. Such a system is designed for one user


to monopolize its resources. The goal is to maximize the work (or play) that

the user is performing. In this case, the operating system is designed mostly

for ease of use, with some attention paid to performance and none paid

to resource utilization—how various hardware and software resources are

shared. Performance is, of course, important to the user; but such systems

are optimized for the single-user experience rather than the requirements of

multiple users.

In other cases, a user sits at a terminal connected to a mainframe or a

minicomputer. Other users are accessing the same computer through other

terminals. These users share resources and may exchange information. The

operating system in such cases is designed to maximize resource utilization—

to assure that all available CPU time, memory, and I/O are used efficiently and

that no individual user takes more than her fair share.

In still other cases, users sit at workstations connected to networks of

other workstations and servers. These users have dedicated resources at

their disposal, but they also share resources such as networking and servers,

including file, compute, and print servers. Therefore, their operating system is

designed to compromise between individual usability and resource utilization.

Recently, many varieties of mobile computers, such as smartphones and

tablets, have come into fashion. Most mobile computers are standalone units for

individual users. Quite often, they are connected to networks through cellular

or other wireless technologies. Increasingly, these mobile devices are replacing

desktop and laptop computers for people who are primarily interested in

using computers for e-mail and web browsing. The user interface for mobile

computers generally features a touch screen, where the user interacts with the

system by pressing and swiping fingers across the screen rather than using a

physical keyboard and mouse.

Some computers have little or no user view. For example, embedded

computers in home devices and automobiles may have numeric keypads and

may turn indicator lights on or off to show status, but they and their operating

systems are designed primarily to run without user intervention.

1.1.2 System View

From the computer’s point of view, the operating system is the program

most intimately involved with the hardware. In this context, we can view

an operating system as a resource allocator. A computer system has many

resources that may be required to solve a problem: CPU time, memory space,

file-storage space, I/O devices, and so on. The operating system acts as the

manager of these resources. Facing numerous and possibly conflicting requests

for resources, the operating system must decide how to allocate them to specific

programs and users so that it can operate the computer system efficiently and

fairly. As we have seen, resource allocation is especially important where many

users access the same mainframe or minicomputer.

A slightly different view of an operating system emphasizes the need to

control the various I/O devices and user programs. An operating system is a

control program. A control program manages the execution of user programs

to prevent errors and improper use of the computer. It is especially concerned

with the operation and control of I/O devices.


1.1.3 Defining Operating Systems

By now, you can probably see that the term operating system covers many roles

and functions. That is the case, at least in part, because of the myriad designs

and uses of computers. Computers are present within toasters, cars, ships,

spacecraft, homes, and businesses. They are the basis for game machines, music

players, cable TV tuners, and industrial control systems. Although computers

have a relatively short history, they have evolved rapidly. Computing started

as an experiment to determine what could be done and quickly moved to

fixed-purpose systems for military uses, such as code breaking and trajectory

plotting, and governmental uses, such as census calculation. Those early

computers evolved into general-purpose, multifunction mainframes, and

that’s when operating systems were born. In the 1960s, Moore’s Law predicted

that the number of transistors on an integrated circuit would double every

eighteen months, and that prediction has held true. Computers gained in

functionality and shrank in size, leading to a vast number of uses and a vast

number and variety of operating systems. (See Chapter 20 for more details on

the history of operating systems.)

How, then, can we define what an operating system is? In general, we have

no completely adequate definition of an operating system. Operating systems

exist because they offer a reasonable way to solve the problem of creating a

usable computing system. The fundamental goal of computer systems is to

execute user programs and to make solving user problems easier. Computer

hardware is constructed toward this goal. Since bare hardware alone is not

particularly easy to use, application programs are developed. These programs

require certain common operations, such as those controlling the I/O devices.

The common functions of controlling and allocating resources are then brought

together into one piece of software: the operating system.

In addition, we have no universally accepted definition of what is part of the

operating system. A simple viewpoint is that it includes everything a vendor

ships when you order “the operating system.” The features included, however,

vary greatly across systems. Some systems take up less than a megabyte of

space and lack even a full-screen editor, whereas others require gigabytes of

space and are based entirely on graphical windowing systems. A more common

definition, and the one that we usually follow, is that the operating system

is the one program running at all times on the computer—usually called

the kernel. (Along with the kernel, there are two other types of programs:

system programs, which are associated with the operating system but are not

necessarily part of the kernel, and application programs, which include all

programs not associated with the operation of the system.)

The matter of what constitutes an operating system became increasingly

important as personal computers became more widespread and operating

systems grew increasingly sophisticated. In 1998, the United States Department

of Justice filed suit against Microsoft, in essence claiming that Microsoft

included too much functionality in its operating systems and thus prevented

application vendors from competing. (For example, a Web browser was an

integral part of the operating systems.) As a result, Microsoft was found guilty

of using its operating-system monopoly to limit competition.

Today, however, if we look at operating systems for mobile devices, we

see that once again the number of features constituting the operating system


is increasing. Mobile operating systems often include not only a core kernel

but also middleware—a set of software frameworks that provide additional

services to application developers. For example, each of the two most

prominent mobile operating systems (Apple’s iOS and Google’s Android) features

a core kernel along with middleware that supports databases, multimedia, and

graphics (to name only a few).

1.2 Computer-System Organization

Before we can explore the details of how computer systems operate, we need

general knowledge of the structure of a computer system. In this section,

we look at several parts of this structure. The section is mostly concerned

with computer-system organization, so you can skim or skip it if you already

understand the concepts.

1.2.1 Computer-System Operation

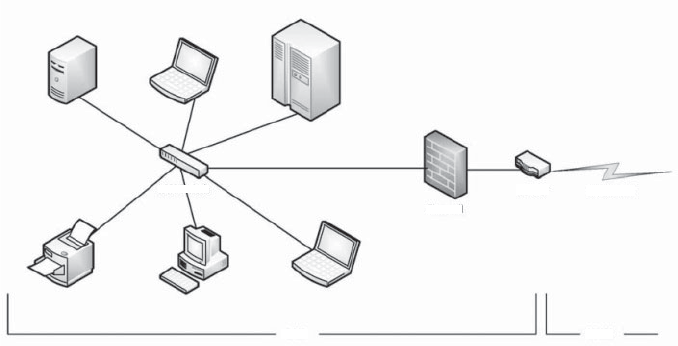

Amoderngeneral-purposecomputersystemconsistsofoneormoreCPUs

and a number of device controllers connected through a common bus that

provides access to shared memory (Figure 1.2). Each device controller is in

charge of a specific type of device (for example, disk drives, audio devices,

or video displays). The CPU and the device controllers can execute in parallel,

competing for memory cycles. To ensure orderly access to the shared memory,

a memory controller synchronizes access to the memory.

For a computer to start running (for instance, when it is powered up or

rebooted), it needs to have an initial program to run. This initial program,

or bootstrap program, tends to be simple. Typically, it is stored within

the computer hardware in read-only memory (ROM) or electrically erasable

programmable read-only memory (EEPROM), known by the general term

firmware. It initializes all aspects of the system, from CPU registers to device

controllers to memory contents. The bootstrap program must know how to load

the operating system and how to start executing that system. To accomplish

USB controller

keyboard

printer

mouse

monitor

disks

graphics

adapter

disk

controller

memory

CPU

on-line

Figure 1.2 Amoderncomputersystem.

8 Chapter 1 Introduction

user

process

executing

CPU

I/O interrupt

processing

I/O

request

transfer

done

I/O

request

transfer

done

I/O

device

idle

transferring

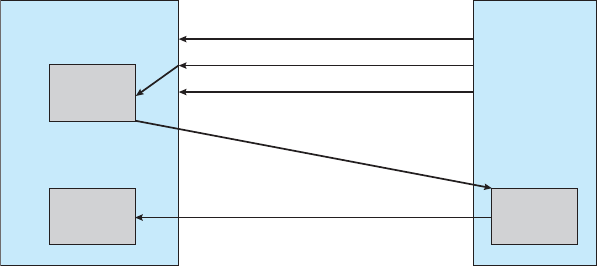

Figure 1.3 Interrupt timeline for a single process doing output.

this goal, the bootstrap program must locate the operating-system kernel and

load it into memory.

Once the kernel is loaded and executing, it can start providing services to

the system and its users. Some services are provided outside of the kernel, by

system programs that are loaded into memory at boot time to become system

processes, or system daemons, that run the entire time the kernel is running.

On UNIX, the first system process is “init,” and it starts many other daemons.

Once this phase is complete, the system is fully booted, and the system waits

for some event to occur.

The occurrence of an event is usually signaled by an interrupt from either

the hardware or the software. Hardware may trigger an interrupt at any time

by sending a signal to the CPU, usually by way of the system bus. Software

may trigger an interrupt by executing a special operation called a system call

(also called a monitor call).

When the CPU is interrupted, it stops what it is doing and immediately

transfers execution to a fixed location. The fixed location usually contains

the starting address where the service routine for the interrupt is located.

The interrupt service routine executes; on completion, the CPU resumes the

interrupted computation. A timeline of this operation is shown in Figure 1.3.

Interrupts are an important part of a computer architecture. Each computer

design has its own interrupt mechanism, but several functions are common.

The interrupt must transfer control to the appropriate interrupt service routine.

The straightforward method for handling this transfer would be to invoke

a generic routine to examine the interrupt information. The routine, in turn,

would call the interrupt-specific handler. However, interrupts must be handled

quickly. Since only a predefined number of interrupts is possible, a table of

pointers to interrupt routines can be used instead to provide the necessary

speed. The interrupt routine is called indirectly through the table, with no

intermediate routine needed. Generally, the table of pointers is stored in low

memory (the first hundred or so locations). These locations hold the addresses

of the interrupt service routines for the various devices. This array, or interrupt

vector, of addresses is then indexed by a unique device number, given with

the interrupt request, to provide the address of the interrupt service routine for

the interrupting device. Operating systems as different as Windows and UNIX

dispatch interrupts in this manner.

STORAGE DEFINITIONS AND NOTATION

The basic unit of computer storage is the bit. A bit can contain one of two

values, 0 and 1. All other storage in a computer is based on collections of bits.

Given enough bits, it is amazing how many things a computer can represent:

numbers, letters, images, movies, sounds, documents, and programs, to name

a few. A byte is 8 bits, and on most computers it is the smallest convenient

chunk of storage. For example, most computers don’t have an instruction to

move a bit but do have one to move a byte. A less common term is word,

which is a given computer architecture’s native unit of data. A word is made

up of one or more bytes. For example, a computer that has 64-bit registers and

64-bit memory addressing typically has 64-bit (8-byte) words. A computer

executes many operations in its native word size rather than a byte at a time.

Computer storage, along with most computer throughput, is generally

measured and manipulated in bytes and collections of bytes. A kilobyte, or

KB, is 1,024 bytes; a megabyte, or MB, is 1,024^2 bytes; a gigabyte, or GB, is

1,024^3 bytes; a terabyte, or TB, is 1,024^4 bytes; and a petabyte, or PB, is 1,024^5

bytes. Computer manufacturers often round off these numbers and say that

a megabyte is 1 million bytes and a gigabyte is 1 billion bytes. Networking

measurements are an exception to this general rule; they are given in bits

(because networks move data a bit at a time).
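
As a rough illustration of the binary units defined in the Storage Definitions and Notation box, the following Python sketch computes each unit as a power of 1,024 and compares the binary gigabyte with the rounded figure of 1 billion bytes that manufacturers often quote. The names `UNITS` and `unit_bytes` are illustrative choices, not terms from the text.

```python
# Binary storage units as powers of 1,024 (illustrative sketch).
UNITS = ["KB", "MB", "GB", "TB", "PB"]

def unit_bytes(unit: str) -> int:
    """Return the number of bytes in a binary unit such as 'MB'."""
    exponent = UNITS.index(unit) + 1      # KB -> 1, MB -> 2, and so on
    return 1024 ** exponent

for unit in UNITS:
    print(f"1 {unit} = {unit_bytes(unit):,} bytes")

# A binary gigabyte versus the rounded "1 billion bytes" figure.
shortfall = unit_bytes("GB") - 10**9
print(f"difference for 1 GB: {shortfall:,} bytes")
```

The gap between the two conventions widens with each larger unit, which is one reason a disk's advertised capacity can look smaller once an operating system reports it in binary units.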

The interrupt architecture must also save the address of the interrupted

instruction. Many old designs simply stored the interrupt address in a

fixed location or in a location indexed by the device number. More recent

architectures store the return address on the system stack. If the interrupt

routine needs to modify the processor state—for instance, by modifying

register values—it must explicitly save the current state and then restore that

state before returning. After the interrupt is serviced, the saved return address

is loaded into the program counter, and the interrupted computation resumes

as though the interrupt had not occurred.
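
The table-driven dispatch described in this section can be sketched in a few lines of Python. This is a toy simulation under stated assumptions, not how any real kernel is written: the device numbers, the handler names, and the `ToyCPU` class are all hypothetical, and a Python list stands in for the system stack.

```python
# Toy model of interrupt dispatch through an interrupt vector.
# All names and device numbers here are hypothetical.

class ToyCPU:
    def __init__(self, vector_size=8):
        self.pc = 0                          # program counter
        self.stack = []                      # stands in for the system stack
        self.vector = [None] * vector_size   # table of service routines
        self.log = []                        # record of serviced interrupts

    def register(self, device_number, handler):
        """Install a service routine in the interrupt vector."""
        self.vector[device_number] = handler

    def interrupt(self, device_number):
        """Save the return address, dispatch via the table, then resume."""
        self.stack.append(self.pc)             # save the interrupted address
        handler = self.vector[device_number]   # indexed lookup, no search
        handler(self)                          # run the service routine
        self.pc = self.stack.pop()             # restore and resume

def keyboard_handler(cpu):
    cpu.pc = 0x100             # pretend the handler lives at this address
    cpu.log.append("keyboard")

def disk_handler(cpu):
    cpu.pc = 0x200
    cpu.log.append("disk")

cpu = ToyCPU()
cpu.register(1, keyboard_handler)
cpu.register(2, disk_handler)
cpu.pc = 42                    # pretend a user program is running here
cpu.interrupt(2)
cpu.interrupt(1)
print(cpu.log, cpu.pc)
```

After both interrupts are serviced, the program counter holds its original value again, which mirrors the statement that the interrupted computation resumes as though the interrupt had not occurred.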

1.2.2 Storage Structure

The CPU can load instructions only from memory, so any programs to run must

be stored there. General-purpose computers run most of their programs from

rewritable memory, called main memory (also called random-access memory,

or RAM). Main memory commonly is implemented in a semiconductor

technology called dynamic random-access memory (DRAM).

Computers use other forms of memory as well. We have already mentioned

read-only memory (ROM) and electrically erasable programmable read-only

memory (EEPROM). Because ROM cannot be changed, only static programs, such

as the bootstrap program described earlier, are stored there. The immutability

of ROM is of use in game cartridges. EEPROM can be changed but cannot

be changed frequently and so contains mostly static programs. For example,

smartphones have EEPROM to store their factory-installed programs.


All forms of memory provide an array of bytes. Each byte has its

own address. Interaction is achieved through a sequence of load or store

instructions to specific memory addresses. The load instruction moves a byte

or word from main memory to an internal register within the CPU, whereas the

store instruction moves the content of a register to main memory. Aside from

explicit loads and stores, the CPU automatically loads instructions from main

memory for execution.

A typical instruction-execution cycle, as executed on a system with a von

Neumann architecture, first fetches an instruction from memory and stores

that instruction in the instruction register. The instruction is then decoded

and may cause operands to be fetched from memory and stored in some

internal register. After the instruction on the operands has been executed, the

result may be stored back in memory. Notice that the memory unit sees only

a stream of memory addresses. It does not know how they are generated (by

the instruction counter, indexing, indirection, literal addresses, or some other

means) or what they are for (instructions or data). Accordingly, we can ignore

how a memory address is generated by a program. We are interested only in

the sequence of memory addresses generated by the running program.
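
The cycle just described can be illustrated with a toy machine. The sketch below assumes a made-up instruction set (`LOAD`, `ADD`, `STORE`, and `HALT`) and a single accumulator register; none of these come from the text. It also records every address sent to memory, to show that the memory unit sees only a stream of addresses.

```python
# Toy von Neumann machine: instructions and data share one memory.
# The instruction set below is invented for illustration.

def run(memory):
    """Execute until HALT; return (memory, list of addresses referenced)."""
    pc = 0                 # instruction counter
    acc = 0                # single internal register (accumulator)
    trace = []             # every address the memory unit sees
    while True:
        trace.append(pc)
        ir = memory[pc]    # fetch into the instruction register
        pc += 1
        op, addr = ir      # decode
        if op == "HALT":
            return memory, trace
        trace.append(addr)         # operand or result address
        if op == "LOAD":
            acc = memory[addr]     # memory -> register
        elif op == "ADD":
            acc += memory[addr]
        elif op == "STORE":
            memory[addr] = acc     # register -> memory
        else:
            raise ValueError(f"unknown opcode {op!r}")

program = [
    ("LOAD", 4),     # acc = memory[4]
    ("ADD", 5),      # acc += memory[5]
    ("STORE", 6),    # memory[6] = acc
    ("HALT", 0),
    2, 3, 0,         # data at addresses 4, 5, and 6
]
memory, trace = run(list(program))
print(memory[6], trace)
```

The trace interleaves instruction fetches with data references, and nothing in it distinguishes one kind of address from the other.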

Ideally, we want the programs and data to reside in main memory

permanently. This arrangement usually is not possible for the following two

reasons:

1. Main memory is usually too small to store all needed programs and data

permanently.

2. Main memory is a volatile storage device that loses its contents when

power is turned off or otherwise lost.

Thus, most computer systems provide secondary storage as an extension of

main memory. The main requirement for secondary storage is that it be able to

hold large quantities of data permanently.

The most common secondary-storage device is a magnetic disk, which

provides storage for both programs and data. Most programs (system and

application) are stored on a disk until they are loaded into memory. Many

programs then use the disk as both the source and the destination of their

processing. Hence, the proper management of disk storage is of central

importance to a computer system, as we discuss in Chapter 10.

In a larger sense, however, the storage structure that we have described—

consisting of registers, main memory, and magnetic disks—is only one of many

possible storage systems. Others include cache memory, CD-ROM, magnetic

tapes, and so on. Each storage system provides the basic functions of storing

a datum and holding that datum until it is retrieved at a later time. The main

differences among the various storage systems lie in speed, cost, size, and

volatility.

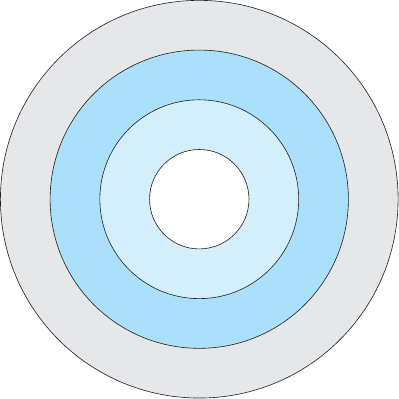

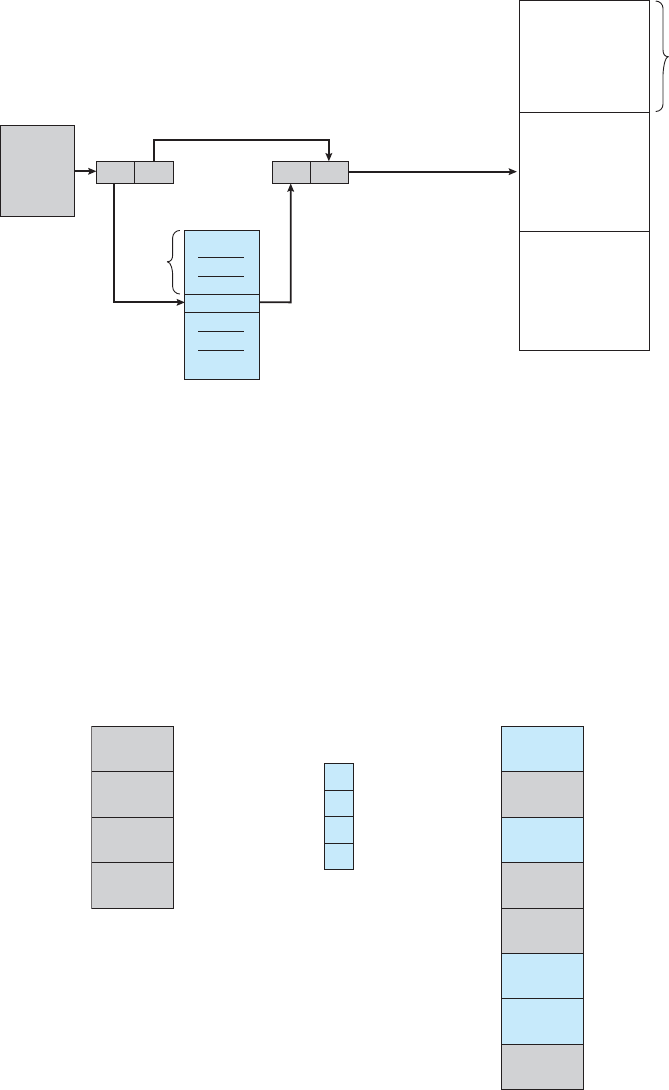

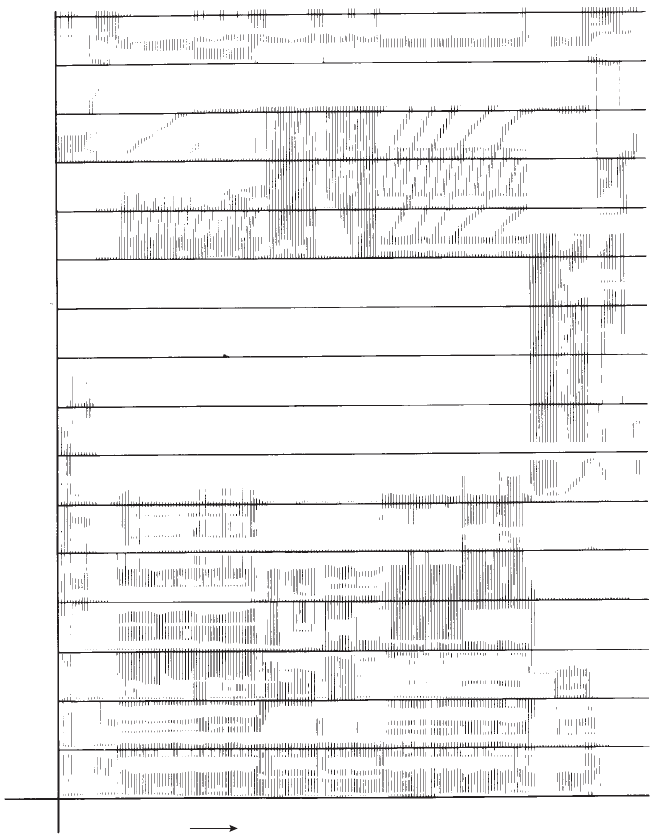

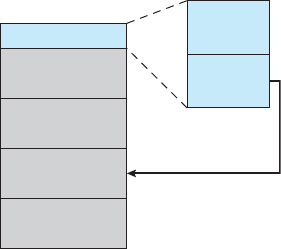

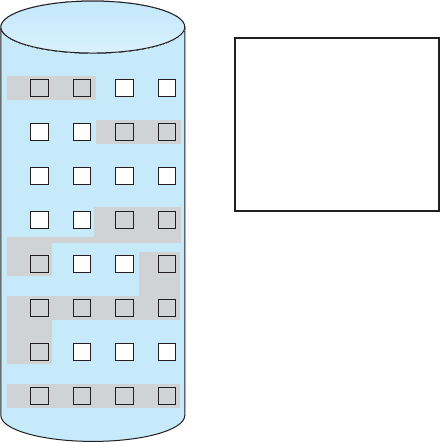

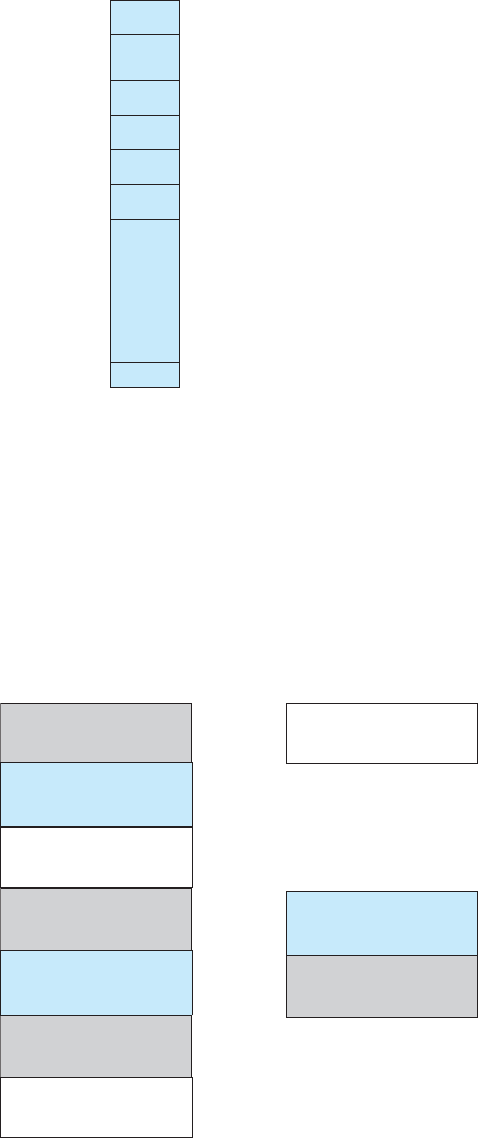

The wide variety of storage systems can be organized in a hierarchy (Figure

1.4) according to speed and cost. The higher levels are expensive, but they are

fast. As we move down the hierarchy, the cost per bit generally decreases,

whereas the access time generally increases. This trade-off is reasonable; if a

given storage system were both faster and less expensive than another—other

properties being the same—then there would be no reason to use the slower,

more expensive memory. In fact, many early storage devices, including paper

tape and core memories, are relegated to museums now that magnetic tape and

semiconductor memory have become faster and cheaper. The top four levels

of memory in Figure 1.4 may be constructed using semiconductor memory.

Figure 1.4 Storage-device hierarchy. [Levels, from fastest and most expensive to slowest and cheapest: registers, cache, main memory, solid-state disk, magnetic disk, optical disk, magnetic tapes.]

In addition to differing in speed and cost, the various storage systems are

either volatile or nonvolatile. As mentioned earlier, volatile storage loses its

contents when the power to the device is removed. In the absence of expensive

battery and generator backup systems, data must be written to nonvolatile

storage for safekeeping. In the hierarchy shown in Figure 1.4, the storage

systems above the solid-state disk are volatile, whereas those including the

solid-state disk and below are nonvolatile.

Solid-state disks have several variants but in general are faster than

magnetic disks and are nonvolatile. One type of solid-state disk stores data in a

large

DRAM array during normal operation but also contains a hidden magnetic

hard disk and a battery for backup power. If external power is interrupted, this

solid-state disk’s controller copies the data from

RAM to the magnetic disk.

When external power is restored, the controller copies the data back into

RAM.

Another form of solid-state disk is flash memory, which is popular in cameras

and personal digital assistants (

PDAs),inrobots,andincreasinglyforstorage

on general-purpose computers. Flash memory is slower than

DRAM but needs

no power to retain its contents. Another form of nonvolatile storage is

NVRAM,

which is

DRAM with battery backup power. This memory can be as fast as

DRAM and (as long as the battery lasts) is nonvolatile.

The design of a complete memory system must balance all the factors just

discussed: it must use only as much expensive memory as necessary while

providing as much inexpensive, nonvolatile memory as possible. Caches can


be installed to improve performance where a large disparity in access time or

transfer rate exists between two components.
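
A short calculation shows why a cache helps when access times differ widely. The sketch uses a common weighted-average model of effective access time, which the text does not spell out; the hit ratios and the two access times are assumed values chosen only for illustration, and the function name is hypothetical.

```python
def effective_access_time(hit_ratio, cache_ns, memory_ns):
    """Average access time when a fraction hit_ratio of accesses hit the cache.

    Assumes a miss costs a cache probe plus a main-memory access.
    """
    miss_ratio = 1.0 - hit_ratio
    return hit_ratio * cache_ns + miss_ratio * (cache_ns + memory_ns)

# Assumed figures: 1 ns cache, 100 ns main memory.
for ratio in (0.0, 0.9, 0.99):
    print(ratio, effective_access_time(ratio, 1.0, 100.0))
```

Under these assumptions, the average access time approaches that of the cache as the hit ratio rises, even though most of the data still lives in the slower level.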

1.2.3 I/O Structure

Storage is only one of many types of I/O devices within a computer. A large

portion of operating-system code is dedicated to managing I/O, both because

of its importance to the reliability and performance of a system and because of

the varying nature of the devices. Next, we provide an overview of I/O.

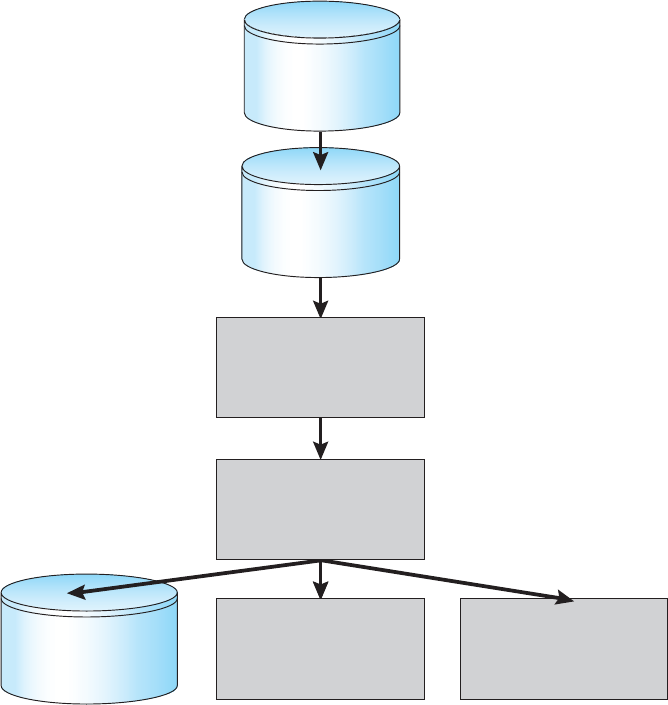

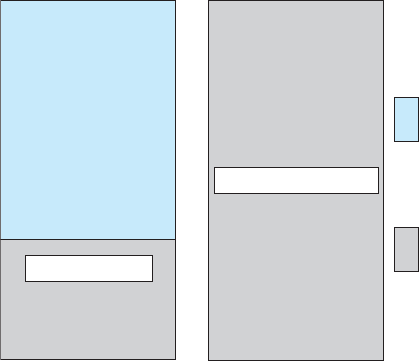

Ageneral-purposecomputersystemconsistsof

CPUsandmultipledevice

controllers that are connected through a common bus. Each device controller

is in charge of a specific type of device. Depending on the controller, more

than one device may be attached. For instance, seven or more devices can be

attached to the small computer-systems interface (SCSI) controller. A device

controller maintains some local buffer storage and a set of special-purpose

registers. The device controller is responsible for moving the data between

the peripheral devices that it controls and its local buffer storage. Typically,

operating systems have a device driver for each device controller. This device

driver understands the device controller and provides the rest of the operating

system with a uniform interface to the device.

To start an I/O operation, the device driver loads the appropriate registers

within the device controller. The device controller, in turn, examines the

contents of these registers to determine what action to take (such as “read

a character from the keyboard”). The controller starts the transfer of data from

the device to its local buffer. Once the transfer of data is complete, the device

controller informs the device driver via an interrupt that it has finished its

operation. The device driver then returns control to the operating system,

possibly returning the data or a pointer to the data if the operation was a read.

For other operations, the device driver returns status information.
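
The division of labor between driver and controller can be modeled roughly as follows. The register names (`command`, `status`), the `READ_CHAR` command, and both classes are assumptions made for this sketch; real controllers define their own register layouts, and a real interrupt arrives asynchronously rather than as a direct function call.

```python
# Toy model of interrupt-driven I/O. Register and command names are invented.

class KeyboardController:
    def __init__(self, pending_keys):
        self.command = None                # register loaded by the driver
        self.status = "idle"               # status register
        self.buffer = []                   # local buffer storage
        self._device = list(pending_keys)  # characters waiting at the device

    def start(self, on_interrupt):
        """Examine the command register and carry out the transfer."""
        if self.command == "READ_CHAR":
            self.status = "busy"
            self.buffer.append(self._device.pop(0))  # device -> local buffer
            self.status = "done"
            on_interrupt()                 # signal that the operation finished

class KeyboardDriver:
    def __init__(self, controller):
        self.controller = controller
        self.result = None

    def read_char(self):
        """Uniform interface offered to the rest of the operating system."""
        self.controller.command = "READ_CHAR"    # load the register
        self.controller.start(self._handle_interrupt)
        return self.result

    def _handle_interrupt(self):
        # Fetch the data from the controller's local buffer.
        self.result = self.controller.buffer.pop(0)

driver = KeyboardDriver(KeyboardController("ok"))
print(driver.read_char(), driver.read_char())
```

The caller of `read_char` never touches a register, which is the point of giving the rest of the operating system a uniform interface to the device.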

This form of interrupt-driven I/O is fine for moving small amounts of data

but can produce high overhead when used for bulk data movement such as disk

I/O. To solve this problem, direct memory access (DMA) is used. After setting

up buffers, pointers, and counters for the I/O device, the device controller

transfers an entire block of data directly between its own buffer storage and

memory, with no intervention by the CPU. Only one interrupt is generated per

block, to tell the device driver that the operation has completed, rather than

the one interrupt per byte generated for low-speed devices. While the device

controller is performing these operations, the CPU is available to accomplish

other work.
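
The saving can be quantified with simple arithmetic. Assuming, for illustration only, a 4,096-byte block and a transfer of 1 MB, the sketch below counts how many interrupts the CPU must field under each scheme. Both function names are hypothetical.

```python
def interrupts_per_byte_io(total_bytes):
    """Interrupt-driven I/O for a low-speed device: one interrupt per byte."""
    return total_bytes

def interrupts_with_dma(total_bytes, block_size):
    """DMA: one interrupt per block (the last block may be partial)."""
    return -(-total_bytes // block_size)    # ceiling division

total = 1024 ** 2          # 1 MB transfer (assumed)
block = 4096               # block size in bytes (assumed)
print(interrupts_per_byte_io(total))
print(interrupts_with_dma(total, block))
```

With these assumed sizes, the interrupt count falls by a factor equal to the block size, which is where the reduced overhead for bulk transfers comes from.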

Some high-end systems use switch rather than bus architecture. On these

systems, multiple components can talk to other components concurrently,

rather than competing for cycles on a shared bus. In this case,

DMA is even

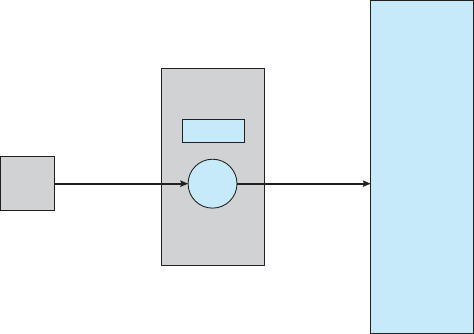

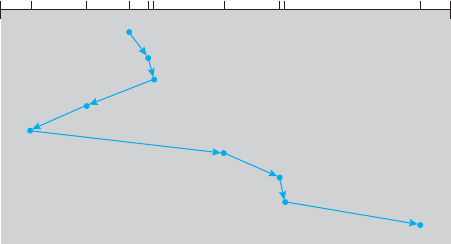

more effective. Figure 1.5 shows the interplay of all components of a computer

system.

1.3 Computer-System Architecture

In Section 1.2, we introduced the general structure of a typical computer system.

A computer system can be organized in a number of different ways, which we

can categorize roughly according to the number of general-purpose processors

used.

Figure 1.5 How a modern computer system works. [Diagram: N CPUs, each with a cache, run threads of execution through the instruction execution cycle, exchanging instructions and data with memory; M devices exchange I/O requests, data, and interrupts with the CPUs and move data to and from memory by DMA.]

1.3.1 Single-Processor Systems

Until recently, most computer systems used a single processor. On a

single-processor system, there is one main CPU capable of executing a general-purpose

instruction set, including instructions from user processes. Almost all

single-processor systems have other special-purpose processors as well. They may

come in the form of device-specific processors, such as disk, keyboard, and

graphics controllers; or, on mainframes, they may come in the form of more

general-purpose processors, such as I/O processors that move data rapidly

among the components of the system.

All of these special-purpose processors run a limited instruction set and

do not run user processes. Sometimes, they are managed by the operating

system, in that the operating system sends them information about their next

task and monitors their status. For example, a disk-controller microprocessor

receives a sequence of requests from the main CPU and implements its own disk

queue and scheduling algorithm. This arrangement relieves the main CPU of

the overhead of disk scheduling. PCs contain a microprocessor in the keyboard

to convert the keystrokes into codes to be sent to the CPU. In other systems

or circumstances, special-purpose processors are low-level components built

into the hardware. The operating system cannot communicate with these

processors; they do their jobs autonomously. The use of special-purpose

microprocessors is common and does not turn a single-processor system into

a multiprocessor. If there is only one general-purpose CPU, then the system is

a single-processor system.

1.3.2 Multiprocessor Systems

Within the past several years, multiprocessor systems (also known as parallel

systems or multicore systems) have begun to dominate the landscape of

computing. Such systems have two or more processors in close communication,

sharing the computer bus and sometimes the clock, memory, and peripheral

devices. Multiprocessor systems first appeared prominently in

servers and have since migrated to desktop and laptop systems. Recently,

multiple processors have appeared on mobile devices such as smartphones

and tablet computers.

Multiprocessor systems have three main advantages:

1. Increased throughput. By increasing the number of processors, we expect to get more work done in less time. The speed-up ratio with N processors is not N, however; rather, it is less than N. When multiple processors

cooperate on a task, a certain amount of overhead is incurred in keeping

all the parts working correctly. This overhead, plus contention for shared

resources, lowers the expected gain from additional processors. Similarly,

N programmers working closely together do not produce N times the

amount of work a single programmer would produce.

2. Economy of scale. Multiprocessor systems can cost less than equivalent

multiple single-processor systems, because they can share peripherals,

mass storage, and power supplies. If several programs operate on the

same set of data, it is cheaper to store those data on one disk and to have

all the processors share them than to have many computers with local

disks and many copies of the data.

3. Increased reliability. If functions can be distributed properly among

several processors, then the failure of one processor will not halt the

system, only slow it down. If we have ten processors and one fails, then

each of the remaining nine processors can pick up a share of the work of

the failed processor. Thus, the entire system runs only 10 percent slower,

rather than failing altogether.
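The throughput and reliability arguments in this list can be made concrete with a small numeric sketch. The overhead model below is an assumption chosen only for illustration: each processor beyond the first is taken to cost every processor a fixed fraction of its useful work, set by the hypothetical parameter `overhead_per_cpu`. The text does not prescribe a formula. The second function computes the fraction of capacity that survives a failure, as in the ten-processor example.

```python
def speedup(n_cpus, overhead_per_cpu=0.05):
    """Speed-up over one CPU under an assumed linear coordination overhead.

    Hypothetical model, not taken from the text: every CPU beyond the
    first reduces the useful work done by each CPU by a fixed fraction.
    """
    useful_fraction = 1.0 / (1.0 + overhead_per_cpu * (n_cpus - 1))
    return n_cpus * useful_fraction


def surviving_capacity(n_cpus, n_failed):
    """Fraction of the original capacity left when n_failed CPUs are lost."""
    if not 0 <= n_failed <= n_cpus:
        raise ValueError("n_failed must be between 0 and n_cpus")
    return (n_cpus - n_failed) / n_cpus


if __name__ == "__main__":
    for n in (1, 2, 4, 8):
        print(f"{n} CPUs -> speed-up {speedup(n):.2f}")
    print(f"10 CPUs, 1 failed -> {surviving_capacity(10, 1):.0%} of capacity")
```

Under this assumed model the speed-up is always below N for N greater than 1, and the gap widens as processors are added.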

Increased reliability of a computer system is crucial in many applications.

The ability to continue providing service proportional to the level of surviving

hardware is called graceful degradation. Some systems go beyond graceful degradation and are called fault tolerant, because they can suffer a failure of any single component and still continue operation. Fault tolerance requires a mechanism to allow the failure to be detected, diagnosed, and, if possible,

corrected. The

HP NonStop (formerly Tandem) system uses both hardware and

software duplication to ensure continued operation despite faults. The system

consists of multiple pairs of

CPUs, working in lockstep. Both processors in the

pair execute each instruction and compare the results. If the results differ, then

one

CPU of the pair is at fault, and both are halted. The process that was being

executed is then moved to another pair of

CPUs, and the instruction that failed


is restarted. This solution is expensive, since it involves special hardware and

considerable hardware duplication.
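The lockstep scheme described above can be sketched in software. This is only a toy model under stated assumptions: a "CPU" is a Python function, the only instruction is a made-up `"inc"`, and all names (`run_on_pair`, `execute`, `healthy_cpu`, `faulty_cpu`) are hypothetical. It is not how the NonStop hardware is implemented. It shows the control flow from the text: both members of a pair execute the instruction, a mismatch halts the pair, and the instruction is restarted on another pair.

```python
class PairMismatch(Exception):
    """Raised when the two CPUs of a lockstep pair disagree."""


def run_on_pair(pair, instruction, operand):
    """Execute one instruction on both CPUs of a pair and compare results."""
    first_cpu, second_cpu = pair
    result_a = first_cpu(instruction, operand)
    result_b = second_cpu(instruction, operand)
    if result_a != result_b:
        # One CPU is at fault, but we cannot tell which one: halt both.
        raise PairMismatch(instruction)
    return result_a


def execute(pairs, instruction, operand):
    """Try each pair in turn, restarting the instruction after a mismatch."""
    for pair in pairs:
        try:
            return run_on_pair(pair, instruction, operand)
        except PairMismatch:
            continue  # this pair is halted; move on to the next pair
    raise RuntimeError("no healthy CPU pair left")


def healthy_cpu(instruction, operand):
    """Toy CPU: the only instruction it knows is incrementing a value."""
    if instruction == "inc":
        return operand + 1
    raise ValueError(f"unknown instruction: {instruction}")


def faulty_cpu(instruction, operand):
    """Toy CPU with a flipped low bit: always returns a wrong result."""
    return healthy_cpu(instruction, operand) ^ 1


if __name__ == "__main__":
    pairs = [(healthy_cpu, faulty_cpu), (healthy_cpu, healthy_cpu)]
    print(execute(pairs, "inc", 41))
```

Comparison alone only detects a disagreement. It cannot identify which CPU of the pair is wrong, which is why both are halted.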

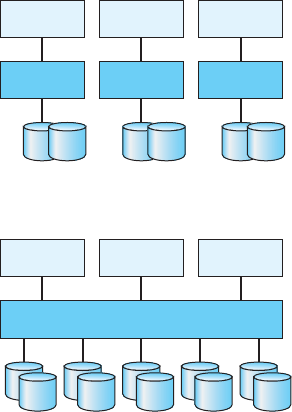

The multiple-processor systems in use today are of two types. Some

systems use asymmetric multiprocessing, in which each processor is assigned

a specific task. A boss processor controls the system; the other processors either

look to the boss for instruction or have predefined tasks. This scheme defines

a boss–worker relationship. The boss processor schedules and allocates work

to the worker processors.

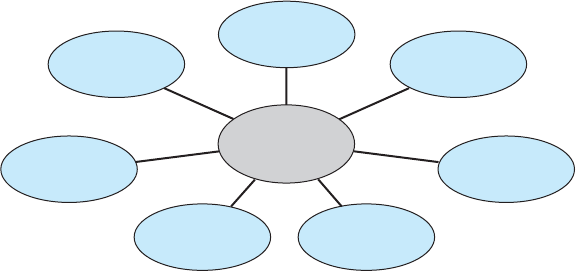

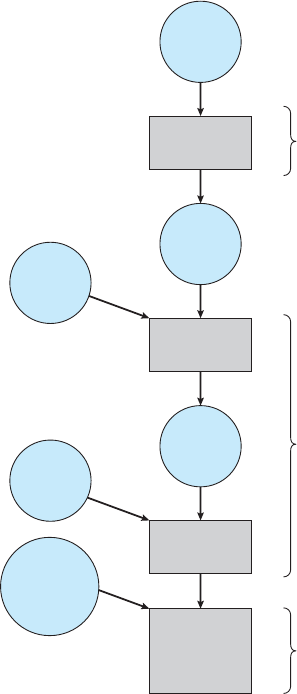

The most common systems use symmetric multiprocessing (SMP), in

which each processor performs all tasks within the operating system.

SMP

means that all processors are peers; no boss–worker relationship exists

between processors. Figure 1.6 illustrates a typical

SMP architecture. Notice

that each processor has its own set of registers, as well as a private—or local

—cache. However, all processors share physical memory. An example of an

SMP system is AIX, a commercial version of UNIX designed by IBM. An AIX

system can be configured to employ dozens of processors. The benefit of this

model is that many processes can run simultaneously—

N processes can run

if there are

N CPUs—without causing performance to deteriorate significantly.

However, we must carefully control

I/O to ensure that the data reach the

appropriate processor. Also, since the

CPUs are separate, one may be sitting

idle while another is overloaded, resulting in inefficiencies. These inefficiencies

can be avoided if the processors share certain data structures. A multiprocessor

system of this form will allow processes and resources—such as memory—

to be shared dynamically among the various processors and can lower the

variance among the processors. Such a system must be written carefully, as

we shall see in Chapter 5. Virtually all modern operating systems, including Windows, Mac OS X, and Linux, now provide support for SMP.
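The load-imbalance point above can be illustrated with a small deterministic simulation. It is a sketch under simplifying assumptions, not a description of any real scheduler: jobs are just run times, the workload is hypothetical, and the locking that a real shared queue needs is ignored. It compares CPUs that run only their own private queues with CPUs that pull from one shared ready queue.

```python
from collections import deque


def finish_time_private(jobs_per_cpu):
    """Each CPU runs only its own queue; the slowest CPU sets the finish time."""
    return max(sum(jobs) for jobs in jobs_per_cpu)


def finish_time_shared(jobs_per_cpu):
    """All jobs go into one shared ready queue; the next idle CPU takes a job."""
    ready = deque(job for jobs in jobs_per_cpu for job in jobs)
    busy_until = [0] * len(jobs_per_cpu)  # time at which each CPU becomes idle
    while ready:
        cpu = busy_until.index(min(busy_until))  # first CPU to become idle
        busy_until[cpu] += ready.popleft()
    return max(busy_until)


if __name__ == "__main__":
    # Hypothetical workload: CPU 0 is overloaded, CPUs 1 and 2 are nearly idle.
    workload = [[4, 4, 4, 4], [1], [1]]
    print("private queues:", finish_time_private(workload))
    print("shared queue:  ", finish_time_shared(workload))
```

With private queues, two CPUs sit idle while the third works through its backlog. With the shared queue, the same jobs are spread across all three.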

The difference between symmetric and asymmetric multiprocessing may

result from either hardware or software. Special hardware can differentiate the

multiple processors, or the software can be written to allow only one boss and

multiple workers. For instance, Sun Microsystems’ operating system

SunOS Version 4 provided asymmetric multiprocessing, whereas Version 5 (Solaris) is

symmetric on the same hardware.

Figure 1.6 Symmetric multiprocessing architecture.

[Diagram: CPU 0, CPU 1, and CPU 2, each with its own registers and cache, all connected to a shared memory.]

Multiprocessing adds CPUs to increase computing power. If the CPU has an integrated memory controller, then adding CPUs can also increase the amount of memory addressable in the system. Either way, multiprocessing can cause a system to change its memory access model from uniform memory access (UMA) to non-uniform memory access (NUMA). UMA is defined as the situation

in which access to any

RAM from any CPU takes the same amount of time. With

NUMA, some parts of memory may take longer to access than other parts,

creating a performance penalty. Operating systems can minimize the

NUMA

penalty through resource management, as discussed in Section 9.5.4.
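A rough way to see why placement matters under NUMA is to compute an average access time from the share of accesses that hit local memory. The sketch below assumes a simple two-level model (local versus remote), and the latencies are invented for illustration only. Real systems have more levels and different numbers.

```python
def mean_access_time(local_ns, remote_ns, local_fraction):
    """Average memory access time when a fraction of accesses are node-local."""
    if not 0.0 <= local_fraction <= 1.0:
        raise ValueError("local_fraction must be in [0, 1]")
    return local_fraction * local_ns + (1.0 - local_fraction) * remote_ns


if __name__ == "__main__":
    LOCAL_NS, REMOTE_NS = 100, 200  # hypothetical latencies, illustration only
    for fraction in (0.5, 0.75, 1.0):
        t = mean_access_time(LOCAL_NS, REMOTE_NS, fraction)
        print(f"{fraction:.0%} local -> {t:.0f} ns on average")
```

In this model, the more of a process's memory the operating system keeps close to the CPU running it, the closer the average gets to the local latency.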

A recent trend in CPU design is to include multiple computing cores on a single chip. Such multiprocessor systems are termed multicore. They

can be more efficient than multiple chips with single cores because on-chip

communication is faster than between-chip communication. In addition, one

chip with multiple cores uses significantly less power than multiple single-core

chips.

It is important to note that while multicore systems are multiprocessor

systems, not all multiprocessor systems are multicore, as we shall see in Section

1.3.3. In our coverage of multiprocessor systems throughout this text, unless

we state otherwise, we generally use the more contemporary term multicore,

which excludes some multiprocessor systems.

In Figure 1.7, we show a dual-core design with two cores on the same

chip. In this design, each core has its own register set as well as its own local

cache. Other designs might use a shared cache or a combination of local and

shared caches. Aside from architectural considerations, such as cache, memory,

and bus contention, these multicore

CPUs appear to the operating system as

N standard processors. This characteristic puts pressure on operating system

designers—and application programmers—to make use of those processing

cores.
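An application can ask the operating system how many processors it presents. The short sketch below uses Python's standard `os.cpu_count()`. The number it reports is of logical processors, which on some hardware may be larger than the number of physical cores, so treat it as an upper bound on useful parallelism rather than an exact core count.

```python
import os

# Logical processors the operating system reports. Python documents that
# os.cpu_count() may return None when the count cannot be determined.
logical_cpus = os.cpu_count()

if logical_cpus is None:
    print("processor count could not be determined")
else:
    print(f"the operating system reports {logical_cpus} processors")
```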

Finally, blade servers are a relatively recent development in which multiple

processor boards,

I/O boards, and networking boards are placed in the same

chassis. The difference between these and traditional multiprocessor systems

is that each blade-processor board boots independently and runs its own

operating system. Some blade-server boards are multiprocessor as well, which

blurs the lines between types of computers. In essence, these servers consist of

multiple independent multiprocessor systems.

Figure 1.7 A dual-core design with two cores placed on the same chip.

[Diagram: CPU core 0 and CPU core 1, each with its own registers and cache, connected to memory.]